Introduction

Large Language Models (LLMs) now power a wide range of Artificial Intelligence (AI) applications, yet they still grapple with substantial challenges. One of the most prominent is keeping the information they generate accurate and reliable.

Enter the Search-Augmented Factuality Evaluator, or SAFE. SAFE uses an LLM together with Google Search to fact-check long-form responses generated by LLMs. It represents a significant stride in the quest for verifiable information and the integrity of AI-generated content.

SAFE is the brainchild of a team of researchers at Google DeepMind. The team developed SAFE with a clear objective: to measure, and ultimately help improve, the factuality of responses generated by LLMs, thereby addressing a critical need in the field of AI.

Google DeepMind’s commitment to advancing AI responsibly is evident in the development of SAFE. The organization has undertaken extensive research and collaboration within the AI field to bring SAFE to fruition. This evaluator stands as a testament to their dedication and their focus on the future of AI.

What is SAFE?

The Search-Augmented Factuality Evaluator, abbreviated as SAFE, is a sophisticated tool that leverages the power of Large Language Models (LLMs) to dissect long-form responses into individual facts. It then embarks on a multi-step reasoning process, which includes querying Google Search, to verify the accuracy of each fact. This innovative approach to fact-checking mirrors the human process, utilizing the vast expanse of online information to confirm the truthfulness of each fact.

Key Features of SAFE

SAFE is characterized by several unique features that set it apart:

- Multi-step Reasoning: One of the standout features of SAFE is its ability to perform multi-step reasoning. It breaks down complex responses into verifiable facts and cross-references these facts with search engine data to assess their accuracy.

- Fact Verification: SAFE verifies each fact against search engine results, ensuring the accuracy of the information.

- F1 Score Extension: SAFE extends the F1 score, a widely used metric in machine learning, as an aggregated measure for long-form factuality. This allows for a more comprehensive evaluation of the factuality of long-form responses.
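The F1 extension can be made concrete with a short sketch. It assumes the F1@K definition from the SAFE paper: precision is the share of supported facts among supported plus not-supported facts, recall is the number of supported facts divided by a chosen target K (capped at 1), and the score is 0 when nothing is supported. The function name `f1_at_k` and the sample numbers are illustrative, not taken from the official repository.

```python
def f1_at_k(supported: int, not_supported: int, k: int) -> float:
    """F1@K for a single response (illustrative helper, not the official code).

    supported     -- number of facts rated as supported
    not_supported -- number of facts rated as not supported
    k             -- number of supported facts a user would ideally want
    """
    if k <= 0:
        raise ValueError("k must be a positive integer")
    if supported == 0:
        # No supported facts: the score is defined as 0.
        return 0.0
    precision = supported / (supported + not_supported)
    recall = min(supported / k, 1.0)  # recall saturates once K facts are supported
    return 2 * precision * recall / (precision + recall)


# Made-up example: 40 supported facts, 10 not supported, K = 64.
score = f1_at_k(supported=40, not_supported=10, k=64)
print(round(score, 3))
```

Irrelevant facts do not enter the formula, so padding a response with off-topic statements neither helps nor hurts the score in this sketch.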

Capabilities of SAFE

SAFE’s capabilities are vast and varied, extending to numerous real-world applications:

- Enhancing AI Information Quality: SAFE can significantly enhance the quality of information disseminated by AI agents. By verifying the accuracy of each fact in a long-form response, it ensures that the information provided by AI agents is reliable and trustworthy.

- Robust Fact-Checking Framework: SAFE provides a robust framework for fact-checking, making it a valuable tool in fields such as journalism and academia where the accuracy of information is paramount.

- Real-World Applications: The potential applications of SAFE are vast, ranging from enhancing the quality of AI-generated content to providing a reliable fact-checking tool for journalists and researchers.

Its unique capabilities make it a valuable asset in the quest for accurate and reliable information.

How does SAFE Work?

The Search-Augmented Factuality Evaluator is designed to assess the factuality of long-form responses. It operates by breaking down a response into individual facts and then evaluating each fact’s relevance and support from search results.

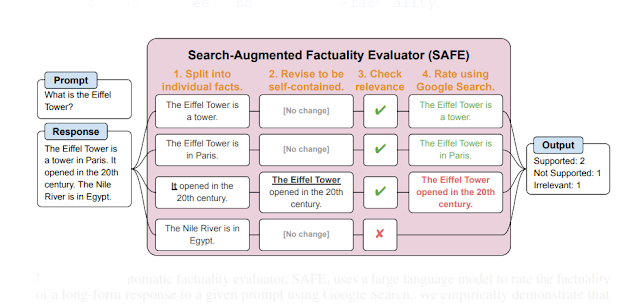

SAFE initiates its process by dissecting a long-form response into individual facts. This is accomplished by prompting a language model to split each sentence into distinct facts. Each fact is then revised to be self-contained, replacing vague references such as pronouns with the entities they refer to, as shown in the figure above. This ensures that each fact can stand alone for evaluation.

The subsequent step involves determining the relevance of each fact in the context of the response. This is crucial as it filters out facts that do not contribute to answering the prompt. The remaining relevant facts are then subjected to a multi-step evaluation process. In each step, the model generates a Google Search query based on the fact under scrutiny and the previously obtained search results. After a set number of steps, the model reasons about whether the fact is supported by the search results, as illustrated in the figure above.

SAFE’s output metrics for a given prompt-response pair include the number of supported facts, irrelevant facts, and not-supported facts. This approach ensures a comprehensive and precise evaluation of long-form responses, making SAFE a significant advancement in the field of automated factuality evaluation.
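The steps described above can be sketched as a small pipeline. This is a minimal sketch under stated assumptions, not the code from the official repository: every callable passed in (`split_into_facts`, `make_self_contained`, `is_relevant`, `next_query`, `search`, `is_supported`) is a hypothetical placeholder for an LLM prompt or a search call, and `max_steps` is an assumed name for the number of search rounds.

```python
from collections import Counter
from typing import Callable, List


def evaluate_response(
    prompt: str,
    response: str,
    split_into_facts: Callable[[str], List[str]],
    make_self_contained: Callable[[str, str], str],
    is_relevant: Callable[[str, str, str], bool],
    next_query: Callable[[str, List[str]], str],
    search: Callable[[str], str],
    is_supported: Callable[[str, List[str]], bool],
    max_steps: int = 3,
) -> Counter:
    """Count supported, irrelevant, and not-supported facts in one response."""
    counts = Counter(supported=0, irrelevant=0, not_supported=0)
    for raw_fact in split_into_facts(response):
        # Rewrite the fact so it can be judged without the rest of the response.
        fact = make_self_contained(raw_fact, response)
        if not is_relevant(prompt, response, fact):
            counts["irrelevant"] += 1
            continue
        results: List[str] = []
        for _ in range(max_steps):
            # Each query may build on the results gathered so far.
            results.append(search(next_query(fact, results)))
        label = "supported" if is_supported(fact, results) else "not_supported"
        counts[label] += 1
    return counts


# Toy stand-ins so the sketch runs offline; a real setup would call an LLM
# and a search API here instead.
KNOWN = {"Paris is the capital of France."}
toy = evaluate_response(
    prompt="Tell me about Paris.",
    response="Paris is the capital of France. Paris has 90 million residents. I like tea.",
    split_into_facts=lambda text: [s.strip() + "." for s in text.split(".") if s.strip()],
    make_self_contained=lambda fact, response: fact,
    is_relevant=lambda prompt, response, fact: "Paris" in fact,
    next_query=lambda fact, results: fact,
    search=lambda query: query if query in KNOWN else "",
    is_supported=lambda fact, results: fact in results,
)
print(dict(toy))
```

Passing the LLM and search steps in as callables keeps the control flow visible and lets the sketch run without network access; the three counts it returns correspond to the output metrics described above.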

Performance Evaluation of SAFE with Other Models

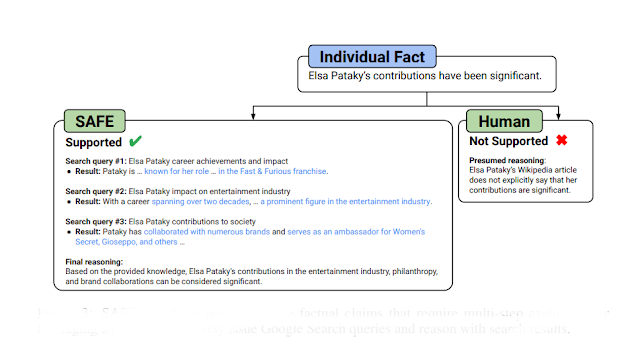

Recent results on the accuracy of SAFE as an LLM-based evaluator are promising. In an analysis involving around 16,000 individual facts, SAFE’s ratings agreed with those of crowdsourced human annotators 72% of the time. Even more impressive, in a sample of cases where SAFE and the human annotators disagreed, SAFE turned out to be correct 76% of the time.
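An agreement figure of this kind is simply the fraction of facts on which two raters give the same label. The sketch below shows that calculation on made-up labels; the function name `agreement_rate` and the four toy labels are illustrative only and do not reproduce the data behind the reported numbers.

```python
from typing import Sequence


def agreement_rate(safe_labels: Sequence[str], human_labels: Sequence[str]) -> float:
    """Fraction of facts on which SAFE and a human annotator give the same label."""
    if len(safe_labels) != len(human_labels):
        raise ValueError("label lists must have the same length")
    if not safe_labels:
        raise ValueError("need at least one labeled fact")
    matches = sum(s == h for s, h in zip(safe_labels, human_labels))
    return matches / len(safe_labels)


# Toy labels for four facts; three of the four pairs match.
safe = ["supported", "supported", "not_supported", "irrelevant"]
human = ["supported", "not_supported", "not_supported", "irrelevant"]
rate = agreement_rate(safe, human)
print(rate)
```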

Cost-effectiveness is another strong suit of SAFE. It is more than 20 times cheaper than human annotation. This efficiency makes SAFE an attractive option for any organization that prioritizes both accuracy and economy in handling extensive factual data.

As shown in the figure above, SAFE was also used to benchmark thirteen language models spanning four distinct families: Gemini, GPT, Claude, and PaLM-2. The findings revealed a clear trend: larger language models generally achieve better long-form factuality.

To sum up, SAFE stands out among automated evaluators for its ability to assess long-form factuality with a high degree of accuracy and at a lower cost. This combination of precision and affordability positions SAFE as a valuable asset for any entity that manages a significant volume of factual information.

Evolving Fact-Checking in AI and SAFE’s Pivotal Role

The evolution of fact-checking in artificial intelligence has been marked by a variety of approaches, each tailored to specific aspects of knowledge verification. Benchmarks like FELM, FreshQA, and HaluEval have focused on assessing a model’s grasp of facts, while adversarial benchmarks such as TruthfulQA and HalluQA challenge models to avoid replicating common falsehoods.

In the realm of long-form content, innovative methods have emerged. For example, some researchers have prompted language models to write detailed biographies, which are then compared to Wikipedia for accuracy. Similarly, FActScore uses Wikipedia as the knowledge source for evaluating long biographical narratives.

RAGAS takes a different approach in reference-free scenarios, checking the self-sufficiency of responses from retrieval-augmented systems by examining the internal consistency of questions, context, and answers.

Amidst this diverse landscape, SAFE distinguishes itself. It operates autonomously, without the need for fine-tuning or predefined answers, and in the authors’ experiments it proved more reliable than crowdsourced human annotators at a fraction of the cost. SAFE’s ability to support evaluation of both the precision and recall of long-form responses without relying on predetermined correct answers positions it as a comprehensive tool for assessing the factuality of language models.

How to Access and Use SAFE?

SAFE is available through Google DeepMind’s GitHub repository. You can clone the repository and follow the instructions provided in the README file to install and use SAFE. The code is open-source; check the license in the repository for the exact terms of commercial and academic use.

If you are interested in learning more about SAFE, all relevant links are provided under the 'Source' section at the end of this article.

Limitations

Despite its advancements, SAFE is not without limitations.

- Dependence on LLMs: The performance of SAFE and of LongFact, the set of long-form prompts released alongside it in the same paper, is contingent on the capabilities of the underlying LLMs, particularly in instruction following, reasoning, and creativity.

- Quality of LongFact Prompts: Ineffective instruction following by an LLM can lead to off-topic or inadequate LongFact prompts.

- Decomposition and Verification Challenges: Weak LLMs may incorrectly decompose complex responses or fail to propose relevant search queries for fact verification.

- Reliance on Google Search: SAFE’s dependency on Google Search for ground truths can be problematic in niche or expert-level domains where information is scarce or lacks depth.

- Labeling Limitations: SAFE labels facts as 'supported' or 'not supported' by Google Search results rather than as universally true or false, so a label may not always reflect global factuality.

Conclusion

SAFE represents a significant stride forward in the quest for factual integrity within AI-generated content. As AI continues to integrate into various aspects of our lives, tools like SAFE help ensure that the information we rely on remains accurate and trustworthy.

Source

Research paper: https://arxiv.org/abs/2403.18802

Research document: https://arxiv.org/pdf/2403.18802.pdf

SAFE GitHub Repo: https://github.com/google-deepmind/long-form-factuality/tree/main/eval/safe

GitHub Repo: https://github.com/google-deepmind/long-form-factuality
