In the rapidly evolving landscape of artificial intelligence, Large Language Models (LLMs) are making significant strides. These models, capable of generating text that closely mirrors human language, have become indispensable tools in a myriad of applications. Yet the world of LLMs is not without its challenges, including accessibility, efficiency, and ethical AI use. DBRX targets the first two as an open-source model that, according to Databricks, set a new state of the art among open LLMs at its release.

DBRX is the brainchild of Databricks, developed by their Mosaic Research Team. Known for their comprehensive data analytics platform, Databricks has made a foray into the realm of AI with the goal of making data intelligence accessible to all enterprises. The creation of DBRX aligns perfectly with this vision, providing top-tier AI capabilities to businesses everywhere. The driving force behind the development of DBRX was to establish a new benchmark in the field of open LLMs.

What is DBRX?

DBRX is a cutting-edge, open-source Large Language Model (LLM). It is a transformer-based, decoder-only, general-purpose LLM. DBRX is a capable code model that outperforms specialized models such as CodeLLaMA-70B on programming benchmarks, while also serving as a strong general-purpose language model.

Key Features of DBRX

DBRX boasts several unique features that set it apart from other models:

- Efficient Mixture-of-Experts (MoE) Architecture: DBRX utilizes a fine-grained MoE architecture, which allows it to perform inference up to 2x faster than previous models like LLaMA2-70B. This architecture contributes to the model’s efficiency without compromising its performance.

- Large Parameter Count: DBRX has a total of 132 billion parameters, a significant number in the world of LLMs. However, only 36 billion of these parameters are active for any given input, which keeps the compute cost per token far below that of a dense model of the same total size.

- Transformer-based Decoder-Only Model: As a transformer-based decoder-only model, DBRX was trained with a next-token prediction objective: given the preceding tokens, it learns to predict the next one, which is what enables it to generate fluent, human-like text.

- Extensive Training Data: DBRX has been trained on a diverse dataset of 12 trillion tokens of text and code data, allowing it to handle a wide range of tasks effectively.
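To make "fine-grained" and "36 of 132 billion active" concrete, the short calculation below compares how many expert subsets a router can choose from. It uses the configuration Databricks reports in its announcement (16 experts with 4 active per token for DBRX, versus 8 experts with 2 active for Mixtral and Grok-1); the helper name `expert_combinations` is just for illustration.

```python
from math import comb

# Expert configurations as reported by Databricks in the DBRX announcement.
DBRX_EXPERTS, DBRX_ACTIVE_EXPERTS = 16, 4
COARSE_EXPERTS, COARSE_ACTIVE_EXPERTS = 8, 2  # Mixtral and Grok-1
DBRX_TOTAL_PARAMS, DBRX_ACTIVE_PARAMS = 132e9, 36e9


def expert_combinations(n_experts, k_active):
    """Number of distinct expert subsets the router can pick for one token."""
    return comb(n_experts, k_active)


fine = expert_combinations(DBRX_EXPERTS, DBRX_ACTIVE_EXPERTS)        # 1820
coarse = expert_combinations(COARSE_EXPERTS, COARSE_ACTIVE_EXPERTS)  # 28

print(f"16-choose-4 expert combinations: {fine}")
print(f"8-choose-2 expert combinations:  {coarse}")
print(f"ratio: {fine // coarse}x")
print(f"share of parameters active per token: {DBRX_ACTIVE_PARAMS / DBRX_TOTAL_PARAMS:.0%}")
```

The 65x ratio is where the "65 times more possible combinations of experts" figure comes from, and roughly 27% of DBRX's weights participate in any single token's forward pass.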

Capabilities and Use Cases of DBRX

DBRX offers unique capabilities and benefits that make it a valuable tool in various applications:

- Enhanced Data Analysis: DBRX can help analyze large volumes of text, surfacing patterns and trends that might otherwise go unnoticed. This makes it a valuable aid for data-driven decision making.

- Supercharged Content Creation: With its ability to generate human-like text, DBRX can be used to create content, offering a solution for overcoming writer’s block and enhancing creativity.

- Streamlined Development Processes: Developers can use DBRX for code completion, suggesting implementations or even generating simple functions. This can streamline the development process and increase productivity.

- Industry Applications: The potential use cases for DBRX span many industries. In financial services, the model can be fine-tuned for tasks such as risk assessment, fraud detection, and customer service chatbots. Healthcare organizations can use DBRX for medical record analysis, drug discovery, and patient engagement. These potential applications illustrate the versatility of DBRX.

How Does DBRX Work? Architecture and Design

DBRX uses a fine-grained mixture-of-experts (MoE) architecture. Rather than sending every token through one large feed-forward block, it routes each token to a small subset of specialized subnetworks. This keeps output quality high while limiting the computation spent on each token.

MoE models are a class of transformer models that depart from traditional dense models by taking a sparse approach: only a select group of the model’s components, referred to as ‘experts’, is activated for each input. This improves pretraining efficiency and speeds up inference, even as the model scales up. Within the MoE framework, each expert is a neural network, typically a feed-forward network (FFN), and a gate network, or router, decides which experts process each token. This specialization allows the model to handle a broader range of tasks well.
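The routing described above can be sketched as a toy NumPy layer. This is a minimal illustration under simplifying assumptions, not DBRX’s implementation: the class name `ToyMoELayer`, the tiny dimensions, plain ReLU experts (Databricks reports that DBRX uses gated linear units), and renormalizing the router softmax over only the selected experts are all choices made for this example. The defaults of 16 experts with 4 active per token mirror the configuration Databricks reports for DBRX.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


class ToyMoELayer:
    """Toy sparse MoE feed-forward layer with top-k routing (illustrative only)."""

    def __init__(self, d_model=8, d_hidden=16, n_experts=16, top_k=4, seed=0):
        rng = np.random.default_rng(seed)
        self.top_k = top_k
        # Router: one score per expert for each token.
        self.w_router = rng.normal(0, 0.1, (d_model, n_experts))
        # Each expert is a small two-layer FFN with its own weights.
        self.w_in = rng.normal(0, 0.1, (n_experts, d_model, d_hidden))
        self.w_out = rng.normal(0, 0.1, (n_experts, d_hidden, d_model))

    def __call__(self, x):
        # x: (n_tokens, d_model)
        logits = x @ self.w_router                                  # (n_tokens, n_experts)
        top_idx = np.argsort(logits, axis=-1)[:, -self.top_k:]      # top-k experts per token
        top_logits = np.take_along_axis(logits, top_idx, axis=-1)
        gates = softmax(top_logits, axis=-1)                        # weights over chosen experts
        out = np.zeros_like(x)
        for t in range(x.shape[0]):
            for j in range(self.top_k):
                e = top_idx[t, j]
                h = np.maximum(x[t] @ self.w_in[e], 0.0)            # expert FFN (ReLU)
                out[t] += gates[t, j] * (h @ self.w_out[e])         # gate-weighted sum
        return out, top_idx


x = np.random.default_rng(1).normal(size=(5, 8))  # 5 tokens, d_model = 8
y, experts = ToyMoELayer()(x)
print(y.shape)     # (5, 8)
print(experts[0])  # indices of the 4 experts chosen for token 0
```

Each token touches only 4 of the 16 experts’ weight matrices, which is where the compute savings come from. A production implementation would group tokens by expert and run each expert once per batch instead of looping token by token.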

At the heart of DBRX is a transformer-based, decoder-only model. Such models, also known as autoregressive models, use only the decoder component of the Transformer. They generate text one token at a time, and at each step their attention layers can access only the preceding tokens. This design is well suited to generating new text, although such models can also analyze and answer questions about text supplied in the prompt.
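The "preceding tokens only" constraint is usually implemented with a causal mask on the attention scores. Below is a minimal single-head NumPy sketch; the function name is illustrative, and it omits the learned query/key/value projections, multiple heads, and position encodings that a real model such as DBRX uses.

```python
import numpy as np


def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v: arrays of shape (n_tokens, d). Position i may attend only to positions <= i.
    """
    n, d = q.shape
    scores = q @ k.T / np.sqrt(d)                       # (n, n) similarity scores
    future = np.triu(np.ones((n, n), dtype=bool), k=1)  # True above the diagonal = future tokens
    scores = np.where(future, -np.inf, scores)          # block attention to the future
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)                            # exp(-inf) = 0 for masked positions
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


x = np.random.default_rng(0).normal(size=(4, 8))  # 4 tokens, 8-dim embeddings
out, w = causal_self_attention(x, x, x)
print(np.round(w, 2))  # upper triangle is all zeros
```

Because future positions receive zero weight, changing a later token never alters the outputs at earlier positions, which is what lets the model generate text strictly left to right.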

Beyond its architecture, DBRX’s pretraining followed a curriculum that varied the data mix over the course of training, a strategy Databricks reports substantially improved model quality. At inference time, each token is processed by only the 36 billion active parameters. The model has also been ported to run with MLX, Apple’s machine-learning framework, which highlights its flexibility across platforms.
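Databricks has not published the exact curriculum, so the following is only a hypothetical illustration of what "varying the data mix during training" can mean: sampling weights over data sources are interpolated linearly from a starting mix to an ending mix as training progresses. The source names, the weights, and the linear schedule are all invented for this example.

```python
import random

# HYPOTHETICAL data sources and mixture weights, invented for illustration;
# DBRX's actual pretraining mix and schedule are not public.
START = {"web_text": 0.7, "code": 0.2, "math": 0.1}
END = {"web_text": 0.5, "code": 0.3, "math": 0.2}


def mix_at(step, total_steps, start_mix, end_mix):
    """Return sampling weights at `step`, linearly interpolated from start_mix to end_mix."""
    frac = min(max(step / total_steps, 0.0), 1.0)  # training progress in [0, 1]
    sources = set(start_mix) | set(end_mix)
    mix = {s: (1 - frac) * start_mix.get(s, 0.0) + frac * end_mix.get(s, 0.0) for s in sources}
    total = sum(mix.values())
    return {s: w / total for s, w in mix.items()}  # renormalize so weights sum to 1


def sample_source(step, total_steps, rng):
    """Pick the data source for the next training example under the current mix."""
    mix = mix_at(step, total_steps, START, END)
    sources = sorted(mix)
    return rng.choices(sources, weights=[mix[s] for s in sources], k=1)[0]


rng = random.Random(0)
for step in (0, 500, 1000):
    print(step, {s: round(w, 2) for s, w in sorted(mix_at(step, 1000, START, END).items())})
```

A real curriculum could change the mix in discrete stages or by any other schedule; linear interpolation is simply the easiest version to read.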

Performance Compared with Other Models

DBRX’s performance is noteworthy: on the benchmarks Databricks reports, it outperforms established open models and matches or beats several proprietary ones.

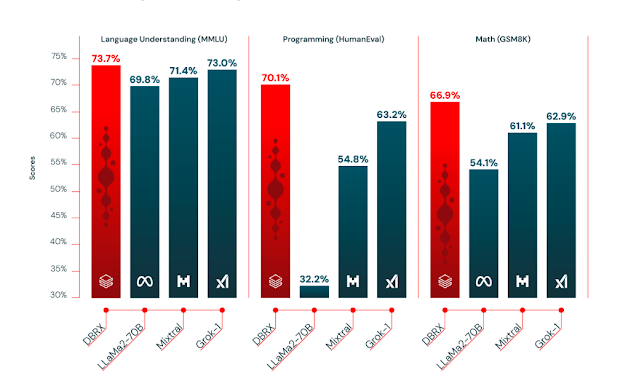

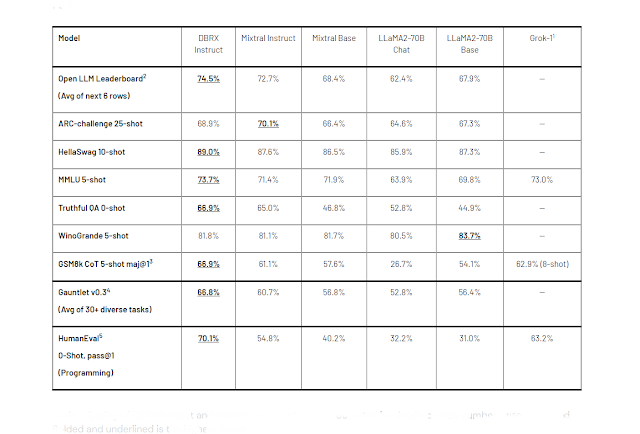

Open Model Comparisons

As the table above shows, DBRX Instruct leads the open models compared on composite benchmarks, programming and mathematics benchmarks, and MMLU, outperforming the chat- and instruction-finetuned open models in the comparison. On the composite benchmarks, the Hugging Face Open LLM Leaderboard and the Databricks Model Gauntlet, DBRX Instruct achieves the highest scores. In programming and mathematics (HumanEval and GSM8k), it surpasses the other open models, including CodeLLaMA-70B Instruct, which is specialized for programming. On MMLU, it also scores higher than every other open model in the comparison.

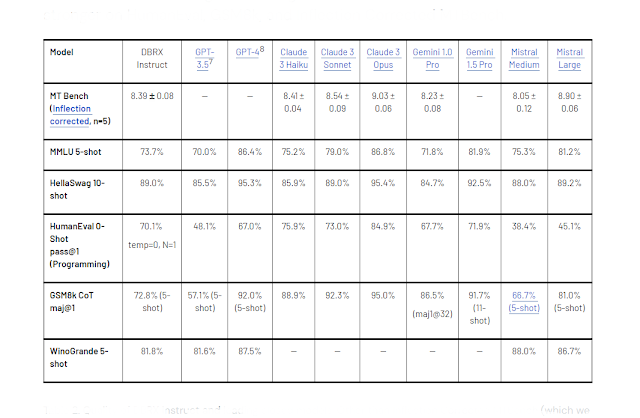

Closed Model Comparisons

The table above compares DBRX Instruct with closed models. DBRX Instruct surpasses GPT-3.5 on general knowledge (MMLU), commonsense reasoning (HellaSwag and WinoGrande), programming (HumanEval), and mathematical reasoning (GSM8k). It is also competitive with Gemini 1.0 Pro and Mistral Medium, scoring higher than Gemini 1.0 Pro on Inflection Corrected MTBench, MMLU, HellaSwag, and HumanEval.

On long-context benchmarks, DBRX Instruct performs better than GPT-3.5 Turbo at all context lengths and in all parts of the sequence. On retrieval-augmented generation (RAG) tasks, it is competitive with open models such as Mixtral Instruct and LLaMA2-70B Chat, as well as with the version of GPT-3.5 Turbo current at the time.

In terms of inference efficiency, DBRX’s throughput is 2-3 times higher than that of a 132B non-MoE model. Compared with LLaMA2-70B, DBRX delivers higher quality with about half the active parameters (36B vs. 70B), and its inference is up to nearly twice as fast.
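A back-of-envelope calculation shows why fewer active parameters translate into faster inference. It relies on the common rule of thumb that a transformer forward pass costs roughly 2 FLOPs per active parameter per token; that rule is an approximation, and the "132B dense" entry is a hypothetical model used only for comparison.

```python
def forward_flops_per_token(active_params):
    """Rule of thumb: a forward pass costs roughly 2 FLOPs per active parameter per token."""
    return 2 * active_params


ACTIVE_PARAMS = {
    "DBRX (MoE)": 36e9,
    "LLaMA2-70B (dense)": 70e9,
    "132B dense (hypothetical)": 132e9,
}

dbrx_cost = forward_flops_per_token(ACTIVE_PARAMS["DBRX (MoE)"])
for name, params in ACTIVE_PARAMS.items():
    cost = forward_flops_per_token(params)
    print(f"{name:27s} {cost / 1e9:5.0f} GFLOPs/token  ({cost / dbrx_cost:.2f}x DBRX)")
```

The idealized compute ratios (about 3.7x versus a 132B dense model, about 1.9x versus LLaMA2-70B) are upper bounds on the speedup. Real throughput also depends on memory bandwidth, batch size, and routing overhead, which is consistent with the smaller 2-3x and up-to-2x figures reported above.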

A Comparative Analysis with Leading LLMs

When we compare DBRX with models such as LLaMA2-70B, Mixtral Instruct, and Grok-1, it becomes evident that DBRX has several distinguishing features:

DBRX is built on a fine-grained MoE architecture, which lets it run inference up to twice as fast as LLaMA2-70B. Mixtral and Grok-1 also use MoE architectures, but they are coarser-grained: each has 8 experts and activates 2 per token, whereas DBRX has 16 experts and activates 4, which gives 65 times more possible combinations of experts.

DBRX has 132 billion parameters in total, marking its significance in the LLM arena, but it activates only 36 billion of them for each input, which keeps it manageable and efficient. Selective activation is not unique to DBRX; it is the defining trait of MoE models, including Mixtral, which has roughly 47 billion total parameters with about 13 billion active per token. DBRX applies the same idea at a larger scale.

DBRX was pretrained on 12 trillion tokens of text and code, which gives it the versatility to perform a wide range of tasks. For comparison, LLaMA2 was pretrained on 2 trillion tokens, while the size of Mixtral’s training set has not been disclosed.

Taken together, the features and capabilities of DBRX make it a formidable tool for a multitude of applications across various industries. Its efficient MoE architecture, substantial parameter count, and broad training data distinguish it from other models, making it a valuable resource for decision-making, content creation, software development, and industry applications.

How to Access and Use DBRX

The model is available on multiple platforms, ensuring its wide accessibility and ease of use.

The weights of the base model, known as DBRX Base, and the finetuned model, referred to as DBRX Instruct, are hosted on Hugging Face. This platform is renowned for its extensive collection of pre-trained models, making it a go-to resource for machine learning enthusiasts and professionals alike.

In addition to Hugging Face, the DBRX model repository is also available on GitHub. This platform allows users to delve into the model’s code, offering a deeper understanding of its workings and facilitating potential customizations.

For Databricks customers, DBRX Base and DBRX Instruct are available through the Databricks Foundation Model APIs. Beyond convenient access, this lets users build custom models on their private data while keeping that data under their own governance and security controls.
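For Databricks customers, calling DBRX Instruct through the Foundation Model APIs amounts to an authenticated HTTP POST to a model-serving endpoint. The sketch below only builds that request with Python’s standard library. The workspace URL and token are placeholders, and the endpoint name `databricks-dbrx-instruct`, the `/serving-endpoints/.../invocations` path, and the chat-style payload are assumptions based on Databricks’ serving conventions; check your workspace documentation before relying on them.

```python
import json
import urllib.request


def build_dbrx_chat_request(workspace_url, token, prompt,
                            endpoint="databricks-dbrx-instruct", max_tokens=256):
    """Build (but do not send) a chat request for a Databricks model-serving endpoint.

    ASSUMPTION: the endpoint name, URL path, and chat payload shape follow
    Databricks' Foundation Model APIs conventions; verify them for your workspace.
    """
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint}/invocations"
    body = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder workspace URL and token; replace with your own values.
req = build_dbrx_chat_request("https://<your-workspace>.cloud.databricks.com",
                              "<your-token>", "Explain mixture-of-experts in one paragraph.")
print(req.full_url)
```

Sending the request is then a matter of `urllib.request.urlopen(req)`; a production client would add timeouts, retries, and error handling, or use an official SDK instead.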

Limitations and Future Work

While DBRX has made significant strides in the field of machine learning, it is important to acknowledge its limitations and the potential for future enhancements.

- DBRX can produce inaccurate responses: like other LLMs, it generates plausible text based on patterns in its training data rather than on verified facts.

- Depending on the use case, fine-tuning of DBRX might be required for optimal results.

- Access to the model weights on Hugging Face is gated and granted upon request, which may limit widespread adoption.

Future work includes enhancing the model’s performance, scalability, and usability across various applications and use cases.

Conclusion

DBRX is a significant advancement in the field of Large Language Models. Its unique features and capabilities make it a powerful tool for a wide range of applications across various industries. However, like any model, it has its limitations and areas for future work. As an open-source model, DBRX provides a platform for continuous improvement and innovation in the field of AI.

Source

Blog Website: https://www.databricks.com/blog/introducing-dbrx-new-state-art-open-llm

Weights: https://huggingface.co/databricks/dbrx-base

GitHub Repo: https://github.com/databricks/dbrx
