Introduction

Language models are powerful tools that can generate natural language texts based on some input, such as a prompt, a keyword, or a context. They have many applications in natural language processing, such as text summarization, machine translation, question answering, and conversational agents. However, most of the state-of-the-art language models are very large and complex, requiring huge amounts of data and computational resources to train and run. This poses challenges for researchers and developers who want to experiment with language models or deploy them in resource-constrained environments.

To address this problem, a team of researchers from the StatNLP Research Group at the Singapore University of Technology and Design developed TinyLlama, an open-source small language model that aims to generate diverse and fluent text with modest compute and memory requirements. The goal was to create a compact yet capable language model that could be used in various applications, especially those with limited computational resources.

What is TinyLlama?

TinyLlama is a compact 1.1B-parameter language model pretrained on around 1 trillion tokens for approximately 3 epochs. It is built on the architecture and tokenizer of Llama 2, and leverages various advances contributed by the open-source community.

Key Features of TinyLlama

Some of the key features of TinyLlama are:

- Small and Fast: TinyLlama is a compact model with 1.1 billion parameters. It’s designed to be efficient, making it suitable for various devices and platforms.

- Diverse and Fluent: TinyLlama can generate diverse and fluent texts across different domains and genres.

- Remarkable Performance: Despite its small size, TinyLlama demonstrates remarkable performance in a series of downstream tasks. It outperforms existing open-source language models of comparable sizes.

- Open-Source and Accessible: TinyLlama is open-source and available on GitHub. It’s also accessible online in the form of a chat demo. TinyLlama is licensed under the Apache License 2.0, which allows both commercial and non-commercial use of the model.

These features make TinyLlama a unique and powerful tool in the field of language models. Its compactness, speed, diversity, performance, and accessibility set it apart from other models and make it a valuable resource for researchers, developers, and users alike.

Capabilities/Use Cases of TinyLlama

TinyLlama has many potential capabilities and use cases, such as:

- Deployment on Edge Devices: TinyLlama’s compactness and efficiency make it a good candidate for deployment on edge devices, where inference runs locally instead of on a remote server. This is beneficial for data privacy and real-time applications.

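- Assisting Speculative Decoding of Larger Models: TinyLlama can act as a small draft model in speculative decoding. It cheaply proposes several tokens ahead, and the larger model verifies those proposals in a single parallel pass, which speeds up generation without changing what the larger model would have produced.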

- Content Generation: TinyLlama excels in content generation across different domains and genres. It can adapt to different styles and tones based on the input, making it a versatile tool for various content generation tasks.

These capabilities and use cases highlight the versatility and power of TinyLlama. Despite its small size, it can perform a wide range of tasks efficiently and accurately, making it a valuable tool in the field of natural language processing.
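The speculative decoding use case listed above can be illustrated with a toy sketch. The two "models" here are hypothetical stand-in functions over integer tokens, not TinyLlama or any real model, and the greedy accept/reject rule is a simplified variant chosen for clarity. The point is only that the draft proposes tokens and the target keeps the ones it agrees with, so the output matches plain greedy decoding with the target.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]


def target_next(tokens: List[int]) -> int:
    """Hypothetical 'large' model: a deterministic toy next-token rule."""
    return (sum(tokens) * 31 + len(tokens)) % 50


def draft_next(tokens: List[int]) -> int:
    """Hypothetical 'small' model: agrees with the target most of the time."""
    guess = target_next(tokens)
    return guess if len(tokens) % 4 else (guess + 1) % 50


def greedy_decode(model: NextToken, prompt: List[int], n_new: int) -> List[int]:
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(model(tokens))
    return tokens


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], n_new: int, k: int = 4) -> List[int]:
    tokens = list(prompt)
    goal = len(prompt) + n_new
    while len(tokens) < goal:
        # 1. The draft model proposes k tokens one after another (cheap).
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))
        # 2. The target checks every proposed position. A real system would
        #    do this in one batched forward pass; here it is a plain loop.
        for i in range(k):
            expected = target(tokens + proposal[:i])
            if expected != proposal[i]:
                # Keep the agreed prefix, then use the target's own token.
                tokens.extend(proposal[:i])
                tokens.append(expected)
                break
        else:
            # All k proposals accepted; the target adds one extra token.
            tokens.extend(proposal)
            tokens.append(target(tokens))
    return tokens[:goal]


prompt = [1, 2, 3]
fast = speculative_decode(target_next, draft_next, prompt, n_new=20)
slow = greedy_decode(target_next, prompt, n_new=20)
print(fast == slow)
```

The more often the draft agrees with the target, the more tokens are accepted per verification pass, which is where the speed-up comes from in practice.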

Architecture of TinyLlama

TinyLlama is a small 1.1B-parameter language model that adopts Llama 2's proven architectural design and tokenizer. This gives it a solid foundation, including a SentencePiece byte pair encoding (BPE) tokenizer that keeps the vocabulary compact. TinyLlama is not merely a scaled-down copy, however; its architecture is deliberately configured with 22 transformer layers, a hidden dimension of 2048, and 32 attention heads. This design is meant to produce a strong model with a much smaller computational footprint.
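As a rough sanity check, the configuration above can be turned into a parameter count. The layer count, hidden size, head count, and the 4 key/value heads come from this article; the MLP intermediate size (5632), the vocabulary size (32,000), untied input and output embeddings, and the absence of bias terms are assumptions made for this sketch and should be checked against the released configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    n_layers: int = 22         # from the article
    hidden: int = 2048         # from the article
    n_heads: int = 32          # from the article
    n_kv_heads: int = 4        # from the article (grouped-query attention)
    intermediate: int = 5632   # assumption: SwiGLU MLP width
    vocab: int = 32_000        # assumption: Llama 2 tokenizer vocabulary size


def count_parameters(cfg: Config) -> int:
    head_dim = cfg.hidden // cfg.n_heads
    kv_dim = cfg.n_kv_heads * head_dim
    # Query and output projections are hidden x hidden;
    # key and value projections are hidden x kv_dim.
    attention = 2 * cfg.hidden * cfg.hidden + 2 * cfg.hidden * kv_dim
    # SwiGLU uses three matrices: gate, up, and down.
    mlp = 3 * cfg.hidden * cfg.intermediate
    # Two RMSNorm weight vectors per layer.
    norms = 2 * cfg.hidden
    per_layer = attention + mlp + norms
    # Assumption: input embedding and output head are separate matrices.
    embeddings = 2 * cfg.vocab * cfg.hidden
    final_norm = cfg.hidden
    return cfg.n_layers * per_layer + embeddings + final_norm


total = count_parameters(Config())
print(f"{total:,} parameters (~{total / 1e9:.2f}B)")
# 1,100,048,384 parameters (~1.10B)
```

Under these assumptions the estimate lands at about 1.1 billion, which is consistent with the model's advertised size.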

One of the foundations of TinyLlama's training efficiency is FlashAttention-2. FlashAttention-2 is not an approximation: it is an I/O-aware algorithm that computes exact softmax attention. By tiling the computation so that blocks stay in fast on-chip memory, it reduces reads and writes to the comparatively slow GPU high-bandwidth memory (HBM), which lowers the memory footprint and speeds up the attention mechanism. This optimization was important for pre-training on roughly three trillion tokens, allowing long sequences and large batch sizes to be handled efficiently.
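The claim that tiling still yields exact attention can be checked with a small NumPy sketch. This is not the FlashAttention-2 kernel, which is a fused GPU implementation; it only illustrates the underlying running-softmax idea, processing keys and values one block at a time and rescaling the partial result as the running maximum changes. The function names and block size are illustrative choices, and no causal mask is applied.

```python
import numpy as np


def naive_attention(q, k, v):
    """Reference: materializes the full (n_queries x n_keys) score matrix."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v


def tiled_attention(q, k, v, block=16):
    """Same result, but only one block of keys/values is used at a time."""
    n, d = q.shape
    out = np.zeros((n, v.shape[-1]))
    running_max = np.full((n, 1), -np.inf)
    running_sum = np.zeros((n, 1))
    for start in range(0, k.shape[0], block):
        k_blk = k[start:start + block]
        v_blk = v[start:start + block]
        scores = q @ k_blk.T / np.sqrt(d)
        new_max = np.maximum(running_max, scores.max(axis=-1, keepdims=True))
        # Rescale what was accumulated so far to the new maximum.
        # On the first block this factor is exp(-inf) = 0.
        scale = np.exp(running_max - new_max)
        p = np.exp(scores - new_max)
        running_sum = running_sum * scale + p.sum(axis=-1, keepdims=True)
        out = out * scale + p @ v_blk
        running_max = new_max
    return out / running_sum


rng = np.random.default_rng(0)
q = rng.standard_normal((8, 32))
k = rng.standard_normal((50, 32))
v = rng.standard_normal((50, 32))
print(np.allclose(naive_attention(q, k, v), tiled_attention(q, k, v)))
```

Because the tiled version never holds the full score matrix, its working memory grows with the block size rather than with the sequence length, which is the property the real kernel exploits.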

To enhance efficiency further, especially during inference, TinyLlama's architecture also employs Grouped-Query Attention (GQA). In standard multi-head attention, every query head has its own key head and value head. GQA instead lets several query heads share one key/value head. In TinyLlama, the 32 query heads are divided into 4 groups, and each group of 8 query heads shares a single key/value head. This shrinks the key-value cache and the memory bandwidth needed to read it at each decoding step, resulting in faster token generation and reduced memory consumption during inference.
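A minimal NumPy sketch of the grouping described above, using 32 query heads, 4 key/value heads, and a head dimension of 64 (2048 / 32). The function name and tensor layout are illustrative choices, and the causal mask is omitted for brevity.

```python
import numpy as np


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def grouped_query_attention(q, k, v):
    """q: (n_q_heads, seq, head_dim); k, v: (n_kv_heads, seq, head_dim)."""
    n_q_heads, _, head_dim = q.shape
    n_kv_heads = k.shape[0]
    group = n_q_heads // n_kv_heads
    # Each key/value head is reused by `group` consecutive query heads.
    k = np.repeat(k, group, axis=0)
    v = np.repeat(v, group, axis=0)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    return softmax(scores) @ v


rng = np.random.default_rng(0)
seq, head_dim = 5, 64
q = rng.standard_normal((32, seq, head_dim))
k = rng.standard_normal((4, seq, head_dim))
v = rng.standard_normal((4, seq, head_dim))

out = grouped_query_attention(q, k, v)
print(out.shape)  # (32, 5, 64)

# Cached values per token per layer: keys plus values for every KV head.
mha_cache = 2 * 32 * head_dim  # if every query head had its own K/V head
gqa_cache = 2 * 4 * head_dim   # with 4 shared K/V heads
print(mha_cache // gqa_cache)  # 8
```

With this layout the key-value cache is 8 times smaller than it would be with one key/value head per query head, while the number of query heads stays unchanged.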

The architecture also inherits several modern components from Llama 2. It uses Rotary Positional Embeddings (RoPE) to encode token positions, a more flexible method than learned absolute position embeddings because attention scores end up depending on the relative distance between tokens. For normalization, it uses RMSNorm, which helps improve training stability and efficiency. Instead of the typical ReLU activation function, TinyLlama uses SwiGLU, an activation that combines Swish with a Gated Linear Unit and has been shown to improve model performance and training dynamics.
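The three components named above are compact enough to sketch in NumPy. The formulas follow the standard definitions; the epsilon value, the RoPE base of 10000, the "rotate-half" pairing of dimensions, and all function names are assumptions for illustration and are not taken from the TinyLlama code.

```python
import numpy as np


def rms_norm(x, weight, eps=1e-5):
    """Scale by the root mean square of the features; no mean subtraction."""
    rms = np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)
    return x / rms * weight


def silu(x):
    """Swish with beta = 1: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def swiglu_mlp(x, w_gate, w_up, w_down):
    """Gated MLP: the Swish-activated gate multiplies the 'up' projection."""
    return (silu(x @ w_gate) * (x @ w_up)) @ w_down


def rope(x, base=10000.0):
    """x: (seq, head_dim) with even head_dim. Rotates pairs of features
    (i, i + head_dim/2) by an angle proportional to the token position."""
    seq, dim = x.shape
    half = dim // 2
    freqs = base ** (-np.arange(half) / half)
    angles = np.arange(seq)[:, None] * freqs[None, :]
    cos, sin = np.cos(angles), np.sin(angles)
    x1, x2 = x[:, :half], x[:, half:]
    return np.concatenate([x1 * cos - x2 * sin, x1 * sin + x2 * cos], axis=-1)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))
normed = rms_norm(x, np.ones(8))
mlp_out = swiglu_mlp(x, rng.standard_normal((8, 16)),
                     rng.standard_normal((8, 16)),
                     rng.standard_normal((16, 8)))

# Same query and key vector at every position: after RoPE, the dot product
# depends only on how far apart the two positions are.
q = rope(np.tile(rng.standard_normal(8), (6, 1)))
k = rope(np.tile(rng.standard_normal(8), (6, 1)))
print(np.isclose(q[2] @ k[1], q[5] @ k[4]))
```

The final check illustrates why RoPE is described as encoding relative position: shifting both tokens by the same offset leaves their attention score unchanged.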

Taken together, these architectural choices define TinyLlama. It keeps the Llama 2 framework as its foundation and combines it with Grouped-Query Attention, FlashAttention-2, and modern components such as RoPE, RMSNorm, and SwiGLU. The result is an efficient yet capable language model that balances performance against computational cost.

Performance Evaluation

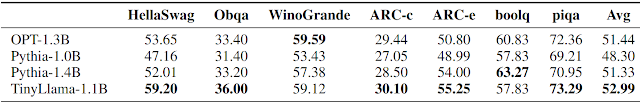

TinyLlama’s performance has been evaluated on a wide range of commonsense reasoning and problem-solving tasks, and it has been compared with several existing open-source language models of similar size. The primary focus was on language models with a decoder-only architecture and approximately 1 billion parameters. Specifically, TinyLlama was compared with OPT-1.3B, Pythia-1.0B, and Pythia-1.4B.

To understand the commonsense reasoning ability of TinyLlama, various tasks were considered, including HellaSwag, OpenBookQA, WinoGrande, ARC-Easy and ARC-Challenge, BoolQ, and PIQA. The models were evaluated in a zero-shot setting on these tasks using the Language Model Evaluation Harness framework. The results, presented in the table above, show that TinyLlama outperforms the baselines on many of the tasks and obtains the highest average score.

TinyLlama’s problem-solving capabilities were also evaluated using the InstructEval benchmark. This benchmark includes tasks such as Massive Multitask Language Understanding (MMLU), BIG-Bench Hard (BBH), Discrete Reasoning Over Paragraphs (DROP), and HumanEval. The models were evaluated in different shot settings depending on the task. The evaluation results, presented in the table above, indicate that TinyLlama exhibits better problem-solving skills than the existing models it was compared with.

These evaluations highlight the impressive performance of TinyLlama in both commonsense reasoning and problem-solving tasks, further establishing its effectiveness and versatility as a compact language model.

How to Access and Use this Model?

TinyLlama can be downloaded for free via GitHub, and all model checkpoints are available. Its Apache-2.0 license makes it suitable for commercial use. The team behind the model currently recommends using the fine-tuned chat version of TinyLlama. There is also an online chat demo, where users can interact with TinyLlama and see its outputs in real time.
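For local experimentation, one possible route is the Hugging Face `transformers` library. The sketch below is an assumption-laden example rather than the team's official instructions: the Hub model ID is assumed and should be checked against the project's links, the system prompt and generation settings are arbitrary, and the heavy imports are kept inside the function so the message helper works without them.

```python
# Assumed Hub ID for the chat checkpoint; verify it against the project's
# own links before relying on it.
MODEL_ID = "TinyLlama/TinyLlama-1.1B-Chat-v1.0"


def build_messages(user_prompt, system_prompt="You are a helpful assistant."):
    """Chat messages in the role/content format used by chat templates."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(user_prompt, max_new_tokens=128):
    """Loads the model on each call (simple, not efficient) and returns
    the generated reply as text."""
    # Imported lazily: requires `torch` and `transformers` to be installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)
    model.eval()

    # Render the conversation with the tokenizer's chat template, then tokenize.
    prompt = tokenizer.apply_chat_template(
        build_messages(user_prompt),
        tokenize=False,
        add_generation_prompt=True,
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    with torch.no_grad():
        output_ids = model.generate(
            **inputs, max_new_tokens=max_new_tokens, do_sample=False
        )
    # Drop the prompt tokens and decode only the newly generated ones.
    new_tokens = output_ids[0, inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example call (downloads the weights on first use):
# print(generate_reply("Explain grouped-query attention in one sentence."))
```

Greedy decoding (`do_sample=False`) is used here only to keep the output deterministic; sampling settings are a matter of preference.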

If you are interested in learning more about TinyLlama, all relevant links are provided under the 'Source' section at the end of this article.

Limitations

Despite its impressive capabilities, TinyLlama has certain limitations:

- Factual Errors and Inconsistencies: TinyLlama can sometimes generate factual errors, inconsistencies, or biases in its outputs, especially when the input is vague, noisy, or out-of-domain. This may affect the reliability and trustworthiness of the model and its applications.

- Complex Reasoning Tasks: TinyLlama may struggle with complex reasoning, logic, or arithmetic tasks that require more than generating natural language texts. For example, it may have difficulty answering questions that involve calculations, comparisons, or deductions.

- Multimodal Outputs: TinyLlama is not able to generate multimodal outputs, such as images, audio, or video, that may complement or enhance the natural language texts. This may limit the expressiveness and creativity of the model and its applications.

- Experimental Nature: It’s important to note that TinyLlama is an experiment designed to explore a question that remains under-explored: how far a smaller model can go when trained on a much larger dataset than usual. This means that while it has shown impressive capabilities, there is still much to learn and improve upon.

Conclusion

TinyLlama demonstrates remarkable performance and outperforms existing models of comparable sizes. Its compactness and power make it an ideal solution for various applications, especially those with limited computational resources. The future looks promising for TinyLlama, and it will be interesting to see how it continues to evolve and impact the field of AI.

Source

Research paper - https://arxiv.org/abs/2401.02385

GitHub Repo - https://github.com/jzhang38/TinyLlama

Chat demo link - https://huggingface.co/spaces/TinyLlama/tinyllama-chat

Disclaimer - This article is intended purely for informational purposes. It is not sponsored or endorsed by any company or organization, nor does it serve as an advertisement or promotion for any product or service. All information presented is based on publicly available resources and is subject to change. Readers are encouraged to conduct their own research and due diligence.
