Introduction

In the ever-evolving landscape of Artificial Intelligence, video generation has emerged as a crucial area of exploration. The past decade has witnessed AI making significant strides in fields like image synthesis and natural language processing. However, the domain of video generation, with its unique challenges of maintaining temporal consistency, handling diverse content, and ensuring high-resolution output, remained relatively unexplored. This is where Mora, a state-of-the-art model, steps in.

Mora is a pioneering multi-agent framework that aims to empower generalist video generation. It is the brainchild of a collaborative effort between researchers at Lehigh University and Microsoft Research. The team behind Mora envisioned a model that could democratize the process of video creation, making it accessible to a wider audience. The driving force behind the development of Mora was to create a versatile video generation system capable of catering to a wide range of tasks.

What is Mora?

Mora is a multi-agent framework designed to facilitate generalist video generation tasks. Leveraging a collaborative approach with multiple visual agents, it aims to replicate and extend the capabilities of OpenAI’s Sora. While Sora garnered significant attention, Mora takes a step further by incorporating advanced agents to tackle various video generation challenges.

Key Features of Mora

- Text-to-Video Generation: Mora can convert textual descriptions into high-resolution, temporally consistent videos. This means you can describe a scene in words, and Mora can bring it to life in video form.

- Text-Conditional Image-to-Video Generation: Mora can take a text description and an image, and generate a video that combines both elements. This feature bridges the gap between language and visual content, opening up new possibilities for video creation.

- Extending Generated Videos: It can extend videos seamlessly, ensuring coherence and continuity throughout the video.

- Video-to-Video Editing: Mora is not just a video generator, but also a video editor. It can remix existing videos, allowing for creative transformations.

- Connecting Videos: Mora can stitch together different video segments, ensuring smooth transitions and a cohesive final product.

- Simulating Digital Worlds: Mora can generate digital environments, which can be used for simulations or artistic expressions.

Capabilities and Use Cases of Mora

- Versatile Task Performance: Mora can perform a variety of tasks, from text-to-video generation to video-to-video editing. This versatility makes it a powerful tool for a wide range of video creation needs.

- Multiple Visual Agents: Mora can utilize multiple visual agents, allowing it to mimic the video generation capabilities of other models like Sora.

- Structured System of Agents: Mora features a structured yet adaptable system of agents. This structure, paired with an intuitive interface for configuring components and task pipelines, makes Mora user-friendly and adaptable to different video creation needs.

- Digital World Simulation: Whether for simulations or artistic expressions, Mora’s ability to generate digital environments opens up new possibilities for video creation. This feature can be particularly useful in fields like gaming, virtual reality, and digital art.

Although Mora has many impressive features and capabilities, it is always important to consider the specific needs and constraints of your project when choosing a video generation model.
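To make the idea of configurable task pipelines from the list above more concrete, here is a minimal sketch in Python. It is not Mora's actual interface: the agent names, the `AGENTS` and `PIPELINES` tables, and the `run_task` helper are all hypothetical, and the agents are stubs that only record the order in which they ran.

```python
from typing import Callable, Dict, List

State = Dict[str, str]
Agent = Callable[[State], State]


def make_stub_agent(name: str) -> Agent:
    """Return a placeholder agent that only records that it ran."""
    def run(state: State) -> State:
        trace = state.get("trace", "")
        return {**state, "trace": f"{trace} -> {name}" if trace else name}
    return run


# Hypothetical agent registry; real agents would wrap generative models.
AGENTS: Dict[str, Agent] = {
    name: make_stub_agent(name)
    for name in ("text_to_image", "image_to_image", "image_to_video", "video_connection")
}

# Hypothetical task configuration: each task is an ordered list of agent names.
PIPELINES: Dict[str, List[str]] = {
    "text_to_video": ["text_to_image", "image_to_video"],
    "text_to_video_with_edit": ["text_to_image", "image_to_image", "image_to_video"],
    "connect_videos": ["video_connection"],
}


def run_task(task: str, state: State) -> State:
    """Run every agent configured for the task, in order."""
    if task not in PIPELINES:
        raise ValueError(f"unknown task: {task}")
    for agent_name in PIPELINES[task]:
        state = AGENTS[agent_name](state)
    return state


if __name__ == "__main__":
    result = run_task("text_to_video", {"prompt": "a boat at sunset"})
    print(result["trace"])  # text_to_image -> image_to_video
```

Keeping the task definitions in a plain table means a new task can be added by listing agent names, without changing the code that runs them.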

How Does Mora Work? Architecture and Design

Mora operates as a groundbreaking multi-agent framework that is designed to revolutionize the field of video generation. It achieves this by leveraging the collaborative power of various specialized agents, each with distinct roles and operational skills. These agents work in harmony to accomplish a diverse range of video-related tasks in a modular and extensible approach.

The process begins with the text-to-image generation agent, which is responsible for translating textual descriptions into high-quality initial images. Following this, the image-to-image generation agent modifies these source images in response to specific textual instructions. The image-to-video generation agent then transitions these static frames into vibrant video sequences, ensuring a seamless narrative flow and visual consistency throughout. Additionally, the video connection agent utilizes the video-to-video agent to create seamless transition videos based on two input videos, ensuring a smooth and visually consistent transition between them.
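The hand-off between agents described above can be sketched in a few lines of Python. This is an illustrative outline only, not Mora's code: `Image`, `Video`, and every function name here are hypothetical, and each stub records the step it represents instead of calling a real model.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Image:
    """Stand-in for an image; only records how it was produced."""
    history: List[str] = field(default_factory=list)


@dataclass
class Video:
    """Stand-in for a video clip; only records how it was produced."""
    history: List[str] = field(default_factory=list)


def text_to_image(prompt: str) -> Image:
    # Hypothetical agent: a real system would call a text-to-image model here.
    return Image(history=[f"text_to_image({prompt!r})"])


def image_to_image(image: Image, instruction: str) -> Image:
    # Hypothetical agent: edits the source image according to the instruction.
    return Image(history=image.history + [f"image_to_image({instruction!r})"])


def image_to_video(image: Image, prompt: str) -> Video:
    # Hypothetical agent: animates the still image into a clip.
    return Video(history=image.history + [f"image_to_video({prompt!r})"])


def connect_videos(first: Video, second: Video) -> Video:
    # Hypothetical agent: produces a transition clip between two input videos.
    return Video(history=["connect_videos"] + first.history + second.history)


def generate_video(prompt: str, edit_instruction: Optional[str] = None) -> Video:
    """Chain the agents in the order described in the text."""
    image = text_to_image(prompt)
    if edit_instruction is not None:
        image = image_to_image(image, edit_instruction)
    return image_to_video(image, prompt)


if __name__ == "__main__":
    clip = generate_video("a red fox running through snow", "make it dusk")
    for step in clip.history:
        print(step)
```

Each agent consumes the previous agent's output, which is what lets individual stages be swapped or skipped without touching the rest of the chain.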

Mora’s architecture and design are meticulously structured to enable the effective coordination of multiple agents, each specializing in a segment of the video generation process. This collaborative process starts with generating an image from text, followed by using both the generated image and the input text to produce a video. The framework’s internal components are designed to facilitate the completion of an expanded range of tasks and projects, making Mora an indispensable tool for diverse application scenarios.

So, Mora’s internal architecture involves multiple visual agents collaborating harmoniously. While the specifics are intricate, it draws inspiration from Sora’s design. The agents communicate, negotiate, and collectively contribute to video synthesis, making Mora a powerful tool in the realm of AI video generation.

Performance Evaluation with Other Models

Mora’s performance has been rigorously evaluated against other models in the realm of video generation. The evaluation covers a wide range of tasks, including text-to-video generation, text-conditional image-to-video generation, extending generated videos, video-to-video editing, connecting videos, and simulating digital worlds.

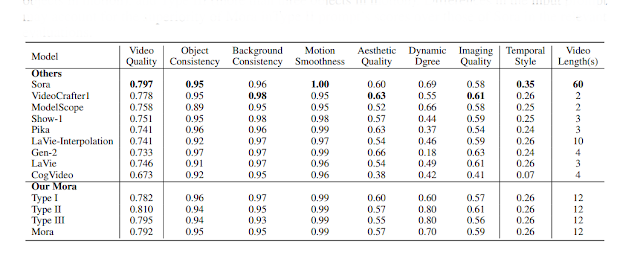

source - https://arxiv.org/pdf/2403.13248.pdf

In text-to-video generation (shown in the figure above), Mora has been compared with several open-source models and outperformed them, ranking second only to Sora. The evaluation takes into account metrics such as video quality, object consistency, background consistency, motion smoothness, aesthetic score, dynamic degree, and imaging quality.

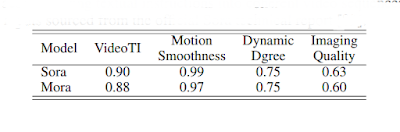

The evaluation also extends to text-conditional image-to-video generation. The table above shows Mora's performance on this task, where it comes in a close second to Sora. Mora shows that it can interpret textual prompts accurately and generate smooth motion sequences. As shown in the figure below, it closely trails Sora in aligning the video with textual instructions and in maintaining the thematic and aesthetic essence of the generated videos.

In the tasks of extending generated videos and video-to-video editing, Mora showcases its proficiency by closely matching Sora’s performance. It effectively preserves narrative flow and visual continuity in extended videos, and emulates stylistic temporal consistency in video editing. Despite Sora’s slightly higher imaging quality, Mora’s capabilities and potential enhancements highlight its effectiveness and promise in the realm of video generation. These evaluations and their results can be found in detail in the research paper.

Overall, the comprehensive results affirm the capabilities of Mora and position it as a groundbreaking tool, promising significant advancements in how video content is created and utilized.

Mora’s Role and Potential in AI Video Generation

The Mora model’s multi-agent framework is its key differentiator. This framework allows Mora to incorporate several advanced visual AI agents, each with their own specialized capabilities. This is a significant departure from the single-model approach used by many other AI video generation models. The multi-agent approach allows Mora to tackle a variety of tasks with a high degree of flexibility and adaptability.

In contrast, other models like VideoCrafter1, Show-1, and LaVie, while impressive in their own right, have more specialized capabilities. VideoCrafter1, for instance, excels at generating videos based on both text and image inputs. Show-1 can generate high-quality videos from textual descriptions, and LaVie can synthesize visually realistic and temporally coherent videos. However, none of these models offer the same breadth of capabilities as Mora.

Moreover, Mora can generate videos that are not only high-quality but also closely aligned with the style and aesthetics of the Sora model. This capability allows Mora to generate videos that are visually appealing and engaging, further enhancing its value in the field of AI video generation.

So, these features allow Mora to deliver high-quality, versatile and visually appealing video content, making it a valuable tool in the rapidly evolving field of AI video generation.

How to Access & Use This Model?

While details are still emerging, the Mora model shows promise in the field of video generation. Those interested in using Mora should keep an eye on the official GitHub repository for updates on code availability. Once released, accompanying documentation will provide instructions for setting up and utilizing the model for video creation tasks.

Limitations and Future Directions

Mora has made significant strides. However, like any cutting-edge technology, it faces certain limitations. Let’s explore these challenges:

- Lifelike Movements: Mora faces challenges in achieving realistic movements in generated videos.

- Quality and Length: Maintaining video quality and appropriate length remains a hurdle. While Mora excels at generating high-quality content, extending past 12 seconds can lead to a significant drop in visual fidelity.

- Precision in Instructions: Accurately following specific textual prompts is essential, and it remains a challenge for Mora.

- Access to High-Quality Datasets: Suitable video datasets are crucial for training, and obtaining them remains a challenge.

Looking ahead, Mora’s future research directions promise exciting developments:

- Sophisticated Natural Language Understanding: Integrating advanced language understanding capabilities would enhance Mora’s context-awareness and accuracy.

- Real-Time Feedback Loops: Interactive video creation through user-guided feedback loops could personalize the process.

- Resource Optimization: Optimizing computational requirements would democratize access to Mora.

- Collaborative Research Environments: Open collaboration fosters innovation and collective advancement.

These directions aim to elevate Mora’s capabilities and drive progress in video generation.

Conclusion

Mora represents a significant step forward in the field of AI video generation. Despite its limitations, its unique approach and capabilities make it a promising tool for future developments in the field. As the field continues to advance, models like Mora that offer a wide range of capabilities and high-quality output are likely to lead the way.
