Introduction

Specialized fields like healthcare face a classic paradox: building ever-larger AI models is becoming less and less practical. Where accuracy and efficiency are both essential, Large Language Models (LLMs) offer impressive capabilities but are operationally impractical, because their computational cost and latency are too high for real-time clinical use. High-quality clinical data is also scarce and sensitive, and useful real-world AI solutions often depend on professional context. MediPhi, a family of Small Language Models (SLMs), is designed to fill this gap. Rather than assuming that bigger is always better, MediPhi represents a strategic pivot towards cost-effective, highly specialized, computationally efficient models. It is a value-driven approach that aims to produce not only research projects but also real-world deployment capabilities in clinical Natural Language Processing (NLP).

This approach reflects a clear aim: to cut through the bottlenecks that continue to stall AI in medicine. The high barriers to entry for LLMs, given their costs and data requirements, have delayed the widespread adoption of clinical AI in direct patient care. With its modular architecture of compact models, MediPhi aims to democratize access to clinical AI. This approach improves reproducibility, creates the conditions for sustained improvement, and may ultimately allow advanced NLP tools to be built directly into clinical workflows, with potential benefits for both productivity and patient care.

Development and Contributors

The MediPhi collection was created by Microsoft Healthcare & Life Sciences in collaboration with partners from Microsoft Research Montréal and IKIM, University Hospital Essen. The main motivation was to present a new framework for turning SLMs into high-performance clinical models, directly addressing the cost and latency constraints that limit the use of LLMs in patient care environments.

What is MediPhi?

MediPhi consists of seven specialized Small Language Models (SLMs), each with 3.8 billion parameters and each built on the Phi3.5-mini-instruct base model. The models are fine-tuned for clinical NLP applications and are mainly designed for research purposes in English. Microsoft strongly recommends that a medical professional validate all model outputs: the models are intended solely for research and should be thoroughly reviewed for accuracy, safety, and fairness before any deployment.

MediPhi's Variants

The MediPhi model family is a toolkit of several variants, each with its own focus:

- MediPhi-Instruct: The flagship model, trained to carry out a broad range of clinical NLP tasks using the large-scale MediFlow synthetic instruction dataset.

- MediPhi: The single generalist model, produced by combining the five domain-specific experts with the BreadCrumbs merging method. It delivers strong average performance across the CLUE+ benchmark.

- Five Domain-Specific MediPhi Experts: These models form the foundation of the family. Each is fine-tuned on a separate medical corpus and then merged back with the base model to retain general capabilities:

- MediPhi-PubMed: Fine-tuned on 48 billion tokens of PubMed scientific documents and abstracts, this expert is best suited to take advantage of biomedical literature.

- MediPhi-Clinical: Trained on open-source clinical documents (patient summaries, doctor-patient conversations), this variant is designed for tasks associated with immediate patient care.

- MediPhi-MedWiki: Trained on Medical Wikipedia, this specialist had the highest average improvement across individual specialists (3.2%), presumably because it learned from wide educational content.

- MediPhi-Guidelines: Trained on guidelines from established health organisations, prioritising structured, authoritative knowledge.

- MediPhi-MedCode: Trained on medical coding corpora, this specialist achieved a 44% relative improvement in ICD-10 coding over its base model, even surpassing GPT-4-0125 by 14% on this task.

Key Features of MediPhi

Several core characteristics set MediPhi apart:

- MediPhi demonstrates a modular and streamlined structure. Each SLM in the collection can be adapted as a high-performance clinical model with minimal computational cost compared to LLMs.

- MediPhi retains general capabilities. By using merging techniques such as SLERP, MediPhi mitigates 'catastrophic forgetting', so that each merged model keeps the core skills of Phi3.5, including instruction following and long-context handling.

- MediPhi has increased safety and groundedness. MediPhi-Instruct retains the safety protocols in its base model and is even more grounded, effectively refusing to respond to potentially harmful queries from both the clinician and patient vantage points.

- MediPhi's license is commercially permissive. As the first high-performance SLM collection with an MIT license in the medical domain, MediPhi provides additional pathways for research and commercial use cases.

Capabilities and Use Cases of MediPhi

MediPhi's distinctive configuration presents exciting real-world use cases:

- Automated Medical Coding: With the accuracy of the MediPhi-MedCode specialist, MediPhi is well suited to automating and improving medical documentation and billing.

- Custom Clinical NLP Solutions: Developers can quickly build custom summarisation, named entity recognition (NER), and relation extraction tools using the modular structure and commercial licence.

- Specialized Knowledge Extraction: MediPhi-PubMed and MediPhi-Guidelines knowledge specialists provide an extremely accurate, context-aware information extraction mechanism from complex medical texts.

- A New Benchmark for Strong Medical AI: Nearly as performant as models twice its size, MediPhi is an excellent basis for research on strong and efficient medical AI.

How MediPhi Achieves its Specialisation

MediPhi's development is a real-world example of model specialisation achieved through a multi-stage process: continual pre-training, model merging, and clinical alignment.

The process starts with Continual Pre-Training, in which domain-specific knowledge is injected into the base Phi3.5-mini-instruct model. This is done through Domain Adaptation Pre-Training (DAPT) on large text corpora (e.g., PubMed) and a newer approach called Pre-Instruction Tuning (PIT), in which the model first learns from data and tasks related to the corpus (e.g., summarisation, NER) and then trains on both the instruction data and the pre-training data in a combined stage.

The second phase is Model Merging. Each of the five fine-tuned experts is merged back with the base model separately using Spherical Linear Interpolation (SLERP) to maintain general capabilities. The five experts are then merged into the unified MediPhi SLM using the BreadCrumbs technique, which an evolutionary algorithm identified as the best merging operator.
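To make the SLERP step more concrete, here is a minimal sketch of spherical linear interpolation between two weight tensors. It is a generic NumPy illustration, not the MediPhi team's code: the function names `slerp` and `merge_state_dicts`, the interpolation weight `t=0.5`, and the choice to interpolate each tensor independently are all assumptions made for this example.

```python
import numpy as np


def slerp(t, w_base, w_expert):
    """Spherically interpolate between two weight tensors of the same shape.

    t=0 returns w_base, t=1 returns w_expert. The angle is measured between
    the flattened, normalised tensors; the interpolation coefficients are
    then applied to the original (unnormalised) tensors.
    """
    a = np.asarray(w_base, dtype=np.float64)
    b = np.asarray(w_expert, dtype=np.float64)
    norm_a = np.linalg.norm(a)
    norm_b = np.linalg.norm(b)
    if norm_a == 0.0 or norm_b == 0.0:
        # No direction to interpolate along: fall back to linear interpolation.
        return (1.0 - t) * a + t * b

    cos_omega = np.dot(a.ravel() / norm_a, b.ravel() / norm_b)
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    sin_omega = np.sin(omega)
    if sin_omega < 1e-6:
        # Nearly collinear tensors: SLERP degenerates to linear interpolation.
        return (1.0 - t) * a + t * b

    coef_a = np.sin((1.0 - t) * omega) / sin_omega
    coef_b = np.sin(t * omega) / sin_omega
    return coef_a * a + coef_b * b


def merge_state_dicts(base, expert, t=0.5):
    """Apply SLERP tensor by tensor (hypothetical helper; t=0.5 is an assumption)."""
    return {name: slerp(t, base[name], expert[name]) for name in base}


# Toy example with two orthogonal "weight vectors".
merged = merge_state_dicts(
    {"layer.weight": np.array([1.0, 0.0])},
    {"layer.weight": np.array([0.0, 1.0])},
)
print(merged["layer.weight"])
```

For the orthogonal toy vectors, the midpoint lies on the unit circle rather than on the straight line between them, which is the property that distinguishes SLERP from plain weight averaging.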

The final stage of development is Clinical Alignment, which tunes the model for clinical tasks. The team used MediFlow, a large-scale synthetic dataset of 2.5 million high-quality instruction examples spread across 14 clinical task categories. The unified MediPhi model underwent Supervised Fine-Tuning (SFT) on MediFlow, followed by Direct Preference Optimization (DPO) on a smaller curated dataset. This rigorous multi-stage process was computationally expensive, taking approximately 12,000 GPU hours, but it enables MediPhi to attain highly specialized and robust performance.
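For readers unfamiliar with DPO, the sketch below computes the standard DPO loss for a single preference pair from sequence log-probabilities. It illustrates the general technique only: the function name `dpo_loss`, the value `beta=0.1`, and the example log-probabilities are assumptions, and nothing here reflects the actual MediPhi training configuration.

```python
import math


def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) response pair.

    Inputs are total log-probabilities of each response under the model being
    trained (policy) and under a frozen reference model. beta=0.1 is an
    illustrative value, not a setting reported for MediPhi.
    """
    chosen_logratio = policy_logp_chosen - ref_logp_chosen
    rejected_logratio = policy_logp_rejected - ref_logp_rejected
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) written in a numerically stable form.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Made-up log-probabilities, for illustration only.
no_preference = dpo_loss(-10.0, -12.0, -10.0, -12.0)  # policy equals reference
prefers_chosen = dpo_loss(-9.0, -13.0, -10.0, -12.0)  # policy favours the chosen answer
print(no_preference, prefers_chosen)
```

The loss falls as the policy assigns relatively more probability to the preferred response than the reference model does, which is how preference data steers the model without a separate reward model.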

Performance Evaluation

The assessment on the extended CLUE+ benchmark highlights both the efficiency and power of MediPhi.

The flagship model, MediPhi-Instruct (3.8B), achieved an average accuracy of 43.4%, an 18.9% relative increase over the base model. This shows the effect of the specialized training and alignment process, with considerable gains on tasks such as identifying social determinants of health and question answering on radiology reports. The individual experts performed well too: the MedCode model outperformed the substantially larger GPT-4-0125 model in ICD-10 coding, and the MedWiki expert showed the most consistent increases across the datasets.
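Because the 18.9% figure is a relative increase, not a gain in percentage points, it can help to work the arithmetic backwards. The short sketch below derives the base-model average implied by the two reported numbers. The derived baseline is an inference from those figures rather than a value stated in this article, and the helper names are hypothetical.

```python
def implied_baseline(score, gain_pct):
    """Baseline score implied by a final score and a relative gain in percent."""
    return score / (1.0 + gain_pct / 100.0)


def relative_gain_pct(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100.0


instruct_avg = 43.4            # reported CLUE+ average for MediPhi-Instruct (%)
reported_relative_gain = 18.9  # reported relative increase over the base model (%)

baseline = implied_baseline(instruct_avg, reported_relative_gain)
absolute_gain = instruct_avg - baseline

print(f"implied base-model average: {baseline:.1f}%")              # 36.5%
print(f"absolute gain: {absolute_gain:.1f} percentage points")     # 6.9
```

In other words, an 18.9% relative increase corresponds here to roughly 6.9 percentage points of average accuracy.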

From a competitive standpoint, MediPhi punches above its weight. Although MediPhi-Instruct is less than half the size of comparable models such as Meta-Llama-3-8B-Instruct (8B), it delivers nearly equal performance (less than a 1% difference) on the CLUE+ benchmark and outperforms all LLaMA3 models on 4 datasets, leading LLaMA3 by 29.2% on ICD10CM coding. Compared with other medical models such as Llama3-Med42-8B, MediPhi shows more overall uplift over its base model, with improvements on 9 to 11 datasets versus 5 for Med42. Taken together, the evidence suggests that MediPhi offers a strong balance of size efficiency and performance, and sets a new reference point for what specialised SLMs can achieve.

MediPhi vs. MedGemma

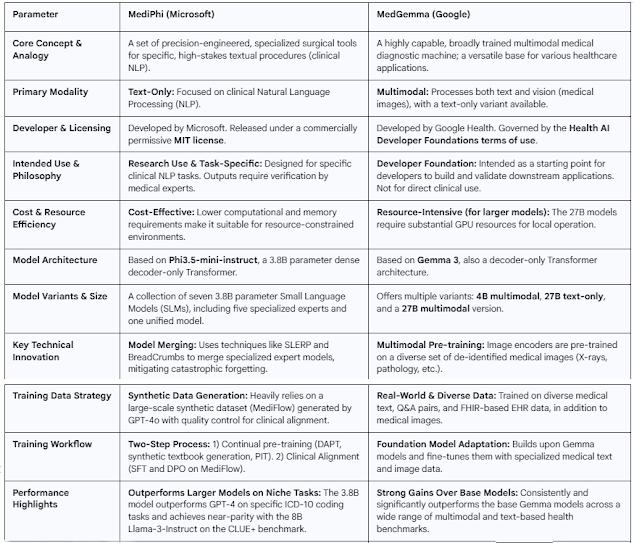

The table above highlights the different strategies taken by Microsoft and Google in medical AI with MediPhi and MedGemma, respectively. MediPhi is, in essence, envisioned as a set of precision-crafted 'surgical instruments' for text-only clinical NLP tasks. By contrast, MedGemma is a multi-modal diagnostic tool that can handle both text and medical images. In the end, the choice is a strategic one: MediPhi offers surgical precision for text tasks, while MedGemma offers a broader foundation for multi-modal applications.

How to Access and Use MediPhi

Getting started with MediPhi is straightforward for developers and researchers. The full model set, including the flagship MediPhi-Instruct and all of its domain-specific counterparts, is available on Hugging Face and can be loaded locally through the transformers library with PyTorch. The models work best with prompts in a particular chat format (<|system|> ... <|user|> ... <|assistant|>). On the hardware side, the models default to Flash Attention, which requires recent NVIDIA GPUs (A100/H100); users of older GPUs can set attn_implementation="eager" instead. Importantly, the whole collection is released under a commercially permissive MIT license, which supports both academic research and the creation of commercial clinical tools.
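The following is a minimal sketch of how loading and prompting might look with the transformers library. Several details are assumptions rather than facts from this article: the repository ID `microsoft/MediPhi-Instruct`, the `<|end|>` turn separator (borrowed from the Phi-3.5 prompt convention), the helper names `build_prompt` and `generate_reply`, and the example messages. In practice, `tokenizer.apply_chat_template` is usually the safer route, since it reads the template shipped with the model.

```python
def build_prompt(system_message, user_message):
    """Assemble a single-turn prompt in the <|system|>/<|user|>/<|assistant|> layout.

    The <|end|> separator follows the Phi-3.5 convention and is an assumption;
    check the model card or use tokenizer.apply_chat_template to be sure.
    """
    return (
        f"<|system|>\n{system_message}<|end|>\n"
        f"<|user|>\n{user_message}<|end|>\n"
        f"<|assistant|>\n"
    )


def generate_reply(prompt, model_id="microsoft/MediPhi-Instruct", max_new_tokens=128):
    """Load the model and generate a reply (downloads several GB of weights on first use).

    model_id is an assumed repository name. attn_implementation="eager" avoids
    the Flash Attention requirement on older GPUs or on CPU.
    """
    # Imported lazily so that build_prompt can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype="auto",
        attn_implementation="eager",
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


prompt = build_prompt(
    "You are a research assistant for clinical NLP.",
    "List the medications mentioned in: 'Patient started on metformin 500 mg.'",
)
print(prompt)
# reply = generate_reply(prompt)  # uncomment to run the model locally
```

Any output produced this way remains research output and should be reviewed by a medical professional, in line with Microsoft's guidance.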

Limitations

Despite the exciting developments around MediPhi, its limitations are worth stating clearly. Developing the models was computationally expensive, which has implications for reproducibility. The core MediFlow dataset has its own limitations: it is currently English-only and does not cover multi-turn conversations. Although the developers attempted to preserve general capabilities, such heavy specialization may have reduced performance on non-medical tasks and multilingual use. Furthermore, like all language models, MediPhi can produce inaccurate information or reflect societal biases, and performance may degrade over long conversations. Microsoft emphasizes that these models must be used for research purposes only, and that any use in a high-risk context, such as direct medical advice, should be properly evaluated and closely scrutinized by a qualified professional.

Conclusion

MediPhi is a good example of a potentially very important idea: perhaps the future of clinical AI lies not in a single, all-encompassing model, but in a broad assortment of specialized tools. Such a toolkit can improve efficiency and bring us closer to a future in which AI augments patient care and clinical operations, with an approach that is adaptable and portable to many contexts beyond medicine.

MediPhi Model: https://huggingface.co/microsoft/MediPhi

MediPhi Variants: https://huggingface.co/collections/microsoft/mediphi-6835cb830830dfc6784d0a50

Disclaimer - This article is intended purely for informational purposes. It is not sponsored or endorsed by any company or organization, nor does it serve as an advertisement or promotion for any product or service. All information presented is based on publicly available resources and is subject to change. Readers are encouraged to conduct their own research and due diligence.
