Introduction

As video captioning advances, the need for accessible content is more pressing than ever. Traditional methods often struggle with the dynamic nature of video and frequently produce delayed or inaccurate captions. To address these challenges, a team of researchers at Google has proposed ‘streaming dense video captioning’, a model that aims to produce accurate, detailed captions while the video is still playing.

The development and contribution of ‘streaming dense video captioning’ is a testament to the collaborative spirit within the AI research community. Backed by Google’s extensive resources and dedication to innovation, this project aims to significantly enhance video accessibility and comprehension on a global scale.

The team's primary motivation was to overcome the limitations of existing dense video captioning models, which process a fixed number of downsampled frames and make a single full prediction only after viewing the entire video. The streaming approach is designed to remove both constraints.

What is Streaming Dense Video Captioning?

Streaming Dense Video Captioning is a model that predicts captions localized temporally in a video. It is designed to handle long input videos, predict rich, detailed textual descriptions, and produce outputs before processing the entire video. Unlike traditional models that require the entire video to be processed before generating captions, this model stands out with its ability to produce outputs in real-time, as the video streams.

Key Features of Streaming Dense Video Captioning

The Streaming Dense Video Captioning model is distinguished by two groundbreaking features:

- Memory Module: This novel component is based on clustering incoming tokens. It is designed to handle arbitrarily long videos, thanks to its fixed-size memory. This feature allows the model to process extended videos without compromising on performance or accuracy.

- Streaming Decoding Algorithm: This feature enables the model to make predictions before the entire video has been processed. It allows for immediate caption generation, setting it apart from traditional captioning methods and demonstrating the model’s advanced capabilities.

Capabilities and Use Cases of Streaming Dense Video Captioning

The Streaming Dense Video Captioning model’s unique ability to process long videos and generate detailed captions in real-time opens up a plethora of applications:

- Video Conferencing: The model can enhance communication by providing real-time captions, making meetings more accessible and inclusive.

- Security: In security applications, the model can provide real-time descriptions of video footage, aiding immediate response and decision-making.

How Does Streaming Dense Video Captioning Work?

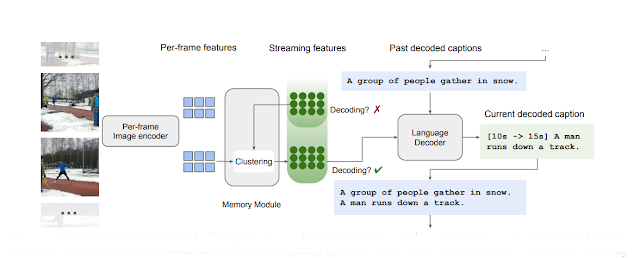

The Streaming Dense Video Captioning (SDVC) model is a sophisticated AI model designed to generate captions for videos in real-time. It operates by encoding video frames one by one, maintaining an updated memory, and predicting captions sequentially.

Frame-by-Frame Encoding - The SDVC model begins by encoding each frame of the video individually. An image encoder analyzes the visual content of the frame and converts it into a set of feature tokens that the rest of the model can process. The paper applies the approach to two existing captioning architectures, GIT and Vid2Seq, so the frame encoder is the visual backbone that those models already use.
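To make the idea of "frames in, tokens out" concrete, here is a toy sketch of per-frame tokenization. It is only a stand-in: a real encoder is a learned neural network, while this version simply cuts a grayscale frame into square patches and flattens each one. The names `frame_to_tokens`, `encode_stream`, and `patch` are hypothetical and do not come from the released code.

```python
from typing import Iterable, Iterator, List

Frame = List[List[float]]   # grayscale frame as rows of pixel values
Token = List[float]         # one flattened patch


def frame_to_tokens(frame: Frame, patch: int) -> List[Token]:
    """Split one frame into non-overlapping patch x patch tokens.

    Toy stand-in for a learned image encoder (assumption, not the
    paper's encoder): each token is just the raw pixels of one patch.
    """
    height, width = len(frame), len(frame[0])
    if height % patch or width % patch:
        raise ValueError("frame size must be a multiple of the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([frame[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens


def encode_stream(frames: Iterable[Frame], patch: int) -> Iterator[List[Token]]:
    """Encode frames lazily, one at a time, as they arrive."""
    for frame in frames:
        yield frame_to_tokens(frame, patch)
```

Because `encode_stream` is a generator, it never needs the whole video in memory, which is the property the streaming design relies on.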

Memory Module - The encoded frames are then passed to the memory module. This module is based on clustering incoming tokens, which are essentially the encoded representations of the frames. The memory module groups similar tokens together, creating clusters that represent different aspects of the video content. This process allows the model to keep track of what has been shown in the video so far and helps it generate relevant captions.
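The clustering idea can be sketched in a few lines. The following is a minimal illustration, assuming a K-means-style update in which the memory holds at most `capacity` tokens and each memory token carries a weight counting how many original tokens it has absorbed. The function name, parameters, and the choice of two refinement iterations are assumptions made for illustration, not the released implementation.

```python
from typing import List, Tuple

Token = List[float]


def sq_dist(a: Token, b: Token) -> float:
    """Squared Euclidean distance between two tokens."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def update_memory(memory: List[Token], weights: List[float],
                  new_tokens: List[Token], capacity: int,
                  iters: int = 2) -> Tuple[List[Token], List[float]]:
    """Merge new tokens into a memory of at most `capacity` tokens.

    Hypothetical sketch: weighted K-means over old memory plus new
    tokens, with centers initialized from the first `capacity` points.
    `iters` must be at least 1.
    """
    points = [list(t) for t in memory] + [list(t) for t in new_tokens]
    point_w = list(weights) + [1.0] * len(new_tokens)
    if len(points) <= capacity:
        return points, point_w          # memory not full yet: just append

    centers = [list(p) for p in points[:capacity]]
    center_w = point_w[:capacity]
    for _ in range(iters):
        # Assign every point to its nearest center.
        assign = [min(range(capacity), key=lambda k: sq_dist(p, centers[k]))
                  for p in points]
        center_w = [0.0] * capacity
        for k in range(capacity):
            members = [i for i, a in enumerate(assign) if a == k]
            total = sum(point_w[i] for i in members)
            center_w[k] = total
            if total == 0.0:
                continue                # empty cluster: keep the old center
            dim = len(centers[k])
            centers[k] = [sum(point_w[i] * points[i][d] for i in members) / total
                          for d in range(dim)]
    return centers, center_w


# Usage: feed two frames of three 2-D tokens into a memory of size 4.
memory, weights = [], []
for frame_tokens in ([[0.0, 0.0], [0.0, 1.0], [10.0, 10.0]],
                     [[10.0, 11.0], [0.0, 0.5], [20.0, 20.0]]):
    memory, weights = update_memory(memory, weights, frame_tokens, capacity=4)
```

However many frames arrive, the memory never grows beyond `capacity` tokens, so the cost of later stages stays constant with video length. The weights let a token that summarizes many earlier tokens count for more than a single new one when centers are averaged.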

Streaming Decoding Algorithm - The final component of the SDVC model is the streaming decoding algorithm. Rather than waiting for the end of the video, the algorithm decodes from the current memory at intermediate points in the video, which the paper calls decoding points, and predicts captions for the events seen so far. Within each caption, text is generated sequentially, one token at a time. This lets the model emit captions as the video plays instead of after it ends.
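The control flow of streaming decoding can be sketched as a loop that updates the memory on every frame and decodes only at selected points. This is a simplified illustration under stated assumptions: decoding happens every `decode_every` frames, and earlier predictions are passed to the decoder as context. The callables `encode_frame`, `update_memory`, and `decode` are hypothetical placeholders for the real model components.

```python
from typing import Any, Callable, Iterable, Iterator, List, Tuple


def stream_captions(
    frames: Iterable[Any],
    encode_frame: Callable[[Any], List[Any]],
    update_memory: Callable[[List[Any], List[Any]], List[Any]],
    decode: Callable[[List[Any], List[str]], List[str]],
    decode_every: int,
) -> Iterator[Tuple[int, List[str]]]:
    """Yield (frame_index, new_captions) at each decoding point.

    Hypothetical sketch: all three callables are supplied by the caller
    and stand in for the encoder, memory module, and text decoder.
    """
    memory: List[Any] = []
    history: List[str] = []             # captions emitted so far
    for index, frame in enumerate(frames, start=1):
        memory = update_memory(memory, encode_frame(frame))
        if index % decode_every == 0:   # a decoding point
            new_captions = decode(memory, history)
            history.extend(new_captions)
            yield index, new_captions
```

Because the function is a generator, a caller receives the first captions after `decode_every` frames, without waiting for the stream to finish. Passing `history` to the decoder is one way to let later decoding points avoid repeating events that were already described.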

The SDVC model’s design allows it to generate accurate and relevant captions for videos in real-time. However, it’s important to note that the model’s performance can be influenced by the quality of the video input, the accuracy of the frame encoding, and the effectiveness of the memory module and decoding algorithm.

Performance Evaluation with Other Models

The Streaming Dense Video Captioning model outperforms the state of the art on three key benchmarks: ActivityNet, YouCook2, and ViTT. As illustrated in the table below, the model achieves substantial improvements over previous works, notably improving the CIDEr score on ActivityNet by 11.0 points and on YouCook2 by 4.0 points. The model also achieves state-of-the-art results on paragraph captioning tasks.

source - https://arxiv.org/pdf/2404.01297.pdf

Compared with traditional global dense video captioning models, as shown in the figure below, the proposed streaming model proves more effective. It surpasses the baseline in dense video captioning tasks across multiple datasets. When applied to both the GIT and Vid2Seq architectures, the streaming model consistently outperforms the baseline, which suggests that the gains do not depend on a particular backbone.

The model’s effectiveness and versatility across different backbones and datasets are evident when evaluated on three widely used dense video captioning datasets: ActivityNet, YouCook2, and ViTT. The proposed method has achieved significant gains over previous works, underscoring the generality and effectiveness of the streaming model in the realm of video captioning.

How to Access and Use this Model?

The code for the Streaming Dense Video Captioning model is released and can be accessed at the official GitHub repository. The repository provides instructions on how to use the model. Its open-source nature encourages collaboration and innovation in the field.

If you are interested in learning more about this AI model, all relevant links are provided in the 'Source' section at the end of this article.

Limitations and Future Work

While the Streaming Dense Video Captioning model has made significant strides in the field of video captioning, there is always room for further improvement.

- Integration of ASR: The model could potentially be enhanced by integrating Automatic Speech Recognition (ASR) as an additional input modality. This could be particularly beneficial for datasets like YouCook2.

- Development of New Benchmarks: There is a need for a benchmark that requires reasoning over longer videos. This would provide a more robust evaluation of streaming models and could lead to further advancements in the field of dense video captioning.

- Integration of Multiple Modalities: While the current focus is on paragraph captioning, future work could explore the integration of multiple modalities. This could potentially enhance the performance of dense video captioning models, making them even more effective and versatile.

Conclusion

Streaming Dense Video Captioning represents a significant advancement in the field of video captioning. Its ability to handle long videos and generate detailed captions in real-time opens up new possibilities for applications such as video conferencing, security, and continuous monitoring. However, like all models, it has its limitations and there is always room for improvement and future work. As technology continues to advance, we can look forward to seeing how this model evolves and impacts the field of video captioning.

Source

Research paper: https://arxiv.org/abs/2404.01297

Research document: https://arxiv.org/pdf/2404.01297.pdf

Main GitHub repo: https://github.com/google-research/scenic

Project GitHub repo: https://github.com/google-research/scenic/tree/main/scenic/projects/streaming_dvc

HF paper: https://huggingface.co/papers/2404.01297
