Introduction

The journey towards autonomous software improvement has been fraught with challenges that have historically impeded progress. Traditional approaches to software development have relied heavily on human expertise for tasks such as issue summarization, bug reproduction, fault localization, and program repair. These tasks are complex and multifaceted, often requiring a nuanced understanding of both the codebase and the problem domain.

Enter AutoCodeRover, a cutting-edge system designed to tackle these historical difficulties head-on. AutoCodeRover represents a paradigm shift in program modification and patching. It offers a glimpse into a future where software can self-improve, reducing the reliance on human intervention and accelerating the development cycle.

The development of AutoCodeRover has been propelled by the dedicated efforts of researchers from the National University of Singapore. Their contributions have been pivotal in advancing the field of autonomous software engineering. With a motto and vision centered on enabling autonomous software engineering, AutoCodeRover aims to elevate the efficacy of AI in software development. It seeks to bridge the gap between the potential of AI and its practical application, ultimately fostering an ecosystem where software not only supports but also enhances human creativity and efficiency.

What is AutoCodeRover?

AutoCodeRover is a fully automated approach to resolving GitHub issues, covering both bug fixes and feature additions. It combines Large Language Models (LLMs) with code search over the project to produce program modifications, that is, patches.

Key Features of AutoCodeRover

AutoCodeRover boasts several unique features:

- Resolves about 22% of the issues in the SWE-bench lite benchmark.

- Outperforms its competitor SWE-agent on the SWE-bench lite benchmark.

- Incorporates code search APIs that are aware of program structure.

- Uses an abstract syntax tree to understand code elements like methods and classes.

- Utilizes Spectrum-based Fault Localization to identify potential errors by analyzing test results.
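
To make the idea of structure-aware code search concrete, here is a minimal sketch built on Python's standard `ast` module. The function name `search_method_in_class` and the sample `Cart` class are assumptions chosen for illustration; this is not AutoCodeRover's actual implementation, only an example of looking up a method by class and name instead of by plain text matching.

```python
import ast

# Sample source to search; the class and functions are made up for this example.
SOURCE = '''
class Cart:
    def add(self, item):
        self.items.append(item)

    def total(self):
        return sum(i.price for i in self.items)

def total(values):
    return sum(values)
'''

def search_method_in_class(source, class_name, method_name):
    """Return the source text of each `method_name` defined directly in `class_name`."""
    tree = ast.parse(source)
    hits = []
    for node in ast.walk(tree):
        if isinstance(node, ast.ClassDef) and node.name == class_name:
            for child in node.body:
                is_function = isinstance(child, (ast.FunctionDef, ast.AsyncFunctionDef))
                if is_function and child.name == method_name:
                    hits.append(ast.get_source_segment(source, child))
    return hits

snippets = search_method_in_class(SOURCE, "Cart", "total")
print(snippets[0])
```

A plain text search for `total` would also match the module-level function; the AST-based lookup returns only the method that belongs to `Cart`.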

Capabilities/Use Cases of AutoCodeRover

- Skilled in addressing GitHub issues by automating debugging, generating code, and adding new features.

- Proven success in fixing a specific Django issue, referenced as #32347.

- Provides personalized consulting services to foster business development and AI integration.

- Improves the likelihood of fixing errors by using statistical analysis of test case results.

- Enables several attempts to create the right fixes by testing the modified programs with all test cases.
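
The last point, retrying until a patch passes every test, can be sketched as a simple loop. Everything here is a toy assumption: candidate "patches" are plain Python functions, test cases are `(input, expected)` pairs, and the names `passes_all` and `first_valid_patch` are hypothetical.

```python
def passes_all(candidate, tests):
    """True only if the candidate produces the expected output for every test case."""
    for arg, expected in tests:
        try:
            if candidate(arg) != expected:
                return False
        except Exception:
            # A crashing candidate counts as a failed test.
            return False
    return True

def first_valid_patch(candidates, tests, max_attempts=3):
    """Try candidates in order; return the 1-based attempt that passed, or None."""
    for attempt, candidate in enumerate(candidates, start=1):
        if attempt > max_attempts:
            break
        if passes_all(candidate, tests):
            return attempt
    return None

# Three attempts at implementing absolute value; only the last one is correct.
candidates = [
    lambda x: x,                     # wrong for negative inputs
    lambda x: -x,                    # wrong for positive inputs
    lambda x: x if x >= 0 else -x,   # correct
]
tests = [(3, 3), (-4, 4), (0, 0)]

print(first_valid_patch(candidates, tests))
```

Capping the number of attempts keeps cost bounded; with `max_attempts=2` the same call returns `None`, meaning no acceptable patch was found.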

How does AutoCodeRover work?

AutoCodeRover is designed to automate software engineering tasks, with a focus on program repair and feature addition. It uses Large Language Models (LLMs) and advanced code search capabilities to autonomously modify programs or generate patches. The framework is engineered to tackle real-world GitHub issues by iteratively navigating the codebase and enhancing the LLM’s understanding of specific issues.

The framework operates in two stages: context retrieval and patch generation. During context retrieval, the LLM analyzes the issue description, extracts keywords, and employs a stratified search process to iteratively retrieve code context. The context is continuously refined, with the LLM agent deciding which code search APIs to use in each iteration. Once enough project context is gathered, the patch generation stage commences. Here, another LLM agent extracts precise code snippets from the retrieved context and crafts a patch.
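
The two stages can be outlined as a small loop followed by a single generation step. This is only a sketch under stated assumptions: the LLM is replaced by a scripted stand-in (`scripted_agent`), the codebase is a toy dictionary, and the API names `search_class` and `search_method_in_class` as written here are simplified illustrations, not the tool's real interfaces.

```python
# Toy "codebase": class name -> {method name -> source}. Purely illustrative.
CODEBASE = {
    "Cart": {"total": "def total(self): return sum(self.items)"},
    "Order": {"submit": "def submit(self): self.sent = True"},
}

def search_class(name):
    methods = CODEBASE.get(name)
    if methods is None:
        return None
    return f"class {name} with methods {sorted(methods)}"

def search_method_in_class(class_name, method_name):
    return CODEBASE.get(class_name, {}).get(method_name)

SEARCH_APIS = {
    "search_class": search_class,
    "search_method_in_class": search_method_in_class,
}

def scripted_agent(issue, context):
    """Stand-in for the LLM: chooses the next API calls from the context so far."""
    if len(context) == 0:
        return [("search_class", ("Cart",))]
    if len(context) == 1:
        return [("search_method_in_class", ("Cart", "total"))]
    return []  # an empty list signals that enough context has been gathered

def retrieve_context(issue, agent, max_rounds=5):
    """Stage 1: let the agent call search APIs until it stops asking."""
    context = []
    for _ in range(max_rounds):
        calls = agent(issue, context)
        if not calls:
            break
        for api_name, args in calls:
            result = SEARCH_APIS[api_name](*args)
            if result is not None:
                context.append(result)
    return context

def generate_patch(issue, context):
    """Stage 2: pick the snippet to edit; a real agent would also write the fix."""
    return {
        "issue": issue,
        "target_snippet": context[-1],
        "replacement": "<written by the LLM>",
    }

issue = "Cart.total() crashes when prices are stored as strings"
context = retrieve_context(issue, scripted_agent)
patch = generate_patch(issue, context)
print(patch["target_snippet"])
```

The `max_rounds` cap is a design choice of this sketch: it guarantees that retrieval terminates even if the agent keeps requesting more context.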

The framework also integrates spectrum-based fault localization (SBFL) analysis to enhance the workflow. SBFL identifies software fault locations by analyzing control-flow differences in passing and failing test executions and assigning suspiciousness scores to different program locations. These SBFL-identified locations augment the search process, improving the accuracy and effectiveness of both context retrieval and patch generation stages.
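
As a rough illustration of how suspiciousness scores are computed, the sketch below uses the Ochiai formula, one widely used SBFL metric: the score of a location is the number of failing tests that execute it, divided by the square root of (total failing tests × all tests that execute it). The choice of Ochiai, the location names, and the test names are assumptions made for this example.

```python
import math
from collections import Counter

def ochiai_scores(coverage, outcomes):
    """coverage: test name -> set of executed locations.
    outcomes: test name -> True if the test passed, False if it failed."""
    total_failed = sum(1 for passed in outcomes.values() if not passed)
    failed_hits, passed_hits = Counter(), Counter()
    for test, locations in coverage.items():
        bucket = passed_hits if outcomes[test] else failed_hits
        bucket.update(set(locations))
    scores = {}
    for loc in set(failed_hits) | set(passed_hits):
        ef, ep = failed_hits[loc], passed_hits[loc]
        denom = math.sqrt(total_failed * (ef + ep))
        scores[loc] = ef / denom if denom else 0.0
    return scores

# Hypothetical coverage data: one failing test and two passing tests.
coverage = {
    "test_add": {"cart.py:add"},
    "test_total_empty": {"cart.py:total"},
    "test_total_discount": {"cart.py:total", "cart.py:apply_discount"},
}
outcomes = {
    "test_add": True,
    "test_total_empty": True,
    "test_total_discount": False,
}

scores = ochiai_scores(coverage, outcomes)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

Here `cart.py:apply_discount` ranks first because only the failing test executes it, while `cart.py:total` is also covered by a passing test and `cart.py:add` is never touched by a failing one.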

The paper also presents a taxonomy of challenges in the SWE-bench lite benchmark, offering insights into the practical hurdles in achieving fully automated software improvement. It sorts the 300 task instances into the categories 'Success,' 'Wrong patch,' 'Wrong location in correct file,' 'Wrong file,' and 'No patch,' and reports how the instances are distributed across them, which shows where the framework succeeds and where it fails.

As depicted in the figure above, the framework combines iterative context retrieval, patch generation, and SBFL analysis. The figure shows the overall workflow and how the individual components and stages interact.

Performance Evaluation with Other Models

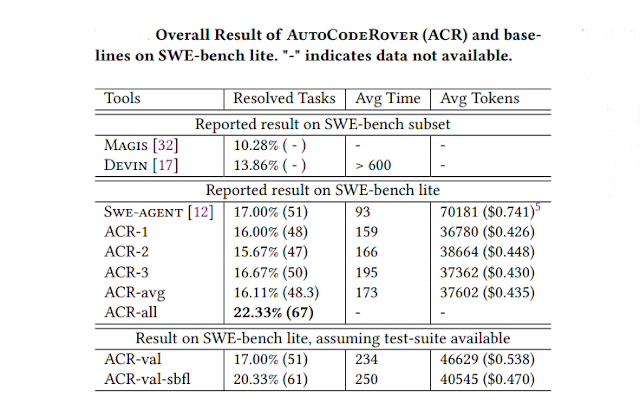

The performance of AutoCodeRover (ACR) is evaluated against two other systems, SWE-agent and MAGIS, using the SWE-bench lite benchmark. This benchmark includes 300 real-life GitHub issues from 11 popular repositories. The evaluation metrics are the percentage of resolved instances, the average time cost, and the average token cost, which measure effectiveness, time efficiency, and monetary cost respectively.

The evaluation process involves repeating the experiments three times to account for the inherent randomness of Large Language Models (LLMs). The results, reported as the average and total number of resolved instances across the repetitions, demonstrate AutoCodeRover’s superior performance in resolving real-life software issues.

The table above provides a detailed comparison of the results. It shows that AutoCodeRover outperforms SWE-agent in resolving task instances, achieving a resolution rate of 16.11% on average per run and 22.33% when counting every task resolved in at least one of the three runs. This highlights AutoCodeRover’s effectiveness and potential impact in the field of autonomous software engineering.
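
The difference between the two reported rates is easy to see in a small calculation. The sketch below uses made-up task IDs and a hypothetical helper `summarize`; only the percentages 16.11% and 22.33% and the benchmark size of 300 come from the text above.

```python
def summarize(runs, total_tasks):
    """runs: list of sets, each holding the task IDs resolved in one repetition."""
    average_resolved = sum(len(run) for run in runs) / len(runs)
    resolved_in_any_run = set().union(*runs)
    return {
        "avg_rate": 100 * average_resolved / total_tasks,
        "all_rate": 100 * len(resolved_in_any_run) / total_tasks,
    }

# Toy data: three repetitions over a 10-task benchmark.
runs = [{"t1", "t2"}, {"t2", "t3"}, {"t1", "t4"}]
summary = summarize(runs, total_tasks=10)
print(summary)

# Converting the reported percentages back into task counts out of 300.
avg_tasks = 16.11 / 100 * 300   # about 48.33 tasks resolved per run on average
all_tasks = 22.33 / 100 * 300   # about 67 distinct tasks resolved in at least one run
print(round(avg_tasks, 2), round(all_tasks))
```

Because different runs can resolve different tasks, the union rate is always at least as high as the per-run average, which is why the two figures differ.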

Potential threats to the validity of the approach and experiments, such as the randomness of LLMs, the operating system environment, and the correctness of generated patches, are carefully considered and mitigated to ensure the reliability and robustness of the evaluation results.

Overall, the evaluation offers insights into AutoCodeRover’s effectiveness, time efficiency, and cost in resolving real-world GitHub issues.

Evolving Landscape of AI in Software Issue Resolution

The evolution of issue resolution in software engineering is being significantly influenced by advancements in AI technology. Three models, AutoCodeRover, MAGIS, and SWE-agent, each contribute uniquely to this progression.

AutoCodeRover is revolutionizing software development and maintenance. It integrates Large Language Models with advanced code search capabilities, autonomously navigating through code complexities to pinpoint and resolve issues. By delving deep into the program’s structure and utilizing classes and methods, it streamlines the search for context and solutions.

On the other hand, MAGIS embodies the collaborative spirit of GitHub’s issue resolution journey. It emphasizes the synergy between diverse roles such as Managers, Repository Custodians, Developers, and QA Engineers. This model underscores the importance of teamwork in AI, demonstrating adaptability and flexibility in forming teams to address a variety of issues.

SWE-agent, while harnessing the power of LLMs like GPT-4, focuses on providing a user-friendly interface for interacting with repositories. It simplifies the process of bug fixing and issue resolution.

As GitHub project issue resolution advances, AutoCodeRover is at the forefront, driving progress with its innovative capabilities. Its methodical approach to understanding and solving issues represents a significant leap forward in automated program improvement. Meanwhile, MAGIS exemplifies the power of teamwork in AI-driven environments. Together, these models are shaping a future where AI not only complements but enhances human expertise in software development.

How to Access and Use this Model?

AutoCodeRover is an open-source model. It is hosted on GitHub and is available for commercial use under the GPL-3.0 license. To utilize this model, one can follow the setup instructions detailed in its GitHub repository.

Limitations and Future Work

- The performance of AutoCodeRover depends on the quality and extent of the tests available for the code it’s fixing.

- It relies on Large Language Models (LLMs) to create patches, which could lead to inconsistent outcomes.

- The ability of AutoCodeRover to solve GitHub issues successfully might differ based on the issue’s complexity.

- Solely depending on LLMs for making decisions might not be enough, suggesting the need for a system that allows human developers to step in when necessary.

- The unpredictability of LLMs in giving varied results each time could affect how reliable AutoCodeRover’s performance is.

Future work could focus on enhancing its capabilities and addressing these limitations, such as integrating human involvement in the decision-making processes and designing LLM agents for generating bug reproduction tests.

Conclusion

AutoCodeRover represents a significant advancement in autonomous software improvement. By streamlining developer tasks and enhancing issue resolution on GitHub, it demonstrates remarkable capabilities. Despite its limitations, its potential impact on the future of software engineering and AI is promising.

Source

Research paper: https://arxiv.org/abs/2404.05427

Research paper (PDF): https://arxiv.org/pdf/2404.05427.pdf

GitHub Repo: https://github.com/nus-apr/auto-code-rover

SWE-Bench lite: https://www.swebench.com/lite.html
