Introduction

Human motion is a fascinating and complex phenomenon that can convey rich information and emotions. However, modeling and generating realistic human motion from different modalities, such as text, images, or videos, is still a challenging and largely unexplored task. How can we build a unified and versatile model that can understand and produce human motion as well as language?

In this article, we look at MotionGPT, a novel motion-language model that treats human motion as a foreign language and leverages the power of large language models (LLMs) to handle multiple motion-related tasks. It was developed by researchers from Fudan University, Tencent PCG, and ShanghaiTech University, who aim to bridge the gap between motion and language and to enable diverse applications such as gaming, robotics, virtual assistants, and human behavior analysis.

What is MotionGPT?

MotionGPT is a unified, versatile, and user-friendly motion-language model that can learn the semantic coupling of motion and language and generate high-quality motions and text descriptions on multiple motion tasks. MotionGPT is based on the idea of treating human motion as a specific foreign language that can be modeled by LLMs such as GPT-3/T5. Moreover, inspired by prompt learning, MotionGPT can be pre-trained with a mixture of motion-language data and fine-tuned on prompt-based question-and-answer tasks.

Key Features of MotionGPT

MotionGPT has several key features that make it unique and powerful:

- It can use multimodal control signals, such as text and single-frame poses, to generate consecutive human motions by treating them as special input tokens in LLMs.

- It can support various motion tasks, such as text-driven motion generation, motion captioning, motion prediction, and motion in-between, by using different prompts and instructions.

- It achieves state-of-the-art performance on multiple motion benchmarks and datasets, such as AMASS and Human3.6M.

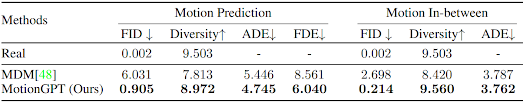

As an example, one of the tasks MotionGPT can perform is motion completion, which means generating missing or intermediate motion frames from partial or sparse motion data. To evaluate MotionGPT's motion completion capability, the researchers used part of the AMASS dataset. They used Fréchet Inception Distance (FID) to measure motion quality and Diversity (DIV) to measure motion diversity. They also used Average Displacement Error (ADE) and Final Displacement Error (FDE) to measure the distance between the joints of the generated motions and those of the ground-truth motions.

source - https://arxiv.org/pdf/2306.14795.pdf

The table above shows the results of the comparison. MotionGPT achieves the best motion quality and diversity, indicating that it can generate high-quality and diverse motions for both motion prediction and motion in-between tasks.
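The two displacement metrics used in this evaluation are simple enough to sketch in a few lines. This is a generic illustration, not the paper's evaluation code: it assumes each motion is stored as nested lists of frames × joints × (x, y, z) coordinates, that ADE averages the per-joint Euclidean error over all frames, and that FDE uses only the final frame. The function names `ade`, `fde`, and `frame_error` are our own; FID and DIV are not covered here.

```python
import math
from typing import Sequence

# A motion is a sequence of frames; each frame is a sequence of (x, y, z) joint positions.
Joint = Sequence[float]
Frame = Sequence[Joint]
Motion = Sequence[Frame]


def joint_distance(p: Joint, q: Joint) -> float:
    """Euclidean distance between two 3D joint positions."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def frame_error(pred: Frame, gt: Frame) -> float:
    """Mean joint distance within a single frame."""
    return sum(joint_distance(p, q) for p, q in zip(pred, gt)) / len(gt)


def ade(pred: Motion, gt: Motion) -> float:
    """Average Displacement Error: mean joint distance over every frame."""
    if len(pred) != len(gt):
        raise ValueError("predicted and ground-truth motions must have the same length")
    return sum(frame_error(p, g) for p, g in zip(pred, gt)) / len(gt)


def fde(pred: Motion, gt: Motion) -> float:
    """Final Displacement Error: mean joint distance on the last frame only."""
    return frame_error(pred[-1], gt[-1])


# Two frames with one joint each; only the final frame is off, by a 3-4-5 offset.
gt = [[(0.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)]]
pred = [[(0.0, 0.0, 0.0)], [(1.0, 3.0, 4.0)]]
print(ade(pred, gt), fde(pred, gt))  # → 2.5 5.0
```

ADE rewards getting the whole trajectory right, while FDE only looks at where the motion ends up, which is why evaluations often report both.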

Capabilities/Use Cases of MotionGPT

MotionGPT has many potential applications and use cases in various fields that require realistic and expressive human motion generation and understanding. For example:

- In gaming, MotionGPT can be used to create diverse and immersive animations for characters based on natural language commands or scenarios.

- In robotics, MotionGPT can be used to control humanoid robots or agents to perform complex actions or interact with humans based on verbal or visual cues.

- In virtual assistants, MotionGPT can be used to generate natural and engaging gestures or expressions for avatars or chatbots based on the context or emotion of the conversation.

- In human behavior analysis, MotionGPT can be used to infer the intention or sentiment of humans based on their body language or movement patterns.

How does MotionGPT work?

MotionGPT is based on the idea of treating human motion as a specific foreign language that can be modeled by large language models (LLMs) such as T5. To achieve this, MotionGPT uses three key components: the motion tokenizer, the motion vocabulary, and the motion-aware language model.

The motion tokenizer is responsible for converting 3D human motion into discrete tokens that can be processed by the LLM. The motion tokenizer consists of an encoder network and a codebook. The encoder network maps a sequence of 3D joint positions to a sequence of latent vectors. The codebook contains K code vectors that represent different motion patterns. The motion tokenizer then assigns each latent vector to the closest code vector in terms of Euclidean distance and outputs the corresponding index as the token.
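The nearest-code lookup that turns latent vectors into tokens can be sketched as follows. This is a minimal illustration, assuming the encoder has already produced the latent vectors; the codebook values below are made up, whereas a trained tokenizer learns its codebook. The helper names `quantize` and `dequantize` are hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance; it has the same argmin as the plain distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(latents: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Replace each latent vector with the index of its nearest code vector."""
    tokens = []
    for z in latents:
        nearest = min(range(len(codebook)), key=lambda k: squared_distance(z, codebook[k]))
        tokens.append(nearest)
    return tokens


def dequantize(tokens: Sequence[int], codebook: Sequence[Vector]) -> List[List[float]]:
    """Look up the code vector behind each token index."""
    return [list(codebook[k]) for k in tokens]


# Toy codebook with K = 3 two-dimensional code vectors (values are illustrative).
codebook = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
# Latent vectors as an encoder might emit them, one per time step.
latents = [(0.2, 0.1), (4.0, 4.5), (0.9, 1.2)]

tokens = quantize(latents, codebook)
print(tokens)  # → [0, 2, 1]
print(dequantize(tokens, codebook))
```

Because the square root is monotonic, comparing squared distances selects the same nearest code as comparing Euclidean distances while skipping the square root.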

The motion vocabulary is the set of all possible tokens that can be generated by the motion tokenizer. The motion vocabulary defines the granularity and diversity of the motion representation. The size of the motion vocabulary (K) can be adjusted according to different domains or tasks. For example, a larger K can capture more fine-grained details of the motion, while a smaller K can reduce the complexity and redundancy of the motion.
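How K shapes the vocabulary can be sketched as follows, assuming motion tokens share one vocabulary with text tokens (as the mixed text-and-motion input of the language model implies): one new token is added per codebook entry, so the motion part of the vocabulary grows linearly with K. The token spellings `<motion_start>`, `<motion_end>`, and `<motion_i>` and both function names are hypothetical, not taken from MotionGPT's code.

```python
from typing import Dict, List

# Hypothetical special tokens marking where a motion segment starts and ends.
MOTION_START = "<motion_start>"
MOTION_END = "<motion_end>"


def build_vocabulary(text_tokens: List[str], k: int) -> Dict[str, int]:
    """Extend a text vocabulary with 2 marker tokens plus one token per codebook entry."""
    vocab: Dict[str, int] = {}
    for tok in text_tokens + [MOTION_START, MOTION_END] + [f"<motion_{i}>" for i in range(k)]:
        # setdefault skips duplicates, so every token gets a unique, consecutive id.
        vocab.setdefault(tok, len(vocab))
    return vocab


def motion_codes_to_tokens(codes: List[int], k: int) -> List[str]:
    """Turn codebook indices from the tokenizer into a delimited run of motion tokens."""
    for c in codes:
        if not 0 <= c < k:
            raise ValueError(f"code {c} is outside a codebook of size {k}")
    return [MOTION_START] + [f"<motion_{c}>" for c in codes] + [MOTION_END]


text_vocab = ["a", "person", "walks", "forward"]
for k in (64, 512):
    # The vocabulary grows by k + 2 entries: finer motion detail costs more output classes.
    print(k, len(build_vocabulary(text_vocab, k)))
print(motion_codes_to_tokens([7, 7, 3], k=64))
```

A larger K gives the language model more motion "words" to choose from, which is the granularity-versus-complexity trade-off described above.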

The motion-aware language model is responsible for modeling the joint distribution of motion tokens and text tokens using a pre-trained LLM such as T5. The motion-aware language model takes a sequence of mixed tokens as input and outputs a probability distribution over the vocabulary for each token position. The motion-aware language model can be fine-tuned on specific motion-language datasets to adapt to different domains or tasks. Moreover, inspired by prompt learning, the motion-aware language model can perform various motion tasks by using different prompts and instructions.
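Switching tasks through prompts, and reading motion back out of a mixed output sequence, might look like the sketch below. The instruction wording in `TEMPLATES`, the `<motion_i>` token spelling, and the helper names are all hypothetical; the language model call itself (for example a fine-tuned T5) is omitted, and a full system would presumably pass the extracted codes to a decoder to recover 3D motion.

```python
import re
from typing import Dict, List

# Hypothetical instruction templates, one per task; MotionGPT's actual wording may differ.
TEMPLATES: Dict[str, str] = {
    "text_to_motion": "Generate a motion that matches this description: {caption}",
    "motion_captioning": "Describe the following motion in words: {motion}",
    "motion_prediction": "Predict how this motion continues: {motion}",
    "motion_inbetween": "Fill in the missing middle part of this motion: {motion}",
}

# Matches numbered motion tokens such as "<motion_12>" (but not start/end markers).
MOTION_TOKEN = re.compile(r"<motion_(\d+)>")


def build_prompt(task: str, **fields: str) -> str:
    """Select a task's template and fill in its placeholders."""
    if task not in TEMPLATES:
        raise KeyError(f"unknown task: {task}")
    return TEMPLATES[task].format(**fields)


def extract_motion_codes(generated: str) -> List[int]:
    """Recover codebook indices from model output that mixes text and motion tokens."""
    return [int(code) for code in MOTION_TOKEN.findall(generated)]


print(build_prompt("text_to_motion", caption="a person waves with the right hand"))
print(build_prompt("motion_prediction", motion="<motion_start><motion_5><motion_9><motion_end>"))
# Pretend output of the language model for a text-to-motion prompt.
print(extract_motion_codes("<motion_start><motion_12><motion_12><motion_3><motion_end>"))  # → [12, 12, 3]
```

The same model and vocabulary serve every task; only the instruction text and the position of motion tokens (input or output) change.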

These three components work together to enable MotionGPT to handle various motion-related tasks in a unified and versatile way.

How to Access and Use MotionGPT?

MotionGPT is not publicly available yet, but it will be released soon on its project page and GitHub repository. Users will be able to try out MotionGPT online through an interactive demo that allows them to input different prompts and control signals and see the generated motions and captions in real time. Users will also be able to download MotionGPT from its GitHub repository, where they will find the source code, pre-trained models, datasets, and instructions for running MotionGPT locally or on a server.

MotionGPT is open-source and licensed under the MIT License, which means that users can freely use, modify, and distribute it for both academic and commercial purposes, provided that the copyright and license notices are preserved.

If you are interested in learning more about the MotionGPT model, all relevant links are provided in the 'source' section at the end of this article.

Limitations

MotionGPT is a novel and promising model that opens up new possibilities for human motion modeling and generation. However, it also has some limitations that need to be addressed in future work. For example:

- MotionGPT relies on a VQ-VAE-based motion tokenizer to discretize human motion into tokens, which may introduce quantization errors or lose some fine-grained details of the original motion.

- MotionGPT uses a fixed codebook size (K) for all motions, which may not be optimal for different domains or tasks that require different levels of granularity or diversity.

- MotionGPT uses pre-trained LLMs such as T5 as its backbone, so it may inherit biases or limitations from these models and may require large amounts of data or computational resources to train or fine-tune.
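The quantization error behind the first two limitations can be made concrete with a toy example: each latent vector is replaced by its nearest code vector, and the mean squared gap measures what is lost. With nested codebooks (each containing all codes of the previous one), adding codes can only lower or keep the error, which is one reason the choice of K matters. The 1-D values and the function name `quantization_error` are made up for illustration.

```python
from typing import Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantization_error(latents: Sequence[Vector], codebook: Sequence[Vector]) -> float:
    """Mean squared distance between each latent vector and its nearest code vector."""
    return sum(min(squared_distance(z, c) for c in codebook) for z in latents) / len(latents)


# Toy 1-D latents standing in for encoder outputs (made-up values).
latents = [(0.0,), (0.4,), (1.0,), (2.6,)]
# Nested codebooks: each one contains all codes of the previous one, so K grows 1 -> 2 -> 3.
codebooks = [[(0.0,)], [(0.0,), (1.0,)], [(0.0,), (1.0,), (2.5,)]]

for cb in codebooks:
    print(f"K={len(cb)}  error={quantization_error(latents, cb):.4f}")
```

Even the largest codebook leaves some error for the latents at 0.4 and 2.6, which is the kind of fine-grained detail loss the limitation describes.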

Conclusion

MotionGPT is an innovative model that opens up new possibilities for human motion modeling and generation. It also contributes to the broader progress of AI by bridging the gap between motion and language, two fundamental modalities of human communication and expression. By enabling diverse applications in fields that require realistic and expressive human motion generation and understanding, MotionGPT can enhance interaction and collaboration between humans and machines, as well as our understanding and appreciation of human behavior and culture.

source

research paper - https://arxiv.org/abs/2306.14795

research document - https://arxiv.org/pdf/2306.14795.pdf

GitHub Repo - https://github.com/OpenMotionLab/MotionGPT

Project details - https://motion-gpt.github.io/
