Introduction

Text-rich images, such as memes, comics, advertisements, and infographics, are ubiquitous on the internet and social media. They often convey complex messages that require both visual and textual understanding. However, most existing models for text-rich image understanding are limited by either pre-defined tasks or fixed input formats. How can we build a more flexible and general model that can handle various text-rich image understanding tasks with different input modalities?

A new model has been developed by researchers from Georgia Tech, Adobe Research, and Stanford University, who are experts in natural language processing, computer vision, and multimodal learning. Their motivation was to create a more general and flexible model for text-rich image understanding that can handle diverse tasks and inputs. This new AI model is called 'LLaVAR'.

What is LLaVAR?

LLaVAR was introduced in the paper "LLaVAR: Enhanced Visual Instruction Tuning for Text-Rich Image Understanding". It is a model that can perform various text-rich image understanding tasks by taking different types of inputs, such as images, texts, or both. LLaVAR is based on the idea of visual instruction tuning (VIT), a method of fine-tuning a pretrained language model (PLM) with visual instructions to adapt it to different tasks. LLaVAR enhances VIT by introducing language-level adaptive attention (LLAA), a mechanism that dynamically adjusts the attention weights between the visual and textual inputs based on the language-level information in the visual instructions. This allows LLaVAR to better capture task-specific and input-specific information, which improves performance.

Key Features of LLaVAR

Some of the key features of LLaVAR are:

- It can handle various text-rich image understanding tasks, such as meme generation, comic generation, text extraction, sentiment analysis, text insertion, text deletion, text replacement, and text style transfer.

- It can take different types of inputs, such as images only, texts only, or both images and texts. It can also handle multiple images or texts as inputs.

- It uses visual instructions to tell the model which task to perform and which input format to expect. The visual instructions are natural language texts that are easy to write and understand.

- It leverages LLAA to dynamically adjust the attention weights between the visual and textual inputs based on the language-level information from the visual instructions. This allows LLaVAR to better capture the task-specific and input-specific information and improve the performance.

- It achieves state-of-the-art results on several text-rich image understanding benchmarks, such as MemeQA, ComicQA, TextCaps, ST-VQA, and OCR-VQA.

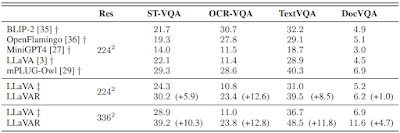

As an example, text-based VQA is a task where the model has to answer questions about images that contain text, such as signs, documents, or captions. The researchers tested LLaVAR on four text-based VQA datasets from different domains: ST-VQA, OCR-VQA, TextVQA, and DocVQA. They compared LLaVAR with several baseline models and with the previous model, LLaVA. Note that one of the baseline models, InstructBLIP, used OCR-VQA as part of its training data, so comparing it with the researchers' models on that dataset is not fair. They used two different resolutions for the image inputs: 224x224 and 336x336.

source - https://arxiv.org/pdf/2306.17107.pdf

The results show that LLaVAR improves substantially over LLaVA on all four datasets, which suggests that the collected data helps the model learn better. LLaVAR also does better with higher-resolution images, which suggests that the collected data helps even more when the images are larger or clearer. LLaVAR at 336x336 resolution beats all the other models on three out of four datasets.

However, other factors can also affect performance, such as the language decoder, the resolution, and the amount of text-image training data. So, the researchers can only claim that this model is very good (not the best) for the tasks and datasets that they evaluated.
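To make the comparison above more concrete, here is a minimal sketch of how a text-based VQA answer could be scored. It assumes a response counts as correct when any reference answer appears in it after lowercasing and whitespace normalization. That scoring rule, along with the function names `contains_answer` and `accuracy`, is an assumption for illustration and may differ from the protocol used in the paper.

```python
from typing import Iterable, List


def _normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace into single spaces."""
    return " ".join(text.lower().split())


def contains_answer(response: str, references: Iterable[str]) -> bool:
    """Return True if any non-empty reference answer occurs in the response."""
    normalized_response = _normalize(response)
    for reference in references:
        normalized_reference = _normalize(reference)
        if normalized_reference and normalized_reference in normalized_response:
            return True
    return False


def accuracy(responses: List[str], references_per_question: List[List[str]]) -> float:
    """Fraction of responses that contain at least one reference answer."""
    if not responses:
        return 0.0
    hits = sum(
        contains_answer(response, references)
        for response, references in zip(responses, references_per_question)
    )
    return hits / len(responses)


# Hypothetical model outputs and reference answers, for illustration only.
sample_responses = ["The sign says STOP.", "I am not sure."]
sample_references = [["stop"], ["exit"]]
print(accuracy(sample_responses, sample_references))
```

A substring check like this is lenient toward long, free-form answers, which is one reason scores from different evaluation setups are hard to compare directly.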

Capabilities/Use Cases of LLaVAR

LLaVAR has many potential capabilities and use cases for text-rich image understanding. For example:

- It can generate memes or comics from images or texts or both. This can be useful for creating humorous or informative content for social media or entertainment purposes.

- It can extract texts from images or insert texts into images. This can be useful for extracting information from scanned documents or adding captions or annotations to images.

- It can analyze the sentiment or emotion of texts or images or both. This can be useful for understanding the opinions or feelings of users or customers from their feedback or reviews.

- It can delete or replace texts in images. This can be useful for removing unwanted or sensitive information from images or modifying them for different purposes.

- It can transfer the style of texts in images. This can be useful for changing the tone or mood of texts in images or making them more appealing or persuasive.

How does LLaVAR work?

LLaVAR is based on LLaVA, which is a model that uses visual instruction tuning to adapt a pretrained language model to different text-rich image understanding tasks. LLaVAR enhances LLaVA by introducing language-level adaptive attention, which dynamically adjusts the attention weights between the visual and textual inputs based on the language-level information from the visual instructions.

LLaVAR consists of two main components: a visual encoder and a language decoder. The visual encoder processes the image input and extracts visual features. The language decoder generates or predicts the text output based on the visual features and the text input.

The visual encoder is based on CLIP-ViT-L/14, which is a vision transformer that is pretrained on large-scale image-text pairs. Depending on the resolution of the image input, LLaVAR uses either 224x224 or 336x336 as the input size. The visual encoder outputs a sequence of grid features that represent the local regions of the image. These grid features are then projected into the word embedding space of the language decoder by a trainable matrix.
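The grid features and the projection step can be sketched in a few lines. A ViT with 14x14-pixel patches turns a 224x224 image into a 16x16 grid of patches and a 336x336 image into a 24x24 grid. The feature sizes below are toy values and the helper names (`num_grid_features`, `project`) are hypothetical; this is an illustration of the idea, not the model's actual code.

```python
from typing import List

PATCH_SIZE = 14  # the "/14" in CLIP-ViT-L/14: each patch covers 14x14 pixels


def num_grid_features(resolution: int, patch_size: int = PATCH_SIZE) -> int:
    """Number of patch (grid) features for a square image of the given size."""
    patches_per_side = resolution // patch_size
    return patches_per_side * patches_per_side


def project(features: List[List[float]], weight: List[List[float]]) -> List[List[float]]:
    """Map each visual feature (length d_vis) into the word embedding space.

    `weight` is a d_vis x d_txt matrix; in the real model it is trainable.
    """
    d_txt = len(weight[0])
    return [
        [sum(feature[i] * weight[i][j] for i in range(len(feature))) for j in range(d_txt)]
        for feature in features
    ]


for resolution in (224, 336):
    print(resolution, "->", num_grid_features(resolution), "grid features")

# Toy example: two grid features of size 2, projected to size 3.
toy_features = [[1.0, 2.0], [0.5, 0.0]]
toy_weight = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
print(project(toy_features, toy_weight))
```

The higher resolution more than doubles the number of grid features (256 versus 576), which gives the language decoder a finer-grained view of small text in the image.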

The language decoder is based on Vicuna-13B, a large language model that LLaVAR fine-tunes with visual instructions. The language decoder takes the visual instruction, the text input, and the projected grid features as inputs and encodes them into a single sequence of embeddings. It then uses masked self-attention over that sequence to generate or predict the text output token by token. The language decoder also uses language-level adaptive attention to modify the attention weights between the visual and textual inputs based on the visual instruction.
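Token-by-token generation over a combined visual and textual sequence can be illustrated with a toy loop. In this sketch, strings stand in for embeddings and `make_toy_model` is a hypothetical stand-in for the language decoder that simply replays a canned answer. Placing the image tokens before the instruction is also an assumption made for simplicity.

```python
from typing import Callable, List

EOS = "<eos>"  # end-of-sequence marker used by this toy example


def build_input_sequence(visual_tokens: List[str], instruction_tokens: List[str]) -> List[str]:
    """Concatenate projected image features and instruction tokens into one sequence."""
    return list(visual_tokens) + list(instruction_tokens)


def greedy_decode(
    next_token: Callable[[List[str]], str],
    prefix: List[str],
    max_new_tokens: int = 16,
) -> List[str]:
    """Generate one token at a time, feeding each new token back into the model."""
    sequence = list(prefix)
    output: List[str] = []
    for _ in range(max_new_tokens):
        token = next_token(sequence)
        if token == EOS:
            break
        output.append(token)
        sequence.append(token)
    return output


def make_toy_model(prefix_len: int, answer: List[str]) -> Callable[[List[str]], str]:
    """Hypothetical decoder stand-in: emits `answer` token by token, then EOS."""
    def next_token(sequence: List[str]) -> str:
        step = len(sequence) - prefix_len
        return answer[step] if step < len(answer) else EOS
    return next_token


visual = ["<img_0>", "<img_1>"]
instruction = ["What", "does", "the", "sign", "say", "?"]
prefix = build_input_sequence(visual, instruction)
model = make_toy_model(len(prefix), ["SALE", "50%"])
print(greedy_decode(model, prefix))
```

In a real decoder, `next_token` would run the full sequence through the transformer and pick the next token from its predicted distribution; the loop structure stays the same.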

How to Access and Use LLaVAR?

LLaVAR is an open-source model that can be accessed and used in different ways, depending on your needs and preferences. Here are some of the options you have:

You can use the online demo of LLaVAR to try out some of the text-rich image understanding tasks, such as meme generation, comic generation, text extraction, and sentiment analysis. The demo allows you to upload your own images or texts or use the provided samples (as shown in the figure above). The demo will show you the output of LLaVAR for the given task and input.

The GitHub repository for LLaVAR contains all the necessary resources to run the model on a variety of text-rich image understanding tasks. You can download the code, data, and pretrained models, and then run the provided scripts to fine-tune and evaluate the model on your own tasks. You can also modify the scripts or the visual instructions to customize your experiments.

If you are interested in learning more about the LLaVAR model, all relevant links are provided at the end of this article.

Limitations

LLaVAR is an amazing model for text-rich image understanding, but it also has some room for improvement. Here are some of the challenges that LLaVAR faces:

- LLaVAR needs visual instructions to tell it what to do and how to do it. But writing visual instructions can be hard and time-consuming, especially for new or complicated tasks. Also, visual instructions can be vague or confusing, which can mess up the model.

- LLaVAR uses a PLM to learn from a lot of text data. But PLMs may not understand all the visual details or meanings that matter for text-rich image understanding tasks. PLMs are also very large, slow at inference, and computationally expensive to run.

- LLaVAR uses LLAA to change the attention weights between the visual and textual inputs based on the visual instructions. But LLAA may not always find the best attention weights for different tasks or inputs, especially when there are too many or conflicting visual instructions or inputs.

Conclusion

LLaVAR is a breakthrough model that can advance AI research and applications in text-rich image understanding and multimodal learning.

source

research paper - https://arxiv.org/abs/2306.17107

project details - https://llavar.github.io/

GitHub repo - https://github.com/SALT-NLP/LLaVAR

demo link - https://eba470c07c805702b8.gradio.live/
