Introduction

Artificial intelligence (AI) has made remarkable progress in the past decade, especially in the field of natural language processing (NLP). However, most of the existing NLP models are limited to understanding and generating text, without considering other modalities such as images, videos, or speech. Moreover, these models often lack common sense and world knowledge, which are essential for human-like intelligence.

To address these challenges, a team of researchers at Microsoft has developed Kosmos-2, a multimodal large language model (MLLM) that aims to ground language models to the world. It builds on its predecessor, Kosmos-1, which could already perceive images and text. Kosmos-2 adds the ability to link words and phrases to specific regions of an image, so the model can point to the objects it talks about and understand references to particular regions.

What is Kosmos-2?

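Kosmos-2 is a multimodal large language model that takes images and text as input and generates text. What sets it apart is grounding: when it mentions an object, it can also output a bounding box showing where that object is in the image, and it can accept a bounding box as part of its input so that a user can refer to a particular region. It is trained on a large corpus of multimodal data, including a new dataset of grounded image-text pairs called GRIT. Kosmos-2 is named after the Greek word for “world” or “order”, which reflects its goal of grounding language models to the world and moving toward general AI.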

Key Features of Kosmos-2

Kosmos-2 has several key features that make it unique and powerful among existing multimodal models. Some of these features are:

- Large-scale grounded pre-training: In addition to the text, image-caption, and interleaved image-text corpora used for Kosmos-1, Kosmos-2 is trained on GRIT, a web-scale dataset of grounded image-text pairs built from subsets of LAION-2B and COYO-700M. In GRIT, noun phrases and referring expressions in each caption are linked to bounding boxes in the image, so Kosmos-2 learns not only what objects look like but also where they are.

- Unified vision-language framework: Kosmos-2 uses the same architecture as Kosmos-1, a Transformer-based causal language model. A vision encoder turns the image into a sequence of embeddings that the language model reads alongside the text tokens, so vision and language share one model and one sequence.

- Grounded input and output: Kosmos-2 can link phrases in the text it generates to regions of the image (grounding) and can take a region of the image as part of its input (referring). For example, it can describe the object inside a given box or answer a question about it. Bounding boxes are represented as special location tokens in the model's vocabulary, so all of its outputs, boxes included, are text.

- Zero-shot and few-shot transfer: Kosmos-2 is applied to downstream tasks through prompts rather than task-specific modules, and the paper reports results in zero-shot and few-shot settings, without fine-tuning on each benchmark.

Capabilities and Use Cases of Kosmos-2

Kosmos-2 has demonstrated impressive capabilities and use cases across different modalities and domains. Some of the examples are:

- Image captioning: Kosmos-2 can generate natural language descriptions of images. The paper evaluates this zero-shot on Flickr30k.

- Visual question answering: Kosmos-2 can answer natural language questions about an image. The paper evaluates this on VQAv2.

- Phrase grounding: Given an image and a caption, Kosmos-2 can locate the image region that each phrase in the caption refers to.

- Referring expression comprehension: Given a description such as “the man on the left”, Kosmos-2 can output a bounding box for the object being described. The paper evaluates this on RefCOCO, RefCOCO+, and RefCOCOg.

- Referring expression generation: Given a bounding box, Kosmos-2 can describe the object inside it.

- Grounded captioning and question answering: Kosmos-2 can produce descriptions and answers in which the objects it mentions are linked to bounding boxes in the image.

- Language tasks: Kosmos-2 keeps the general language understanding and generation abilities of a large language model, and the paper also evaluates it on language-only tasks.

How does Kosmos-2 work?

Kosmos-2 follows the design of Kosmos-1. A vision encoder converts the image into a sequence of embeddings, and a Transformer-based causal language model reads these embeddings together with the text and predicts one token at a time. What is new is how locations are represented. The image is divided into a 32 × 32 grid of bins, and each bin is assigned its own location token, giving 1,024 location tokens in total. A bounding box is written as two of these tokens: the bin containing its top-left corner and the bin containing its bottom-right corner. In the training text, a grounded phrase is wrapped in <p> and </p> and followed by its box between <box> and </box>, much as a Markdown hyperlink attaches a URL to a piece of text. Because boxes are ordinary tokens, the model learns grounding with the same next-token prediction objective it uses for text, trained on GRIT together with the Kosmos-1 data.
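Kosmos-2 writes each bounding box as location tokens: the image is split into a 32 × 32 grid of bins, and a box becomes the tokens for the bins holding its top-left and bottom-right corners, placed after the phrase it belongs to. The minimal Python sketch below shows how such a grounded training string could be built from a caption, character spans, and normalized boxes. It assumes row-by-row bin numbering, the token spellings `<loc_i>`, `<p>`, `<box>`, and a `<grounding>` prefix. The function names and the exact corner-rounding rule are illustrative and not taken from the official Kosmos-2 code.

```python
import math
from typing import List, Tuple

# Assumption: a 32 x 32 grid of location bins, i.e. 1,024 location tokens.
NUM_BINS = 32

# A box as normalized (x1, y1, x2, y2) coordinates in [0, 1].
Box = Tuple[float, float, float, float]


def _clamp(value: int, num_bins: int) -> int:
    # Keep a bin coordinate inside the grid.
    return max(0, min(num_bins - 1, value))


def box_to_location_indices(box: Box, num_bins: int = NUM_BINS) -> Tuple[int, int]:
    """Return the indices of the bins holding the box's top-left and bottom-right corners.

    Assumption: bins are numbered row by row, so the bin in row r, column c
    has index r * num_bins + c.
    """
    x1, y1, x2, y2 = box
    col_tl = _clamp(math.floor(x1 * num_bins), num_bins)
    row_tl = _clamp(math.floor(y1 * num_bins), num_bins)
    # ceil(v * P - 1) puts a corner lying exactly on a bin edge into the bin
    # before that edge, so the box does not spill into a bin it does not cover.
    col_br = _clamp(math.ceil(x2 * num_bins - 1), num_bins)
    row_br = _clamp(math.ceil(y2 * num_bins - 1), num_bins)
    return row_tl * num_bins + col_tl, row_br * num_bins + col_br


def location_token(index: int) -> str:
    # Hypothetical spelling of a location token.
    return f"<loc_{index}>"


def ground_caption(caption: str, spans: List[Tuple[int, int, Box]]) -> str:
    """Wrap each (start, end) character span in <p>...</p> and append its box as location tokens."""
    pieces: List[str] = []
    cursor = 0
    for start, end, box in sorted(spans, key=lambda span: span[0]):
        tl, br = box_to_location_indices(box)
        pieces.append(caption[cursor:start])
        pieces.append(
            f"<p>{caption[start:end]}</p><box>{location_token(tl)}{location_token(br)}</box>"
        )
        cursor = end
    pieces.append(caption[cursor:])
    # Assumption: a <grounding> prefix tells the model to produce grounded text.
    return "<grounding>" + "".join(pieces)


caption = "A man in an orange safety vest"
spans = [(0, 5, (0.1, 0.2, 0.5, 0.9)), (9, 30, (0.2, 0.4, 0.45, 0.7))]
print(ground_caption(caption, spans))
# <grounding><p>A man</p><box><loc_195><loc_911></box> in <p>an orange safety vest</p><box><loc_390><loc_718></box>
```

Treating boxes as extra vocabulary is what lets the model learn grounding without a separate detection head: the two corner tokens are predicted like any other word.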

Performance Evaluation Against Other Models

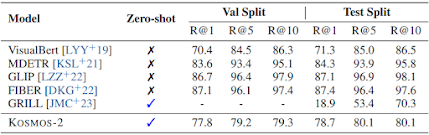

The researchers compare Kosmos-2 with other models on four groups of tasks: multimodal grounding, multimodal referring, perception-language tasks, and language tasks. Together, these tasks measure how well a model understands images and text, links phrases to image regions, and generates language. They report that Kosmos-2 achieves impressive zero-shot performance, in several cases outperforming previous models that rely on additional components or fine-tuning.

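One of these tasks is phrase grounding: given an image and a caption, the model must find the image region that a phrase in the caption refers to. For example, given the caption “A man in a blue hard hat and orange safety vest stands in an intersection.” and the phrase “orange safety vest”, the model needs to draw a bounding box around the vest in the image. The researchers test Kosmos-2 on the val and test splits of Flickr30k Entities [PWC+15], which contains 31,783 images and 244,035 phrases. The figure above shows how Kosmos-2 and other models perform on phrase grounding, measured by R@1: the share of phrases for which the model's top box overlaps the ground-truth box with an intersection over union (IoU) of at least 0.5. In the zero-shot setting, without any training on this dataset, Kosmos-2 performs very well and outperforms GRILL [JMC+23], which relies on an object detector, by a large margin. Kosmos-2 also outperforms the VisualBert [LYY+19] model by 7.4% R@1 on both the val and test splits.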
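The R@1 metric used for phrase grounding can be made concrete with a short sketch. Assuming the model writes its answer as `<box><loc_i><loc_j></box>` on a 32 × 32 grid (the token spelling and the choice to decode each corner bin to its outer edges are assumptions, not the official decoding), the code below turns the first predicted box back into coordinates and applies the usual rule: a phrase counts as correct when the predicted box overlaps the ground-truth box with an IoU of at least 0.5. For simplicity it assumes one ground-truth box per phrase; evaluation protocols differ in how they handle phrases that have several boxes.

```python
import re
from typing import List, Optional, Tuple

NUM_BINS = 32  # assumption: 32 x 32 grid of location bins
Box = Tuple[float, float, float, float]  # normalized (x1, y1, x2, y2)

# Matches a box written as <box><loc_i><loc_j></box> (hypothetical token spelling).
BOX_PATTERN = re.compile(r"<box><loc_(\d+)><loc_(\d+)></box>")


def parse_first_box(generated: str, num_bins: int = NUM_BINS) -> Optional[Box]:
    """Decode the first box in the generated text, or return None if there is none."""
    match = BOX_PATTERN.search(generated)
    if match is None:
        return None
    tl, br = int(match.group(1)), int(match.group(2))
    # Assumption: bins are numbered row by row.
    row_tl, col_tl = divmod(tl, num_bins)
    row_br, col_br = divmod(br, num_bins)
    # Use the outer edges of the two corner bins.
    return (col_tl / num_bins, row_tl / num_bins,
            (col_br + 1) / num_bins, (row_br + 1) / num_bins)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def recall_at_1(predictions: List[Optional[Box]], targets: List[Box],
                threshold: float = 0.5) -> float:
    """Fraction of phrases whose top predicted box has IoU >= threshold with the ground truth."""
    if not targets:
        return 0.0
    hits = sum(1 for pred, gt in zip(predictions, targets)
               if pred is not None and iou(pred, gt) >= threshold)
    return hits / len(targets)


pred = parse_first_box("<p>orange safety vest</p><box><loc_390><loc_718></box>")
gt = (0.2, 0.4, 0.45, 0.7)
print(pred)                                 # (0.1875, 0.375, 0.46875, 0.71875)
print(round(iou(pred, gt), 3))              # 0.776
print(recall_at_1([pred, None], [gt, gt]))  # 0.5
```

A phrase for which the model emits no box counts as a miss, which is why the second phrase in the usage example lowers R@1 to 0.5.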

For results on the other tasks, such as referring expression comprehension and generation, image captioning, visual question answering, and language tasks, please refer to the research paper.

How to Access and Use Kosmos-2?


The researchers have released an online demo where you can try Kosmos-2: you upload an image, and the model generates a description in which the objects it mentions are marked with bounding boxes. The demo link is listed in the source section at the end of this article.

The code for Kosmos-2 is available in the kosmos-2 folder of Microsoft's unilm repository on GitHub, along with more information about the model. You can use it as a starting point to run the model or to build on it for your own tasks.

Kosmos-2 is intended for research purposes and is not meant for commercial use. Its licensing terms are not yet clear and may be subject to Microsoft’s terms and conditions.

If you are interested in learning more about the Kosmos-2 model, all relevant links are provided in the 'source' section at the end of this article.

Limitations

Kosmos-2 is an important step toward general AI, but it is not perfect. Several problems remain to be solved, including:

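- Data quality and diversity: Kosmos-2 learns from a large amount of web data, but that data may not be clean or representative enough. It may contain noise, bias, errors, or gaps that hurt the model’s performance and generalization, and the bounding boxes in GRIT were generated automatically rather than annotated by hand.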

- Model complexity and scalability: Kosmos-2 is a large, complex model that needs substantial compute and memory to train and run, which can make it slow or costly to deploy in real applications.

- Model interpretability and explainability: Like other large neural networks, Kosmos-2 is largely a black box, so it is hard to understand or explain what it does and why. It may also make mistakes or produce harmful output that humans do not notice.

- Model ethics and social impact: Because Kosmos-2 can produce convincing text about images, it also raises ethical and social concerns. It may generate harmful, offensive, false, or misleading content that affects people’s health, privacy, security, or trust.

Conclusion

Kosmos-2 is a remarkable achievement in the field of artificial intelligence. By linking language to specific regions of images, it opens up new possibilities for multimodal learning and generation, and it brings us a step closer to the ultimate goal of general AI: machines that can understand and interact with the world as humans do.

source

research paper - https://arxiv.org/abs/2306.14824v2

research document - https://arxiv.org/pdf/2306.14824v2.pdf

Hugging Face Paper - https://huggingface.co/papers/2306.14824

GitHub Repo - https://github.com/microsoft/unilm/tree/master/kosmos-2

Demo link - https://44e505515af066f4.gradio.app/

Project link - https://aka.ms/GeneralAI
