Introduction

Code completion is a task that aims to generate the next token or statement given a partial code input. It is widely used in modern integrated development environments (IDEs) and code editors to assist programmers in writing code. Code completion can help programmers save time, avoid typos, and discover new APIs or libraries.

However, most existing code completion models are based on the standard Transformer, which struggles with long code input. A Transformer uses self-attention to compute the relevance between every pair of tokens in the input sequence. This mechanism has two drawbacks. First, its cost grows quadratically with sequence length, which makes it computationally expensive and memory-intensive for long sequences. Second, it treats every token equally, so in a long sequence many of the attended tokens may be irrelevant or redundant.
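To make the quadratic cost concrete, the sketch below counts how many query-key pairs attention must score. It assumes left-to-right (causal) attention, as used for completion, and compares it with a hypothetical 512-token sliding window; the function names and window size are illustrative assumptions, not values taken from LongCoder.

```python
def full_causal_pairs(n: int) -> int:
    """Query-key pairs scored by causal full self-attention over n tokens."""
    # Token i attends to tokens 0..i, i.e. i + 1 keys.
    return n * (n + 1) // 2


def sliding_window_pairs(n: int, window: int) -> int:
    """Query-key pairs scored when each token sees at most `window` keys
    (itself plus the window - 1 tokens immediately before it)."""
    return sum(min(i + 1, window) for i in range(n))


for n in (512, 4096):
    full = full_causal_pairs(n)
    local = sliding_window_pairs(n, window=512)  # hypothetical window size
    print(f"n={n}: full={full}, window=512 -> {local}")
```

Under these assumptions, 4,096 tokens require 8,390,656 scored pairs with full causal attention but 1,966,336 with the 512-token window, a reduction of roughly 4.3x that keeps growing as the input gets longer.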

To overcome these limitations, researchers from Microsoft Research Asia and the University of California San Diego proposed LongCoder, a long-range pre-trained language model for code completion.

What is LongCoder?

LongCoder is a sparse Transformer model that can handle long code input for code completion tasks and capture both local and global information. It employs a sliding window mechanism for self-attention and introduces two types of globally accessible tokens - bridge tokens and memory tokens - to improve performance and efficiency.

Key Features of LongCoder

LongCoder has several key features that make it a novel and effective model for code completion tasks.

- LongCoder can handle code input of up to 4,096 tokens, much longer than previous models that can only handle up to 512 tokens.

- LongCoder can capture both local and global information in the code using a sliding window mechanism and bridge tokens. It can also memorize important statements using memory tokens.

- LongCoder is pre-trained on a large-scale corpus of Python code from GitHub repositories using the masked language modeling (MLM) objective, so it can draw on the general knowledge and syntax of Python code learned during pre-training.

- LongCoder can be fine-tuned on specific code completion tasks using different datasets. It can adapt to different domains and scenarios of code completion.

- LongCoder achieves superior performance on code completion tasks compared to previous models while maintaining comparable efficiency in terms of computational resources during inference.

Capabilities/Use Cases of LongCoder

LongCoder can be used for various code completion tasks, such as:

- Token-level code completion: given a partial code input, generate the next token that is most likely to follow.

- Statement-level code completion: given a partial code input, generate the next statement that is most likely to follow.

- Code refinement: given a partial or incorrect code input, generate the correct or improved code output.

- Code suggestion: given a partial or incomplete code input, generate multiple possible code outputs that can complete or extend the input.
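As a hypothetical illustration of how the two completion granularities differ, the sketch below turns one snippet into a token-level example (predict the next token) and a statement-level example (predict the next line). The helper names and the regular-expression lexer are assumptions for illustration; real models typically use a learned subword tokenizer instead.

```python
import re

# Rough lexer for illustration only: identifiers/numbers, or any single
# non-space character.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def token_level_example(code: str, cut: int):
    """Split code into (context_tokens, next_token) at token index `cut`."""
    tokens = TOKEN_RE.findall(code)
    return tokens[:cut], tokens[cut]


def statement_level_example(code: str, line_no: int):
    """Split code into (context, next_statement): the lines before
    `line_no` as context and the line at `line_no` as the target."""
    lines = code.splitlines()
    return "\n".join(lines[:line_no]), lines[line_no].strip()


snippet = "import os\npath = os.path.join(root, name)\nprint(path)\n"
ctx, target = token_level_example(snippet, 5)
stmt_ctx, stmt = statement_level_example(snippet, 2)
print(ctx, "->", target)
print(repr(stmt_ctx), "->", stmt)
```

The token-level target here is the single "." after `os`, while the statement-level target is the whole line `print(path)`.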

LongCoder can also be used for other related tasks, such as:

- Code summarization: given a code input, generate a natural language summary that describes its functionality or purpose.

- Code documentation: given a code input, generate natural language documentation that explains its usage or parameters.

- Code generation: given a natural language input, generate a code output that implements its functionality or logic.

How does LongCoder work?

LongCoder is a model for code completion that can handle long code input. It is based on a sparse Transformer architecture with an encoder and a decoder. The encoder takes the input code tokens and produces hidden states. The decoder generates the output code tokens based on the hidden states and the previous outputs.

The encoder and decoder have sparse Transformer blocks with three sub-layers: (1) self-attention with sliding window; (2) cross-attention with bridge tokens and memory tokens; (3) feed-forward network.

The self-attention with sliding window restricts each token to attending only to a fixed-size window of nearby tokens (for left-to-right completion, the tokens just before it) instead of the whole sequence. Because the window size is fixed, the cost and memory of self-attention grow linearly rather than quadratically with input length, while each token still sees its local context.
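A minimal sketch of a causal sliding-window attention mask, in the style popularized by Longformer, shows which tokens each position may attend to. The function name and the window size of 3 are assumptions chosen for illustration.

```python
def sliding_window_mask(n: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query token i may attend to key token j.

    Causal sliding window: token i sees itself and the window - 1 tokens
    immediately before it, never any later token.
    """
    return [[0 <= i - j < window for j in range(n)] for i in range(n)]


mask = sliding_window_mask(6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Each row has at most three allowed positions, so the number of scored pairs grows linearly with the sequence length.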

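The cross-attention with bridge tokens and memory tokens captures global information across the input. Both are special, globally accessible tokens. Bridge tokens are inserted throughout the input sequence to aggregate local information and facilitate global interaction, while memory tokens highlight important statements that may be invoked later and need to be memorized, such as package imports and definitions of classes, functions, or structures.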
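The following sketch shows how globally accessible tokens can extend a sliding-window attention pattern. It rests on simplifying assumptions: a hypothetical `<bridge>` token is appended after every `stride` ordinary tokens, memory positions are passed in directly, every token may attend to earlier global tokens in addition to its local window, and global tokens may attend to all earlier tokens. LongCoder's actual attention pattern may differ in its details.

```python
def insert_bridge_tokens(tokens: list[str], stride: int,
                         bridge: str = "<bridge>") -> list[str]:
    """Hypothetical helper: append a bridge token after every `stride` tokens."""
    out: list[str] = []
    for k, tok in enumerate(tokens, start=1):
        out.append(tok)
        if k % stride == 0:
            out.append(bridge)
    return out


def global_token_mask(n: int, window: int,
                      global_positions: list[int]) -> list[list[bool]]:
    """mask[i][j] is True when token i may attend to token j (j <= i).

    Allowed if j is inside i's causal sliding window, if j is a global
    (bridge or memory) token, or if i itself is a global token.
    """
    g = set(global_positions)
    return [
        [j <= i and (i - j < window or j in g or i in g) for j in range(n)]
        for i in range(n)
    ]


seq = insert_bridge_tokens(list("abcdef"), stride=2)
bridges = [i for i, tok in enumerate(seq) if tok == "<bridge>"]
memory = [0]  # pretend token 0 belongs to an import statement
mask = global_token_mask(len(seq), window=2, global_positions=bridges + memory)
print(seq)
print(mask[7])
```

Token "f" at position 7 can reach the memory token at position 0 and the bridge tokens at positions 2 and 5, even though they lie outside its two-token window. This is how a few global tokens give distant parts of the input a short path to each other.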

The feed-forward network then applies a non-linear transformation to each token's representation independently after the cross-attention, which lets the model learn non-linear features of each token.

The encoder and decoder also have layer normalization, residual connection, and an output layer.
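The feed-forward sub-layer, residual connection, and layer normalization can be sketched in plain Python for a single token vector. This is a generic Transformer sketch, not LongCoder's code. The GELU activation, the post-layer-norm ordering, the absence of learned normalization parameters, and the tiny dimensions are all assumptions.

```python
import math


def layer_norm(x: list[float], eps: float = 1e-5) -> list[float]:
    """Normalize a vector to zero mean and unit variance (no learned gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def linear(x: list[float], weight: list[list[float]], bias: list[float]) -> list[float]:
    """y = W x + b, with the weight matrix given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def gelu(v: float) -> float:
    """Exact GELU activation: v * Phi(v)."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))


def ffn_sublayer(x, w1, b1, w2, b2):
    """Expand, apply GELU, project back, add the residual, then layer-normalize."""
    hidden = [gelu(h) for h in linear(x, w1, b1)]
    out = linear(hidden, w2, b2)
    return layer_norm([a + b for a, b in zip(x, out)])


x = [1.0, 2.0, 3.0]                        # one token's hidden state (dim 3)
w1 = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0],
      [0.0, 0.0, 0.1], [0.1, 0.1, 0.1]]    # 3 -> 4 expansion
b1 = [0.0] * 4
w2 = [[0.1] * 4 for _ in range(3)]         # 4 -> 3 projection
b2 = [0.0] * 3
y = ffn_sublayer(x, w1, b1, w2, b2)
print(y)
```

The same weights are applied at every position, which is why the sub-layer is called position-wise; the residual connection keeps the attention output available to later layers.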

Performance comparison with other models

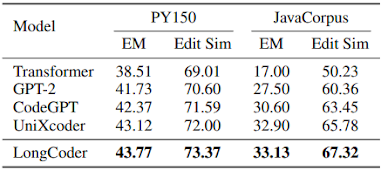

Results on the LCC dataset (shown in the table above) indicate that the sparse models (LongFormer and LongCoder) outperform the non-sparse models on both Exact Match (EM) and Edit Similarity (Edit Sim), with comparable inference speed. LongFormer here is a modified version of UniXcoder that uses a sliding window attention mechanism, which helps the model process longer code input faster and more accurately. This supports the usefulness of sliding window attention for code completion tasks.

LongCoder improves upon LongFormer by adding bridge tokens and memory tokens, which help the model capture more global information and important statements in the code. Compared with the other sparse models in the table above, LongCoder improves the EM score by 0.8%–1.3% and the Edit Sim score by 4.0%–6.0%, which shows the effectiveness of the proposed tokens.
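To clarify what the two metrics measure, here is a small sketch. Exact Match is assumed to compare whitespace-normalized strings, and Edit Sim is assumed to be a normalized character-level Levenshtein similarity scaled to 0–100. The official evaluation scripts may compute these differently (for example with a fuzzy string-matching library), so treat this as an approximation.

```python
def levenshtein(a: str, b: str) -> int:
    """Character-level edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute
        prev = cur
    return prev[-1]


def exact_match(pred: str, ref: str) -> float:
    """100 if prediction and reference match after whitespace normalization."""
    return 100.0 if pred.split() == ref.split() else 0.0


def edit_sim(pred: str, ref: str) -> float:
    """100 * (1 - edit_distance / length of the longer string)."""
    if not pred and not ref:
        return 100.0
    dist = levenshtein(pred, ref)
    return 100.0 * (1 - dist / max(len(pred), len(ref)))


pred, ref = "return a - b", "return a + b"
print(exact_match(pred, ref), round(edit_sim(pred, ref), 2))
```

A single wrong operator makes the prediction fail Exact Match entirely, while Edit Sim still gives it about 91.67. This is why the two metrics can move by different amounts.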

LongCoder also achieves the best performance on the CodeXGLUE code completion benchmarks (as shown in the table above), whose code inputs are shorter. Its advantage over UniXcoder is larger on these benchmarks, which suggests its potential for more complex scenarios.

How to access and use LongCoder?

LongCoder is available on GitHub, where you can find the code and data for pre-training and fine-tuning LongCoder, as well as instructions for running the experiments.

LongCoder is also available on Hugging Face, where you can load it with PyTorch and the Transformers library. You can also use LongCoder for feature extraction or fine-tune it on your own datasets.

LongCoder is open-source and free to use for research purposes. However, if you want to use LongCoder for commercial purposes, you need to obtain a license from Microsoft.

If you are interested in learning more about the LongCoder model, all relevant links are provided in the 'Source' section at the end of this article.

Limitations

LongCoder is a novel and effective model for code completion tasks, but it also has some limitations that need to be addressed in future work.

- LongCoder is currently only pre-trained on Python code, which may limit its generalization to other programming languages. It would be interesting to explore how to pre-train LongCoder on multiple languages or cross-lingual code corpora.

- LongCoder uses a heuristic rule to select memory tokens based on keywords, which may not capture all the important statements in the code. It would be interesting to explore how to use more sophisticated methods to select memory tokens based on semantic or syntactic analysis.

- LongCoder uses a fixed number of bridge tokens and memory tokens, which may not adapt well to different lengths or complexities of code input. It would be interesting to explore how to dynamically adjust the number or position of bridge tokens and memory tokens based on the input context.
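The keyword-based selection mentioned in the limitations can be sketched as follows. The prefix list and function name are hypothetical, chosen to mirror the kinds of statements memory tokens are meant to highlight: imports and class or function definitions. The example also shows the limitation described above, because a module-level constant that later code depends on is not selected.

```python
# Hypothetical keyword rule: statements that later code is likely to reference.
MEMORY_PREFIXES = ("import ", "from ", "def ", "async def ", "class ")


def select_memory_lines(code: str) -> list[int]:
    """Return 0-based indices of lines whose stripped text starts with a memory keyword."""
    return [
        i for i, line in enumerate(code.splitlines())
        if line.lstrip().startswith(MEMORY_PREFIXES)
    ]


src = (
    "import os\n"
    "from typing import List\n"
    "TIMEOUT = 30\n"
    "class Loader:\n"
    "    def load(self, path):\n"
    "        return open(path).read()\n"
    "result = Loader().load('x')\n"
)
print(select_memory_lines(src))
```

Line 2 (`TIMEOUT = 30`) is skipped even though later code might use it. A semantic or syntactic analysis could catch cases like this, which a prefix rule cannot.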

LongCoder is a promising model that can handle long code input and generate accurate and relevant code outputs for code completion tasks. It can help programmers write code faster and with fewer errors, as well as discover new APIs or libraries. However, LongCoder also has limitations to address in future work, such as generalizing to other languages, selecting memory tokens more effectively, and adapting to code inputs of different lengths and complexity.

Source

Research paper - https://arxiv.org/abs/2306.14893

GitHub repo - https://github.com/microsoft/CodeBERT/tree/master/LongCoder

Parent GitHub repo - https://github.com/microsoft/CodeBERT/

Hugging Face model (longcoder-base) - https://huggingface.co/microsoft/longcoder-base

Microsoft Research publication page - https://www.microsoft.com/en-us/research/publication/longcoder-a-long-range-pre-trained-language-model-for-code-completion/
