RecurrentGPT is a new language-model framework developed by a team of researchers: Wangchunshu Zhou, Yuchen Eleanor Jiang, Peng Cui, Tiannan Wang, Zhenxin Xiao, Yifan Hou, Ryan Cotterell, and Mrinmaya Sachan. The team presents it as an initial step towards next-generation computer-assisted writing systems that go beyond conventional ones.

What is Recurrent GPT?

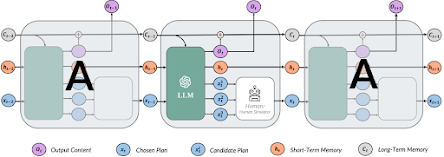

RecurrentGPT is a language model framework that emulates the recurrence mechanism found in Recurrent Neural Networks (RNNs) using natural language. It is built on a large language model (LLM) such as ChatGPT and replaces the vectorized elements of an LSTM (Long Short-Term Memory RNN), namely the cell state, hidden state, input, and output, with paragraphs of text.

How does Recurrent GPT work?

Through prompt engineering, Recurrent GPT simulates the recurrence mechanism, which lets it generate text of any desired length. This sets it apart from ChatGPT used on its own: the Transformer architecture has a fixed-size context, which limits its ability to generate arbitrarily long text. By carrying its state forward as natural language, Recurrent GPT reproduces the role that memory plays in a Long Short-Term Memory network.
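The idea can be illustrated with a minimal sketch. It is not the authors' implementation: `stub_llm`, `generate`, and the prompt wording are hypothetical, and the stub stands in for a real LLM call. The point it shows is that each prompt contains only a short memory and a plan, so the prompt stays small no matter how many paragraphs have been written.

```python
from typing import Callable, List


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; returns a short reply."""
    return f"<reply to a {len(prompt)}-character prompt>"


def generate(llm: Callable[[str], str], first_plan: str, steps: int) -> List[str]:
    """Generate `steps` paragraphs, carrying state forward as plain text."""
    memory = ""        # text that plays the role of the recurrent state
    plan = first_plan  # brief plan for the paragraph about to be written
    paragraphs: List[str] = []
    for _ in range(steps):
        # The prompt holds only the memory and the plan, never the full text,
        # so it stays small as long as the summaries stay short.
        prompt = f"Memory so far: {memory}\nPlan: {plan}\nWrite the next paragraph."
        paragraph = llm(prompt)
        paragraphs.append(paragraph)
        # Update the state in natural language for the next step.
        memory = llm(f"Summarize briefly:\n{memory}\n{paragraph}")
        plan = llm(f"Plan the paragraph that follows:\n{paragraph}")
    return paragraphs


if __name__ == "__main__":
    story = generate(stub_llm, "Introduce the hero.", steps=5)
    print(len(story), "paragraphs generated")
```

Swapping `stub_llm` for a function that calls an actual model is the only change needed to try the loop on real text.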

Benefits of Recurrent GPT

Recurrent GPT combines a long-term memory and a short-term memory, both expressed in natural language, to generate longer text than a single prompt allows. It is a useful tool for generating scripts and other long-form content with generative AI.
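To make the long-term side concrete, here is a minimal sketch of a store of earlier paragraphs that can be searched for passages relevant to the current plan. The class name `LongTermMemory` and its methods are hypothetical, and plain word overlap (Jaccard similarity) is used only as a stand-in for whatever similarity search a real system would use.

```python
import re
from typing import List, Set


def _words(text: str) -> Set[str]:
    """Lowercased word set used for a crude similarity score."""
    return set(re.findall(r"[a-z']+", text.lower()))


class LongTermMemory:
    """Hypothetical store of previously generated paragraphs."""

    def __init__(self) -> None:
        self.paragraphs: List[str] = []

    def add(self, paragraph: str) -> None:
        self.paragraphs.append(paragraph)

    def retrieve(self, query: str, k: int = 2) -> List[str]:
        """Return the k stored paragraphs with the highest word overlap."""
        q = _words(query)

        def score(paragraph: str) -> float:
            w = _words(paragraph)
            union = q | w
            return len(q & w) / len(union) if union else 0.0

        return sorted(self.paragraphs, key=score, reverse=True)[:k]


if __name__ == "__main__":
    memory = LongTermMemory()
    memory.add("The dragon guards a cave in the northern mountains.")
    memory.add("Mira sells bread at the village market.")
    memory.add("The dragon fears the sound of bells.")
    for hit in memory.retrieve("Mira walks toward the dragon cave"):
        print(hit)
```

The retrieved paragraphs would be placed in the prompt next to the short-term memory, so that details from much earlier in the text can still influence the next paragraph.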

Advantages of Recurrent GPT

- Improved Efficiency

Recurrent GPT reduces human effort by generating text a paragraph or a chapter at a time. Working at this level, rather than on small local edits, cuts human labor and speeds up writing compared with conventional computer-assisted writing systems.

- Enhanced Interpretability

Recurrent GPT is highly interpretable: because its internal state is written in natural language, users can read it directly. This gives greater insight into the decision-making process and helps explain how the generated text was produced.

- Content Creation and Interactivity

Recurrent GPT lets users see which content is being used to create the next paragraph, along with the plan for that paragraph. Users can edit and modify both the generated text and the plan using natural language. Being able to inspect and edit what the model intends to write next leads to output that better matches an individual's preferences.

- Customizable Feature

Users can customize Recurrent GPT simply by modifying its prompts. Changing even one word can produce a response tailored to an individual's interests and needs. The style of the generated text and other parameters can also be adjusted in the application.
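The last two points, editing the plan and customizing the prompt, can be sketched in a few lines. The template text, the `style` parameter, and the `edit_plan` hook below are illustrative assumptions, not the prompts or interface of the actual application.

```python
from typing import Callable, Optional

# Hypothetical prompt template; changing the style word changes the output.
PROMPT_TEMPLATE = "Write the next paragraph in a {style} style.\nPlan: {plan}"


def next_prompt(
    plan: str,
    style: str = "neutral",
    edit_plan: Optional[Callable[[str], str]] = None,
) -> str:
    """Build the prompt for the next step, letting a human revise the plan first."""
    if edit_plan is not None:
        # Human-in-the-loop hook: the user sees the plan and may rewrite it.
        plan = edit_plan(plan)
    return PROMPT_TEMPLATE.format(style=style, plan=plan)


if __name__ == "__main__":
    prompt = next_prompt(
        "The hero rests.",
        style="noir",
        edit_plan=lambda p: p.replace("rests", "flees"),
    )
    print(prompt)
```

Keeping the plan as ordinary text is what makes this kind of intervention cheap: no model internals need to be touched, only a string.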

Prompt Engineering

The prompt engineering framework enables recurrent prompting by using a language model to simulate a recurrent neural network. Natural-language components, combined through a set of prompts, define the recurrent computation graph.

Recurrent GPT Architecture

The architecture of Recurrent GPT mimics the recurrence mechanism of RNNs. The vector representations that an RNN passes from step to step are replaced with natural-language paragraphs that are inserted into the prompts.

Like an LSTM, Recurrent GPT has a cell state, a hidden state, an input, and an output, each expressed as text.

At each time step, Recurrent GPT receives a paragraph of text and a brief plan for the next paragraph. It generates new content from its prompts by building on the information carried over from previous steps.

Each step therefore has two main inputs and two matching outputs. The first input is a paragraph of text, referred to as the content; at the first step it is the paragraph you supply. The second input is an outline of the paragraph to be generated next, called the plan. At each step Recurrent GPT outputs a new content and plan, which become the inputs of the following step.
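A single step of this process can be sketched as a function that takes a content and plan pair and returns a new pair. The names `StepIO`, `build_prompt`, `parse_output`, and `step`, as well as the `Content:` / `Plan:` reply format, are assumptions made for illustration; the real prompts live in the project's repository.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class StepIO:
    """What flows between steps: a paragraph and the plan for the next one."""
    content: str
    plan: str


def build_prompt(prev: StepIO) -> str:
    """Turn the previous step's output into the next step's prompt."""
    return (
        f"Previous paragraph: {prev.content}\n"
        f"Plan for this paragraph: {prev.plan}\n"
        "Reply with 'Content:' followed by the new paragraph, "
        "then 'Plan:' followed by a plan for the paragraph after it."
    )


def parse_output(raw: str) -> StepIO:
    """Split a reply of the assumed form 'Content: ... Plan: ...'."""
    content_part, sep, plan_part = raw.partition("Plan:")
    if not sep:
        raise ValueError("reply has no 'Plan:' section")
    content = content_part.replace("Content:", "", 1).strip()
    return StepIO(content=content, plan=plan_part.strip())


def step(llm: Callable[[str], str], prev: StepIO) -> StepIO:
    """One recurrent step: (content, plan) in, (content, plan) out."""
    return parse_output(llm(build_prompt(prev)))


if __name__ == "__main__":
    fake = lambda prompt: "Content: The door opened.\nPlan: Reveal who is outside."
    print(step(fake, StepIO("It was night.", "Someone knocks.")))
```

Because the output type equals the input type, calling `step` repeatedly on its own result yields text of any length.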

Limitations

Recurrent GPT still makes errors of various kinds, and the information it produces is not always accurate. These problems may diminish as the underlying language models improve.

How to access Recurrent GPT?

To access RecurrentGPT, you can visit its GitHub repository. The repository contains information on how to use the model, including how to install dependencies, prepare the data, and run the model.

The GitHub repository for Recurrent GPT contains examples and prompts that give a better idea of how it works. The prompt engineering section shows how the output for each paragraph is used to produce the plan for the next paragraph. The same page also presents a qualitative analysis of using Recurrent GPT as both an interactive writing assistant and an interactive fiction generator.

Recurrent GPT Demo

A Recurrent GPT demonstration is also available through a web-based demo. This interactive platform shows how Recurrent GPT generates long text. The interface has two tabs: "Auto-Generation" and "Human in the Loop."

In the "Auto-Generation" tab, users enter a prompt and the system produces an extended response, generating a large amount of content from that single prompt. Users can also provide specific instructions for each step of generation, which gives fine-grained control over the output.

All relevant links are listed in the 'source' section at the end of this article.

Conclusion

RecurrentGPT presents an innovative method for generating text whose distinct advantage is that it can produce text of any desired length. This sets it apart from fixed-context models like ChatGPT, which are limited in how much text they can generate. Nonetheless, without defined evaluation metrics and standardized benchmarks, it is difficult to compare it directly with other models.

source

GitHub Repo - https://github.com/aiwaves-cn/RecurrentGPT

research paper link - https://arxiv.org/abs/2305.13304

research paper doc - https://arxiv.org/pdf/2305.13304.pdf

web demo link - http://server.alanshaw.cloud:8003/
