Introduction

In the field of artificial intelligence, a new tool lets you modify existing images to match your exact preferences. It can even change the apparent three-dimensional orientation of an object, for example by rotating it out of the image plane. Its real potential lies in working around a limitation of text-to-image generative AI, which often fails to produce the desired output. By editing an existing image instead of generating an entirely new one, users can get much closer to the result they want.

A collaboration among several academic institutions and research organizations has produced this project. It lets users manipulate images interactively by dragging selected points in an image to the target positions they choose. The goal is to give users flexible and precise control over the pose, shape, expression, and layout of the generated objects. The project is called "Drag Your GAN".

The project introduces an interactive, point-based manipulation technique that operates on the generative image manifold. Its focus is controllability in deep generative models, specifically generative adversarial networks (GANs). With Drag Your GAN, users can shape and refine generated images with fine-grained precision. By combining direct user interaction with deep generative models, the work opens new possibilities for creative image editing and customization.

What is Drag Your GAN?

Drag Your GAN is an interactive image synthesis tool. It lets users modify different aspects of an image by re-synthesizing it according to their requirements, including spatial attributes such as positions, poses, shapes, and expressions.

Technically, Drag Your GAN performs interactive point-based manipulation on the generative image manifold. It addresses controllability in deep generative models, specifically GANs. It aims for three properties that an ideal controllable image synthesizer should have: flexibility, precision, and generality.

Flexibility

The ability to control different spatial attributes, including positions, poses, shapes, and expressions. Users can change what is happening in an image by re-synthesizing it according to their requirements.

Precision

Fine-grained control over spatial attributes. Users should be able to move parts of an image to exactly the intended positions without degrading the quality of the generated image.

Generality

The approach should apply to many object categories rather than being limited to one. These include animals, objects such as cars, human faces and expressions, and more. As a result, users can make many kinds of modifications to an image with the same AI technique.

Features

Users can manipulate various aspects of an image by re-synthesizing it according to specific requirements.

Users can control spatial attributes including positions, poses, shapes, and expressions.

Edits are designed to preserve the quality of the generated image.

Users can adjust the desired characteristics precisely, moving content to the exact target positions they choose.

Proposed Methods

Previous work has achieved one or two of these properties, but not all three. Drag Your GAN aims to satisfy all of them through an intuitive interface. Users directly select specific points in an image and drag them to new positions. The result is interactive point-based manipulation on the generative image manifold.

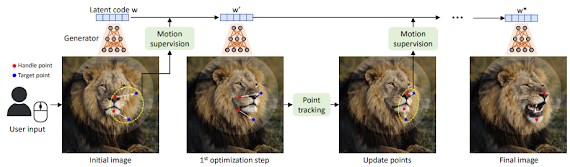

Overview of the pipeline used for controllable images

The figure above gives an overview of the pipeline. Users edit a generated image in two steps. First, they place handle points on the content they want to move. Second, they place target points where that content should end up. With this approach they can, for example, lengthen a person's clothing or change the shape of a car. The same interaction also works for landscapes and other objects.

source - https://arxiv.org/pdf/2305.10973.pdf
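The overall interaction can be pictured as a loop. It alternates an editing step with a step that relocates the handle points, and stops once every handle is close enough to its target. The sketch below illustrates that control flow only and is not the authors' implementation. The callables `motion_step` and `track`, the distance threshold `d`, and `max_iters` are hypothetical placeholders. The toy versions used here move points directly instead of optimizing a GAN latent code.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def reached(handles: List[Point], targets: List[Point], d: float) -> bool:
    """True once every handle point is within d pixels of its target point."""
    return all(math.dist(p, t) <= d for p, t in zip(handles, targets))


def drag_edit(latent, handles: List[Point], targets: List[Point],
              motion_step: Callable, track: Callable,
              d: float = 1.0, max_iters: int = 200):
    """Alternate an editing step and a tracking step until all handles arrive.

    motion_step(latent, handles, targets) -> new latent   (hypothetical callable)
    track(latent, handles) -> new handle positions        (hypothetical callable)
    """
    handles = list(handles)
    for step in range(max_iters):
        if reached(handles, targets, d):
            return latent, handles, step
        latent = motion_step(latent, handles, targets)  # move content toward targets
        handles = track(latent, handles)                # find where the content went
    return latent, handles, max_iters


# Toy stand-ins (assumption): the "latent" is simply the list of content positions.
def toy_motion(latent, handles, targets):
    """Move each content point at most 1 pixel straight toward its target."""
    moved = []
    for (x, y), (tx, ty) in zip(latent, targets):
        dist = math.dist((x, y), (tx, ty))
        s = min(1.0, dist) / dist if dist else 0.0
        moved.append((x + (tx - x) * s, y + (ty - y) * s))
    return moved


def toy_track(latent, handles):
    """In the toy, tracking is exact: handles sit wherever the content is."""
    return list(latent)


latent, handles, steps = drag_edit([(0.0, 0.0)], [(0.0, 0.0)], [(3.0, 4.0)],
                                   toy_motion, toy_track)
print(handles, steps)
```

Keeping the edit step and the tracking step as separate callables mirrors the two-step structure the article describes. In the real system, both steps would operate on generator features rather than on raw coordinates.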

Handle Points

Handle points are shown as red dots and target points as blue dots, and each handle point is dragged toward its target point. Users can also draw an optional mask that marks the editable region, so that changes stay focused on a specific area.
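As we understand the paper, the mask enters the optimization as an extra penalty. This penalty keeps features outside the editable region close to their original values, roughly the L1 norm of (F - F0) * (1 - M). The sketch below computes that penalty on a tiny single-channel grid. The names `rect_mask` and `outside_mask_penalty`, the rectangular mask shape, and the plain-list representation are assumptions made for illustration. The real method works on multi-channel generator feature maps with automatic differentiation.

```python
from typing import List

Grid = List[List[float]]  # single-channel feature map, indexed grid[y][x]


def rect_mask(h: int, w: int, x0: int, y0: int, x1: int, y1: int) -> List[List[int]]:
    """Binary mask M: 1 inside the editable rectangle [x0, x1) x [y0, y1), 0 elsewhere."""
    return [[1 if (x0 <= x < x1 and y0 <= y < y1) else 0 for x in range(w)]
            for y in range(h)]


def outside_mask_penalty(feat: Grid, feat0: Grid, mask: List[List[int]]) -> float:
    """Sum of |F - F0| * (1 - M): how much the edit changed the area outside the mask."""
    return sum(abs(f - f0) * (1 - m)
               for row, row0, mrow in zip(feat, feat0, mask)
               for f, f0, m in zip(row, row0, mrow))


feat0 = [[0.0] * 4 for _ in range(4)]   # features of the original image
feat = [row[:] for row in feat0]        # features after an edit
feat[1][1] = 5.0                        # change inside the editable region (ignored)
feat[3][3] = 2.0                        # unwanted change outside it (penalized)
mask = rect_mask(4, 4, 0, 0, 2, 2)
print(outside_mask_penalty(feat, feat0, mask))  # 2.0
```

In an actual optimization, a penalty like this would be weighted and added to the motion supervision loss. Gradients then discourage changes outside the mask.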

Main Steps

The approach alternates between two main steps: motion supervision and point tracking. In the motion supervision step, the image is optimized so that the content at each handle point moves a small step toward its target point, without degrading image quality. In the point tracking step, the handle point positions are updated to follow that content, since it has moved. The two steps repeat until the handle points reach their targets.
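Two pieces of this loop can be shown in isolation:

- Motion supervision pushes the content at each handle point p one small step along the unit direction from p toward its target t.
- Point tracking then searches a small neighborhood of the handle's previous position for its original feature vector (a nearest-neighbor search).

The sketch below is a simplified illustration under stated assumptions. Feature maps are stored as nested Python lists indexed `fmap[y][x]`, similarity is an L1 distance, and the search window is a square of radius `r2`. The function names are hypothetical. The gradient-based optimization of the generator's latent code, which actually moves the content, is not shown.

```python
import math
from typing import List, Tuple

Feature = List[float]
FeatureMap = List[List[Feature]]  # fmap[y][x] is the feature vector at pixel (x, y)
Point = Tuple[int, int]           # (x, y) pixel coordinates


def l1(a: Feature, b: Feature) -> float:
    """L1 distance between two feature vectors."""
    return sum(abs(u - v) for u, v in zip(a, b))


def motion_direction(p: Point, t: Point) -> Tuple[float, float]:
    """Unit vector from handle point p toward target point t (zero if already there)."""
    dx, dy = t[0] - p[0], t[1] - p[1]
    norm = math.hypot(dx, dy)
    return (0.0, 0.0) if norm == 0 else (dx / norm, dy / norm)


def track_point(fmap: FeatureMap, p: Point, f0: Feature, r2: int) -> Point:
    """Nearest-neighbor point tracking: search the square window of radius r2
    around p (clipped at the borders) for the pixel whose feature is closest to f0."""
    h, w = len(fmap), len(fmap[0])
    best, best_cost = p, math.inf
    for y in range(max(0, p[1] - r2), min(h, p[1] + r2 + 1)):
        for x in range(max(0, p[0] - r2), min(w, p[0] + r2 + 1)):
            cost = l1(fmap[y][x], f0)
            if cost < best_cost:
                best, best_cost = (x, y), cost
    return best


f0 = [1.0, 0.5]                                   # feature at the handle before editing
fmap = [[[0.0, 0.0] for _ in range(5)] for _ in range(5)]
fmap[2][3] = [1.0, 0.5]                           # after a motion step the content sits at (3, 2)
print(motion_direction((0, 0), (3, 4)))           # (0.6, 0.8)
print(track_point(fmap, (2, 2), f0, r2=1))        # (3, 2)
```

The search radius is a trade-off. A small window is cheap but can lose content that moved far in one step. A large window is more robust but costs more and risks matching a similar-looking region elsewhere.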

Manipulation Types

The tool can lengthen a person's clothing and change poses, backgrounds, cars, lighting, movements, and expressions. It can also manipulate real images by editing attributes such as pose, hair shape, or expression. Because the edits are made in the GAN's latent space, the same technique produces many kinds of output, from changes in clothing to changes in a tiger's expression.

Real Image Manipulation Using Proposed Method

The process starts with a real image, which is mapped into the latent space of StyleGAN through GAN inversion. Once the image is represented in the latent space, it can be edited toward different attributes such as pose, hair shape, or expression. Combining GANs with an interactive point-based interface gives users both flexibility and precision when they control and modify synthesized visual content.
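GAN inversion can be framed as an optimization problem: find the latent code w whose generated image G(w) best reconstructs the real image x. The toy sketch below makes several simplifying assumptions. It uses a fixed linear map as a stand-in generator and plain gradient descent on the squared reconstruction error. The names `generate` and `invert`, the learning rate, and the step count are chosen for illustration only. Because the toy matrix has full column rank, the answer is unique. Inverting a real StyleGAN involves a highly nonlinear network and more elaborate losses, so the reconstruction is only approximate.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def generate(w: Vector, A: Matrix) -> Vector:
    """Toy 'generator' x = A w, a linear stand-in for a real GAN generator."""
    return [sum(a * wj for a, wj in zip(row, w)) for row in A]


def invert(x: Vector, A: Matrix, steps: int = 500, lr: float = 0.1) -> Vector:
    """Optimization-based inversion: gradient descent on ||G(w) - x||^2 from w = 0."""
    w = [0.0] * len(A[0])
    for _ in range(steps):
        r = [g - xi for g, xi in zip(generate(w, A), x)]        # residual G(w) - x
        grad = [2 * sum(A[i][j] * r[i] for i in range(len(A)))  # gradient 2 A^T r
                for j in range(len(w))]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


A = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
x = generate([0.5, -1.0], A)          # the "real image" we want to reconstruct
w_hat = invert(x, A)
print([round(v, 4) for v in w_hat])   # [0.5, -1.0]
```

Once a latent code like `w_hat` is found, editing happens by changing that code rather than the pixels directly. This is why real images can be dragged in the same way as generated ones.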

Future Implications

With further improvements, DragGAN could become a practical alternative to conventional image editing tools such as Photoshop. However, DragGAN is still a young technology, and it may not completely replace Photoshop in the foreseeable future.

More Details

If you would like more details about Drag Your GAN, see the Source section at the end of this article. It links to the project page, the GitHub repository, the research paper (both the arXiv abstract and the PDF), and the paper's Hugging Face page. These resources explain how Drag Your GAN works, what it can do, and how it can be used for interactive image manipulation.

Conclusion

Overall, Drag Your GAN is a powerful tool that lets users manipulate generated images by dragging handle points toward target points. Although DragGAN is only a demo for now, it is an interesting example of how quickly AI continues to develop.

Source

Project page - https://vcai.mpi-inf.mpg.de/projects/DragGAN/

GitHub - https://github.com/XingangPan/DragGAN

Research paper (PDF) - https://arxiv.org/pdf/2305.10973.pdf

Research paper (arXiv) - https://arxiv.org/abs/2305.10973

Hugging Face - https://huggingface.co/papers/2305.10973
