Introduction

The field of video super-resolution (VSR) is undergoing a significant transformation, with generative models leading the push toward greater video clarity and detail. VSR has advanced through a series of steps, each addressing challenges such as increasing resolution, improving temporal consistency, and reducing artifacts. Against this backdrop of continuous innovation, VideoGigaGAN has emerged, promising to tackle these long-standing challenges and set new benchmarks in video enhancement.

VideoGigaGAN is the product of a collaborative endeavor between researchers from the University of Maryland, College Park, and Adobe Research. The development of VideoGigaGAN was motivated by the ambition to extend the capabilities of GigaGAN, a large-scale image upsampler, to the realm of video. This extension aims to achieve unparalleled levels of detail and temporal stability in VSR, thereby addressing the limitations of existing VSR models and extending the success of generative image upsamplers to VSR tasks.

What is VideoGigaGAN?

VideoGigaGAN is a generative VSR model designed to produce videos with both high-frequency details and temporal consistency. It is built on the architecture of GigaGAN, a large-scale image upsampler, and adds new techniques that significantly improve the temporal consistency of the upsampled videos.

Key Features of VideoGigaGAN

- High-Frequency Details: VideoGigaGAN produces videos with fine, high-frequency details, improving the clarity and richness of the visuals.

- 8× Upsampling: It can upsample a video by up to 8× in each spatial dimension (for example, from 128×128 to 1024×1024) while still producing rich detail.

- Asymmetric U-Net Architecture: VideoGigaGAN builds upon the asymmetric U-Net architecture of the GigaGAN image upsampler, leveraging its strengths for video super-resolution.

Capabilities and Use Cases of VideoGigaGAN

VideoGigaGAN’s capabilities extend far beyond just enhancing video resolution. Its ability to generate temporally consistent videos with fine-grained visual details opens up a plethora of potential applications and use cases.

- Film Restoration: One of the most promising applications of VideoGigaGAN is in the field of film restoration. Old films often suffer from low resolution and various forms of degradation. VideoGigaGAN’s ability to enhance resolution and maintain temporal consistency can be used to restore these films to their former glory, making them more enjoyable for modern audiences.

- Surveillance Systems: VideoGigaGAN could also be used to improve the quality of surveillance footage, where crucial details are often lost to low resolution. Upscaling such videos may make important details easier to see, although details synthesized by a generative model are not guaranteed to match the real scene.

- Video Conferencing: With remote work and learning, video conferencing has become part of daily life, yet poor video quality often hinders effective communication. If it can be made fast enough for real-time use, VideoGigaGAN could enhance video quality during calls and improve the remote communication experience.

- Content Creation: For content creators, especially those working with video, VideoGigaGAN could be a powerful tool for improving the quality of raw footage, potentially reducing the need for expensive high-resolution cameras.

How does VideoGigaGAN work?

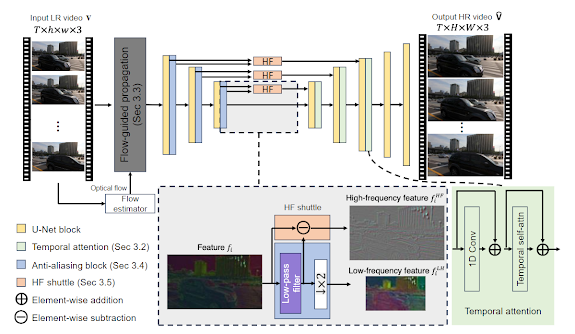

VideoGigaGAN is a state-of-the-art VSR model built on the GigaGAN image upsampler. It extends the GigaGAN architecture to video by adding temporal attention layers to the decoder blocks, which helps it keep high-frequency appearance details while improving temporal consistency across the upsampled frames.
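To make the temporal attention idea concrete, here is a minimal pure-Python sketch of self-attention across frames at a single spatial position. It assumes identity query/key/value projections and a single head; the function names are hypothetical, and the actual VideoGigaGAN layers use learned projections inside the decoder blocks.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(features):
    """Self-attention across time for ONE spatial location (hypothetical sketch).

    features: list of T feature vectors (each a list of C floats), one per frame.
    Assumption: queries, keys and values are the features themselves
    (identity projections); a real layer would use learned linear maps,
    multiple heads and a residual connection.
    """
    c = len(features[0])
    scale = 1.0 / math.sqrt(c)
    out = []
    for q in features:  # each frame attends to every frame, including itself
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in features]
        weights = softmax(scores)
        # Weighted sum of the value vectors over the T frames.
        out.append([sum(w * v[j] for w, v in zip(weights, features)) for j in range(c)])
    return out


# Usage: three frames with identical features at this position stay unchanged.
frames = [[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]]
print(temporal_attention(frames))
```

Because each frame's feature is replaced by a weighted average over all frames, features at a given position are pulled toward one another over time, which is one way such layers can reduce flicker.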

To address temporal flickering and artifacts, the model adds a flow-guided feature propagation module in front of the inflated GigaGAN (the image upsampler extended with temporal attention layers). This module, inspired by BasicVSR++, uses a bidirectional recurrent neural network and a backward image-warping layer to align the features of different frames using optical flow.
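The sketch below illustrates the two ingredients of flow-guided propagation: backward warping with bilinear sampling and a bidirectional recurrent pass. It is a simplified illustration under stated assumptions, not the paper's module: features are single-channel 2-D lists, optical flow is given rather than estimated, out-of-range samples are clamped to the border, and `fuse` is a hypothetical averaging stand-in for the learned network that combines the current frame with the aligned state.

```python
import math


def backward_warp(img, flow):
    """Backward-warp an H x W single-channel image with bilinear sampling.

    flow: H x W nested list of (dx, dy); output pixel (y, x) samples img at
    (y + dy, x + dx). Out-of-range samples are clamped to the border
    (an assumption; implementations differ, e.g. zero padding).
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            sx = min(max(x + dx, 0.0), w - 1.0)
            sy = min(max(y + dy, 0.0), h - 1.0)
            x0, y0 = int(math.floor(sx)), int(math.floor(sy))
            x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
            ax, ay = sx - x0, sy - y0
            # Interpolate horizontally on two rows, then vertically between them.
            top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
            bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
            out[y][x] = (1 - ay) * top + ay * bottom
    return out


def fuse(current, aligned, alpha=0.5):
    # Hypothetical stand-in for the learned fusion network: a plain blend.
    return [[alpha * c + (1 - alpha) * a for c, a in zip(rc, ra)]
            for rc, ra in zip(current, aligned)]


def bidirectional_propagate(frames, flow_to_next, flow_to_prev):
    """frames: T images. flow_to_next[t]: flow of frame t into frame t+1.
    flow_to_prev[t - 1]: flow of frame t into frame t-1 (both lists have T-1 items)."""
    t_len = len(frames)
    # Backward branch: carry information from later frames toward earlier ones.
    back = [None] * t_len
    back[-1] = frames[-1]
    for t in range(t_len - 2, -1, -1):
        back[t] = fuse(frames[t], backward_warp(back[t + 1], flow_to_next[t]))
    # Forward branch: carry information from earlier frames toward later ones.
    fwd = [None] * t_len
    fwd[0] = frames[0]
    for t in range(1, t_len):
        fwd[t] = fuse(frames[t], backward_warp(fwd[t - 1], flow_to_prev[t - 1]))
    # Combine both directions for every frame.
    return [fuse(f, b) for f, b in zip(fwd, back)]
```

Warping with a constant flow of `(1.0, 0.0)` shifts each row left by one pixel (with the last column repeated because of clamping), which is how a state computed for a neighboring frame is moved onto the current frame's grid before fusion.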

To further improve quality, VideoGigaGAN replaces all strided convolution layers in the upsampler's encoder with BlurPool layers, which low-pass filter the features before downsampling. This mitigates the temporal flickering caused by aliasing in the downsampling blocks of the GigaGAN encoder. Because this filtering also removes high-frequency detail, the model adds a high-frequency (HF) shuttle that uses the skip connections of the U-Net and a pyramid-like representation of the encoder feature maps to pass high-frequency information to the decoder. The shuttle resolves the conflict between high-frequency detail and temporal consistency, so the upsampled videos keep fine-grained details.
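A 1-D toy example shows why BlurPool reduces flicker and how a high-frequency residual can be split off. The function names are hypothetical, the [1, 2, 1]/4 kernel and reflect padding are assumptions (BlurPool supports several filter sizes), and `split_frequencies` only illustrates the general idea behind the HF shuttle, not its exact design.

```python
def blur(signal):
    """Low-pass filter a 1-D signal (length >= 2) with the binomial kernel [1, 2, 1] / 4.

    Borders use reflect padding (an assumption; implementations offer several modes).
    """
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[i - 1] if i > 0 else signal[1]
        right = signal[i + 1] if i < n - 1 else signal[n - 2]
        out.append((left + 2 * signal[i] + right) / 4)
    return out


def strided_downsample(signal, stride=2):
    # Naive subsampling: keep every `stride`-th sample, as a strided conv does spatially.
    return signal[::stride]


def blurpool(signal, stride=2):
    # BlurPool: low-pass filter first, then subsample.
    return strided_downsample(blur(signal), stride)


def split_frequencies(signal):
    """Split a signal so that low + high == signal (hypothetical HF-shuttle illustration).

    The low-frequency part would continue down the encoder, while the
    high-frequency residual would be routed to the decoder via a skip connection.
    """
    low = blur(signal)
    high = [s - l for s, l in zip(signal, low)]
    return low, high


stripes = [1.0, 0.0] * 4          # finest possible pattern
shifted = [0.0, 1.0] * 4          # same pattern moved by one sample
print(strided_downsample(stripes), strided_downsample(shifted))
print(blurpool(stripes), blurpool(shifted))
```

Naive stride-2 sampling turns the stripes into all ones, but shifting the input by a single sample turns them into all zeros: a one-pixel motion flips the output completely, which shows up as flicker in video. BlurPool returns 0.5 in both cases, and the residual that the blur removes is exactly the kind of high-frequency information a shuttle would carry past the downsampling.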

VideoGigaGAN is trained with a standard non-saturating GAN loss, R1 regularization, LPIPS, and a Charbonnier loss. Together with the architectural components above, this training setup lets the model add fine-grained details to the upsampled videos while mitigating issues such as aliasing and temporal flickering, which makes VideoGigaGAN a promising solution for a variety of video enhancement applications.
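The loss terms named above can be written down compactly. This sketch implements the Charbonnier loss and the non-saturating GAN losses on plain Python lists of pixel values and discriminator logits; `eps`, the function names, and the absence of loss weights are assumptions. LPIPS requires a pretrained network, and R1 regularization (a penalty on the squared gradient norm of the discriminator at real samples) requires automatic differentiation, so both are omitted.

```python
import math


def charbonnier_loss(pred, target, eps=1e-3):
    """Mean Charbonnier penalty sqrt((p - t)^2 + eps^2), a smooth variant of L1.

    eps=1e-3 is an assumed value, not taken from the paper.
    """
    terms = [math.sqrt((p - t) ** 2 + eps ** 2) for p, t in zip(pred, target)]
    return sum(terms) / len(terms)


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def generator_loss(fake_logits):
    # Non-saturating generator loss: -log sigmoid(D(fake)) == softplus(-D(fake)).
    return sum(softplus(-l) for l in fake_logits) / len(fake_logits)


def discriminator_loss(real_logits, fake_logits):
    # -log sigmoid(D(real)) - log(1 - sigmoid(D(fake)))
    # == softplus(-D(real)) + softplus(D(fake)).
    real = sum(softplus(-l) for l in real_logits) / len(real_logits)
    fake = sum(softplus(l) for l in fake_logits) / len(fake_logits)
    return real + fake
```

The non-saturating form keeps the generator's gradient large when the discriminator confidently rejects its output (very negative logits), which is why it is the usual choice over directly minimizing log(1 - sigmoid(D(fake))).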

Performance Evaluation

VideoGigaGAN is evaluated on several datasets, using a range of metrics and comparisons against prior VSR methods.

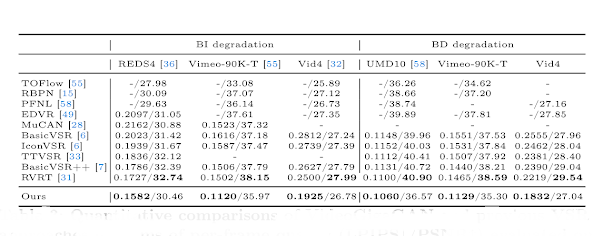

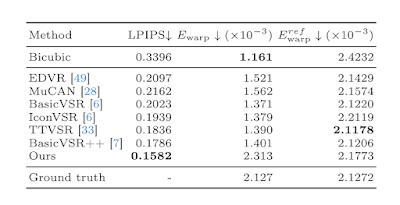

The evaluation metrics cover two aspects: per-frame quality and temporal consistency. Per-frame quality is measured with PSNR, SSIM, and LPIPS, and temporal consistency with the warping error E_warp. However, as the table above shows, E_warp tends to favor over-smoothed results. To address this, the authors propose a new metric, the referenced warping error E_warp^ref.
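The following sketch computes PSNR and a warping error on flat lists of pixel values, using a common formulation of the warping error: the mean squared difference between frame t and frame t+1 warped back onto frame t, restricted to non-occluded pixels. Optical flow estimation and warping are assumed to have been done already, the function names are hypothetical, and the paper's referenced warping error is not reproduced because its exact definition is not given here.

```python
import math


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB for flat lists of values in [0, max_val]."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf
    return 10 * math.log10(max_val ** 2 / mse)


def warping_error(frame_t, warped_next, mask):
    """Masked mean squared difference between frame t and frame t+1 warped onto t.

    warped_next: frame t+1 already aligned to frame t (assumed precomputed).
    mask: 1 for pixels visible in both frames, 0 for occluded ones.
    """
    num = sum(m * (a - b) ** 2 for a, b, m in zip(frame_t, warped_next, mask))
    return num / sum(mask)


ground_truth = [0.0, 1.0, 0.0, 1.0]       # detailed reference frame
smooth = [0.5, 0.5, 0.5, 0.5]             # over-smoothed output, identical in t and t+1
print(warping_error(smooth, smooth, [1, 1, 1, 1]))   # 0.0
print(psnr(smooth, ground_truth))                     # ≈ 6.02
```

An output that has collapsed to flat gray gets a perfect warping error of 0.0 even though its PSNR against the detailed ground truth is only about 6 dB, while a detailed output with small frame-to-frame changes is penalized. This is the bias toward over-smoothed results described above.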

An ablation study demonstrates the effect of each proposed component. Flow-guided feature propagation brings a significant improvement in LPIPS and E_warp^ref compared with temporal attention alone. Adding BlurPool as the anti-aliasing block lowers the warping error but worsens (increases) LPIPS. Adding the HF shuttle then recovers LPIPS at the cost of a slight loss in temporal consistency.

Compared with previous models (see the table above), VideoGigaGAN outperforms all of them on LPIPS, a metric that aligns better with human perception, but scores lower on PSNR and SSIM. Its temporal consistency, as measured by E_warp, is slightly worse than that of previous methods; however, the authors argue that the newly proposed referenced warping error (E_warp^ref) is better suited to evaluating the temporal consistency of upsampled videos.

The authors also analyze the trade-off between temporal consistency and per-frame quality. Unlike previous VSR approaches, VideoGigaGAN strikes a good balance between the two. Compared with the base GigaGAN model, the proposed components significantly improve both temporal consistency and per-frame quality. For more results, refer to the project page listed under Source.

The VSR Vanguard: VideoGigaGAN’s Technological Supremacy

In the fast-moving field of video super-resolution, models such as VideoGigaGAN, BasicVSR++, TTVSR, and RVRT are at the forefront, each with distinct strengths. VideoGigaGAN stands out for generating videos that are both rich in detail and consistent over time. Built on the GigaGAN image upsampler, it uses generative adversarial networks (GANs) to turn low-resolution videos into sharp, high-resolution outputs.

BasicVSR++ uses a recurrent design with bidirectional propagation and flow-guided deformable alignment, making the most of information from the entire video sequence. TTVSR (Trajectory-aware Transformer for VSR) instead uses a Transformer architecture: it treats video frames as trajectories of visual tokens and applies attention along those trajectories. RVRT (Recurrent Video Restoration Transformer) processes neighboring frames in parallel within a clip while keeping a global recurrent structure across clips, balancing model size, performance, and efficiency.

While BasicVSR++, TTVSR, and RVRT each excel in their own areas, VideoGigaGAN's ability to deliver high-frequency detail while maintaining temporal consistency sets it apart. By adapting GigaGAN's image-upscaling strengths to video, it positions itself as a formidable VSR tool alongside these contemporaries.

How to Access and Use VideoGigaGAN?

An open-source PyTorch implementation of VideoGigaGAN is available in a GitHub repository (see Source). It is an unofficial community reimplementation of the model described by the researchers from Adobe and the University of Maryland, not the authors' own code.

To use it, clone the repository to your local machine. At the time of writing, the repository does not provide detailed usage instructions; since it is implemented in PyTorch, you would typically install the required dependencies, load the model, and then run it on your videos.

Limitations

VideoGigaGAN does face certain hurdles. The model struggles with particularly long videos, such as those exceeding 200 frames, because inaccurate optical flow over such long sequences causes errors in the flow-guided feature propagation. The model's ability to handle small-scale objects such as text and characters is also limited: in low-resolution (LR) inputs, the fine details of these elements are often lost, which makes it hard for the model to reconstruct them accurately.

Conclusion

VideoGigaGAN represents a significant step forward in the field of VSR. It extends the success of generative image upsamplers to VSR tasks while preserving temporal consistency. With its unique capabilities and impressive performance, VideoGigaGAN is poised to make a significant impact in the field of video enhancement.

Source

Research paper: https://arxiv.org/abs/2404.12388

Research paper (PDF): https://arxiv.org/pdf/2404.12388

Project page: https://videogigagan.github.io/

GitHub repo (unofficial PyTorch implementation): https://github.com/lucidrains/videogigagan-pytorch
