Introduction

In the ever-evolving landscape of Artificial Intelligence (AI), multimodal models have emerged as a significant milestone. These models, capable of processing and interpreting diverse data types such as text, images, and audio, are transforming the way AI perceives and interacts with the world, offering a more comprehensive understanding that closely mirrors human perception. However, advancing these models is not without its challenges: the primary hurdle lies in efficiently integrating and optimizing these diverse data types so that they function cohesively.

Amidst these challenges, OpenAI’s GPT-4o has emerged as a beacon of promise. This model sets itself apart with its unique ability to reason across audio, vision, and text in real time. This capability is a significant stride towards bridging the gap between AI and human-like interaction.

GPT-4o is the latest in a series of generative pre-trained transformers developed by OpenAI. The driving force behind the development of GPT-4o is OpenAI’s mission to ensure that AI can be used to benefit all of humanity. This commitment to creating accessible and advanced AI tools is embodied in the design and capabilities of GPT-4o.

The primary motive behind the development of GPT-4o was to create a more natural human-computer interaction. By reasoning across audio, vision, and text in real time, GPT-4o takes a significant step towards this goal.

What is GPT-4o?

GPT-4o, where ‘o’ stands for ‘omni’, is a cutting-edge multimodal AI model developed by OpenAI. It is designed to accept any combination of text, audio, and image as input and to generate any combination of text, audio, and image as output.

Key Features of GPT-4o

GPT-4o comes with a host of unique features that set it apart:

- Multimodal Capabilities: GPT-4o can process and generate data across text, audio, and image modalities, making it a truly versatile AI model.

- Real-Time Interaction: It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.

- Cost-Effective: GPT-4o is not only faster but also 50% cheaper in the API than GPT-4 Turbo, making it a cost-effective choice for a wide range of applications.

- Single Model: GPT-4o is a single model trained end-to-end across text, vision, and audio. This is a significant improvement over earlier approaches, such as the previous ChatGPT Voice Mode, which chained separate models for speech transcription, text reasoning, and text-to-speech.

- Understanding Inputs More Holistically: Because it processes audio directly, GPT-4o can pick up tone, background noises, and emotional context in audio inputs all at once.
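To make the cost claim concrete, here is a small sketch that compares the price of the same request on GPT-4o and GPT-4 Turbo. The per-million-token prices in `PRICES_PER_MILLION` are assumptions (the list prices published around GPT-4o’s launch) and may have changed, so check OpenAI’s pricing page before relying on them; `request_cost` is a hypothetical helper, not part of any OpenAI SDK.

```python
# ASSUMED per-million-token prices in USD (launch-era list prices);
# verify against OpenAI's current pricing page before using.
PRICES_PER_MILLION = {
    "gpt-4o": {"input": 5.00, "output": 15.00},
    "gpt-4-turbo": {"input": 10.00, "output": 30.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated USD cost of one request for the given token counts."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    # Example workload: 2,000 prompt tokens and 500 completion tokens.
    for name in PRICES_PER_MILLION:
        print(f"{name}: ${request_cost(name, 2_000, 500):.4f}")
```

With these assumed prices, the example prints $0.0175 for `gpt-4o` and $0.0350 for `gpt-4-turbo`, i.e. half the cost for the same workload.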

Capabilities/Use Cases of GPT-4o

The unique capabilities of GPT-4o open up a wide range of use cases:

- Interacting with AI: Two instances of GPT-4o can hold a real-time conversation with each other, demonstrating its potential in creating interactive AI systems.

- Customer Service: GPT-4o can handle customer service issues, making it a valuable tool for businesses looking to improve their customer experience.

- Interview Preparation: It can help users prepare for interviews by providing relevant information and guidance.

- Game Suggestions: GPT-4o can suggest games for families to play and even act as a referee, adding a fun element to family gatherings.

These features and capabilities make GPT-4o a promising development in the field of AI.

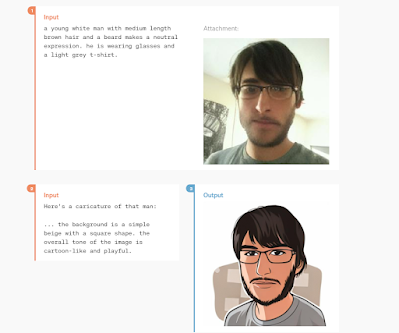

You can find more capabilities listed on the OpenAI website under the 'Explorations of capabilities' section. One of the examples there is 'Photo to caricature'.

How does GPT-4o work?

GPT-4o exemplifies the pinnacle of AI design, employing a transformer-based neural network structure. It diverges from prior models that needed distinct mechanisms for each data type. Instead, GPT-4o’s design philosophy is to handle all input and output through a single neural network. This integrated method enables the model to directly process auditory cues such as tone, the presence of multiple speakers, or ambient sounds, and to produce outputs that can include laughter, singing, or the expression of emotions. It synthesizes knowledge from diverse fields, including natural language processing (NLP), visual and video analytics, and speech recognition, to interpret context and subtle differences when dealing with mixed data sources.

The architecture of GPT-4o is distinguished by its end-to-end training across textual, visual, and auditory data. Consequently, all forms of data are processed through one consolidated model, fostering more streamlined and consistent interactions. This design allows it to comprehend inputs and formulate responses in near real time, making it an invaluable tool for an array of applications.
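OpenAI has not published GPT-4o’s internal architecture, so the following is only a deliberately simplified toy that illustrates why a single end-to-end model can use cues a cascaded pipeline discards. `AudioClip`, `transcribe`, `text_model`, `pipeline`, and `end_to_end` are all hypothetical names, and no real audio processing happens here.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Toy stand-in for an audio input: the spoken words plus paralinguistic cues."""
    words: str
    tone: str
    speakers: int
    background: str


def transcribe(clip: AudioClip) -> str:
    # Pipeline stage 1 (hypothetical): speech-to-text keeps only the words.
    return clip.words


def text_model(text: str) -> dict:
    # Pipeline stage 2 (hypothetical): a text-only model sees nothing but the transcript.
    return {"heard": text, "tone": None, "speakers": None, "background": None}


def pipeline(clip: AudioClip) -> dict:
    # Tone, speaker count, and background noise are lost at the transcription step.
    return text_model(transcribe(clip))


def end_to_end(clip: AudioClip) -> dict:
    # A single model (toy) receives the whole input, so every cue stays available.
    return {"heard": clip.words, "tone": clip.tone,
            "speakers": clip.speakers, "background": clip.background}


if __name__ == "__main__":
    clip = AudioClip("I'm fine.", tone="sarcastic", speakers=2, background="traffic")
    print(pipeline(clip)["tone"])    # None
    print(end_to_end(clip)["tone"])  # sarcastic
```

The point of the toy is where information is dropped: in the pipeline, anything the transcription stage does not write down is invisible to every later stage.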

Training and Fine-Tuning of GPT-4o

OpenAI has not published the full details of GPT-4o’s training data. Unofficial reports put the training set at approximately 13 trillion tokens of text and code, drawn from sources such as CommonCrawl and RefinedWeb, and there is speculation that it also includes data from Twitter, Reddit, YouTube, and an extensive collection of textbooks. This broad training spectrum equips GPT-4o to understand and respond across a vast array of subjects and scenarios. Following its initial training phase, GPT-4o was fine-tuned with reinforcement learning, incorporating feedback from both human evaluators and AI systems to align it with human values and policy guidelines.

Performance Evaluation of GPT-4o

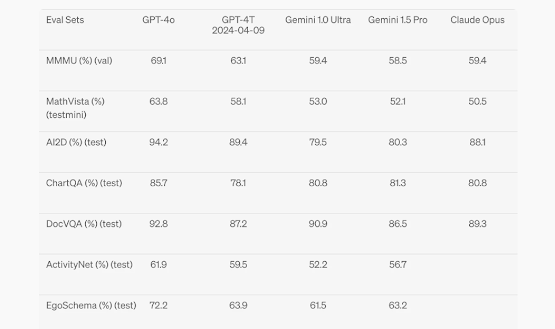

GPT-4o, the latest offering from OpenAI, has made significant strides in various performance metrics. It matches the performance of GPT-4 Turbo on English text and code, shows substantial improvement on non-English text, and outperforms existing models at vision and audio understanding. Here are the key highlights of its evaluations:

Text Evaluation: GPT-4o sets new high scores of 88.7% on 0-shot CoT MMLU (general knowledge questions) and 87.2% on the traditional 5-shot no-CoT MMLU, demonstrating its strong ability to understand and generate text.

Audio ASR Performance: GPT-4o improves speech recognition performance over Whisper-v3 across all languages, particularly for lower-resourced languages.

Audio Translation Performance: GPT-4o sets a new benchmark in speech translation, outperforming Whisper-v3 on the MLS benchmark.

M3Exam Performance: GPT-4o excels in the M3Exam benchmark, a multilingual and vision evaluation, outperforming GPT-4 across all languages.

Vision Understanding Evals: GPT-4o achieves state-of-the-art performance on visual perception benchmarks.
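The two MMLU settings mentioned in the text evaluation differ in how the model is prompted: a 0-shot chain-of-thought (CoT) prompt gives no solved examples but asks the model to reason before answering, while a 5-shot no-CoT prompt shows five solved examples and expects the answer letter directly. The templates below are illustrative assumptions, not the exact prompts OpenAI used, and all function names are hypothetical.

```python
from typing import Sequence

LETTERS = "ABCD"  # MMLU questions have four answer options


def format_question(question: str, choices: Sequence[str]) -> str:
    """Render one multiple-choice question in a common MMLU-style layout (assumed format)."""
    lines = [question]
    lines += [f"{letter}. {choice}" for letter, choice in zip(LETTERS, choices)]
    return "\n".join(lines)


def zero_shot_cot_prompt(question: str, choices: Sequence[str]) -> str:
    # No solved examples; the model is asked to reason step by step before answering.
    return (format_question(question, choices)
            + "\nThink step by step, then give the final answer as a single letter.")


def five_shot_prompt(examples: Sequence[tuple[str, Sequence[str], str]],
                     question: str, choices: Sequence[str]) -> str:
    # Five solved examples precede the test question; the answer letter is expected directly.
    if len(examples) != 5:
        raise ValueError("expected exactly five worked examples")
    shots = [format_question(q, c) + f"\nAnswer: {a}" for q, c, a in examples]
    return "\n\n".join(shots + [format_question(question, choices) + "\nAnswer:"])


if __name__ == "__main__":
    print(zero_shot_cot_prompt("2 + 2 = ?", ["3", "4", "5", "6"]))
```

The design difference matters for scoring: the CoT variant lets the model spend tokens on reasoning, while the no-CoT variant measures what it answers immediately after seeing the examples.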

These evaluations underscore GPT-4o’s advanced capabilities across various domains and paint a promising picture of its potential to revolutionize the AI landscape.
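Speech recognition comparisons such as the one against Whisper-v3 are typically scored with word error rate (WER), where lower is better. The sketch below is a standard word-level edit-distance implementation of WER; `word_error_rate` is a hypothetical helper, and real benchmarks usually normalize case and punctuation first, which this sketch omits.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev is the previous row of the edit-distance table:
    # prev[j] = distance between the first i-1 reference words and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion of a reference word
                          curr[j - 1] + 1,     # insertion of an extra hypothesis word
                          prev[j - 1] + cost)  # substitution (or match when cost is 0)
        prev = curr
    return prev[-1] / len(ref)
```

For example, `word_error_rate("the cat sat on the mat", "the cat sit on mat")` returns 2/6 ≈ 0.333: one substitution and one deletion over six reference words.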

How to Access and Use GPT-4o?

GPT-4o, the latest advancement from OpenAI, is now more accessible and usable than ever before. It is available to anyone with an OpenAI API account and can be used in the Chat Completions API, Assistants API, and Batch API. It can also be accessed via the Playground. GPT-4o is available in ChatGPT Free, Plus, and Team, with availability for Enterprise users coming soon. Free users will have a limit on the number of messages they can send with GPT-4o.
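For the Batch API, requests are submitted as a JSONL file in which each line wraps one Chat Completions request with a `custom_id`, `method`, `url`, and `body`. The sketch below only builds that file content; uploading it (with purpose `batch`) and creating the batch job are left out, and the `request-N` ID scheme and the `batch_jsonl` helper are illustrative choices, not part of OpenAI’s API.

```python
import json


def batch_jsonl(prompts: list[str], model: str = "gpt-4o") -> str:
    """Hypothetical helper: build Batch API input, one Chat Completions request per JSONL line."""
    lines = []
    for i, prompt in enumerate(prompts, start=1):
        request = {
            "custom_id": f"request-{i}",  # illustrative ID scheme; any unique string works
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(batch_jsonl(["Summarize GPT-4o in one sentence.", "List three multimodal use cases."]))
```

The `custom_id` is what lets you match each result in the batch output file back to the request that produced it.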

The capabilities of GPT-4o are being rolled out iteratively, starting with text and image capabilities in ChatGPT. OpenAI is making GPT-4o available in the free tier, and to Plus users with up to 5x higher message limits. A new version of Voice Mode with GPT-4o in alpha will be rolled out within ChatGPT Plus in the coming weeks.

For developers, GPT-4o is now accessible in the API as a text and vision model. It is 2x faster, half the price, and has 5x higher rate limits compared to GPT-4 Turbo. OpenAI plans to launch support for GPT-4o’s new audio and video capabilities to a small group of trusted partners in the API in the coming weeks.
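As a text and vision model in the API, GPT-4o accepts user messages whose content mixes text parts and image parts. The sketch below builds such a Chat Completions request and, using only the Python standard library, shows how it could be sent to the REST endpoint. It assumes an `OPENAI_API_KEY` environment variable, the image URL is a placeholder, and `build_vision_request` and `send` are hypothetical helpers; the official `openai` Python package wraps the same request but is not used here, to keep the example dependency-free.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_vision_request(question: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions body with one text part and one image part."""
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
    }


def send(body: dict) -> str:
    """POST the request and return the first choice's text (requires OPENAI_API_KEY)."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Placeholder image URL; printing the body does not call the API.
    body = build_vision_request("What is in this image?", "https://example.com/photo.jpg")
    print(json.dumps(body, indent=2))
```

Running the script only prints the request body; calling `send(body)` performs a real, billable API call.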

Limitations and Future Work

ChatGPT caps the number of GPT-4o messages that free users can send; once that limit is reached, ChatGPT falls back to GPT-3.5. And although GPT-4o can handle text, audio, and images, the current public release from OpenAI is limited to text and image inputs, with text-only outputs.

At the outset, audio outputs will be constrained to a range of preselected voices, in compliance with established safety protocols. OpenAI has acknowledged the unique challenges posed by the audio capabilities of GPT-4o.

Efforts are underway to improve the technical infrastructure, post-training usability, and the safety measures required to release additional modalities. OpenAI plans to share more details about the full range of GPT-4o’s modalities in an upcoming system card.

Conclusion

GPT-4o represents a significant step forward in the evolution of AI. Its ability to process and generate multimodal data in real-time opens up new possibilities for human-computer interaction. I believe that GPT-4o is not just a technological achievement but a harbinger of a future where AI can understand and engage with the world in a way that was once the realm of science fiction.
