Introduction

Diffusion-based generative models such as DALL-E and Stable Diffusion have redefined content creation by generating images and videos from textual descriptions. Despite these advances, the models struggle to keep content consistent over long sequences, which is critical for storytelling and video generation.

Addressing this challenge head-on is StoryDiffusion, a novel AI model developed by a collaborative team from Nankai University and ByteDance Inc. This model aims to maintain content consistency across a series of generated images and videos, thereby enhancing the storytelling capabilities of AI. The project is spearheaded by the Vision and Cognitive Intelligence Project (VCIP) at Nankai University, with significant contributions from interns and researchers at ByteDance Inc., the parent company of TikTok.

StoryDiffusion is a testament to the relentless pursuit of innovation in the field of AI, particularly in the realm of diffusion-based generative models. By addressing the challenge of maintaining content consistency, StoryDiffusion not only enhances the capabilities of existing models but also paves the way for future advancements in this exciting domain.

What is StoryDiffusion?

StoryDiffusion is an innovative AI model designed for long-range image and video generation. It stands out in the realm of AI for its unique ability to enhance the consistency between generated images. This makes it a powerful tool for tasks that require a high degree of visual consistency.

Key Features of StoryDiffusion

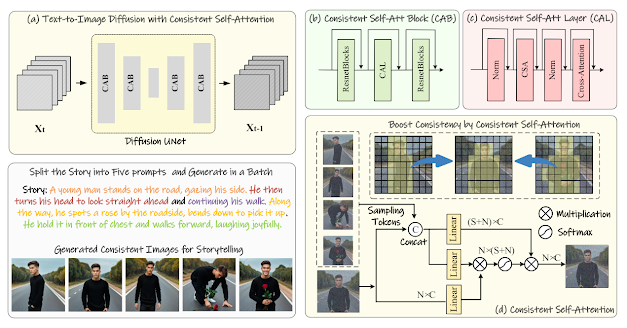

- Consistent Self-Attention: This unique method of self-attention calculation significantly enhances the consistency between generated images, making StoryDiffusion a powerful tool for long-range image and video generation.

- Zero-Shot Augmentation: One of the standout features of StoryDiffusion is its ability to augment pre-trained diffusion-based text-to-image models in a zero-shot manner. This means it can be applied to specific tasks without the need for additional training.

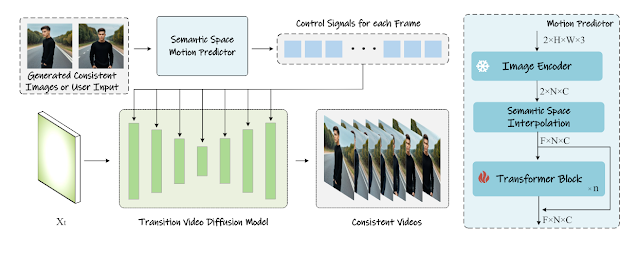

- Semantic Motion Predictor: This is a unique module that predicts motion between condition images in a compressed image semantic space. It enables larger motion prediction and smooth transitions in video generation, enhancing the quality and realism of the generated content.

Capabilities and Use Cases of StoryDiffusion

- Comic Creation: StoryDiffusion excels in creating comics with consistent character styles. This opens up new possibilities for digital storytelling, making it a valuable tool for creators in the entertainment industry.

- High-Quality Video Generation: The model can generate high-quality videos that maintain subject consistency. This capability can be leveraged in various fields, including education, advertising, and more.

How does StoryDiffusion work?

StoryDiffusion operates in two stages to generate subject-consistent images and transition videos. The first stage involves the use of the Consistent Self-Attention mechanism, which is incorporated into a pre-trained text-to-image diffusion model. This mechanism generates images from story text prompts and builds connections among multiple images in a batch to ensure subject consistency. It samples tokens from other image features in the batch, pairs them with the image feature to form new tokens, and uses these tokens to compute self-attention across a batch of images. This process promotes the convergence of characters, faces, and attires during the generation process.
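The token sampling and pairing step can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `consistent_self_attention`, the `sample_rate` parameter, the uniform per-image sampling, and the single-head layout are all assumptions made for illustration. Queries come from each image's own tokens, while keys and values also include tokens sampled from the other images in the batch.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def consistent_self_attention(feats, w_q, w_k, w_v, sample_rate=0.5, rng=None):
    """Illustrative sketch. feats has shape (B, N, C): B images, N tokens, C channels.

    w_q, w_k, w_v stand in for the projection weights of an existing
    self-attention layer; no new weights are introduced.
    """
    rng = np.random.default_rng() if rng is None else rng
    batch, num_tokens, _ = feats.shape
    num_sampled = int(num_tokens * sample_rate)  # assumed sampling scheme
    outputs = []
    for i in range(batch):
        own = feats[i]
        # Sample tokens from every other image in the batch.
        sampled = []
        for j in range(batch):
            if j == i:
                continue
            idx = rng.choice(num_tokens, size=num_sampled, replace=False)
            sampled.append(feats[j][idx])
        # Pair the sampled tokens with the image's own tokens.
        paired = np.concatenate(sampled + [own], axis=0)
        q = own @ w_q      # queries: own tokens only
        k = paired @ w_k   # keys: own + sampled tokens
        v = paired @ w_v   # values: own + sampled tokens
        attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))
        outputs.append(attn @ v)
    return np.stack(outputs)  # (B, N, C_out)
```

With a batch of one image there is nothing to sample from, so the function reduces to ordinary self-attention. This is consistent with the idea that the mechanism reuses an existing layer rather than requiring additional training.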

The second stage refines the sequence of generated images into videos using the Semantic Motion Predictor. This component encodes the image into the image semantic space to capture spatial information and achieve accurate motion prediction. It uses a function to map RGB images to vectors in the image semantic space, where a transformer-based structure predictor is trained to perform predictions of each intermediate frame. These predicted frames are then decoded into the final transition video.
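The data flow of this stage can be sketched as follows. Everything here is a stand-in: `encode_to_semantic_space` is a hypothetical linear projection rather than a real image encoder, and `predict_intermediate` uses plain linear interpolation where the actual model uses a trained transformer. The sketch only shows the shapes moving through the pipeline, from two condition images to one semantic vector per frame; decoding back to pixels is omitted.

```python
import numpy as np

def encode_to_semantic_space(image, projection):
    """Hypothetical encoder: flatten an RGB image (H, W, 3) and project it to a vector (D,)."""
    return image.reshape(-1) @ projection

def predict_intermediate(start_vec, end_vec, num_frames):
    """Stand-in predictor: linearly interpolate between the two condition vectors.

    The real predictor is a trained transformer; interpolation is used here
    only so the example runs without any learned weights.
    """
    weights = np.linspace(0.0, 1.0, num_frames)[:, None]    # (num_frames, 1)
    return (1.0 - weights) * start_vec + weights * end_vec  # (num_frames, D)

rng = np.random.default_rng(0)
height, width, dim = 8, 8, 16
projection = rng.normal(size=(height * width * 3, dim))
start_image = rng.random((height, width, 3))
end_image = rng.random((height, width, 3))

start_vec = encode_to_semantic_space(start_image, projection)
end_vec = encode_to_semantic_space(end_image, projection)
frame_vecs = predict_intermediate(start_vec, end_vec, num_frames=5)
print(frame_vecs.shape)  # (5, 16)
```

The first and last rows of `frame_vecs` equal the encoded start and end images, so the predicted sequence is anchored to both condition frames.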

The model is optimized by calculating the Mean Squared Error (MSE) loss between the predicted transition video and the ground truth. By encoding images into an image semantic space, the Semantic Motion Predictor can better model motion information, enabling the generation of smooth transition videos with large motion. This two-stage process makes StoryDiffusion a powerful tool for generating subject-consistent images and videos.
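As a small illustration of the training objective, the snippet below computes an MSE loss between a predicted clip and its ground truth. The function name `mse_loss` and the `(frames, height, width, channels)` array layout are assumptions for this example, and averaging over every element is one common convention rather than a detail confirmed by the source.

```python
import numpy as np

def mse_loss(predicted_video, ground_truth_video):
    """Mean squared error over all frames, pixels, and channels."""
    predicted = np.asarray(predicted_video, dtype=float)
    target = np.asarray(ground_truth_video, dtype=float)
    if predicted.shape != target.shape:
        raise ValueError("videos must have the same shape")
    return float(np.mean((predicted - target) ** 2))

# Toy clip: 4 frames of 2x2 RGB, every predicted value off by 0.5.
ground_truth = np.zeros((4, 2, 2, 3))
predicted = np.full((4, 2, 2, 3), 0.5)
print(mse_loss(predicted, ground_truth))  # 0.25
```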

Performance Evaluation

The evaluation process involved two main stages: the generation of consistent images and the generation of transition videos.

In the first stage, StoryDiffusion was compared with two recent ID preservation methods, IP-Adapter and PhotoMaker. The performance was tested using a combination of character and activity prompts generated by GPT-4. The aim was to generate a group of images that depict a person engaging in different activities, thereby testing the model’s consistency.

The qualitative comparisons showed that StoryDiffusion could generate highly consistent images, whereas the other methods sometimes produced images with inconsistent attire or diminished text controllability. For example, PhotoMaker generated images that matched the text prompt but showed significant discrepancies in attire across the three generated images.

The quantitative comparisons, as detailed in the table above, evaluated two metrics: text-image similarity and character similarity. Both metrics used the CLIP Score to measure the correlation between the text prompts and the corresponding images or character images. StoryDiffusion outperformed the other methods on both quantitative metrics, demonstrating its robustness in maintaining character consistency while conforming to prompt descriptions.
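CLIP-style scores are typically cosine similarities between embedding vectors, so the two metrics can be sketched as below. This is an assumed protocol, not the paper's evaluation code: the embeddings are taken as already computed by a CLIP model, and the function names and the pairwise averaging used for character similarity are illustrative choices.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def text_image_similarity(text_embeddings, image_embeddings):
    """Mean similarity between each prompt embedding and its generated image embedding."""
    scores = [cosine_similarity(t, i) for t, i in zip(text_embeddings, image_embeddings)]
    return float(np.mean(scores))

def character_similarity(character_embeddings):
    """Mean pairwise similarity between character image embeddings (assumed protocol)."""
    n = len(character_embeddings)
    scores = [
        cosine_similarity(character_embeddings[i], character_embeddings[j])
        for i in range(n)
        for j in range(i + 1, n)
    ]
    return float(np.mean(scores))
```

Under this reading, a higher text-image score means the images follow their prompts more closely, and a higher character score means the character looks more alike across the generated images.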

In the second stage, StoryDiffusion was compared with two state-of-the-art methods, SparseCtrl and SEINE, for transition video generation. The models were employed to predict the intermediate frames of a transition video, given the start and end frames.

The qualitative comparisons demonstrated that StoryDiffusion significantly outperformed SEINE and SparseCtrl, generating transition videos that were smooth and physically plausible. For example, in a scenario of two people kissing underwater, StoryDiffusion succeeded in generating videos with very smooth motion without corrupted intermediate frames.

The performance evaluation, as detailed in the table above, shows StoryDiffusion’s superior capabilities in generating subject-consistent images and transition videos. Its robustness in maintaining character consistency and its ability to generate smooth and physically plausible videos make it a promising method in the field of AI and machine learning. Further details can be found in the supplementary materials.

StoryDiffusion’s Role in Advancing AI-Driven Visual Storytelling

The landscape of AI models for image and video generation is rich with innovation, where models like StoryDiffusion, IP-Adapter, and PhotoMaker each contribute distinct capabilities.

StoryDiffusion is engineered for extended image and video narratives, employing a Consistent Self-Attention mechanism to keep character appearances uniform throughout a story, which is pivotal for coherent storytelling. Its Semantic Motion Predictor is instrumental in producing smooth, high-fidelity videos. The Semantic Motion Predictor is a trained component, whereas the Consistent Self-Attention stage augments a pre-trained model without additional training.

In contrast, IP-Adapter is a nimble adapter that imparts image prompt functionality to existing text-to-image diffusion models. Despite its lean structure of only 22M parameters, it delivers results that rival or exceed those of models fine-tuned for image prompts.

PhotoMaker provides a unique service, enabling users to generate personalized photos or artworks from a few facial images and a text prompt. This model is adaptable to any SDXL-based base model and can be integrated with other LoRA modules for enhanced functionality.

While IP-Adapter and PhotoMaker offer valuable features, StoryDiffusion distinguishes itself with its specialized capabilities. Its commitment to maintaining narrative integrity through consistent visual elements and its proficiency in generating high-quality videos mark it as a significant advancement in AI models for image and video generation.

How to Access and Use this Model?

StoryDiffusion is an open-source model that is readily accessible for anyone interested in AI and content generation. The model’s GitHub repository serves as the primary access point, providing comprehensive instructions for local setup and usage. This allows users to experiment with the model in their local environment.

Beyond a manual local setup, the repository offers two further entry points: users can generate comics with the provided Jupyter notebook or start a local Gradio demo, which gives flexibility in how the model is used.

Limitations and Future Work

While StoryDiffusion represents a leap forward, it is not without limitations. Currently, it struggles with generating very long videos due to the absence of global information exchange. Future work will focus on enhancing its capabilities for long video generation.

Conclusion

StoryDiffusion is a pioneering exploration in visual story generation, offering a new perspective on the capabilities of AI in content consistency. As AI continues to advance, models like StoryDiffusion will play a crucial role in shaping the future of digital storytelling and content creation.

Source

research paper: https://arxiv.org/abs/2405.01434

research document: https://arxiv.org/pdf/2405.01434

GitHub Repo: https://github.com/HVision-NKU/StoryDiffusion

Project details: https://storydiffusion.github.io/
