Introduction

Generative models are changing how we create and interact with digital content. One of the harder tasks they tackle is generating 3D indoor scenes, which requires a deep understanding of complex spatial relationships and object properties. EchoScene is a recent model that targets specific challenges in this area.

Scene graphs are a natural way to describe such scenes, but current generative models struggle with them for three reasons: the number of nodes varies from scene to scene, edges can combine in many ways, and a manipulator can add or alter nodes and edges. EchoScene is designed to handle exactly these issues.

EchoScene is the result of a collaboration between researchers at the Technical University of Munich, Ludwig Maximilian University of Munich, and Google. The team’s main goal was to improve the controllability and consistency of 3D indoor scene generation, pushing the boundaries of controllable and interactive scene generation.

What is EchoScene?

EchoScene is a generative model for interactive and controllable generation of 3D indoor scenes from scene graphs, a task that requires a deep understanding of spatial relationships and object properties.
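To make the input concrete, here is a minimal sketch of a scene graph as a data structure. The class name `SceneGraph`, its methods, and the relation strings are hypothetical illustrations, not EchoScene’s actual data format; the point is only that nodes (objects) and edges (subject, predicate, object triplets) vary in number and can be edited at any time.

```python
from dataclasses import dataclass, field


@dataclass
class SceneGraph:
    """Hypothetical minimal scene graph (not EchoScene's actual format).

    Nodes are object categories; edges are (subject, predicate, object)
    triplets that reference node indices.
    """
    nodes: list = field(default_factory=list)
    edges: list = field(default_factory=list)

    def add_node(self, category):
        """Append an object node and return its index."""
        self.nodes.append(category)
        return len(self.nodes) - 1

    def add_edge(self, subj, predicate, obj):
        """Add a relationship between two existing nodes."""
        for idx in (subj, obj):
            if not 0 <= idx < len(self.nodes):
                raise IndexError(f"node index {idx} does not exist")
        self.edges.append((subj, predicate, obj))

    def change_relation(self, edge_idx, new_predicate):
        """Replace the predicate of an existing edge, keeping its endpoints."""
        subj, _, obj = self.edges[edge_idx]
        self.edges[edge_idx] = (subj, new_predicate, obj)

    def neighbors(self, i):
        """Indices of nodes connected to node i by any edge, in either direction."""
        out = set()
        for subj, _, obj in self.edges:
            if subj == i:
                out.add(obj)
            if obj == i:
                out.add(subj)
        return out


# A small bedroom; the graph can grow or change at any time.
g = SceneGraph()
bed = g.add_node("bed")
nightstand = g.add_node("nightstand")
lamp = g.add_node("lamp")
g.add_edge(nightstand, "left of", bed)
g.add_edge(lamp, "standing on", nightstand)
g.change_relation(0, "right of")
print(g.edges)  # [(1, 'right of', 0), (2, 'standing on', 1)]
print(g.neighbors(nightstand))  # {0, 2}
```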

EchoScene distinguishes itself with a dual-branch diffusion model that dynamically adapts to each scene graph, however many nodes and edges it has.

Key Features of EchoScene

EchoScene boasts several unique features that set it apart:

- Interactive Denoising: EchoScene associates each node in a scene graph with its own denoising process. These processes exchange information collaboratively, which improves the model’s ability to generate complex scenes.

- Controllable Generation: EchoScene’s generation stays controllable and consistent while remaining aware of global constraints imposed by the whole scene graph, which makes the model applicable in a wide range of scenarios.

- Information Echo Scheme: EchoScene employs an information echo scheme in both shape and layout branches. This innovative feature allows the model to maintain a holistic understanding of the scene graph, thereby facilitating the generation of globally coherent scenes.
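The interactive denoising and echo ideas can be illustrated with a toy reverse-diffusion loop. This is a sketch under stated assumptions, not EchoScene’s implementation: `toy_denoiser` is a hypothetical stand-in for a learned noise predictor, the `exchange` step simply blends each node’s prediction with the mean of its neighbors’ predictions (EchoScene uses a learned graph convolution), and the update rule is the standard DDPM step with an assumed linear beta schedule.

```python
import numpy as np


def toy_denoiser(x_t, cond, t):
    """Hypothetical per-node noise predictor; a real model would be a trained network."""
    return 0.1 * (x_t - cond)


def exchange(eps, edges, mix=0.5):
    """Simplified echo: blend each node's prediction with its neighbors' mean prediction."""
    neigh_sum = np.zeros_like(eps)
    degree = np.zeros(len(eps))
    for s, o in edges:
        neigh_sum[s] += eps[o]
        neigh_sum[o] += eps[s]
        degree[s] += 1
        degree[o] += 1
    out = eps.copy()
    has_neighbors = degree > 0
    out[has_neighbors] = ((1 - mix) * eps[has_neighbors]
                          + mix * neigh_sum[has_neighbors] / degree[has_neighbors, None])
    return out


def sample(cond, edges, num_steps=50, seed=0):
    """Run one denoising process per node, with an exchange at every step (DDPM update)."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(1e-4, 0.02, num_steps)  # linear schedule (an assumption)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(cond.shape)  # one noisy latent per node
    for t in reversed(range(num_steps)):
        # Each node's process predicts its own noise...
        eps = np.stack([toy_denoiser(x[i], cond[i], t) for i in range(len(x))])
        # ...then all processes share their predictions through the exchange step.
        eps = exchange(eps, edges)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        noise = rng.standard_normal(x.shape) if t > 0 else np.zeros_like(x)
        x = mean + np.sqrt(betas[t]) * noise
    return x


cond = np.random.default_rng(1).standard_normal((3, 4))  # hypothetical per-node conditions
out = sample(cond, edges=[(0, 1), (1, 2)])
print(out.shape)  # (3, 4)
```

Because the exchange happens inside the loop rather than once at the end, every node’s trajectory is nudged by its neighbors at every step, which is the property the information echo scheme relies on.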

Capabilities and Use Cases of EchoScene

EchoScene’s capabilities extend beyond mere scene generation, making it a valuable asset in various real-world applications:

- Scene Manipulation: EchoScene allows for the manipulation of 3D indoor scenes during inference by editing the input scene graph and sampling the noise in the diffusion model. This capability makes it a powerful tool for creating diverse and realistic indoor environments.

- Compatibility with Existing Methods: EchoScene’s scenes are of high enough quality to be used directly with off-the-shelf texture generation methods. This broadens its applicability in content creation, from virtual reality to autonomous driving, and lets it fit into existing workflows.
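The scene manipulation capability pairs graph edits with noise sampling. One plausible way to handle the per-node noise when the graph changes is sketched below. The function names and the choice to keep existing nodes’ noise fixed while drawing fresh noise only for the new node are our assumptions for illustration, not a description of EchoScene’s code.

```python
import numpy as np


def initial_noise(num_nodes, dim, seed):
    """Draw one Gaussian starting point per node (one diffusion process per node)."""
    return np.random.default_rng(seed).standard_normal((num_nodes, dim))


def add_object(nodes, edges, noise, category, relation, target, rng):
    """Hypothetical edit: add a node with one relation and draw fresh noise for it only.

    Existing nodes keep their noise, so unedited objects start the sampler from
    the same point as before the edit.
    """
    nodes = nodes + [category]
    new_idx = len(nodes) - 1
    edges = edges + [(new_idx, relation, target)]
    new_row = rng.standard_normal((1, noise.shape[1]))
    return nodes, edges, np.vstack([noise, new_row])


nodes = ["bed", "nightstand"]
edges = [(1, "left of", 0)]
noise = initial_noise(len(nodes), dim=8, seed=0)

# Edit: put a lamp on the nightstand.
nodes2, edges2, noise2 = add_object(nodes, edges, noise, "lamp", "standing on", 1,
                                    np.random.default_rng(42))

# Alternative: resample all noise with a new seed for a different scene from the same graph.
diverse = initial_noise(len(nodes2), dim=8, seed=1)
```

Resampling with a new seed gives diversity from one graph, while keeping old noise and adding only new rows gives a more local edit; which behavior is preferable depends on the application.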

How EchoScene Works: Architecture and Design

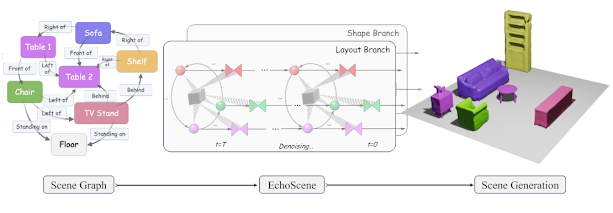

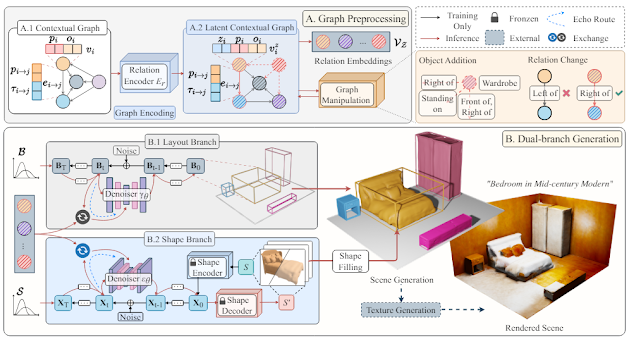

EchoScene first maps a contextual graph into a latent space using an encoder and a manipulator based on triplet-GCN, as depicted in part A of the figure above. The latent nodes then serve as separate conditions for the layout and shape branches, as shown in part B.
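For readers unfamiliar with triplet-GCNs, the sketch below shows one simplified layer in the style popularized by scene-graph-to-image work: every (subject, predicate, object) triplet is passed jointly through a shared MLP, and each node averages the updates it receives. The layer sizes, the two-layer ReLU MLP, and plain averaging are assumptions; EchoScene’s trained encoder and manipulator are not reproduced here.

```python
import numpy as np


def mlp(x, w1, w2):
    """Two-layer ReLU MLP shared across all triplets."""
    return np.maximum(x @ w1, 0.0) @ w2


def triplet_gcn_layer(node_feats, edge_feats, edges, w1, w2):
    """One simplified triplet-GCN layer.

    node_feats: (N, d) node features; edge_feats: (E, d) predicate features;
    edges: (E, 2) integer array of [subject, object] indices.
    """
    d = node_feats.shape[1]
    subj, obj = edges[:, 0], edges[:, 1]
    triplets = np.concatenate([node_feats[subj], edge_feats, node_feats[obj]], axis=1)
    out = mlp(triplets, w1, w2)  # (E, 3d)
    new_subj, new_edge, new_obj = out[:, :d], out[:, d:2 * d], out[:, 2 * d:]

    # Average the updates each node receives as subject or object.
    total = np.zeros_like(node_feats)
    count = np.zeros(len(node_feats))
    np.add.at(total, subj, new_subj)
    np.add.at(total, obj, new_obj)
    np.add.at(count, subj, 1)
    np.add.at(count, obj, 1)
    new_nodes = node_feats.copy()  # isolated nodes keep their features
    connected = count > 0
    new_nodes[connected] = total[connected] / count[connected, None]
    return new_nodes, new_edge


rng = np.random.default_rng(0)
d, hidden = 4, 16
nodes = rng.standard_normal((3, d))  # e.g. bed, nightstand, lamp
preds = rng.standard_normal((2, d))  # e.g. "left of", "standing on"
edges = np.array([[1, 0], [2, 1]])
w1 = rng.standard_normal((3 * d, hidden)) * 0.1
w2 = rng.standard_normal((hidden, 3 * d)) * 0.1
new_nodes, new_preds = triplet_gcn_layer(nodes, preds, edges, w1, w2)
```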

In the layout branch, the diffusion processes interact with one another through a layout echo at every denoising step, which keeps the final layout consistent with the scene graph description. Likewise, in the shape branch, the processes interact through a shape echo, which keeps the generated shapes consistent across the scene.

The architecture of EchoScene is built around its dual-branch diffusion model, with a shape branch and a layout branch. Each node in these branches has its own denoising process, and at every denoising step all processes share their denoising data with an information exchange unit that combines the updates using graph convolution. This information echo scheme ensures that the denoising processes are influenced by a holistic understanding of the scene graph, which facilitates the generation of globally coherent scenes.

The design of EchoScene centers on this information echo scheme in graph diffusion, which addresses the challenges posed by dynamic data structures such as scene graphs. EchoScene assigns each node in the graph its own denoising process, and together these processes form the diffusion model for a given task (layout or shape generation). As a result, content generation can be controlled entirely through node and edge manipulation. The cornerstone of the echo is the information exchange unit, which makes the diffusion processes dynamic and interactive across the elements of a changing graph. The figure above gives an overview of EchoScene’s pipeline: graph preprocessing followed by two collaborative branches, the Layout Branch and the Shape Branch.
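The key property of the exchange unit is that it works for any graph size, so nodes and edges can change between calls. A minimal sketch, assuming a single graph-convolution layer with symmetric normalization in the style of Kipf and Welling and randomly initialized weights; the names `ExchangeUnit` and `normalized_adjacency` are hypothetical, and EchoScene’s actual unit is learned and may differ in form.

```python
import numpy as np


def normalized_adjacency(num_nodes, edges):
    """Symmetric normalized adjacency with self-loops: D^-1/2 (A + I) D^-1/2."""
    a = np.eye(num_nodes)  # self-loops keep each node's own update in the mix
    for s, o in edges:
        a[s, o] = a[o, s] = 1.0
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


class ExchangeUnit:
    """Hypothetical exchange unit: one graph-convolution layer shared by all nodes."""

    def __init__(self, dim, seed=0):
        rng = np.random.default_rng(seed)
        self.weight = rng.standard_normal((dim, dim)) / np.sqrt(dim)

    def __call__(self, updates, edges):
        # The adjacency is rebuilt on every call, so the graph may change freely.
        a_hat = normalized_adjacency(len(updates), edges)
        return a_hat @ updates @ self.weight


unit = ExchangeUnit(dim=8)
rng = np.random.default_rng(0)
three = unit(rng.standard_normal((3, 8)), edges=[(0, 1), (1, 2)])
four = unit(rng.standard_normal((4, 8)), edges=[(0, 1), (1, 2), (3, 0)])  # node added
print(three.shape, four.shape)  # (3, 8) (4, 8)
```

Since the weights are shared across nodes and only the adjacency depends on the graph, the same unit serves a three-object scene and the same scene after an object is added.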

Performance Evaluation

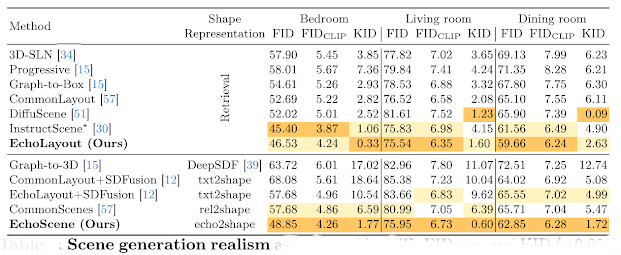

To assess EchoScene’s performance, it was benchmarked against other models, highlighting its strengths in scene generation, adherence to graph constraints, and object detail. The main results are summarized below.

Scene Fidelity: Metrics such as FID, FID_CLIP, and KID measure how realistic the generated scenes are (lower is better for all three). EchoScene improves markedly over predecessors such as CommonScenes, with gains of 15% in FID, 12% in FID_CLIP, and 73% in KID for bedroom scenes.
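As background on KID (this is not code from the EchoScene evaluation): KID is the squared maximum mean discrepancy between feature sets of real and generated images, estimated without bias using the cubic polynomial kernel k(x, y) = (x·y / d + 1)^3. The sketch assumes image features (for example, Inception features for FID and KID, or CLIP features for FID_CLIP) were already extracted; standard implementations also average over random subsets, which is omitted here. The helper `relative_improvement` shows how a percentage gain is read for a lower-is-better metric; the scores in the usage are made up.

```python
import numpy as np


def polynomial_kernel(a, b):
    """Cubic polynomial kernel used by KID: (a.b / d + 1)^3."""
    d = a.shape[1]
    return (a @ b.T / d + 1.0) ** 3


def kid(real_feats, fake_feats):
    """Unbiased MMD^2 estimate between two feature sets (lower is better)."""
    m, n = len(real_feats), len(fake_feats)
    k_rr = polynomial_kernel(real_feats, real_feats)
    k_ff = polynomial_kernel(fake_feats, fake_feats)
    k_rf = polynomial_kernel(real_feats, fake_feats)
    # Exclude diagonal terms k(x_i, x_i) from the within-set averages.
    term_rr = (k_rr.sum() - np.trace(k_rr)) / (m * (m - 1))
    term_ff = (k_ff.sum() - np.trace(k_ff)) / (n * (n - 1))
    return term_rr + term_ff - 2.0 * k_rf.mean()


def relative_improvement(baseline, new):
    """Fractional reduction of a lower-is-better metric relative to a baseline."""
    return (baseline - new) / baseline


rng = np.random.default_rng(0)
real = rng.standard_normal((500, 16))
close = rng.standard_normal((500, 16))          # same distribution as `real`
shifted = rng.standard_normal((500, 16)) + 1.0  # clearly different distribution
print(kid(real, close) < kid(real, shifted))  # True
print(relative_improvement(10.0, 8.5))  # 0.15 (made-up scores)
```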

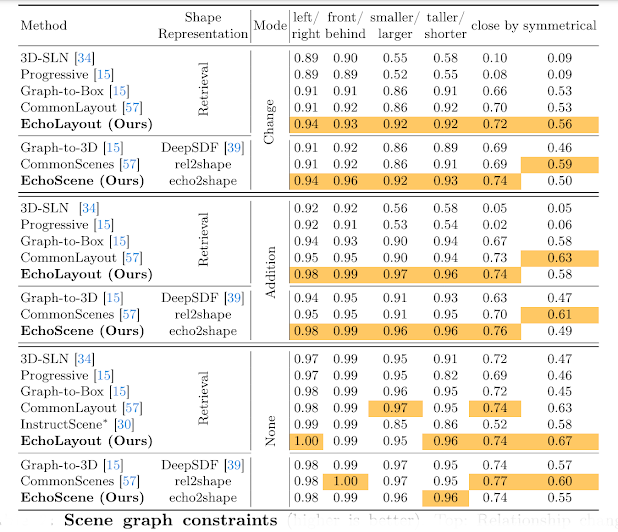

Graph Constraints: EchoScene’s compliance with scene graph constraints is tested after manipulations in the latent space. It outperforms 3D-SLN and CommonScenes, more reliably preserving spatial relationships such as ‘smaller/larger’ and ‘close by’ after manipulation.
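Constraint compliance is typically reported as the fraction of graph relationships that the generated boxes satisfy. The sketch below uses hypothetical rules, comparing box volumes for ‘larger’/‘smaller’ and thresholding the distance between box centers for ‘close by’; the box format and the 1.0 threshold (in scene units) are illustrative choices, not the paper’s exact definitions.

```python
import numpy as np


def volume(box):
    """Volume of an axis-aligned box given as {'size': (w, h, l), ...}."""
    return float(np.prod(box["size"]))


def satisfies(relation, a, b, close_thresh=1.0):
    """Check one (subject, relation, object) constraint on two boxes."""
    if relation == "larger":
        return volume(a) > volume(b)
    if relation == "smaller":
        return volume(a) < volume(b)
    if relation == "close by":
        gap = np.linalg.norm(np.asarray(a["center"]) - np.asarray(b["center"]))
        return bool(gap < close_thresh)
    raise ValueError(f"unsupported relation: {relation}")


def constraint_accuracy(boxes, triplets, close_thresh=1.0):
    """Fraction of triplets whose relation holds for the generated boxes."""
    hits = [satisfies(rel, boxes[s], boxes[o], close_thresh) for s, rel, o in triplets]
    return sum(hits) / len(hits)


boxes = [
    {"size": (2.0, 0.5, 1.6), "center": (0.0, 0.0, 0.0)},  # bed
    {"size": (0.5, 0.6, 0.4), "center": (0.9, 0.0, 0.0)},  # nightstand
]
triplets = [(0, "larger", 1), (1, "close by", 0), (1, "larger", 0)]
print(constraint_accuracy(boxes, triplets))  # 2 of 3 constraints satisfied
```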

Object-Level Analysis: The quality and diversity of object shapes are measured with the MMD, COV, and 1-NNA metrics. EchoScene’s shapes match the distribution of real shapes more closely than those of CommonScenes, reflecting stronger shape generation.
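MMD, COV, and 1-NNA are usually computed from pairwise distances between generated and reference point clouds. The sketch below follows the common definitions from point-cloud generation work, using a Chamfer distance with squared Euclidean terms; the point counts and the exact distance variant used in EchoScene’s evaluation are assumptions. Lower MMD is better, higher COV is better, and 1-NNA is best near 50%.

```python
import numpy as np


def chamfer(p, q):
    """Chamfer distance between point clouds p (n, 3) and q (m, 3), squared-distance variant."""
    d = ((p[:, None, :] - q[None, :, :]) ** 2).sum(axis=-1)
    return d.min(axis=1).mean() + d.min(axis=0).mean()


def pairwise(a_set, b_set):
    """Matrix of Chamfer distances: entry [i, j] compares a_set[i] with b_set[j]."""
    return np.array([[chamfer(a, b) for b in b_set] for a in a_set])


def shape_metrics(gen, ref):
    """Return (MMD, COV, 1-NNA) for generated vs. reference shape sets."""
    d_gr = pairwise(gen, ref)
    # MMD: average distance from each reference shape to its closest generated shape.
    mmd = d_gr.min(axis=0).mean()
    # COV: fraction of reference shapes that are the nearest match of some generated shape.
    cov = len(np.unique(d_gr.argmin(axis=1))) / len(ref)
    # 1-NNA: leave-one-out 1-nearest-neighbor accuracy on the pooled sets.
    full = np.block([[pairwise(gen, gen), d_gr], [d_gr.T, pairwise(ref, ref)]])
    np.fill_diagonal(full, np.inf)
    labels = np.array([0] * len(gen) + [1] * len(ref))
    one_nna = (labels[full.argmin(axis=1)] == labels).mean()
    return mmd, cov, one_nna


rng = np.random.default_rng(0)
ref = [rng.standard_normal((64, 3)) for _ in range(6)]
far = [cloud + 5.0 for cloud in ref]  # generated set shifted away from the references
print(shape_metrics(far, ref)[2])  # 1.0: the two sets are trivially separable
```

A 1-NNA near 100% means a classifier can easily tell generated shapes from real ones; copying the reference set exactly drives it toward 0%, which is why values near 50% are the target.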

Qualitative assessments further affirm EchoScene’s prowess, showcasing greater consistency between objects and overall quality in scene generation. Its compatibility with standard texture generators also augments scene realism. Collectively, EchoScene stands out for its enhanced fidelity in scene generation and its adeptness at managing graph-based manipulations.

EchoScene Versus Peers: A Comparative Look at 3D Scene Generation

The landscape of 3D scene generation is rich with innovative models, among which EchoScene, CommonScenes, and Graph-to-3D are particularly noteworthy. Each model introduces distinct features; however, EchoScene’s dynamic adaptability and its novel information echo mechanism set it apart.

EchoScene’s strength lies in interactive, controllable generation of 3D indoor scenes from scene graphs. Its dual-branch diffusion model adapts dynamically to the structure of each scene graph, and the information echo scheme in both the shape and layout branches gives every denoising process a view of the whole graph. The result is globally coherent scenes, a significant advantage over its counterparts.

CommonScenes and Graph-to-3D also have strong capabilities. CommonScenes converts scene graphs into semantically realistic 3D scenes, using a variational autoencoder for layout prediction and latent diffusion for shape generation. Graph-to-3D pioneered fully learned 3D scene generation from scene graphs and supports user-driven scene customization.

EchoScene’s approach to scene graph adaptability and information exchange yields 3D indoor scenes with higher fidelity and stronger controllability while maintaining global coherence, which distinguishes it from CommonScenes and Graph-to-3D.

How to Access and Use this model?

EchoScene’s code and trained models are open-sourced and can be accessed on GitHub. The GitHub repository provides detailed instructions on how to set up the environment, download necessary datasets, train the models, and evaluate the models. It is important to note that EchoScene is a research project and its usage may require a certain level of technical expertise.

Limitation

EchoScene has potential uses in areas such as robotic vision and manipulation, but it has limitations. Most notably, it does not generate textures, so on its own it falls short in tasks that require photorealistic appearance. This limitation can be worked around: because EchoScene’s scenes are of high quality, they can be passed to an external texture generator to add realistic textures.

Conclusion

EchoScene represents a significant leap in generative model capabilities, offering a glimpse into the future of AI-driven content creation. Its development reflects the collaborative effort and innovative spirit driving the field forward.

Source

research paper: https://arxiv.org/abs/2405.00915

research document: https://arxiv.org/pdf/2405.00915

project details: https://sites.google.com/view/echoscene

GitHub: https://github.com/ymxlzgy/echoscene
