Introduction

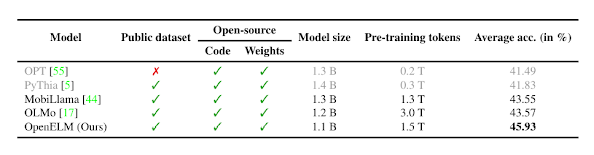

In the rapidly evolving landscape of artificial intelligence, open-source language models have emerged as pivotal instruments of progress. These models, continually advancing and addressing complex challenges, democratize access to state-of-the-art technology and push the boundaries of machine comprehension and capabilities. Among these models, OpenELM, developed by Apple’s machine learning researchers, stands out as a beacon of innovation.

OpenELM, a cutting-edge language model family, is a testament to the collaborative spirit within the AI community. It addresses the pressing need for transparency, reproducibility, and efficiency in AI research, all of which help make AI systems more trustworthy. The model’s design reflects Apple’s commitment to innovation: it optimizes how parameters are allocated within the transformer architecture in order to improve accuracy.

The development of OpenELM underscores Apple’s unexpected yet significant contribution to the open research community. Despite not being traditionally associated with openness, Apple has paved the way for future open research endeavors with the development of OpenELM. This model is a crucial step towards addressing the need for reproducibility and transparency in large language models, which are essential for advancing open research and enabling investigations into data and model biases.

What is OpenELM?

OpenELM is a state-of-the-art open language model family that allocates parameters efficiently within each layer of the transformer model, which leads to higher accuracy. It is released together with an open-source framework for training and inference.

Key Features of OpenELM

OpenELM stands out for its unique design and features, which include:

- Layer-wise Scaling: This feature allows for the efficient allocation of parameters within each layer of the transformer model, leading to improved performance.

- Enhanced Accuracy: Compared to OLMo, a model of similar size, OpenELM exhibits a 2.36% improvement in accuracy.

- Reduced Pre-training Tokens: OpenELM reaches this accuracy with about half as many pre-training tokens as OLMo, making it more efficient to train.

Capabilities of OpenELM

OpenELM is designed to be versatile and practical, catering to a variety of use cases. Some of its capabilities include:

- On-device AI Processing: OpenELM is designed to run efficiently on devices such as iPhones and Macs, making it ideal for on-device AI processing.

- Instruction-tuned Models: These models are particularly suited for developing AI assistants and chatbots, showcasing the practical application of OpenELM in real-world scenarios.

How does OpenELM work? / Architecture

OpenELM is built around an approach called layer-wise scaling, which allocates parameters non-uniformly across the layers of the transformer model. The layers closest to the input use smaller latent dimensions in their attention and feed-forward modules, and the layers widen gradually toward the output. This distribution of the parameter budget is instrumental in OpenELM’s accuracy.
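The idea can be illustrated with a minimal sketch. It assumes that each layer's width is set by two multipliers, one for the number of attention heads and one for the feed-forward width, and that both are linearly interpolated from the first layer to the last. The function name, the `LayerConfig` type, and the numeric values below are illustrative assumptions, not Apple's implementation.

```python
from dataclasses import dataclass


@dataclass
class LayerConfig:
    num_heads: int  # attention heads in this layer
    ffn_dim: int    # hidden width of this layer's feed-forward block


def layerwise_scaling(num_layers, d_model, head_dim,
                      alpha_min, alpha_max, beta_min, beta_max):
    """Hypothetical helper: interpolate per-layer widths from input to output.

    alpha scales the number of attention heads, beta scales the
    feed-forward width. Both grow linearly with the layer index.
    """
    configs = []
    for i in range(num_layers):
        # t runs from 0.0 at the first layer to 1.0 at the last layer.
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        alpha = alpha_min + (alpha_max - alpha_min) * t
        beta = beta_min + (beta_max - beta_min) * t
        num_heads = max(1, round(alpha * d_model / head_dim))
        ffn_dim = round(beta * d_model)
        configs.append(LayerConfig(num_heads, ffn_dim))
    return configs


if __name__ == "__main__":
    # Illustrative values only; they are not taken from a released checkpoint.
    for idx, cfg in enumerate(layerwise_scaling(
            num_layers=4, d_model=1024, head_dim=64,
            alpha_min=0.5, alpha_max=1.0, beta_min=0.5, beta_max=4.0)):
        print(idx, cfg)
```

With these illustrative settings the first layer is the narrowest and the last layer is the widest, whereas a conventional transformer would use the same head count and feed-forward width in every layer.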

At its core, OpenELM’s architecture is anchored in the transformer framework, which lets the model capture long-range dependencies and nuanced contextual cues. The transformer architecture is central to the model’s benchmark performance. Combined with the layer-wise scaling strategy, it makes OpenELM adept at handling intricate tasks efficiently and accurately.

Performance Evaluation with Other Models

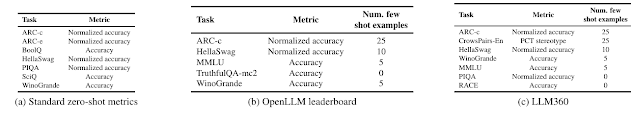

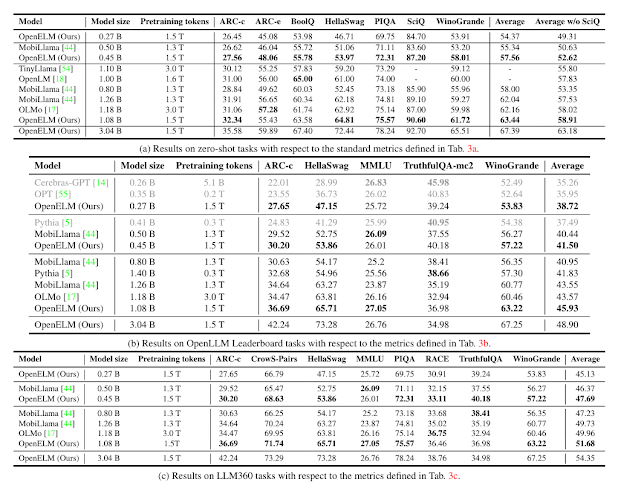

The performance of OpenELM was evaluated using the LM Evaluation Harness across three groups of tasks: standard zero-shot tasks, OpenLLM leaderboard tasks, and LLM360 leaderboard tasks. Together, these tasks allow a comprehensive evaluation of OpenELM in terms of reasoning, knowledge understanding, and misinformation and bias. The three frameworks differ primarily in their few-shot settings, which are outlined in the table below.

OpenELM was compared with publicly available large language models (LLMs) such as Pythia, Cerebras-GPT, TinyLlama, OpenLM, MobiLlama, and OLMo. The models most closely related to OpenELM are MobiLlama and OLMo, which are trained on comparable dataset mixtures with a similar or larger number of pre-training tokens. The accuracy of OpenELM was plotted against training iterations for seven standard zero-shot tasks, showing an overall increase in accuracy with longer training durations across most tasks. The results in the table below highlight OpenELM’s effectiveness over existing methods. For instance, an OpenELM variant with 1.1 billion parameters achieves higher accuracy than OLMo with 1.2 billion parameters in parts a, b, and c of the table.

Further evaluations covered instruction tuning and parameter-efficient fine-tuning (PEFT). Instruction tuning consistently improved OpenELM’s average accuracy by 1-2% across the different evaluation frameworks. PEFT methods were also applied to OpenELM, and the results in Tab. 6 of the research paper show that LoRA and DoRA deliver similar accuracy on average across the given commonsense reasoning datasets. For more detailed information, please refer to the original research document.

A Comparative Study: Distinguishing OpenELM in the AI Landscape

In the vibrant world of language models, OpenELM, MobiLlama, and OLMo each carve out their own niche, bringing distinct strengths to the forefront. OpenELM shines with its unique layer-wise scaling strategy, which allows for efficient parameter allocation within each layer of the transformer model. This strategy not only enhances accuracy but also reduces the need for pre-training tokens, making OpenELM a model of efficiency.

On the flip side, MobiLlama, a Small Language Model (SLM), stands out with its innovative approach. It starts from a larger model and employs a careful parameter sharing scheme to cut down both the pre-training and deployment costs. Designed with resource-constrained computing in mind, MobiLlama prioritizes performance while keeping resource demands in check.

OLMo, a truly Open Language Model, is crafted for the scientific study of these models, including their inherent biases and potential risks. It offers a robust framework for building and studying the science of language modeling. However, when pitted against OpenELM, OLMo requires more pre-training tokens, a factor that could influence resource allocation decisions.

In short, OpenELM distinguishes itself from MobiLlama and OLMo through its efficient parameter allocation and its reduced need for pre-training tokens, both of which make it a more resource-efficient model. These factors position OpenELM as a powerful and efficient language model and a significant player in the language modeling landscape.

How to Access and Use This Model?

OpenELM, being an open-source model, is readily accessible. The models are available on Hugging Face, a popular platform for AI developers, where they can be downloaded and run locally. In addition, the source code of OpenELM, along with pre-trained model weights and training recipes, is available in the GitHub repository. Detailed instructions for setting up and running the models are provided there, making it easy for developers to get started.
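As a rough illustration of local use, the sketch below loads a checkpoint with the Hugging Face `transformers` library. Several details are assumptions rather than facts from this article: the repository naming scheme (`apple/OpenELM-270M-Instruct` and so on), the need for `trust_remote_code=True`, and the use of the Llama 2 tokenizer, which is gated and requires accepting its license. The helper names are hypothetical. Check the model card before relying on any of this.

```python
def openelm_model_id(size: str = "270M", instruct: bool = True) -> str:
    """Hypothetical helper: build a Hugging Face repo id for an OpenELM checkpoint."""
    # Assumed size labels; verify them against the model listing on Hugging Face.
    known_sizes = {"270M", "450M", "1_1B", "3B"}
    if size not in known_sizes:
        raise ValueError(f"unknown size {size!r}; expected one of {sorted(known_sizes)}")
    suffix = "-Instruct" if instruct else ""
    return f"apple/OpenELM-{size}{suffix}"


def generate(prompt: str, size: str = "270M", instruct: bool = True,
             max_new_tokens: int = 64) -> str:
    """Download a checkpoint (network access required) and complete a prompt."""
    # Imported lazily so openelm_model_id works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Assumption: OpenELM reuses the Llama 2 tokenizer (a gated repository).
    tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-hf")
    # Assumption: the checkpoint ships custom modeling code, hence trust_remote_code.
    model = AutoModelForCausalLM.from_pretrained(
        openelm_model_id(size, instruct), trust_remote_code=True
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(inputs["input_ids"], max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


if __name__ == "__main__":
    print(generate("Once upon a time there was"))
```

The smallest checkpoint is used by default because it is the quickest to download and the most likely to fit in memory on a laptop.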

Note that Apple offers the weights of its OpenELM models under what it calls a 'sample code license', which may carry some restrictions on use. All relevant links for this model are provided in the 'Source' section at the end of this article.

Limitations And Future Work

While OpenELM is a significant advancement in the field of AI, it does have its limitations:

- Performance Speed: Despite OpenELM’s impressive accuracy, it’s worth noting that it is slower than OLMo in performance tests. This trade-off between accuracy and speed is a common challenge in AI and something to consider when choosing a model for specific applications.

- Implementation of RMSNorm: Apple’s researchers attribute much of this slower speed to their “naive implementation of RMSNorm,” a normalization technique used inside the model’s layers. This indicates room for improvement in the model’s implementation.

In future work, the researchers plan to explore further optimizations to improve the model’s performance and efficiency.

Conclusion

OpenELM stands as a testament to the power of efficient parameter allocation in language models. It marks a significant milestone in the evolution of open language models, providing a robust framework that tackles key challenges in AI research. The release of OpenELM empowers a diverse range of researchers and developers to contribute to the advancement of AI. As we look to the future, the potential of OpenELM and similar models in transforming the AI landscape is truly exciting.

Source

Website: https://machinelearning.apple.com/research/openelm

Research paper: https://arxiv.org/abs/2404.14619

Research document (PDF): https://arxiv.org/pdf/2404.14619

Model weights: https://huggingface.co/apple/OpenELM

GitHub Repo: https://github.com/apple/corenet
