Introduction

In the dynamic landscape of artificial intelligence, the emergence of Small Language Models (SLMs) marks a significant shift. These compact yet powerful models are redefining the boundaries of what’s possible, often outperforming their larger counterparts in specific tasks. This evolution is fueled by advancements in computational power, innovative training methodologies, and a collective ambition to democratize AI.

As we navigate through this exciting journey, a notable milestone is the development of Phi-3, a highly capable language model by Microsoft. This model is a testament to the relentless efforts of researchers and developers who are pushing the envelope in AI technology. Phi-3 is part of Microsoft’s initiative to make AI more accessible by creating a series of SLMs. These models, despite their smaller size, offer many of the capabilities found in larger language models and are trained on less data.

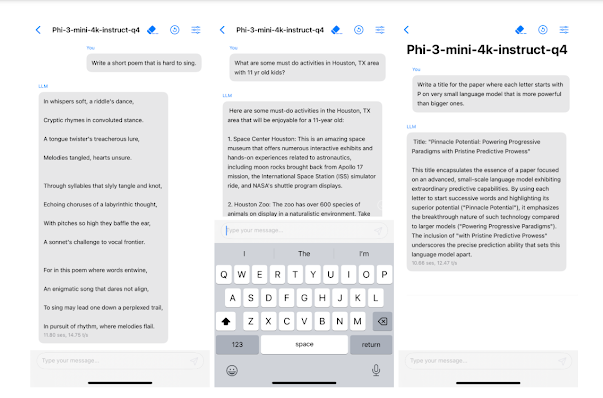

The driving force behind Phi-3 is not just about excelling in performance but also about making AI ubiquitous. It’s about creating a model that can operate on everyday devices like smartphones, thereby democratizing AI. Amidst the challenges of optimizing performance while minimizing resource consumption, Phi-3 addresses these issues head-on and propels the AI field towards new horizons.

What is Phi-3?

Phi-3 is a family of open AI models, designed to redefine the capabilities of Small Language Models (SLMs). These models, meticulously crafted to deliver high-quality performance, are not only compact but also efficient enough to operate on local devices.

Variants of Phi-3

The Phi-3 family comprises three distinct sizes: mini, small, and medium. Phi-3-mini, with its 3.8 billion parameters, serves as the entry point, delivering impressive performance for its compact size. It is available in two context-length variants, 4K and 128K tokens, providing flexibility in application. Moving up the ladder, the Phi-3-small and Phi-3-medium variants, with 7 billion and 14 billion parameters respectively, expand the family’s capabilities.
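To make these sizes concrete, the following rough sketch estimates how much memory the weights alone would need at different numeric precisions. The parameter counts come from the text above; the bit widths (16-bit and 4-bit) and the helper names `weight_memory_gb` and `memory_table` are illustrative assumptions, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Rough estimate of weight storage only. Real deployments also need memory
# for activations, the KV cache, and runtime overhead.

# Parameter counts as stated in the article.
PHI3_PARAMS = {
    "Phi-3-mini": 3_800_000_000,
    "Phi-3-small": 7_000_000_000,
    "Phi-3-medium": 14_000_000_000,
}


def weight_memory_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def memory_table(bit_widths=(16, 4)):
    """Map each model name to {bit width: estimated weight size in GB}."""
    return {
        name: {bits: weight_memory_gb(count, bits) for bits in bit_widths}
        for name, count in PHI3_PARAMS.items()
    }


for name, row in memory_table().items():
    cells = ", ".join(f"{bits}-bit: {gb:.1f} GB" for bits, gb in row.items())
    print(f"{name}: {cells}")
```

Under these assumptions, Phi-3-mini needs about 7.6 GB for 16-bit weights and about 1.9 GB at 4 bits, which is what makes running it on a phone plausible.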

Key Features of Phi-3

Phi-3’s unique features set it apart from its contemporaries:

- Size and Performance: The Phi-3-mini model, despite its compact size of 3.8 billion parameters, can outperform models twice its size.

- Context Window: Phi-3-mini is the first model in its class to support a context window of up to 128K tokens, with minimal impact on quality.

- Instruction-Tuned: Phi-3 is instruction-tuned, meaning it’s trained to follow diverse types of instructions, reflecting how people normally communicate.

Capabilities/Use Case of Phi-3

- Suitability: Small language models like Phi-3 are particularly suitable for resource-constrained environments, latency-bound scenarios, and cost-constrained use cases.

- Performance: They are designed to perform well for simpler tasks, making them more accessible and easier to use for organizations with limited resources.

- Fine-Tuning: Phi-3 models can be more easily fine-tuned to meet specific needs, enhancing their versatility.

How does Phi-3 work?

Phi-3 is a marvel of efficiency, harnessing the power of heavily filtered web data and synthetic data to deliver performance that rivals its larger counterparts. This synergy of data sources allows Phi-3 to understand and generate human-like text with a high degree of accuracy and reliability. The model’s architecture is designed with a focus on robustness, safety, and a conversational format, making it an ideal choice for a wide range of applications, from mobile assistants to complex analytical tools.

Architecture/Design

The architecture of Phi-3 is a testament to the ingenuity of modern AI design. The Phi-3-small variant exemplifies this with its 32-layer decoder structure and a hidden size of 4096, which are typical of a 7B-class model. What sets it apart is its grouped-query attention mechanism, in which four query heads share a single key/value head. Because only the keys and values are cached during generation, this sharing significantly reduces the KV cache footprint. Such a design enables Phi-3 to manage computational resources effectively while still delivering high-quality performance, making it a versatile tool for developers and a seamless experience for users.
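The effect of grouped-query attention on the KV cache can be illustrated with a little arithmetic. The sketch below uses the layer count, hidden size, and four-to-one sharing ratio given above; the number of query heads (32), the 16-bit cache precision, and the 8,192-token sequence length are assumptions made for illustration, and `kv_cache_bytes` is a hypothetical helper rather than part of any library.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence.

    The leading factor of 2 accounts for storing one key tensor and one
    value tensor in every layer.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


NUM_LAYERS = 32          # from the article
HIDDEN_SIZE = 4096       # from the article
QUERIES_PER_KEY = 4      # from the article: four queries share one key
NUM_QUERY_HEADS = 32     # assumption, not stated in the article
SEQ_LEN = 8192           # assumption, chosen only for illustration

head_dim = HIDDEN_SIZE // NUM_QUERY_HEADS          # 128 values per head
num_kv_heads = NUM_QUERY_HEADS // QUERIES_PER_KEY  # 8 shared key/value heads

# Standard multi-head attention keeps one key/value head per query head.
mha_bytes = kv_cache_bytes(NUM_LAYERS, NUM_QUERY_HEADS, head_dim, SEQ_LEN)
# Grouped-query attention keeps one key/value head per group of query heads.
gqa_bytes = kv_cache_bytes(NUM_LAYERS, num_kv_heads, head_dim, SEQ_LEN)

print(f"Multi-head KV cache:    {mha_bytes / 2**30:.1f} GiB")
print(f"Grouped-query KV cache: {gqa_bytes / 2**30:.1f} GiB")
print(f"Reduction factor:       {mha_bytes // gqa_bytes}x")
```

Whatever head count and sequence length are chosen, the cache shrinks by the sharing ratio, so four queries per key gives a fourfold reduction.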

Performance Evaluation with Other Models

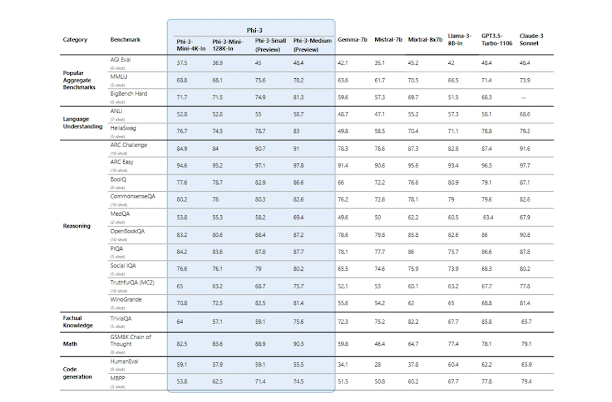

In the competitive landscape of language models, the Phi-3 series distinguishes itself by its remarkable performance. The models consistently exceed the capabilities of counterparts of similar or even greater size in diverse benchmark tests. The Phi-3-mini, for instance, impresses with its ability to surpass models with double its parameter count. The Phi-3-small and Phi-3-medium variants continue this trend, demonstrating superior performance when compared to significantly larger models, such as GPT-3.5 Turbo (GPT-3.5T).

source - https://azure.microsoft.com/en-us/blog/introducing-phi-3-redefining-whats-possible-with-slms/

The evaluation process for Phi-3 is thorough, involving side-by-side comparisons with other language models using established open-source benchmarks. These benchmarks are designed to test various aspects of reasoning, from common sense to logical deduction. The Phi-3-mini’s results are particularly noteworthy, as they are obtained using the same evaluation pipeline as other models, ensuring a fair comparison. The Phi-3 models have proven to be among the most efficient and effective SLMs on the market, solidifying their position as transformative elements in AI technology.

Phi-3’s Distinctive Edge: A Comparative Overview

Compared with competing language models, Phi-3 distinguishes itself with its instruction-tuned design and an expansive context window that can handle up to 128K tokens, a capability unmatched among the peers listed here. Mistral-7b-v0.1 stands out with its Grouped-Query Attention and Sliding-Window Attention, while Mixtral-8x7b leverages a Sparse Mixture of Experts for its unique architectural approach. Gemma 7B excels as a text-to-text, decoder-only model, adept across various text generation tasks. Meanwhile, Llama-3-instruct-8b, tuned specifically for instructions, is optimized for dialogues, drawing from the strengths of the Meta Llama 3-8B model.

Phi-3’s readiness for immediate deployment is a significant boon, offering a practical advantage for a wide array of applications. Its standout feature, the large context window, is not commonly seen in other models, providing Phi-3 with a unique edge. The Phi-3-mini, despite its smaller size, showcases remarkable efficiency, outshining models with larger parameter counts. This efficiency, coupled with versatility, positions Phi-3 as a formidable contender in the AI field, highlighting its unique advantages over competing models.

How to Access and Use This Model?

Phi-3 is readily available for exploration and use on various platforms. It can be found on Microsoft Azure AI Studio, Hugging Face, and Ollama. Each platform provides detailed instructions for local deployment, making it user-friendly and accessible. Phi-3-mini is available in two context-length variants, 4K and 128K tokens. It can also be deployed anywhere as an NVIDIA NIM microservice with a standard API interface.
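As one possible way to try the model locally, the following minimal sketch loads the 4K instruct checkpoint through the Hugging Face `transformers` library. It assumes `transformers` and `torch` are installed and that the machine has enough memory for the weights; the model ID is taken from the Hugging Face link in the source list, while the helper names `build_messages` and `generate` are hypothetical. Depending on the installed `transformers` version, loading may additionally require `trust_remote_code=True`.

```python
MODEL_ID = "microsoft/Phi-3-mini-4k-instruct"


def build_messages(question: str) -> list:
    """Wrap a single user question in the chat-message format."""
    return [{"role": "user", "content": question}]


def generate(question: str, max_new_tokens: int = 128) -> str:
    """Download the model on first use and return its reply as plain text."""
    # Imported lazily so the helper above works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # The tokenizer's chat template inserts the special tokens that the
    # instruction-tuned model expects around each message.
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)

    # Drop the prompt tokens so that only the newly generated reply is decoded.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0, prompt_length:], skip_special_tokens=True)
```

Calling `generate("Explain what a small language model is in one sentence.")` downloads several gigabytes of weights on first use. Changing `MODEL_ID` to `microsoft/Phi-3-mini-128k-instruct` selects the long-context variant.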

As an open model, Phi-3 is accessible for both commercial and non-commercial purposes, embodying the spirit of open-source development. In the coming weeks, additional models will be added to the Phi-3 family, offering customers even more flexibility across the quality-cost curve.

If you are interested in learning more about this AI model, all relevant links are provided under the ‘Source’ section at the end of this article.

Limitations and Future Work

The Phi-3 models, despite their groundbreaking performance, encounter certain constraints. Their compact nature, while advantageous in many respects, does limit their capabilities in specific scenarios. For example, the models’ smaller size means they can’t store as much factual information, which can affect their performance in knowledge-intensive tasks such as TriviaQA. Furthermore, Phi-3 is optimized for standard English, which means it may struggle with non-standard variations like slang or languages other than English.

As for future developments, Microsoft is committed to expanding the Phi-3 lineup, enhancing the range of options available to users and striking a balance between quality and cost. The upcoming additions, namely the Phi-3-small and Phi-3-medium models, will boast 7 billion and 14 billion parameters, respectively. These models are set to join the Azure AI model catalog and will be accessible through various model repositories, offering greater flexibility and power to users worldwide.

Conclusion

As we stand at the cusp of a new era in artificial intelligence, the advent of models like Phi-3 marks a significant milestone in the journey of language models. Phi-3, with its compact size and robust capabilities, challenges the notion that bigger is always better and opens up a world of possibilities for AI applications on personal devices. Phi-3’s performance, despite its limitations, is commendable and sets a benchmark for future models.

Source

Blog: https://azure.microsoft.com/en-us/blog/introducing-phi-3-redefining-whats-possible-with-slms/

Research Report: https://arxiv.org/abs/2404.14219

Technical Document: https://arxiv.org/pdf/2404.14219.pdf

Azure AI Studio: https://ai.azure.com/explore/models?selectedCollection=phi

Nvidia: https://build.nvidia.com/microsoft/phi-3-mini

Hugging Face Phi-3 128K Instruct: https://huggingface.co/microsoft/Phi-3-mini-128k-instruct

Hugging Face Phi-3 4K Instruct: https://huggingface.co/microsoft/Phi-3-mini-4k-instruct
