Introduction

Artificial Intelligence (AI) has been making strides in various fields, with multi-turn image generation being one of the most exciting areas of development. This technology allows for the creation of images in a sequential manner, where each subsequent image is influenced by the context and feedback from the previous ones. However, maintaining semantic and contextual consistency across multiple turns has been a significant challenge. This is particularly important for applications such as storytelling and interactive media, where consistency is key.

Enter TheaterGen, a framework built to address these challenges. Developed by a team of researchers affiliated with Sun Yat-sen University and Lenovo Research, TheaterGen leverages Large Language Models (LLMs) to keep multi-turn image generation consistent. Its development was driven by the limitations of existing multi-turn image generation models. The goal was a framework that integrates character management with LLMs to produce images that are not only high-quality but also contextually and semantically consistent across turns.

What is TheaterGen?

TheaterGen is an innovative framework that integrates Large Language Models (LLMs) with text-to-image (T2I) models to facilitate multi-turn image generation. It interacts with users while generating and managing a structured prompt book that holds character prompts and layouts. This allows TheaterGen to create images that are not only high-quality but also contextually and semantically consistent across multiple turns.

Key Features of TheaterGen

- Training-Free Framework: TheaterGen builds on existing LLMs and T2I models and requires no additional training, making it efficient and accessible.

- LLM Integration: It leverages the power of LLMs to manage character prompts and layouts, ensuring consistency in the generated images.

- Standardized Prompt Book: TheaterGen maintains a structured prompt book that guides the image generation process.

- Semantic and Contextual Consistency: TheaterGen significantly improves the consistency of synthesized images across turns.

Capabilities and Use Cases of TheaterGen

TheaterGen's capabilities extend to various real-world applications:

- Interactive Storytelling: TheaterGen can be used to create visually consistent stories over multiple turns.

- Media and Entertainment: It offers potential in gaming and virtual reality for creating dynamic characters.

- Educational Tools: TheaterGen is useful for creating educational content that requires visual consistency.

How Does TheaterGen Work? Architecture and Design

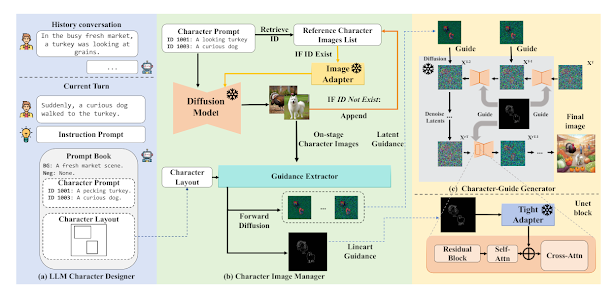

TheaterGen is an innovative framework designed for multi-turn interactive image generation. It’s a training-free model that combines the strengths of existing Large Language Models (LLMs) and Text-to-Image (T2I) models to facilitate natural interaction and diverse image generation. The architecture of TheaterGen comprises three key components:

The first component is the LLM-based character designer. This module acts as a screenwriter, interacting with users and managing a structured, character-oriented prompt book that encodes user intentions and the descriptions of multiple characters. The prompt book contains the background prompt, the negative prompt, and, for each character, a unique ID, a prompt, and a layout (bounding box). The unique ID allows TheaterGen to track different characters across multiple turns effectively.
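To make the prompt book more concrete, here is a minimal sketch of how such a structure could be represented. It is an illustration only, not TheaterGen's actual code: the class names `Character` and `PromptBook`, the `upsert` method, and the `(x, y, width, height)` layout format are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Hypothetical layout format: (x, y, width, height) in pixels.
BBox = Tuple[int, int, int, int]


@dataclass
class Character:
    char_id: str   # unique ID used to track the character across turns
    prompt: str    # text description of the character
    layout: BBox   # bounding box for the character in the current turn


@dataclass
class PromptBook:
    background_prompt: str
    negative_prompt: str
    characters: Dict[str, Character] = field(default_factory=dict)

    def upsert(self, char_id: str, prompt: str, layout: BBox) -> None:
        """Add a new character, or update an existing one with the same ID."""
        self.characters[char_id] = Character(char_id, prompt, layout)


# Turn 1: the user asks for a cat in a park.
book = PromptBook("a sunny park", "blurry, low quality")
book.upsert("cat_1", "a white cat", (40, 260, 180, 180))

# Turn 2: the user moves the cat and adds a dog.
# Reusing the ID "cat_1" updates the same character instead of creating a new one.
book.upsert("cat_1", "a white cat", (300, 260, 180, 180))
book.upsert("dog_1", "a brown dog", (60, 240, 200, 200))
```

Keying characters by ID is what lets a later turn refer to "the cat" and have the update land on the same entry.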

The second component is the character image manager. This module is responsible for generating on-stage character images according to the prompt book and extracting guidance to ensure semantic and contextual consistency in generated images. For each character, it considers two types of images: a reference image that is used to maintain contextual consistency throughout the entire interaction process, and an on-stage image that represents the character in the current stage. The character image manager also extracts two types of guidance: latent guidance and lineart guidance. Latent guidance is constructed by applying the forward diffusion process of the T2I model on the middle-state image, projecting it into the latent space of the model. Lineart guidance, on the other hand, is extracted using a lineart processor, providing stronger constraints on character positions and facilitating the preservation of finer edges and structural details.
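The forward diffusion step behind latent guidance can be sketched with the standard DDPM noising formula, z_t = sqrt(a_bar_t) * z_0 + sqrt(1 - a_bar_t) * eps. The code below is a sketch under assumptions, not TheaterGen's implementation: the linear beta schedule, the step count, and the function names `alpha_bar` and `forward_diffuse` are hypothetical choices, and a flat list of floats stands in for a real latent tensor.

```python
import math
import random


def alpha_bar(t: int, num_steps: int = 1000,
              beta_start: float = 1e-4, beta_end: float = 0.02) -> float:
    """Cumulative product of (1 - beta_s) for s = 0..t under a linear beta schedule."""
    prod = 1.0
    for s in range(t + 1):
        beta = beta_start + (beta_end - beta_start) * s / (num_steps - 1)
        prod *= 1.0 - beta
    return prod


def forward_diffuse(z0, t, rng, num_steps=1000):
    """Sample z_t from q(z_t | z_0) = N(sqrt(a_bar) * z0, (1 - a_bar) * I)."""
    a_bar = alpha_bar(t, num_steps)
    signal_scale = math.sqrt(a_bar)
    noise_scale = math.sqrt(1.0 - a_bar)
    return [signal_scale * v + noise_scale * rng.gauss(0.0, 1.0) for v in z0]


# Toy "latent" standing in for an encoded character image (assumption).
rng = random.Random(0)
z0 = [0.5, -1.0, 0.25, 0.0]
z_t = forward_diffuse(z0, t=500, rng=rng)
```

A larger `t` leaves less of the original signal in `z_t`, so the chosen timestep would control how strongly the guidance image constrains the final result.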

The third component is the character-guided generator. This module synthesizes the final image for each turn. It first concatenates the character prompts and the background prompt in the prompt book to obtain a global prompt. This global prompt contains the semantic information of the entire image, which is injected into the cross-attention modules of the T2I model along with the guidance information to control image generation. The character-guided generator utilizes both lineart guidance and latent guidance. Lineart guidance strengthens the layout constraint and incorporates more details into synthesized characters. Latent guidance, on the other hand, enhances the consistency of a character across multiple turns.
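The concatenation step can be sketched in a few lines. This is a hedged illustration: the function name `build_global_prompt`, the comma separator, and the ordering (characters first, background last) are assumptions, since the article does not specify them.

```python
from typing import Iterable


def build_global_prompt(character_prompts: Iterable[str],
                        background_prompt: str,
                        sep: str = ", ") -> str:
    """Join non-empty character prompts and the background prompt into one string."""
    parts = [p.strip() for p in character_prompts if p.strip()]
    if background_prompt.strip():
        parts.append(background_prompt.strip())
    return sep.join(parts)


global_prompt = build_global_prompt(
    ["a white cat", "a brown dog"],
    "a sunny park",
)
```

The resulting string would then be encoded by the T2I model's text encoder and passed to its cross-attention layers together with the guidance signals.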

Performance Evaluation with Other Models

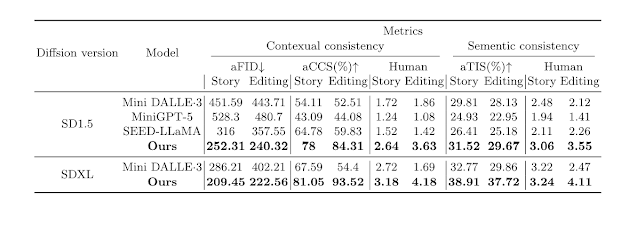

TheaterGen has been tested against other leading models in the field. The results, shown in the tables below, are quite impressive.

TheaterGen significantly outperforms the cutting-edge Mini DALLE·3 model, raising the performance bar by 21% in average character-character similarity (aCCS) and 19% in average text-image similarity (aTIS). aTIS reflects semantic consistency, that is, how well each generated image matches its text, so the 19% gain indicates closer adherence to the user's instructions in every turn.

In terms of contextual consistency, the relevant metric is aCCS, which reflects how similar the same character looks from turn to turn. The 21% gain there suggests that TheaterGen can uphold both semantic and contextual consistency in multi-turn image generation, a feat that is not easily achieved.
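Similarity metrics of this kind are commonly computed as an average cosine similarity between embedding vectors. The sketch below assumes exactly that and nothing more: the embedding model, the way pairs are formed, and the function names `cosine` and `average_similarity` are assumptions for illustration, not the benchmark's actual definition.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def average_similarity(pairs):
    """Mean cosine similarity over a list of (embedding, embedding) pairs."""
    scores = [cosine(u, v) for u, v in pairs]
    return sum(scores) / len(scores)


# Toy 2-D "embeddings" (assumption): one identical pair, one orthogonal pair.
toy_pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]
score = average_similarity(toy_pairs)
```

For a text-image metric the pairs would be (text embedding, image embedding); for a character-character metric they would be embeddings of the same character taken from different turns.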

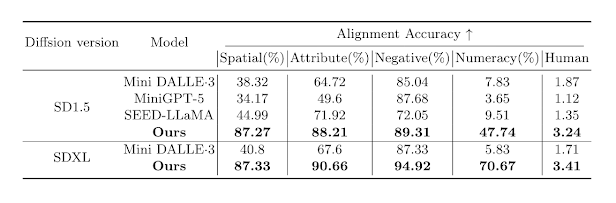

Moreover, TheaterGen not only excels in maintaining consistency across the dialogue but also outperforms other models in completion for each editing type. This is particularly evident in cases involving spatial relationships and quantities. This improvement is mainly attributed to the layout capability of the Large Language Model (LLM).

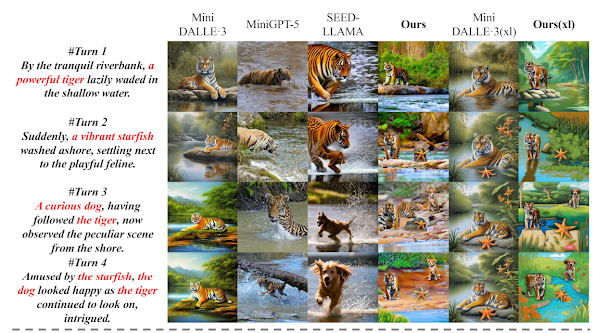

The visualizations of the chosen models' generation results show that the images generated by TheaterGen perform well in both semantic and contextual consistency. This suggests that TheaterGen can handle complex referential issues and maintain discernible features for the main characters.

Dissecting TheaterGen’s Unique Position in AI Modeling

In the dynamic field of AI, TheaterGen, Mini DALLE·3, MiniGPT-5, and SEED-LLaMA each play pivotal roles, bringing distinct methodologies and strengths to the table.

TheaterGen distinguishes itself with a novel framework that requires no training, blending Large Language Models (LLMs) with text-to-image (T2I) models to generate images over multiple interactions. Mini DALLE·3, by contrast, has carved out a niche in interactive text-to-image (iT2I) generation, fostering a natural-language dialogue between LLMs and users to produce high-quality images that closely align with textual descriptions. It enhances LLMs for iT2I by combining prompting strategies with readily available T2I models. MiniGPT-5 ventures into vision and language generation with its 'generative vokens' concept, aiming to synchronize text and image outputs. It adopts a two-stage training process that emphasizes generating multimodal data without the need for explicit descriptions. SEED-LLaMA, on the other hand, introduces a specialized SEED tokenizer that discretizes visual signals into visual tokens, capturing essential semantics while being generated under one-dimensional causal dependence.

TheaterGen’s unique proposition lies in its use of LLMs as a 'Screenwriter' orchestrating and curating a standardized prompt book for each character depicted in the generated images. This innovative strategy ensures that TheaterGen upholds semantic coherence between text and images, as well as contextual continuity for subjects across successive interactive turns. While its counterparts exhibit their own advantages, TheaterGen’s commitment to preserving semantic and contextual integrity in image synthesis positions it as a standout model in the AI landscape.

TheaterGen is available on GitHub, where users can find instructions for local use. The code is open source; consult the repository's license for the exact terms covering commercial and non-commercial use.

If you are interested in learning more about this AI model, all relevant links are provided under the 'Source' section at the end of this article.

Limitations and Future Work

TheaterGen, despite its significant advancements in multi-turn image generation, still faces challenges. Maintaining semantic consistency between images and text, as well as contextual consistency of the same subject across multiple interactive turns, remains a problem that TheaterGen improves on but does not fully solve.

Other challenges, such as handling more complex multi-turn scenarios and extending the framework to other domains, are the focus of ongoing research and development efforts.

Conclusion

TheaterGen is a training-free framework that tackles the long-standing challenge of maintaining consistency across turns in multi-turn image generation. Its development is a testament to the strides being made in the field of AI and its potential to transform how we interact with technology.

Source

Research paper: https://arxiv.org/abs/2404.18919

Research paper (PDF): https://arxiv.org/pdf/2404.18919

Project details: https://howe140.github.io/theatergen.io/

GitHub repo: https://github.com/donahowe/Theatergen
