Introduction

Have you ever wished you could create realistic images from any text description, without having to fine-tune a model for each domain or style? If so, you may be interested in a model developed by researchers at OPPO Research Institute. It was built to address the slow progress in open-domain personalized image generation that works without fine-tuning. The goal was a model that needs no test-time fine-tuning and only a single reference image to support personalized generation of one or more subjects in any domain. The model is called 'Subject-Diffusion'.

What is Subject-Diffusion?

Subject-Diffusion is an open-domain personalized image generation model that does not require test-time fine-tuning. It needs only a single reference image to support personalized generation of one or more subjects in any domain.

Key Features of Subject-Diffusion

Subject-Diffusion has several key features that make it stand out from other text-to-image generation models.

source - https://oppo-mente-lab.github.io/subject_diffusion/

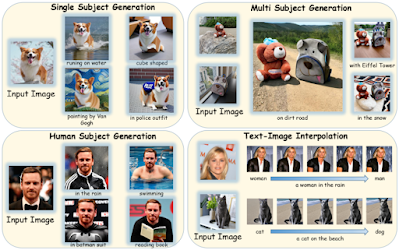

- As shown in the figure above, it can create personalized images in any domain from just one reference image, with no test-time fine-tuning. Guided by your text input, it can handle one or more subjects in the image, such as animals, flowers, landscapes, anime characters, or abstract art.

- It can generate diverse, high-quality images that match the text input by using a diffusion-based framework that iteratively refines the image, starting from random noise.

- It can generate personalized images that reflect the user’s intention and taste by letting them provide additional keywords or sentences as guidance. For example, a user who wants an image of a cat with blue eyes and fluffy fur can simply add these keywords to the original text input. The additions become part of the text condition that guides the diffusion process, so the resulting image matches the user’s expectation more closely.

Capabilities/Use Cases of Subject-Diffusion

Subject-Diffusion has many potential applications and use cases in various fields and scenarios, such as:

- Creative design: Subject-Diffusion can help designers and artists to create realistic or stylized images from their textual ideas or sketches, without having to spend time and effort on fine-tuning a model for each domain or style. For example, a designer can use Subject-Diffusion to generate an image of a logo or a poster from a brief description or a slogan.

- Education and entertainment: Subject-Diffusion can help students and teachers to visualize concepts or scenarios from texts, such as stories, poems, historical events, scientific facts, etc. For example, a student can use Subject-Diffusion to generate an image of a scene from a novel or a movie they are studying or watching.

- Personalization and customization: Subject-Diffusion can help users to create personalized images that reflect their preferences and styles, by allowing them to provide additional keywords or sentences as guidance for the image generation process. For example, a user can use Subject-Diffusion to generate an image of their dream house or car from a simple description or a list of features.

How does Subject-Diffusion work?

The Subject-Diffusion model combines text and image semantics by using coarse location and fine-grained reference image control to enhance subject fidelity and generalization. It also uses an attention control mechanism to support multi-subject generation.

The model is based on Stable Diffusion, an open-source image synthesis algorithm that improves computational efficiency by running the diffusion process in a low-dimensional latent space with the help of an auto-encoder. The auto-encoder encodes the input image into a latent representation, and the diffusion process is then applied in that latent space. A conditional UNet denoiser predicts the noise given the current timestep, the noisy latent, and the generation condition.
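The noising step and the denoiser's training target can be illustrated with a minimal sketch. It is a toy version of the standard diffusion formulation, not code from the Subject-Diffusion repository: the latent is a short list of floats, and the linear beta schedule and all function names are assumptions made for illustration.

```python
import math
import random


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule (assumed)."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(latent, noise, alpha_bar):
    """Forward diffusion: mix the clean latent with Gaussian noise."""
    signal = math.sqrt(alpha_bar)
    sigma = math.sqrt(1.0 - alpha_bar)
    return [signal * z + sigma * e for z, e in zip(latent, noise)]


def noise_prediction_loss(predicted_noise, true_noise):
    """Mean squared error between the predicted and the true noise."""
    squared = [(p - e) ** 2 for p, e in zip(predicted_noise, true_noise)]
    return sum(squared) / len(squared)


rng = random.Random(0)
latent = [0.5, -1.0, 0.25, 2.0]  # toy stand-in for an encoded image
noise = [rng.gauss(0.0, 1.0) for _ in latent]
alpha_bars = linear_alpha_bars(1000)

# A later timestep keeps less of the original signal.
noisy_latent = add_noise(latent, noise, alpha_bars[500])
```

During training, the UNet would receive `noisy_latent`, the timestep, and the condition, and its output would be compared to `noise` with `noise_prediction_loss`.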

The training framework of the Subject-Diffusion method has three parts. The first part is location control, which concatenates mask information during the noise addition process to strengthen the model’s local learning within the subject’s position box, increasing image fidelity. The second part is fine-grained reference image control, which has two components. The first component integrates segmented reference image information through special prompts and learns the weights of the blended text encoder, improving both prompt generalization and image fidelity. The second component adds a new layer to the UNet, which receives the patch embeddings of the segmented image together with position coordinate information. The third part adds extra control learning on the cross-attention map to support multi-subject learning.
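To make the third part more concrete, here is a hedged sketch of one plausible form of cross-attention control: penalizing the mean absolute difference between each subject token's attention map and that subject's segmentation mask. The exact loss used by the authors is not given in this article, so the formula, the function name, and the toy values below are all assumptions.

```python
def attention_mask_loss(attention_maps, masks):
    """Mean absolute difference between attention maps and binary masks.

    attention_maps, masks: one 2D list per subject, all with the same
    height and width (hypothetical layout chosen for this sketch).
    """
    total = 0.0
    count = 0
    for attn, mask in zip(attention_maps, masks):
        for attn_row, mask_row in zip(attn, mask):
            for a, m in zip(attn_row, mask_row):
                total += abs(a - m)
                count += 1
    return total / count


# Toy 2x3 example with a single subject occupying the top-left region.
subject_mask = [[1, 1, 0],
                [0, 0, 0]]
focused_attention = [[0.9, 0.8, 0.1],
                     [0.0, 0.1, 0.0]]
diffuse_attention = [[0.3, 0.3, 0.3],
                     [0.3, 0.3, 0.3]]

focused_loss = attention_mask_loss([focused_attention], [subject_mask])
diffuse_loss = attention_mask_loss([diffuse_attention], [subject_mask])
```

Attention that stays inside the subject's region gets a lower penalty than attention spread over the whole image, which is the behavior that helps keep several subjects from blending into each other.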

source - https://oppo-mente-lab.github.io/subject_diffusion/

As shown in the figure above, for the image latent part, the image mask is concatenated to the image latent feature. For multiple subjects, the per-subject image masks are overlaid into one. The combined latent feature is then fed to the UNet. For the text condition part, a special prompt template is constructed. Then, at the embedding layer of the text encoder, the “CLS” embedding of the segmented image replaces the corresponding token embedding. In addition, a regularization constraint is applied between the cross-attention maps of these embeddings and the shape of the actual image segmentation map. In the fusion part, patch embeddings of the segmented images and bounding box coordinate information are fused and trained as a separate layer of the UNet.
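The mask handling and the token replacement described above can be sketched with plain nested lists. This is an illustrative toy, not the authors' implementation: the helper names, the tensor layout (channels, height, width), and the placeholder position are hypothetical, and the masks are assumed to be already resized to the latent resolution.

```python
def overlay_masks(masks):
    """Union of per-subject binary masks (element-wise maximum)."""
    height, width = len(masks[0]), len(masks[0][0])
    return [[max(m[r][c] for m in masks) for c in range(width)]
            for r in range(height)]


def concat_mask_channel(latent, mask):
    """Append the mask as one extra channel: (C, H, W) -> (C + 1, H, W)."""
    return latent + [mask]


def replace_token_embeddings(token_embeddings, replacements):
    """Swap selected token embeddings for image embeddings.

    replacements maps a token position to the image "CLS" embedding
    that should take its place.
    """
    return [replacements.get(i, emb) for i, emb in enumerate(token_embeddings)]


# Image latent part: two subjects, 4-channel 2x2 toy latent.
latent = [[[0.0, 0.0], [0.0, 0.0]] for _ in range(4)]
dog_mask = [[1, 0], [0, 0]]
cat_mask = [[0, 0], [0, 1]]
combined_mask = overlay_masks([dog_mask, cat_mask])
unet_input = concat_mask_channel(latent, combined_mask)

# Text condition part: position 2 holds a placeholder token (hypothetical).
token_embeddings = [[0.1, 0.2], [0.3, 0.4], [0.0, 0.0], [0.5, 0.6]]
image_cls_embedding = [0.9, 0.8]
conditioned = replace_token_embeddings(token_embeddings, {2: image_cls_embedding})
```

Here `unet_input` carries five channels instead of four, and `conditioned` keeps every text embedding except the placeholder, which now holds the image embedding.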

Performance Evaluation

The Subject-Diffusion model’s creators demonstrated their method’s superior performance over other state-of-the-art approaches on personalized image generation in both single- and multi-subject settings, using both quantitative and qualitative measures.

For single-subject generation, Subject-Diffusion was benchmarked against methods such as DreamBooth, Re-Imagen, ELITE, and BLIP-Diffusion. The results showed that Subject-Diffusion surpassed the other methods by a large margin on DINO score, reaching 0.711 versus DreamBooth’s 0.668. Its CLIP-I and CLIP-T scores were slightly better than or comparable to those of other fine-tuning-free algorithms.

For multi-subject generation, image similarity was computed with DINO and CLIP-I, and text similarity with CLIP-T, over all of the generated images, user-provided images, and prompts. The results indicated that Subject-Diffusion had clear advantages over DreamBooth and Custom Diffusion on the DINO and CLIP-T metrics, suggesting that it captures the subject information of user-provided images more precisely and can display multiple entities in a single image at the same time.
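DINO, CLIP-I, and CLIP-T are all cosine similarities between embeddings; they differ in which encoder produces the embeddings and in what is compared (generated image against reference image, or generated image against prompt). The sketch below shows only the scoring arithmetic on made-up vectors. The function names are hypothetical, and the embeddings stand in for the output of a real DINO or CLIP encoder.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_pairwise_similarity(generated, references):
    """Average cosine similarity over all (generated, reference) pairs."""
    scores = [cosine_similarity(g, r) for g in generated for r in references]
    return sum(scores) / len(scores)


# Toy embeddings; real ones would come from an image or text encoder.
generated_embeddings = [[1.0, 0.0], [0.0, 1.0]]
reference_embeddings = [[1.0, 0.0]]
score = mean_pairwise_similarity(generated_embeddings, reference_embeddings)
```

A score closer to 1 means the generated images sit closer to the reference images (DINO, CLIP-I) or to the prompt (CLIP-T) in embedding space.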

According to the authors, extensive qualitative and quantitative results show that Subject-Diffusion outperforms other state-of-the-art frameworks in single-subject, multi-subject, and human-subject customized image generation.

How to access and use this model?

Subject-Diffusion is an open-source model that anyone interested in text-to-image generation can access and use. The model is available on GitHub, where you can find the code, data, and instructions for running it. You can also use an online demo through the project link to generate images from your own text inputs and additional keywords or sentences. Subject-Diffusion is licensed under the MIT License, which means that you can use it for both personal and commercial purposes.

If you are interested in learning more about the Subject-Diffusion model, all relevant links are provided in the 'Source' section at the end of this article.

Limitations

Subject-Diffusion is an innovative and promising model, but it also has some limitations that remain to be addressed.

- It struggles to modify attributes and accessories within user-input images, limiting the model’s versatility and applicability.

- It is likely to produce incoherent images when generating personalized images for more than two subjects.

- It can slightly increase the computational burden when generating multi-concept images.

Conclusion

Subject-Diffusion can generate diverse, high-quality images that match the text input across a wide range of domains and styles. In the authors' evaluation, it also outperforms other state-of-the-art personalized image generation methods, most clearly on subject fidelity as measured by the DINO score. Subject-Diffusion is a notable step forward for personalized image generation, as it opens up new possibilities for creative design, education, entertainment, personalization, and customization.

Source

research paper - https://arxiv.org/abs/2307.11410

research document - https://arxiv.org/pdf/2307.11410.pdf

project details - https://oppo-mente-lab.github.io/subject_diffusion/

GitHub repo - https://github.com/OPPO-Mente-Lab/Subject-Diffusion
