Introduction

Stability AI has launched a new model, the latest development in its Stable Diffusion suite of text-to-image models. The model was developed by researchers at Stability AI, the artificial intelligence startup that popularized the Stable Diffusion image generator.

The motivation behind this model was to improve upon previous versions of Stable Diffusion and produce more detailed and realistic images from text. The team at Stability AI saw room for improvement in this area and set out to create a model that could produce high-quality images with greater depth and resolution. The new model achieves this goal, producing images that are significantly improved over those of its predecessor. It is called 'SDXL 0.9'.

What is SDXL 0.9?

SDXL 0.9 is a latent diffusion model for text-to-image synthesis. It is the latest development in the Stable Diffusion text-to-image suite of models by Stability AI. The model was developed to improve upon previous versions of Stable Diffusion and produce more detailed and realistic images.
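To make "latent diffusion" concrete: the denoising runs on a compressed latent representation of the image rather than on pixels. The arithmetic below is a rough sketch of what that means at a 1024x1024 output. The downsampling factor of 8 and the 4 latent channels are assumptions carried over from earlier Stable Diffusion autoencoders, not figures stated in this article.

```python
# Assumptions (not stated in the article): the autoencoder shrinks each
# spatial side by 8 and uses 4 latent channels, as in earlier Stable Diffusion.
DOWNSAMPLE_FACTOR = 8
LATENT_CHANNELS = 4


def latent_shape(height: int, width: int) -> tuple[int, int, int]:
    """Return (channels, height, width) of the latent for an RGB image."""
    if height % DOWNSAMPLE_FACTOR or width % DOWNSAMPLE_FACTOR:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return (LATENT_CHANNELS, height // DOWNSAMPLE_FACTOR, width // DOWNSAMPLE_FACTOR)


def compression_ratio(height: int, width: int) -> float:
    """Ratio of pixel-space values (3 channels) to latent-space values."""
    channels, h, w = latent_shape(height, width)
    return (3 * height * width) / (channels * h * w)


print(latent_shape(1024, 1024))       # (4, 128, 128)
print(compression_ratio(1024, 1024))  # 48.0
```

Under these assumptions the UNet works on roughly 48 times fewer values than the final image contains, which is what keeps diffusion at this resolution tractable.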

Key Features of SDXL 0.9

Some key features of SDXL 0.9 include:

source - https://arxiv.org/pdf/2307.01952v1.pdf

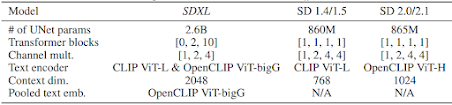

- Massively improved image and composition detail over its predecessor, as shown in the figure above.

- Two CLIP text encoders, including one of the largest OpenCLIP models trained to date (OpenCLIP ViT-G/14), which strengthen SDXL 0.9's ability to create realistic imagery with greater depth and a higher resolution of 1024x1024.

- A larger UNet backbone, more attention blocks, and a larger cross-attention context than previous versions of Stable Diffusion, allowing the model to process more information and produce more detailed and realistic images.

Together, these features allow SDXL 0.9 to generate high-quality images from text with improved detail and realism.

Capabilities/Use Case of SDXL 0.9

SDXL 0.9 shows drastically improved performance compared to previous versions of Stable Diffusion and achieves results competitive with those of black-box state-of-the-art image generators. It can be used for text-to-image synthesis, allowing users to input textual descriptions and generate corresponding images. This can be useful in a variety of applications, such as:

- Generating visual content for websites or social media posts, based on textual descriptions or keywords.

- Creating illustrations or artworks from text, such as stories, poems, or songs.

- Exploring different concepts or ideas visually, by generating images from different perspectives or angles.

- Having fun and being creative, by generating images from any text you can think of.

How does SDXL 0.9 work?

SDXL 0.9 uses a UNet backbone, a convolutional neural network architecture that was originally designed for biomedical image segmentation and has since been adapted to other image processing tasks, including image generation. Compared to previous versions of Stable Diffusion, the model incorporates more attention blocks and a larger cross-attention context, allowing for improved processing of information.

In addition to the UNet backbone, SDXL 0.9 uses a second text encoder to improve its processing of textual information. This allows the model to better understand textual descriptions and incorporate them into the image generation process.
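One way to picture how a second text encoder enlarges the cross-attention context: the SDXL paper describes concatenating the per-token outputs of the two encoders along the channel axis. The sketch below only illustrates that shape arithmetic. The encoder function is a hypothetical stand-in that returns constant vectors, and the widths (768 and 1280) and the 77-token length are taken from the paper and from CLIP conventions rather than from this article.

```python
# Widths as reported in the SDXL paper (treated as assumptions here).
CLIP_VIT_L_DIM = 768
OPENCLIP_BIGG_DIM = 1280
NUM_TOKENS = 77  # CLIP-style tokenizers pad or truncate prompts to 77 tokens


def fake_encoder_output(num_tokens: int, dim: int, fill: float) -> list[list[float]]:
    """Hypothetical stand-in for a text encoder: one `dim`-wide vector per token."""
    return [[fill] * dim for _ in range(num_tokens)]


def concat_channels(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Concatenate two per-token sequences along the channel (feature) axis."""
    if len(a) != len(b):
        raise ValueError("both encoders must produce the same number of tokens")
    return [tok_a + tok_b for tok_a, tok_b in zip(a, b)]


clip_l = fake_encoder_output(NUM_TOKENS, CLIP_VIT_L_DIM, 0.0)
open_clip = fake_encoder_output(NUM_TOKENS, OPENCLIP_BIGG_DIM, 1.0)
context = concat_channels(clip_l, open_clip)

print(len(context), len(context[0]))  # 77 2048
```

Each token the UNet attends to therefore carries features from both encoders, which is where the larger context dimension comes from.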

Performance evaluation with other Models

SDXL 0.9 is not just a minor update to Stability AI's Stable Diffusion suite of text-to-image models. It is a major leap forward in quality and realism, because it has several key advantages over older Stable Diffusion models that make it more capable of generating images from text.

source - https://arxiv.org/pdf/2307.01952v1.pdf

The comparison table in the paper linked above shows how SDXL 0.9 differs from older Stable Diffusion models in several aspects: the number of UNet parameters, transformer blocks, channel multipliers, text encoders, context dimensions, and pooled text embeddings. According to that table, SDXL 0.9 has a larger model capacity, a more advanced text encoder setup, and a larger context dimension than older Stable Diffusion models. These differences mean that SDXL 0.9 can handle more information and produce more detailed and realistic images. As a result, SDXL 0.9 outperforms older Stable Diffusion models by a large margin.

How to access and use this model?

SDXL 0.9 is now available on the Clipdrop by Stability AI platform. Stability AI API and DreamStudio customers will be able to access the model.

The code for SDXL 0.9 is available on the Stability AI GitHub repository. This repository also includes links to the model weights for SDXL 0.9, which can be used to run the model locally. In addition to the base model weights, the repository also includes links to the weights for a refinement model, which can be used to improve the visual fidelity of samples generated by SDXL 0.9 using a post-hoc image-to-image technique.

Researchers who would like to access these models can apply using their Hugging Face Account with their academic email. All relevant links related to this model are provided under the 'source' section at the end of this article.
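The two-stage flow described above (base model, then refiner applied image-to-image) can be sketched as follows. This is a minimal sketch under several assumptions: it uses the Hugging Face diffusers library rather than the code in the Stability AI repository, it assumes access to the gated weights has already been granted, and it assumes a CUDA GPU with enough memory for half-precision inference. The function name `generate` is made up for illustration.

```python
BASE_ID = "stabilityai/stable-diffusion-xl-base-0.9"
REFINER_ID = "stabilityai/stable-diffusion-xl-refiner-0.9"


def generate(prompt: str, device: str = "cuda"):
    """Run the base model, then refine its latents image-to-image."""
    # Imported lazily so this file can be loaded without the heavy dependencies.
    import torch
    from diffusers import StableDiffusionXLImg2ImgPipeline, StableDiffusionXLPipeline

    base = StableDiffusionXLPipeline.from_pretrained(
        BASE_ID, torch_dtype=torch.float16
    ).to(device)
    refiner = StableDiffusionXLImg2ImgPipeline.from_pretrained(
        REFINER_ID, torch_dtype=torch.float16
    ).to(device)

    # Stage 1: the base model denoises from text and returns latents, not pixels.
    latents = base(prompt=prompt, output_type="latent").images
    # Stage 2: the refiner takes those latents as its input image and polishes them.
    return refiner(prompt=prompt, image=latents).images[0]
```

Calling `generate("an astronaut riding a green horse")` should return a PIL image that can be written to disk with `.save(...)`. Skipping the second stage and decoding the base model's output directly is also possible, at some cost in fine detail.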

Future Work

The developers of SDXL 0.9 have identified several areas for future improvement of the model. Some of these ideas are:

- Making the model faster and easier to use by using a single-stage approach, instead of a two-stage approach that requires a refinement model.

- Making the model better at rendering legible text inside generated images by using byte-level tokenizers or scaling the model to larger sizes, which would allow it to handle more complex and diverse texts.

- Experimenting with transformer-based architectures, a type of neural network that has shown great results in natural language processing and computer vision tasks.

- Reducing the compute needed for inference and increasing sampling speed by using distillation techniques, which are methods that can compress a large model into a smaller one without losing much performance.

- Training the model within the EDM framework, which can give more control and flexibility over the sampling process.

Conclusion

In conclusion, SDXL 0.9 is an exciting development in the field of text-to-image synthesis that offers improved performance and capabilities compared to previous versions of Stable Diffusion.

source

Stability AI blog post - https://stability-ai.squarespace.com/blog/sdxl-09-stable-diffusion

Research paper - https://arxiv.org/abs/2307.01952v1

Code repo - https://github.com/Stability-AI/generative-models

Base model weights - https://huggingface.co/stabilityai/stable-diffusion-xl-base-0.9

Refiner model weights - https://huggingface.co/stabilityai/stable-diffusion-xl-refiner-0.9
