Introduction

Language modeling is one of the most important and challenging tasks in natural language processing (NLP). It involves predicting the next word or token given a sequence of previous words or tokens. Language models are widely used for various applications such as text generation, machine translation, speech recognition, text summarization, question answering, and more.

However, most of the existing language models have a major limitation: they can only handle a fixed and limited amount of context. For example, GPT-3, one of the most powerful language models to date, can only process up to 2048 tokens at a time. This means that it cannot capture the long-term dependencies and coherence of longer texts, such as books, articles, or conversations.

To overcome this limitation, a team of researchers from the University of Warsaw and Google DeepMind has developed a new language model called 'LongLLaMA'. Google DeepMind is a leading research organization in artificial intelligence (AI) and machine learning (ML), known for its groundbreaking achievements in the field. Its stated aim is to solve intelligence and create artificial general intelligence (AGI), the ability of machines to perform any intellectual task that humans can.

What is LongLLaMA?

LongLLaMA is a large language model that can handle very long contexts, far longer than the context it was trained on. This means that it can take in and process very long inputs, which helps it generate more coherent and relevant responses. Most language models have a fixed context window and can only process a limited amount of input at a time, so longer inputs have to be truncated. LongLLaMA instead keeps earlier parts of the input in a memory that later tokens can attend to.

Key Features of LongLLaMA

LongLLaMA has several unique features that make it stand out from other language models:

- It can handle very long contexts: unlike most language models, which have a fixed context window (e.g., 1024 or 2048 tokens) and must truncate anything longer, LongLLaMA can process inputs far beyond the length of its window. This allows it to capture long-term dependencies and coherence across longer texts.

- It can extrapolate far beyond its training length: LongLLaMA was trained with a context size of 8k tokens, yet in the authors' passkey retrieval test it still recovers information from inputs up to 256k tokens long. This lets it draw on the whole of a long document, not just its most recent part, when generating text.

- It is designed to be efficient: rather than widening the attention window of every layer, LongLLaMA gives only a few of its attention layers access to a memory of (key, value) pairs from earlier parts of the input, while the remaining layers keep working on a local context. The 3B-parameter model can run on a single GPU or even a CPU, and generation takes seconds to minutes depending on the length of the input and the hardware.

Capabilities/Use Cases of LongLLaMA

LongLLaMA has a wide range of potential applications and use cases in various domains and scenarios. Some examples are:

- Text generation: LongLLaMA can be used to generate long and coherent texts for various purposes, such as creative writing, storytelling, content creation, summarization, etc. For example, it can generate a novel or a short story based on a given prompt or genre, or it can generate a summary or an abstract of a long article or a book.

- Text completion: LongLLaMA can be used to complete or extend a given text by generating the missing or desired parts. For example, it can complete a sentence or a paragraph based on the previous context, or it can extend a text by adding more details or information.

- Text rewriting: LongLLaMA can be used to rewrite or paraphrase a given text by changing its style, tone, vocabulary, or structure. For example, it can rewrite a text in a different language, dialect, register, or genre, or it can paraphrase a text to avoid plagiarism or improve readability.

- Text analysis: LongLLaMA can be used to analyze or extract information from a given text by generating summaries, keywords, topics, sentiments, etc. For example, it can analyze a long text and generate a concise summary of its main points, or it can extract the keywords or topics that best describe the text.

- Text interaction: LongLLaMA can be used to interact with users or other agents by generating natural and engaging responses. For example, it can be used as a chatbot or a conversational agent that can handle long and complex dialogues with users, or it can be used as a game character or a narrator that can generate immersive and interactive stories.

How does LongLLaMA Work?

LongLLaMA is a fine-tuned version of the OpenLLaMA checkpoint that uses the Focused Transformer (FoT) technique. FoT uses a training process inspired by contrastive learning to improve the structure of the (key, value) space, which makes it possible to extend the context length. Because it works through fine-tuning, the approach can be applied to existing large-scale models to lengthen their effective context.
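The crossbatch idea behind this training can be sketched in pure Python. This is a minimal illustration under stated assumptions, not the FoT implementation: it uses single-head dot-product attention over toy 2-d keys, and the names `crossbatch_keys`, `positive_mass`, and `num_negative_docs` are hypothetical. For one document it gathers the keys a memory attention layer would see (the document's own previous and current local context as positives, contexts from other documents in the batch as negatives) and measures how much of a query's attention lands on the positives. It does not implement the training step itself.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def crossbatch_keys(batch, doc_idx, num_negative_docs):
    """Collect the keys one memory layer sees for document `doc_idx`.

    `batch[i]` is a pair (prev_keys, curr_keys) for document i.
    Positives: previous and current local context of the same document.
    Negatives: previous contexts of up to `num_negative_docs` other documents.
    Returns (keys, is_positive) as two parallel lists.
    """
    prev_keys, curr_keys = batch[doc_idx]
    keys = list(prev_keys) + list(curr_keys)
    is_positive = [True] * len(keys)
    others = [i for i in range(len(batch)) if i != doc_idx][:num_negative_docs]
    for i in others:
        neg_keys, _ = batch[i]
        keys.extend(neg_keys)
        is_positive.extend([False] * len(neg_keys))
    return keys, is_positive


def positive_mass(query, keys, is_positive):
    """Share of attention weight that a query places on positive keys."""
    weights = softmax([dot(query, k) for k in keys])
    return sum(w for w, pos in zip(weights, is_positive) if pos)


# Toy batch of two documents with 2-d keys.
batch = [
    ([[1.0, 0.0]], [[1.0, 0.0]]),  # document 0: (previous, current)
    ([[0.0, 1.0]], [[0.0, 1.0]]),  # document 1: (previous, current)
]
keys, is_positive = crossbatch_keys(batch, doc_idx=0, num_negative_docs=1)
mass = positive_mass([1.0, 0.0], keys, is_positive)
```

In this toy batch the query already matches its own document, so roughly 84% of the attention mass lands on positives. During FoT fine-tuning, gradients flowing through this kind of attention are what shape the (key, value) space so that the separation also holds for real keys, where keys from unrelated documents could otherwise distract the model.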

LongLLaMA also uses memory attention layers, which have access to an external memory during inference. The memory is populated incrementally with (key, value) pairs from the parts of the input that have already been processed. The key idea behind FoT, inspired by contrastive learning, is to expose the memory attention layers during training to (key, value) pairs from the current and previous local context of the same document (positives) and to pairs from unrelated documents (negatives), in a differentiable way. This teaches the model to tell relevant keys apart from irrelevant ones, which is what allows the context length to be extended.
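At inference time, the memory lookup can be sketched as follows. This is a simplified single-head illustration under assumptions, not LongLLaMA's code: it uses dot-product similarity, exact top-k retrieval as a stand-in for kNN search (the authors list approximate kNN search as future work), tiny toy vectors, and the hypothetical names `KVMemory` and `memory_attention`. Each processed chunk appends its (key, value) pairs to memory, and a query then attends jointly over its local context and the k best-matching memory entries.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class KVMemory:
    """Append-only store of (key, value) pairs from already-processed chunks."""

    def __init__(self):
        self.keys = []
        self.values = []

    def add(self, keys, values):
        # Called after each chunk of the input has been processed.
        self.keys.extend(keys)
        self.values.extend(values)

    def top_k(self, query, k):
        # Exact top-k by inner product (brute-force stand-in for kNN search).
        order = sorted(range(len(self.keys)),
                       key=lambda i: dot(query, self.keys[i]), reverse=True)
        chosen = order[:k]
        return [self.keys[i] for i in chosen], [self.values[i] for i in chosen]


def memory_attention(query, local_keys, local_values, memory, k):
    """Attend jointly over the local context and the k best memory entries."""
    mem_keys, mem_values = memory.top_k(query, k)
    keys = local_keys + mem_keys
    values = local_values + mem_values
    weights = softmax([dot(query, key) for key in keys])
    dim = len(values[0])
    # Weighted sum of value vectors, one output coordinate at a time.
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


# Two earlier chunks have already been written to memory.
memory = KVMemory()
memory.add([[1.0, 0.0]], [[5.0]])  # chunk 1
memory.add([[0.0, 1.0]], [[7.0]])  # chunk 2
out = memory_attention([0.0, 3.0], [[0.0, 0.0]], [[1.0]], memory, k=1)
```

Here the query retrieves the entry written by chunk 2, so the output is pulled close to that entry's value of 7 even though the chunk is no longer part of the local context.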

Performance Evaluation of LongLLaMA

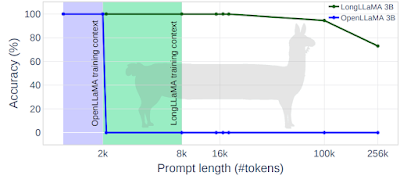

One of the ways to test how well LongLLama can handle long texts is to see if it can remember a passkey that is hidden in a long text. The passkey is a word or a phrase that is placed at the start of the text and the model has to find it after reading the whole text. LongLLama can do this very well even when the text is very long, up to 256k tokens.

source - https://arxiv.org/pdf/2307.03170.pdf

For example, LongLLaMA 3B retrieves the passkey with 94.5% accuracy when the text is 100k tokens long and with 73% accuracy when it is 256k tokens long. The original OpenLLaMA model, on the other hand, can only handle texts up to 2k tokens long, which is its training length.
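The structure of this test can be sketched in a few lines. This is a hedged illustration, not the authors' evaluation harness: the prompt wording, the filler sentence, and all function names are hypothetical, characters stand in for tokens, and two toy "models" replace a real LLM: one that sees the whole prompt and one that only sees a fixed window at the end of it.

```python
import random
import re

# Hypothetical filler and question; the authors' actual template may differ.
FILLER = "The grass is green. The sky is blue. The sun is yellow. "
QUESTION = "What is the pass key? The pass key is"


def build_passkey_prompt(passkey, n_filler):
    """Put the passkey at the start, pad with filler, ask for it at the end."""
    header = f"The pass key is {passkey}. Remember it. "
    return header + FILLER * n_filler + QUESTION


def passkey_accuracy(model, n_filler, trials=20, seed=0):
    """Fraction of trials in which the model's answer contains the passkey.

    `model` is any callable mapping a prompt string to an answer string.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        passkey = rng.randint(10000, 99999)
        answer = model(build_passkey_prompt(passkey, n_filler))
        hits += int(str(passkey) in answer)
    return hits / trials


def full_context_model(prompt):
    """Toy stand-in that sees the whole prompt and echoes its first number."""
    match = re.search(r"\d+", prompt)
    return match.group() if match else ""


def make_window_model(window_chars):
    """Toy stand-in that only sees the last `window_chars` characters,
    mimicking a fixed context window."""
    def model(prompt):
        return full_context_model(prompt[-window_chars:])
    return model


long_acc = passkey_accuracy(full_context_model, n_filler=1000)
window_acc = passkey_accuracy(make_window_model(500), n_filler=1000)
short_window_acc = passkey_accuracy(make_window_model(500), n_filler=0)
```

The windowed stand-in succeeds on short prompts but fails once the passkey falls outside its window, which is the behaviour the test is designed to expose. Replacing `full_context_model` with a function that queries a real model would turn this into a rough version of the actual evaluation.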

This is just one of the tasks that LongLLaMA was evaluated on. The research paper reports results on further tasks that test how well LongLLaMA processes and generates long texts.

How to access and use the LongLLaMA model?

If you want to try LongLLaMA for yourself, the model is hosted on Hugging Face, so you can download it and run it on your own machine. The GitHub repository explains the dependencies you need to install and the steps to follow.
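A minimal sketch of loading the model with the Hugging Face `transformers` library is shown below. It assumes `torch`, `transformers`, and `sentencepiece` are installed and uses only standard `transformers` calls; the model ID comes from the model link in the sources, while the function name `generate_with_longllama` and the generation settings are illustrative assumptions. The repository README is the authoritative reference and may recommend LongLLaMA-specific options.

```python
# Model ID from the Hugging Face model page listed under 'source'.
MODEL_ID = "syzymon/long_llama_3b"


def generate_with_longllama(prompt, max_new_tokens=64):
    """Load LongLLaMA 3B and continue `prompt` (illustrative settings)."""
    # Imported lazily so this file can be loaded without the libraries.
    import torch
    from transformers import AutoModelForCausalLM, LlamaTokenizer

    tokenizer = LlamaTokenizer.from_pretrained(MODEL_ID)
    # trust_remote_code=True lets transformers run the custom model code
    # that is shipped in the Hugging Face repository.
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype=torch.float32, trust_remote_code=True
    )
    input_ids = tokenizer(prompt, return_tensors="pt").input_ids
    with torch.no_grad():
        output_ids = model.generate(input_ids=input_ids,
                                    max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_with_longllama("My name is")` downloads the model weights on first use, so expect the first run to take a while.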

The model is open-source and you can use it for any purpose, as it is available under the Apache-2.0 license, which allows for commercial use of the software. However, it is important to carefully review the terms of the license before using the software for commercial purposes.

All relevant links related to this model are provided in the 'source' section at the end of this article.

Limitations and Future work

- The most important future research direction is scaling up memory, which presents engineering challenges such as storing more than 16M (key, value) pairs and using approximate kNN search.

- Another future research direction is scaling up crossbatch by increasing the value of d, the number of contexts whose (key, value) pairs the memory layers see during training, which calls for devices with bigger memory or multi-node training.

- Exploring other contrastive learning methods, such as hard negative mining, could also be beneficial.

- Combining FoT with other long-context methods could also be mutually beneficial.

Conclusion

LongLLaMA is a remarkable achievement and a breakthrough in language modeling. It shows that AI can overcome the limitations of fixed and limited context and generate long and meaningful texts that humans can understand and enjoy. It also opens up new possibilities and challenges for future research and development in AI.

source

Research paper - https://arxiv.org/abs/2307.03170

Research paper (PDF) - https://arxiv.org/pdf/2307.03170.pdf

GitHub repo - https://github.com/cstankonrad/long_llama

Model - https://huggingface.co/syzymon/long_llama_3b

License - https://github.com/CStanKonrad/long_llama/blob/main/LICENSE
