Introduction

Language models can generate fluent natural language text from a given context or prompt. However, most existing language models only process text inputs and generate text outputs, ignoring the information available in other modalities such as images, audio, and video.

What if we could develop a language model that handles multiple modalities and produces coherent and diverse text based on the input content? This is the objective of Macaw-LLM, a multi-modal language model that integrates image, audio, video, and text data within a unified framework.

Macaw-LLM has been developed collaboratively by a team of researchers from Tencent AI Lab, Dublin City University, and Monash University. Built upon the Transformer architecture, which has gained widespread adoption in natural language processing tasks, this model has been trained on a comprehensive multi-modal dataset called Macaw. This extensive dataset comprises diverse sources and domains, encompassing image, audio, video, and text data.

The driving force behind the development of Macaw-LLM is to enable natural language generation from inputs in different modalities and domains, thereby facilitating a range of downstream applications, including image captioning, video summarization, and audio transcription.

What is Macaw-LLM?

Macaw-LLM is a multi-modal language model that generates natural language text from image, audio, video, or text inputs. The model can also mix and match different modalities in the input sequence, for example by generating a text description for an image or a video clip, or by answering a text instruction about an audio clip.

Key Features of Macaw-LLM

Macaw-LLM has several features that distinguish it from text-only language models. Here are its key attributes:

To begin with, Macaw-LLM can handle multiple modalities in its input sequences. This means it can perform tasks such as image-to-text, video-to-text, and audio-to-text generation, and it can combine several of these inputs with a text instruction.

Furthermore, Macaw-LLM is designed to generate high-quality natural language text: relevant to the input, fluent, informative, and diverse.

Another notable aspect of Macaw-LLM is its adaptability. It can be adjusted to different domains and tasks by fine-tuning on specific datasets or by using task-specific prefixes.

Macaw-LLM also builds on pre-trained models. For example, it uses CLIP to encode images and video frames and Whisper to encode audio. Reusing these encoders means the model does not have to learn each modality from scratch.

Finally, Macaw-LLM supports a range of downstream applications, such as image captioning, video summarization, and audio transcription.

Capabilities/Use Cases of Macaw-LLM

The following use cases illustrate what Macaw-LLM is designed to do:

- Image Descriptions: The model generates vivid and diverse captions grounded in the visual content of an image.

- Concise Video Summaries: Drawing on both the visual and audio content of a video, the model generates succinct and informative summaries.

- Audio Transcripts: The model produces fluent transcriptions for audio clips, converting spoken words into written form.

- Text for Speech Synthesis: Because the cognitive module outputs text, producing spoken responses requires passing Macaw-LLM's output to a separate text-to-speech system.

Architecture of Macaw-LLM

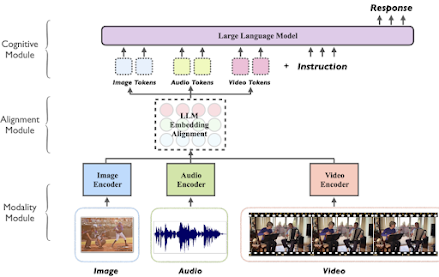

The model has three major modules: modality module, alignment module, and cognitive module. The modality module uses pre-trained models to encode different modalities into latent representations. The alignment module uses multi-head self-attention and linear layers to transform and align the latent representations from different modalities into a common space. The cognitive module uses a pre-trained language model to process the aligned representations and generate natural language texts.
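The flow through the alignment module can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the dimensions are toy values, the weights are random, and a single attention head stands in for multi-head attention. Letting the projected features attend over a stand-in LLM embedding table is one plausible reading of "a common space". The helper names `linear`, `attend`, and `align` are hypothetical.

```python
import math
import random

def linear(x, weight):
    """Project each row of x (n x d_in) with weight (d_in x d_out)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*weight)]
            for row in x]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, table):
    """Single-head scaled dot-product attention.

    The rows of `table` act as both keys and values (an assumption).
    """
    dim = len(table[0])
    output = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, key)) / math.sqrt(dim)
                  for key in table]
        weights = softmax(scores)
        output.append([sum(w * row[j] for w, row in zip(weights, table))
                       for j in range(dim)])
    return output

def align(features, weight, embedding_table):
    """Transformation step (linear) followed by alignment step (attention)."""
    return attend(linear(features, weight), embedding_table)

rng = random.Random(0)
D_ENC, D_LLM, VOCAB = 6, 4, 10  # toy sizes, far smaller than real models

# Stand-ins for the modality module's output and the LLM's embedding table.
image_features = [[rng.gauss(0, 1) for _ in range(D_ENC)] for _ in range(3)]
projection = [[rng.gauss(0, 1) for _ in range(D_LLM)] for _ in range(D_ENC)]
embedding_table = [[rng.gauss(0, 1) for _ in range(D_LLM)] for _ in range(VOCAB)]
text_embeddings = [embedding_table[i] for i in (1, 5, 7)]  # three text tokens

aligned = align(image_features, projection, embedding_table)
llm_input = aligned + text_embeddings  # aligned features precede the text
print(len(llm_input), len(llm_input[0]))  # 6 4
```

In this sketch each aligned feature is a weighted average of embedding-table rows, so it has the same width as the text embeddings and the cognitive module can consume both as one sequence.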

Macaw-LLM is designed to address the challenges of multi-modal language modeling. Two of these challenges, and the way its architecture handles them, are described below.

- Alignment Strategy of Macaw-LLM

One of the challenges of multi-modal language modeling is how to align the representations from different modalities into a common space. Macaw-LLM uses a simple and fast alignment strategy that consists of three steps: encoding, transformation, and alignment. The encoding step uses pre-trained models such as CLIP and Whisper to encode image, video, and audio data into high-level features. The transformation step uses linear layers to compress and adjust the size of the features to match the LLM embeddings. The alignment step uses element-wise operations to fuse the features from different modalities and create a unified representation space. This alignment strategy enables Macaw-LLM to efficiently integrate multi-modal data and quickly adapt to diverse data types.

- One-Stage Instruction Fine-Tuning of Macaw-LLM

Another challenge of multi-modal language modeling is how to adapt the model to different domains and tasks. Macaw-LLM simplifies the adaptation process by using a one-stage instruction fine-tuning method, which allows the model to learn from task-specific instructions. The instructions are provided as prefixes to the input sequences, and they contain information such as the input modality, the output modality, the domain, and the task. The model can use these instructions to adjust its behavior and generate appropriate outputs.
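To make the idea of instruction prefixes concrete, here is a small Python sketch of how one training example might be assembled. Everything in it is an assumption made for illustration: the placeholder tokens such as `<image>`, the "Instruction:/Response:" template, the whitespace tokenization, and the choice to compute the loss only on response tokens are not taken from the Macaw-LLM repository.

```python
from dataclasses import dataclass

@dataclass
class Example:
    modalities: tuple  # hypothetical, e.g. ("image",) or ("video", "audio")
    instruction: str
    response: str

def build_prompt(example):
    """Prefix the instruction with one placeholder per non-text input."""
    placeholders = " ".join(f"<{name}>" for name in example.modalities)
    return f"{placeholders} Instruction: {example.instruction} Response:"

def build_training_sequence(example):
    """Return tokens plus a mask that is 1 only on response tokens."""
    prompt_tokens = build_prompt(example).split()  # toy whitespace tokenizer
    response_tokens = example.response.split()
    tokens = prompt_tokens + response_tokens
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask

example = Example(("image",), "Describe the image.", "A macaw perched on a branch.")
tokens, loss_mask = build_training_sequence(example)
print(build_prompt(example))
print(sum(loss_mask), "of", len(tokens), "tokens contribute to the loss")
```

In a real system the placeholders would be replaced by the aligned modality features before the sequence reaches the language model, so a single fine-tuning pass can update the alignment layers and the language model together.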

How to access and use this model?

Macaw-LLM is available on GitHub, where you can find the code, data, pre-trained models, and instructions for using the model. You can also access an online demo where you can try out the model interactively by providing your own inputs or choosing from some examples.

The model is open-source and free for research purposes. However, if you want to use it for commercial purposes, you need to contact the authors for licensing information.

If you are interested in learning more about Macaw-LLM, all relevant links are provided in the 'source' section at the end of this article.

Limitations

Macaw-LLM is a versatile model that integrates multiple modalities to produce natural language text across diverse domains and tasks. However, it has certain limitations that call for future work:

- Evaluation: While the model showcases a few examples of its multi-modal ability, these examples may not suffice to demonstrate its capabilities accurately and comprehensively. Reported evaluation results may also not reflect the model's actual performance, especially on instruction-following tasks.

- Single-Turn Dialogue: Although the model is trained on "dialog-like" instructions, this training is restricted to single-turn interactions. Consequently, the model may not handle multi-turn dialogues well or make use of long-range context.

- Hallucination, Toxicity, and Fairness: Like other language models, Macaw-LLM may suffer from hallucination, toxicity, and fairness issues. Due to the absence of suitable evaluation suites, the model has not been thoroughly assessed in these areas.

Conclusion

Macaw-LLM is a notable step forward in natural language generation and multi-modal learning. It opens up new possibilities for applications that require generating natural language from inputs in different modalities. It also raises new challenges and directions for future research and development.

source

GitHub repo: https://github.com/lyuchenyang/Macaw-LLM

Research paper: https://arxiv.org/abs/2306.09093
