Have you ever wished for a virtual assistant that can do more than just answer your questions or play your favorite songs? A virtual assistant that can understand your goals, plan the best actions, execute them with multiple modalities, inspect the results, and learn from feedback? If yes, then you might be interested in AssistGPT, a new model developed by researchers from ShowLab at the National University of Singapore, Microsoft Research Asia, and Microsoft.

ShowLab is a research group that focuses on creating intelligent systems that can interact with humans and the physical world using natural language, vision, and robotics. Their aim is to explore how artificial intelligence can be used across various applications and domains. AssistGPT is one of their latest projects, and it aims to create a general multi-modal assistant that can handle complex tasks using natural language and visual inputs and outputs.

What is AssistGPT?

AssistGPT is a general multi-modal assistant that can plan, execute, inspect, and learn from natural language instructions and visual feedback. It can handle complex tasks that require multiple steps, such as booking a flight, ordering food, or creating a presentation. It can also adapt to different domains and scenarios by learning from user feedback and online resources.

Key Features of AssistGPT

AssistGPT has several key features that make it a powerful and versatile multi-modal assistant:

- It can handle multi-step tasks that require planning and reasoning across different domains and modalities. For example, it can book a flight, order food, create a presentation, or play a game using natural language commands and visual interfaces.

- It can adapt to new tasks by learning from user feedback and online resources. For example, it can learn new skills or concepts by asking questions or searching for information on the web. It can also learn from its own mistakes or successes by receiving positive or negative feedback from the user or the inspector.

- It can generate natural language responses that are coherent and diverse. For example, it can explain its actions, ask for clarification, provide suggestions, or express emotions using the text generation capabilities of the language model.

- It can interact with multiple devices and platforms. For example, it can use a laptop, a smartphone, a smart speaker, or a robot arm to perform different actions.

- It can reason in an interleaved language and code format, using structured code to call upon various powerful tools. For example, it can use SQL queries to access databases, HTML tags to create web pages, or Python scripts to run algorithms. It can also use natural language to explain its reasoning process, ask questions, or provide feedback.

- It can learn from different sources of data and knowledge, such as dialogues, web data, images, captions, queries, or SQL. It can use different learning techniques, such as reinforcement learning or meta-learning, to update its model parameters. For example, it can use reinforcement learning to adjust its behavior so that it maximizes a reward derived from the feedback. It can also use meta-learning to adapt its model parameters to new tasks or domains.
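
To make the interleaved language and code format mentioned in the list above more concrete, here is a minimal sketch in Python. The `Thought:` / `Action:` line format, the `Step` class, and the tool names `run_sql` and `reply` are assumptions made for illustration; they are not taken from the AssistGPT code base.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    thought: str   # natural-language reasoning that precedes the call
    tool: str      # hypothetical tool name
    argument: str  # string argument passed to the tool


# Assumed line format: Action: tool_name("argument")
ACTION_PATTERN = re.compile(r'^Action:\s*(\w+)\("(.*)"\)\s*$')


def parse_trace(trace: str) -> List[Step]:
    """Pair each structured tool call with the thought written before it."""
    steps: List[Step] = []
    thought = ""
    for raw_line in trace.strip().splitlines():
        line = raw_line.strip()
        if line.startswith("Thought:"):
            thought = line[len("Thought:"):].strip()
            continue
        match = ACTION_PATTERN.match(line)
        if match:
            steps.append(Step(thought, match.group(1), match.group(2)))
            thought = ""
    return steps


TRACE = """
Thought: I need the May orders before I can summarise them.
Action: run_sql("SELECT * FROM orders WHERE month = 5")
Thought: Now I can describe the result to the user.
Action: reply("Here is the summary of May orders.")
"""

for step in parse_trace(TRACE):
    print(f"{step.tool}: {step.argument}  (because: {step.thought})")
```

Keeping the reasoning as plain text and the tool call as a parseable line is what lets a program dispatch the call while a person can still read why it was made.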

Capabilities/Use Cases of AssistGPT

AssistGPT has many potential use cases in various domains and scenarios. Here are some examples of what it can do:

- It can help users with daily tasks and chores that involve multiple steps and modalities, such as booking a flight, ordering food, or creating a presentation through natural language commands and visual interfaces.

- It can support users' learning and education by adapting to their needs and preferences. For example, it can pick up new skills or concepts by asking questions or searching the web, and then offer suggestions or feedback in natural language.

- It can entertain users by generating diverse and creative content around their hobbies and interests. For example, it can play games with users through natural language dialogue and visual feedback. It can also generate stories, poems, songs, or images from natural language prompts.

- It can support users with their health and wellness by monitoring and improving their well-being. For example, it can track users’ vital signs, provide health tips, remind them to take medications, or connect them with doctors using natural language communication and visual sensors.

AssistGPT Architecture

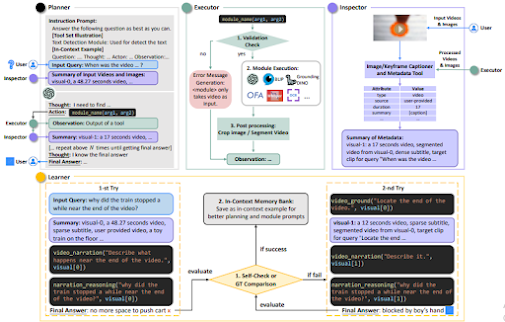

The architecture of AssistGPT comprises four core components: the planner, the executor, the inspector, and the learner. Each component incorporates sub-modules and functions, as depicted in the figure above.

AssistGPT leverages a large-scale language model to generate diverse and coherent texts. To enable multi-modal planning, execution, inspection, and learning, AssistGPT extends this model with a multi-modal encoder and a multi-modal decoder.

The planner's role is to generate a sequence of actions that can fulfill the user's goal. It encompasses two sub-modules: the instruction parser and the action planner. The instruction parser receives a natural language instruction from the user and converts it into a formal representation of the goal. Subsequently, the action planner utilizes the text generation capabilities of the language model to generate a sequence of actions that can accomplish the goal.
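
The two planner stages described above can be sketched roughly as follows. This is only an illustration: the `Goal` class, the keyword table, and the action templates are hypothetical stand-ins for the language model, which would generate the actions in the real system.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Goal:
    intent: str                                   # e.g. "book_flight"
    slots: Dict[str, str] = field(default_factory=dict)


# Hypothetical keyword table standing in for a learned instruction parser.
INTENT_KEYWORDS = {"flight": "book_flight", "food": "order_food"}

# Hypothetical templates standing in for language-model text generation.
ACTION_TEMPLATES = {
    "book_flight": ["open_booking_site", "search_flights", "select_flight", "pay"],
    "order_food": ["open_delivery_app", "choose_restaurant", "add_items", "pay"],
}


def parse_instruction(instruction: str) -> Goal:
    """Instruction parser: turn free text into a formal goal."""
    text = instruction.lower()
    for keyword, intent in INTENT_KEYWORDS.items():
        if keyword in text:
            return Goal(intent=intent, slots={"raw": instruction})
    return Goal(intent="unknown", slots={"raw": instruction})


def plan_actions(goal: Goal) -> List[str]:
    """Action planner: map the goal to an ordered list of actions."""
    return list(ACTION_TEMPLATES.get(goal.intent, []))


goal = parse_instruction("Book a flight to Singapore")
print(goal.intent, plan_actions(goal))
```

An unrecognised instruction yields an empty plan here, which is one simple way to signal that the planner should ask the user for clarification instead of guessing.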

The executor is responsible for executing the actions generated by the planner using various modalities. It comprises three sub-modules: the action parser, the modality selector, and the modality executor. The action parser transforms an action from the planner into a formal representation. The modality selector determines the most suitable modality for executing the action, such as keyboard typing, mouse clicking, or speech synthesis. To make this selection, the modality selector employs a scoring function that considers factors like modality availability, efficiency, and reliability. Finally, the modality executor carries out the action using the chosen modality on the relevant device or platform.
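
The article does not give the scoring function itself, so the following is a sketch under assumptions: availability is treated as a hard requirement, and efficiency and reliability are combined as a weighted sum. The weights, the `Modality` class, and the example values are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Modality:
    name: str
    available: bool
    efficiency: float   # assumed to lie in [0, 1]
    reliability: float  # assumed to lie in [0, 1]


def score(modality: Modality, w_eff: float = 0.4, w_rel: float = 0.6) -> float:
    """Weighted sum of efficiency and reliability; unavailable modalities never win."""
    if not modality.available:
        return float("-inf")
    return w_eff * modality.efficiency + w_rel * modality.reliability


def select_modality(candidates: List[Modality]) -> Modality:
    """Return the highest-scoring available modality."""
    usable = [m for m in candidates if m.available]
    if not usable:
        raise RuntimeError("no modality is available for this action")
    return max(usable, key=score)


candidates = [
    Modality("keyboard_typing", available=True, efficiency=0.9, reliability=0.9),
    Modality("mouse_clicking", available=True, efficiency=0.6, reliability=0.95),
    Modality("speech_synthesis", available=False, efficiency=1.0, reliability=1.0),
]
print(select_modality(candidates).name)
```

With these example numbers, speech synthesis is excluded because it is unavailable, and keyboard typing is chosen over mouse clicking because its weighted score is higher.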

The inspector's task is to scrutinize the output of the executor and verify if it aligns with the expected outcome. It consists of two sub-modules: the action parser and the action verifier. The action parser converts an action from the planner into a formal representation of the expected outcome. The action verifier compares the executor's output with the expected outcome using the text generation capabilities of the language model. In case of any mismatch or error, the action verifier may flag it or seek clarification from the user.
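
A very reduced version of the action verifier might look like the sketch below. It assumes that the expected outcome and the executor's output are both plain strings and replaces the language-model comparison with a normalized substring check; the `Verdict` class and the messages are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Verdict:
    ok: bool
    message: str


def normalize(text: str) -> str:
    """Lower-case the text and collapse runs of whitespace."""
    return " ".join(text.lower().split())


def verify(expected: str, observed: str) -> Verdict:
    """Flag a mismatch when the expected outcome is not found in the output."""
    if normalize(expected) in normalize(observed):
        return Verdict(True, "outcome matches the expectation")
    return Verdict(
        False,
        f"expected '{expected}' but observed '{observed}'; asking the user to clarify",
    )


print(verify("Booking confirmed", "  booking   CONFIRMED for flight SQ12"))
print(verify("Booking confirmed", "Payment failed"))
```

The failing case returns a message instead of raising an error, which mirrors the description above: the verifier can flag the mismatch or turn it into a clarification request.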

The learner's role is to enhance its performance by learning from user feedback and online resources. It encompasses two sub-modules: the feedback parser and the feedback updater. The feedback parser converts natural language feedback provided by the user or the inspector into a formal representation. The feedback updater takes the feedback and updates the model parameters using reinforcement learning or meta-learning techniques. The feedback updater utilizes a reward function that assesses the model's performance on the task based on the feedback received.
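
As a rough illustration of the feedback parser and the reward signal, the sketch below maps feedback text to a reward and nudges a single stored value toward it. This is a deliberately simple stand-in, not the reinforcement learning or meta-learning update described above, and the word lists, function names, and learning rate are assumptions.

```python
import re

# Hypothetical cue words; a real feedback parser would be far richer.
POSITIVE = {"good", "great", "correct", "thanks"}
NEGATIVE = {"wrong", "bad", "incorrect", "failed"}


def parse_feedback(text: str) -> float:
    """Feedback parser: map free-text feedback to a reward in {-1, 0, +1}."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    if words & NEGATIVE:
        return -1.0
    if words & POSITIVE:
        return 1.0
    return 0.0


def update_value(value: float, reward: float, learning_rate: float = 0.5) -> float:
    """Feedback updater: move the stored estimate a step toward the reward."""
    return value + learning_rate * (reward - value)


value = 0.0
for feedback in ["Great, that is correct.", "That was wrong."]:
    value = update_value(value, parse_feedback(feedback))
    print(feedback, "->", value)
```

The stored value here could be read as how much the assistant trusts a particular plan for a task: positive feedback raises it, negative feedback lowers it.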

How to access and use this model?

If you are interested in learning more about AssistGPT or trying it out yourself, you can visit its GitHub page or its demo page. You can also read the research paper for more details. The links are listed at the end of this post.

Limitations

AssistGPT can perform various tasks using natural language and vision, but it also has limitations that need to be addressed in the future. Some of them are:

- AssistGPT is still limited by the availability and quality of data and resources for different domains and tasks. For example, some domains or tasks might not have enough data or resources to train or fine-tune AssistGPT effectively.

- AssistGPT is still limited by the scalability and efficiency of its components and mechanisms. For example, some components or mechanisms might be computationally expensive or memory intensive to run or update.

Conclusion

AssistGPT is a novel and powerful model that demonstrates the potential of combining natural language understanding, planning, execution, inspection, and learning in a unified framework. It also opens up new possibilities for building and using multi-modal assistants for various applications and domains.

Sources

project details - https://showlab.github.io/assistgpt/

research paper - https://arxiv.org/abs/2306.08640

project link - https://assistgpt-project.github.io/
