Introduction

AI capability control has advanced significantly in recent years, driven by the need to ensure that Artificial Intelligence (AI) systems operate safely, responsibly, and ethically. Clearly defined boundaries, limitations, and guidelines aim to minimize the risks and negative outcomes of AI systems.

Still, the road to robust AI capability control is fraught with challenges. Biased training data, opaque decision-making processes, and exploitation by bad actors remain significant hurdles. Liquid Foundation Models (LFMs) aim to overcome these challenges with advanced computational techniques for building more reliable and trustworthy AI systems.

Who invented Liquid Foundation Models?

Liquid Foundation Models were developed by Liquid AI, a company founded by former researchers from the Massachusetts Institute of Technology's (MIT) Computer Science and Artificial Intelligence Laboratory (CSAIL). The team includes experts in dynamical systems, signal processing, and numerical linear algebra. Liquid AI's stated goal for LFMs is to build best-in-class intelligent and efficient systems at every scale, designed to process large amounts of sequential multimodal data, enable advanced reasoning, and support reliable decision-making.

What are Liquid Foundation Models?

Liquid Foundation Models are a new class of generative AI models built from first principles. According to Liquid AI, they achieve state-of-the-art performance at every scale while having a much smaller memory footprint and greater efficiency during inference. LFMs are designed to handle a wide variety of sequential data, including video, audio, text, time series, and signals.

Model Variants

Liquid Foundation Models are offered in three versions:

- LFM 1.3B: Best suited to highly resource-constrained environments.

- LFM 3.1B: Optimized for edge deployment.

- LFM 40.3B MoE: A Mixture of Experts (MoE) model designed for more complex tasks.

Key Features of Liquid Foundation Models

- Multi-Modal Support: LFMs natively support multiple data modalities such as text, audio, images, and video.

- Token Mixing & Channel Mixing: The computational units specialize in token mixing (combining information across positions in a sequence) and channel mixing (combining information across feature dimensions), which improves the model's ability to process and consolidate different types of data.

- Efficient Inference: Lower memory usage and less expensive inference than comparable transformer-based architectures.

- Adaptive Computation: LFMs use adaptive linear operators that modulate computation based on the input.

- Scalability: LFMs are optimized for performance, scalability, and efficiency across a wide range of hardware platforms.
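The source does not publish LFM layer definitions. To make the distinction between token mixing and channel mixing concrete, here is a minimal pure-Python sketch that assumes a causal exponential moving average as the token mixer and a plain linear map as the channel mixer. The names `token_mixing`, `channel_mixing`, `mixing_block` and the `decay` parameter are hypothetical illustrations, not Liquid AI's implementation.

```python
from typing import List

# Hypothetical sketch: generic token/channel mixing, not Liquid AI's actual layers.
Matrix = List[List[float]]  # shape: [num_tokens][num_channels]


def token_mixing(x: Matrix, decay: float = 0.5) -> Matrix:
    """Mix information ACROSS tokens (positions), separately for each channel.

    Uses a causal exponential moving average: each output token blends the
    current token with all earlier ones. Channels never interact here.
    """
    num_channels = len(x[0])
    state = [0.0] * num_channels
    out: Matrix = []
    for token in x:
        state = [decay * s + (1.0 - decay) * v for s, v in zip(state, token)]
        out.append(list(state))
    return out


def channel_mixing(x: Matrix, weights: Matrix) -> Matrix:
    """Mix information ACROSS channels, separately for each token.

    A plain linear map: out[t][j] = sum_i x[t][i] * weights[i][j].
    Tokens never interact here.
    """
    out_dim = len(weights[0])
    return [
        [sum(v * weights[i][j] for i, v in enumerate(token)) for j in range(out_dim)]
        for token in x
    ]


def mixing_block(x: Matrix, weights: Matrix) -> Matrix:
    """One hypothetical block: token mixing followed by channel mixing."""
    return channel_mixing(token_mixing(x), weights)
```

For `x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]`, `token_mixing(x)` returns `[[0.5, 0.0], [0.25, 0.5], [0.625, 0.75]]`: each channel is smoothed along the sequence on its own. A channel-mixing weight matrix such as `[[0.0, 1.0], [1.0, 0.0]]` instead swaps the two channels within each token without looking at neighboring tokens.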

Capabilities and Applications of LFMs

- General and Specific Knowledge: LFMs perform strongly in both general and domain-specific knowledge, which enables them to handle a wide range of tasks.

- Math and Logical Reasoning: LFMs perform well at math and logical reasoning and can solve fairly complex problems quickly. This is especially useful in engineering and data science work.

- Handling Long Tasks: LFMs handle long tasks efficiently, which makes them well suited to summarizing documents, writing articles, and powering conversational AI.

- Financial Services: LFMs can sift through large datasets to detect fraud, refine trading strategies, and uncover patterns that inform investment decisions.

- Biotechnology: LFMs can support drug development and genetic research by speeding up the analysis of complex biological data, which helps in developing new treatments.

Innovative Architecture of LFMs

Liquid Foundation Models are built differently from transformer models. They use adaptive linear operators whose behavior depends on the input data, which lets them process up to 1 million tokens in a memory-efficient way, rather than requiring memory that keeps growing with input length as in traditional Large Language Models. This helps them produce good results, adapt quickly, and use less memory.
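The source does not give the equations behind LFM's adaptive linear operators. As a rough illustration of the two ideas in the paragraph above, the sketch below assumes a gated linear recurrence, a common kind of input-dependent operator: the coefficient applied to the running state is computed from each input, and the state has a fixed size however many tokens are processed. It then contrasts that with the linear growth of a transformer's key-value (KV) cache. All function names and dimensions are hypothetical; this is not Liquid AI's design.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def adaptive_scan(xs: List[float], w_gate: float = 2.0, b_gate: float = 0.0) -> List[float]:
    """Hypothetical input-dependent linear recurrence over a 1-channel sequence.

    For each input x_t, a gate a_t = sigmoid(w_gate * x_t + b_gate) is computed
    from the input itself; the state is then updated linearly:
        h_t = a_t * h_{t-1} + (1 - a_t) * x_t
    The state h is a single number no matter how long xs is.
    """
    h = 0.0
    outputs = []
    for x in xs:
        a = sigmoid(w_gate * x + b_gate)  # operator coefficient depends on the input
        h = a * h + (1.0 - a) * x         # linear in the previous state h
        outputs.append(h)
    return outputs


def kv_cache_bytes(seq_len: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Memory for a standard transformer KV cache: grows linearly with seq_len."""
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value  # 2 = keys + values


def recurrent_state_bytes(layers: int, state_dim: int, bytes_per_value: int = 2) -> int:
    """Memory for a fixed-size recurrent state: independent of seq_len."""
    return layers * state_dim * bytes_per_value
```

With purely hypothetical dimensions (32 layers, 8 KV heads, head size 128, 16-bit values), `kv_cache_bytes(1_000_000, 32, 8, 128)` is 131,072,000,000 bytes (about 131 GB), while `recurrent_state_bytes(32, 4096)` stays at 262,144 bytes (256 KiB) regardless of input length. This only shows why recurrent-style designs can be memory-efficient on long inputs; the source does not state that LFMs use exactly this mechanism.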

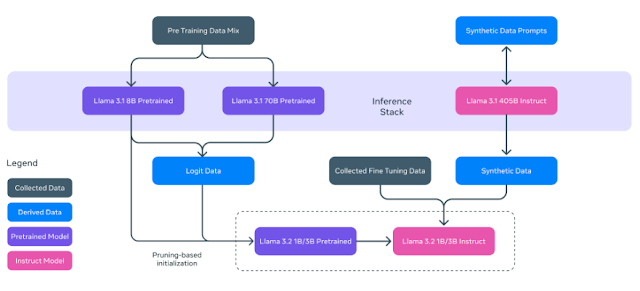

LFMs use computational units drawn from fields such as dynamical systems, signal processing, and numerical linear algebra. These units are organized into depth groups. As shown in the figure above, this architecture promotes feature sharing and gives more control over the model's computation, while also making it easier to understand how the model works. This helps ensure that AI systems operate safely, responsibly, and in line with the required ethical principles, which helps prevent unintended consequences and increases transparency in decision-making.

Instead of focusing on model scaling, Liquid AI focuses on 'featurization': the process of converting input data (in this case, text or audio) into a structured format. This allows the computational units to be customized to the nature of the data and the target hardware. The two aspects Liquid AI emphasizes most in its design are featurization and operator complexity. By controlling these aspects, Liquid AI balances model performance and efficiency, and this balance is how it maintains control over powerful AI capabilities.

Performance Evaluation

Liquid Foundation Models (LFMs) have shown top performance compared with similar-sized language models when evaluated with EleutherAI's evaluation tool. The LFM-1B model scores highest in the 1B-parameter category, excelling in benchmarks such as MMLU (5-shot), with a score of 58.55, and HellaSwag (10-shot), with a score of 67.28. This shows how effective Liquid AI's design is, especially in resource-constrained environments.

The LFM-3B model goes even further. It is more efficient than models such as Microsoft's Phi-3.5 and Google's Gemma 2 while using less memory, which makes it well suited to mobile and edge AI applications. It also outperforms other 3B-parameter models, including transformers, hybrids, and RNNs, and even beats some 7B and 13B models. With a score of 38.41 on MMLU-Pro (5-shot), it is a strong choice for mobile text-based applications.

The LFM-40B model uses a Mixture of Experts (MoE) approach with 12B activated parameters, striking a good balance between model size and output quality. It performs on par with larger models while being more efficient, thanks to its MoE design. As shown in the figure above, high scores on the MMLU-Pro task indicate that these LFMs are strong at complex reasoning and problem-solving, highlighting their potential to tackle demanding AI tasks that require advanced reasoning.
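The efficiency of LFM-40B rests on the general MoE idea that a router activates only a few experts for each input. The source does not describe LFM-40B's router, its number of experts, or how many are active per token, so the following is a generic top-k routing sketch in Python; `moe_forward`, `active_fraction`, the toy experts, and `k=2` are all hypothetical.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical sketch of generic top-k MoE routing, not LFM-40B's actual router.
Expert = Callable[[float], float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    router: Callable[[float], Sequence[float]],
    experts: Sequence[Expert],
    k: int = 2,
) -> float:
    """Generic top-k Mixture-of-Experts layer.

    1. The router scores every expert for this input.
    2. Only the k best-scoring experts are evaluated; the rest stay idle.
    3. Their outputs are combined with softmax weights over those k scores.
    """
    scores = list(router(x))
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])
    return sum(w * experts[i](x) for w, i in zip(weights, top))


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of the model's weights used per token in an MoE model."""
    return active_params / total_params
```

Applied to the figures in this article, `active_fraction(12, 40.3)` is about 0.298, so roughly 30% of LFM-40B's weights take part in each token's computation; that is where the efficiency of an MoE design comes from. All 40.3B parameters still have to be held in memory, because the router may select any expert for the next token.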

Comparison with Other Leading AI Models

Liquid AI's Liquid Foundation Models (LFMs), Microsoft's Phi-3.5, and Google's Gemma 2 are among the leading AI models, each with its own features and abilities. LFMs are built from first principles, drawing on dynamical systems, signal processing, and numerical linear algebra, which helps them perform well with less memory. Microsoft's Phi-3.5 models, including Phi-3.5-MoE, are designed to be powerful and cost-effective; they support several languages and come with robust safety features. Google's Gemma 2 models are lightweight and efficient, run well on a variety of hardware platforms, and perform well despite their small size.

LFMs stand out because they do not rely on the transformer architecture, whereas Phi-3.5 and Gemma 2 are transformer-based. This gives LFMs a smaller memory footprint, so they are efficient while still performing well. Like Phi-3.5-MoE, the largest LFM (LFM-40B) uses a Mixture-of-Experts architecture, so only a subset of its parameters is active for any given input, which further improves efficiency. Gemma 2's main focus is high performance and efficiency; it has strong safety features and can be integrated into many different frameworks.

LFMs are well suited to low-resource environments, and their novel architecture makes them capable of tasks that require advanced reasoning and decision-making. Phi-3.5 models are reliable and support many languages, making them a good fit for applications that need high reliability across multiple languages. Gemma 2 models are highly cost-efficient and suit a wide range of applications, from cloud setups to local deployments. Overall, LFMs perform best in low-resource environments, which makes them a powerful tool for many AI applications.

How to Access and Use LFMs

LFMs are available for early testing and integration through several access points, including Liquid Playground, Lambda (both its Chat UI and API), and Perplexity Labs. Note, however, that although these access points allow some experimentation and, in certain cases, deployment, the models themselves are not open-source.

Limitations and Future Work

LFMs still face challenges with zero-shot code tasks, exact numerical calculations, and time-sensitive information. They are trained primarily on English, so they may be less effective in multilingual applications. It is also unclear what their maximum token limit is.

Future work will focus on scaling model sizes, improving computational efficiency, and optimizing for additional modalities and hardware. Liquid AI also hopes to achieve better alignment with human values through human preference optimization techniques.

Conclusion

Liquid Foundation Models (LFMs) offer an alternative to transformer-based models that focuses on efficiency, scalability, and control. By combining established computational techniques with emerging computing paradigms, LFMs provide an effective and flexible solution for a wide variety of applications. Together with Liquid AI's proprietary technology, these capabilities make LFMs potentially disruptive tools capable of transforming industries.

Source

Website: https://www.liquid.ai/liquid-foundation-models

LNN: https://www.liquid.ai/blog/liquid-neural-networks-research

Disclaimer - This article is intended purely for informational purposes. It is not sponsored or endorsed by any company or organization, nor does it serve as an advertisement or promotion for any product or service. All information presented is based on publicly available resources and is subject to change. Readers are encouraged to conduct their own research and due diligence.