Introduction

Lightweight models for edge and mobile devices have gained significant traction because they reduce resource consumption while still delivering strong performance. They enable real-time processing and decision-making directly on the device, which reduces latency and improves privacy. Multimodal models, meanwhile, combine diverse types of data, such as text and images, to deliver richer, more context-aware results. This integration opens up numerous applications, including image captioning and visual question answering.

Both developments are part of a broader push to make AI more efficient, more versatile, and able to handle complex tasks accurately and quickly. Llama 3.2 embodies these improvements: it offers lightweight models for edge devices alongside multimodal vision models, giving developers a robust framework for building innovative AI applications.

What is Llama 3.2?

Llama 3.2 is a family of AI models recently released by Meta. Its smaller models are optimized to run on edge devices such as phones and tablets, which makes them well suited to private, personalized AI, while its larger vision models can work with both text and images. Together, they are useful for a wide range of tasks.

Model Variations

- Llama 3.2 1B: A small, text-only model, ideal for resource-constrained devices.

- Llama 3.2 3B: A larger text-only model that is more capable than the 1B version.

- Llama 3.2 11B Vision: A multimodal model that accepts both text and images as input.

- Llama 3.2 90B Vision: The largest model, intended for more complex tasks; it also accepts text and images.

Key Features of Llama3.2

- Multimodal Capabilities: The Vision models handle both text and images, which makes the family very versatile.

- Optimized for Edge Devices: The lightweight models run efficiently on small devices, which keeps responses fast and data private.

- Improved Performance: It follows instructions and summarizes information better than earlier versions.

- Long Context Length: The models support context lengths of up to 128K tokens, meaning they can take in and process that much text in a single pass.

- Improved Privacy: Processing data on the device itself keeps information private.

- Multilingual Support: Supports multiple languages, including English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.
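To give a feel for what a 128K-token context means on an edge device, the sketch below estimates the memory needed just to cache attention keys and values for a full context. It assumes a standard decoder-only transformer with grouped-query attention (as used in the Llama 3 family). The layer count, KV-head count, and head dimension are illustrative placeholders, not official Llama 3.2 figures; for a real checkpoint they would come from its `config.json`.

```python
# Rough KV-cache size estimate for a decoder-only transformer.
# All config values below are illustrative assumptions, not official
# Llama 3.2 figures; read the real ones from the model's config.json.


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for seq_len tokens.

    The leading factor 2 accounts for storing both a key vector and a
    value vector per token, per KV head, per layer.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical ~1B-scale configuration (assumed values for illustration).
layers, kv_heads, head_dim = 16, 8, 64
context = 128 * 1024  # "128K" read as 128 x 1024 tokens (assumption)

total = kv_cache_bytes(layers, kv_heads, head_dim, context)  # 16-bit values
print(f"{total / 2**30:.1f} GiB for a full {context}-token context")
# 4.0 GiB for a full 131072-token context
```

With these assumed values the cache alone needs about 4 GiB at 16-bit precision, on top of the model weights, so on-device applications may use much shorter contexts in practice.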

Capabilities/Use Cases of Llama 3.2

- Image Captioning: Llama 3.2 can describe images in rich detail, which makes it useful for applications such as automatic photo tagging and visual content generation.

- Visual Question Answering: Its ability to answer questions about visual data is useful in educational applications and customer service.

- Document Understanding: Llama 3.2 can read and understand documents that contain images such as charts and graphs. This is helpful for scanning complex documents, extracting relevant data, and preparing summaries.

- Personalized AI Agents: The model can serve as an on-device assistant that summarizes content in multiple languages, helping users with their daily activities through personalized, context-aware services.

- Business Insights: By interpreting visual business data, Llama 3.2 can produce recommendations for improvement. This helps businesses derive actionable insights, streamline operations, and base decisions on visual analytics.

Unique Architectural Enhancements in Llama 3.2

Llama 3.2 connects a pre-trained image encoder to a language model through a set of adapter layers known as cross-attention layers. As a result, the model handles images as fluently as text and can understand and generate natural language about complex visual information. This vision adapter is combined with the existing Llama 3.1 language model, so the model retains all of its language skills while gaining the ability to understand images.

The cross-attention layers let the language model focus on the parts of an image that are relevant to the text it is processing, which is helpful for tasks that require associating regions of an image with text. Raw image data is first processed by the image encoder, which converts it into representations the language model can work with; the cross-attention layers then feed those representations into the main language model.

The adapter is trained on a large set of image-text pairs. During this training, the image encoder's parameters are updated while the language model's parameters stay frozen, so the adapter learns to connect image and text data without degrading the language skills of Llama 3.1.
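The cross-attention idea described above can be sketched in a few lines of plain Python. This is a toy, single-head version under stated assumptions: text-token vectors act as queries, image-patch vectors act as both keys and values, there are no learned projection matrices, and the result is added back to the text stream through a tanh-gated residual connection. The gating is a common design for attaching cross-attention to a frozen language model and is an assumption here, not a detail given in the text; all names and feature values are hypothetical.

```python
import math

# Toy single-head cross-attention, pure Python. Assumptions (not Meta's code):
# text vectors are the queries, image-patch vectors serve as both keys and
# values, there are no learned projections, and the result is added back to
# the text stream through a tanh gate.

Vector = list[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def cross_attention(text: list[Vector], image: list[Vector]) -> list[Vector]:
    """Each text vector becomes a weighted average of the image-patch vectors."""
    d = len(text[0])
    out = []
    for q in text:
        # Scaled dot-product score of this text token against every patch.
        weights = softmax([dot(q, k) / math.sqrt(d) for k in image])
        # Weighted sum of patch vectors (keys double as values here).
        out.append([sum(w * v[i] for w, v in zip(weights, image)) for i in range(d)])
    return out


def gated_residual(text: list[Vector], attended: list[Vector], gate: float) -> list[Vector]:
    """Add image information to the text stream, scaled by tanh(gate)."""
    g = math.tanh(gate)
    return [[t + g * a for t, a in zip(tv, av)] for tv, av in zip(text, attended)]


text_tokens = [[1.0, 0.0], [0.0, 1.0]]                # hypothetical text features
image_patches = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical patch features

attended = cross_attention(text_tokens, image_patches)
print(attended)  # each token leans toward the patch most similar to it
print(gated_residual(text_tokens, attended, gate=0.0) == text_tokens)  # True
```

Because tanh(0) is 0, a gate set to zero leaves the text stream exactly as it was, which illustrates one way an adapter can be attached without disturbing a language model's existing behavior.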

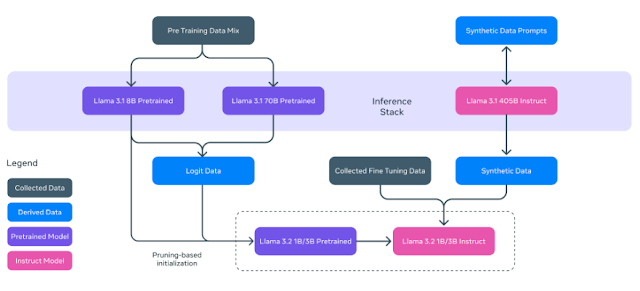

The Llama 3.2 1B and 3B models are light and efficient. They achieve this efficiency through pruning and knowledge distillation applied to the original Llama 3.1 models. The process starts with structured pruning of the Llama 3.1 8B model, which systematically removes parts of the network and adjusts the weights and gradients to shrink the model while preserving as much performance as possible. The pruned model then undergoes knowledge distillation, with the larger Llama 3.1 8B and 70B models acting as "teachers": their outputs (logits) are incorporated as targets during pre-training of the pruned model, helping it perform better than it would if trained from scratch. The result is a set of 1B and 3B models optimized for on-device deployment, balancing the constraints of smaller devices with the performance of full-sized models.
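The two compression steps can be illustrated with a minimal sketch on plain Python lists. The specific choices are assumptions for illustration, not Meta's documented method: pruning removes whole output neurons (rows of a weight matrix) with the smallest L2 norm, and distillation uses a temperature-softened KL divergence between a teacher's and a student's logits for a single token position. The function names and example values are hypothetical.

```python
import math

# Minimal sketch of structured pruning and knowledge distillation.
# Assumptions: rows with the smallest L2 norm are pruned, and the distillation
# loss is a temperature-softened KL(teacher || student) for one token.


def prune_rows(weights: list[list[float]], keep: int) -> list[list[float]]:
    """Structured pruning: drop whole rows (neurons), keeping the `keep`
    rows with the largest L2 norm, in their original order."""
    norms = [math.sqrt(sum(w * w for w in row)) for row in weights]
    ranked = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])
    return [weights[i] for i in kept]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions; the teacher's
    logits act as a soft, token-level target for the student."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # Scaling by T^2 keeps gradient magnitudes comparable across temperatures,
    # a common convention in knowledge distillation.
    return temperature ** 2 * kl


layer = [[0.1, 0.2], [3.0, 4.0], [0.0, 0.0], [1.0, 1.0]]  # hypothetical 4-neuron layer
print(prune_rows(layer, keep=2))  # [[3.0, 4.0], [1.0, 1.0]]

teacher = [2.0, 1.0, 0.1]  # hypothetical teacher logits for one token
print(distillation_loss(teacher, teacher))          # 0.0: student matches teacher
print(distillation_loss([0.0, 0.0, 0.0], teacher))  # positive: student must learn
```

In a real training loop this loss would be computed at every token position and typically combined with the usual next-token loss; with two teachers, as described above, one option (again an assumption) is to combine their per-token losses.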

Performance Evaluation with Other Models

Llama 3.2 shows strong skills in recognizing images and understanding visual information. As shown in the table below, it performs well on many benchmarks. For example, it excels at Visual Question Answering (VQA) and Document Visual Question Answering (DocVQA), which means it can understand and answer questions based on images and document layouts. Llama 3.2 also does well at image captioning, image-text retrieval (finding images that match a text), and visual grounding (connecting parts of an image to text), demonstrating a strong ability to understand and reason about images.

The lightweight 1B and 3B versions of Llama 3.2 are designed for on-device use and are competitive with similar models. In Meta's tests, the 3B model outperforms both Gemma 2 (2.6B) and Phi 3.5-mini on tasks such as following instructions, summarization, prompt rewriting, and tool use, while the 1B model is competitive with Gemma. These results suggest that Llama 3.2 balances efficiency and accuracy in text tasks, making it a good fit for devices with limited resources.

Llama 3.2 also competes well with leading models such as GPT-4o mini and Claude 3 Haiku, especially on image recognition and visual understanding tasks. It also outperforms its predecessor, Llama 3.1, in many tests. The improvement is clearest in visual and math reasoning tasks, where Llama 3.2 often outperforms models such as Claude 3 Haiku and GPT-4o mini. Overall, Llama 3.2 handles both text and image tasks with improved skill and efficiency.

How to Access and Use Llama 3.2?

Llama 3.2 is available for download from Hugging Face and from the official Llama website under the Llama 3.2 Community License Agreement. It can also be used through cloud services such as Amazon Bedrock or Google Cloud, or run locally on personal computers and edge devices. Detailed instructions and documentation are available on the Llama website and in the GitHub repository. Llama 3.2 is openly available and free to use, including commercially, subject to the terms of its license. If you would like to learn more, all relevant links are provided at the end of this article.
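When running the instruction-tuned models locally, the prompt has to follow the chat format the models were trained on. The sketch below builds that string by hand. It assumes the Llama 3-style special tokens (`<|begin_of_text|>`, `<|start_header_id|>`, `<|end_header_id|>`, `<|eot_id|>`) from Meta's published prompt format; the function name is hypothetical, and the model card of the exact checkpoint should be checked, since default system prompts can differ between releases. With Hugging Face `transformers`, `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` builds the prompt from the checkpoint's own template and is usually the safer choice.

```python
# Builds a raw prompt string in the Llama 3-style chat format.
# Assumption: the special tokens follow Meta's published Llama 3 prompt
# format; verify against the model card of the checkpoint you use.


def format_llama3_prompt(messages: list[dict[str, str]]) -> str:
    """messages: [{"role": "system" | "user" | "assistant", "content": "..."}]"""
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        # Each turn: a role header, a blank line, the content, then end-of-turn.
        parts.append(f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n")
        parts.append(msg["content"].strip() + "<|eot_id|>")
    # Open an assistant turn so the model generates the reply next.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


prompt = format_llama3_prompt([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize this note in one sentence."},
])
print(prompt)
```

A hand-written formatter like this is mainly useful with lightweight runtimes that do not apply a chat template automatically.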

Limitations and Future Work

Llama 3.2 is a major step forward for AI technology, but it still faces problems. Like other large language models, it can produce incorrect, biased, or inappropriate answers, because it learns from huge datasets that may contain inaccurate or biased data. For image-plus-text tasks, the vision capabilities of Llama 3.2 officially support only English, which limits the system's usefulness for speakers of other languages. Moreover, the model's availability is restricted in regions with strict regulations: for example, the license does not grant rights to the multimodal models to individuals or companies based in the EU.

Future work on Llama 3.2 is expected to keep focusing on safety and bias reduction, to extend the vision features to more languages, and to improve the model's ability to reason about and explain its answers.

Conclusion

Llama 3.2 is a strong AI model family: it handles text and images well and, more importantly, performs well on small devices such as phones and tablets. Its openness and customizability make it a valuable resource for developers and businesses alike.

Source

Website: https://ai.meta.com/blog/llama-3-2-connect-2024-vision-edge-mobile-devices/

Hugging Face models: https://huggingface.co/collections/meta-llama/llama-32-66f448ffc8c32f949b04c8cf

GitHub Repo: https://github.com/meta-llama/llama-models/tree/main/models/llama3_2

Disclaimer - This article is intended purely for informational purposes. It is not sponsored or endorsed by any company or organization, nor does it serve as an advertisement or promotion for any product or service. All information presented is based on publicly available resources and is subject to change. Readers are encouraged to conduct their own research and due diligence.
