Introduction

The landscape of medical knowledge is changing rapidly with the advent of large language models (LLMs). These models are moving beyond being passive repositories to become active participants in disseminating healthcare knowledge. They can break down complex medical jargon into everyday language, support research, and even aid clinical decisions. The approach has its challenges, however. General-purpose LLMs often answer domain-specific questions imprecisely, so they have to be fine-tuned to reach acceptable precision. LlamaCare is a model designed to address these problems and improve how healthcare knowledge is shared.

LlamaCare was developed by Maojun Sun of The Hong Kong Polytechnic University (Kowloon, Hong Kong, China) with collaborators, with the aim of fine-tuning LLMs on medical knowledge at the lowest possible carbon footprint. The project drew on input from expert contributors and was driven by a commitment to improving the sharing of healthcare knowledge. That goal shaped LlamaCare's design: deliver strong results while tackling the redundancy and lack of precision that other models exhibit.

What Is LlamaCare?

LlamaCare is a specialized AI tool for healthcare: a medical language model fine-tuned to improve how healthcare knowledge is shared and used. Unlike general-purpose language models, it is built to handle complex medical terminology and concepts, which makes it well suited to the healthcare industry.

Key Features of LlamaCare

The key features that set LlamaCare apart from other available models are:

- Low Carbon Emissions: After fine-tuning, LlamaCare performs comparably to models like ChatGPT while using far fewer GPU resources. This makes it both efficient and environmentally friendly.

- Extended Classification Integration (ECI): A new module that addresses the redundancy of categorical answers and improves the model's performance on classification tasks. It helps the model give clear and succinct answers.

- One-shot and Few-shot Training: LlamaCare has been trained on benchmarks such as PubMedQA and USMLE Steps 1-3. This training prepares it to handle a variety of medical questions.

Capabilities and Use Cases of LlamaCare

LlamaCare's capabilities extend to diverse practical applications:

- Automated Generation of Patient Instructions: LlamaCare uses multimodal data to improve the efficiency and quality of hospital discharge instructions. This reduces the burden on healthcare providers and helps ensure that patients receive directions that are not merely plausible but correct.

- Clinical Decision Support: LlamaCare provides healthcare providers with accurate medical information and suggestions. This supports better clinical decision-making and, in turn, better patient outcomes.

How Does LlamaCare Work? Architecture and Workflow

At its core, LlamaCare uses pre-trained encoders and modality-specific components to process diverse data types, such as text and images, for comprehensive healthcare knowledge sharing. Its architecture builds on two things: the instruction-following capabilities of large language models (LLMs) and the availability of real-world data sources. Fine-tuning these LLMs further on clinical material is intended to deliver precise, relevant medical knowledge that practitioners and patients can use as a decision-making aid.

The workflow for LlamaCare is illustrated in the figure above. The first step is data collection: the system gathers medical text at scale from highly diverse sources, including real conversations and data generated by models like ChatGPT. This data is used to fine-tune the pre-trained LLaMA model, improving its medical knowledge and problem-solving abilities. Prompting then follows three steps: search for related knowledge, summarize that knowledge, and make a decision based on the summary. This helps the model not only generate accurate information but also present it in a compact, user-friendly way.
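The three prompting steps can be sketched as a simple template builder. This is a minimal illustration, not LlamaCare's actual prompt: the function name `build_three_step_prompt`, the step wording, and the optional `example` argument are all assumptions, since the article names the steps but does not give the prompt text.

```python
# Hypothetical wording: the article names the three steps but not the exact prompt.
STEPS = (
    "Step 1 (search): list the medical knowledge relevant to the question.",
    "Step 2 (summarize): condense that knowledge into a short summary.",
    "Step 3 (decide): give a final answer based only on the summary.",
)


def build_three_step_prompt(question: str, example: str = "") -> str:
    """Assemble a prompt that walks a model through search, summarize, decide."""
    parts = ["You are a medical assistant. Work through the steps in order."]
    parts.extend(STEPS)
    if example:
        # Optional worked example, as one might supply in a one-shot setting.
        parts.append("Example:\n" + example.strip())
    parts.append("Question: " + question.strip())
    parts.append("Answer:")
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_three_step_prompt("Does aspirin reduce the risk of stroke?"))
```

Keeping the steps in a fixed order inside the prompt means the final decision is always preceded by the retrieved knowledge and its summary.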

At the same time, for classification tasks, LlamaCare uses an Extended Classification Integration (ECI) module that produces short categorical answers, which makes the process faster and more user-friendly. During training, the LLaMA-2 model and the ECI module are updated jointly, so the model learns to balance text generation and classification. This integrated approach makes LlamaCare versatile across healthcare domains: it can respond to a wide range of medical queries with anything from detailed explanations to simple yes-or-no answers.
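One common way to update a generator and a classification head jointly is to minimize a weighted sum of the two losses. The sketch below shows that idea in plain Python. It is an assumption about how such joint training could look, not the ECI module's actual objective; the function names and the `cls_weight` parameter are hypothetical.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float]) -> list:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Negative log-probability of the target index."""
    return -math.log(softmax(logits)[target])


def joint_loss(token_logits, token_targets, class_logits, class_target,
               cls_weight: float = 1.0) -> float:
    """Weighted sum of a generation loss and a classification loss.

    Assumption: the article only says the two parts are updated jointly;
    the weighted sum and cls_weight are illustrative choices.
    """
    # Mean cross-entropy over generated tokens (language-modeling term).
    lm_loss = sum(
        cross_entropy(logits, target)
        for logits, target in zip(token_logits, token_targets)
    ) / len(token_targets)
    # Single cross-entropy over the categorical answer (e.g. yes / no / maybe).
    cls_loss = cross_entropy(class_logits, class_target)
    return lm_loss + cls_weight * cls_loss
```

Because both terms feed one scalar, a single backward pass in a real framework would send gradients to the language model and the classification head at once.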

LlamaCare’s Unique Learning Techniques

Fine-Tuning - LlamaCare’s fine-tuning goes beyond general adaptation to the medical domain. It uses specialized datasets such as PubMedQA and the USMLE Step exams, which improves the model's proficiency with complex medical queries. LlamaCare also uses parameter-efficient methods such as LoRA and QLoRA to balance computational efficiency against model performance.
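To see why LoRA saves compute, recall that it freezes a weight matrix W and trains only a low-rank update, so the effective weight is W + (alpha / r) * B A, where B is d_out x r and A is r x d_in. The sketch below counts trainable parameters and computes the update with plain lists. The matrix size and rank in the usage example are illustrative assumptions, not LlamaCare's actual configuration.

```python
def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple:
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    full = d_out * d_in            # updating W directly
    lora = rank * (d_in + d_out)   # A is rank x d_in, B is d_out x rank
    return full, lora


def lora_delta(B, A, alpha: float, rank: int):
    """Compute the low-rank update (alpha / rank) * B @ A using nested lists."""
    scale = alpha / rank
    return [
        [scale * sum(B[i][k] * A[k][j] for k in range(rank))
         for j in range(len(A[0]))]
        for i in range(len(B))
    ]


if __name__ == "__main__":
    # Illustrative sizes only: a 4096 x 4096 projection with rank 8.
    full, lora = lora_param_counts(4096, 4096, 8)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

QLoRA applies the same low-rank update on top of a base model whose frozen weights are stored in 4-bit precision, which reduces memory further.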

Instruction Tuning - LlamaCare’s instruction tuning goes beyond teaching the model to follow instructions: it refines the model’s comprehension of specific medical queries. This is achieved with specialized prompts and datasets designed for the medical domain. The result is practical, straightforward information that is useful to health practitioners and patients.

Prompt Engineering - Prompt engineering in LlamaCare follows a three-step paradigm: find relevant knowledge, condense it, and make a decision based on the summary. This helps LlamaCare generate accurate information and present it in a user-friendly manner.

Performance Evaluation

LlamaCare's evaluation results are promising when compared with recent state-of-the-art models in the medical domain.

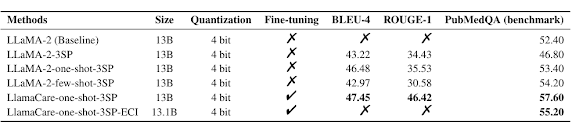

As the table above shows, LlamaCare clearly outperforms the baseline on two standard text-quality metrics, BLEU-4 and ROUGE-1. In the one-shot setting, the model reaches a BLEU-4 of 47.45 and a ROUGE-1 of 46.42 with the three-step prompt approach. This is a significant improvement over the base LLaMA-2 model and indicates that LlamaCare captures the intent of medical questions well.
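For readers unfamiliar with the metric, ROUGE-1 measures unigram overlap between a generated answer and a reference. The sketch below is a simplified F1 variant that assumes lowercase whitespace tokenization. The evaluation behind the reported numbers may use a different tokenizer or ROUGE variant, and it reports scores on a 0-100 scale rather than 0-1.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: clipped unigram overlap between two strings."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(rouge1_f1("aspirin lowers stroke risk",
                    "aspirin lowers the risk of stroke"))
```

BLEU-4 works in the opposite direction, scoring n-gram precision up to 4-grams with a brevity penalty, so the two metrics are usually reported together.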

The table above also summarizes LlamaCare's performance on the USMLE and PubMedQA benchmarks. Even when quantized to 4-bit precision, LlamaCare reaches 57.60% accuracy on PubMedQA, within about six points of ChatGPT's 63.90%. On the USMLE benchmark, LlamaCare scores 44.64, above the specialized medical models Med-Alpaca and Chat-Doctor. These results suggest that LlamaCare is computationally efficient, matching or exceeding models of similar size while using fewer computational resources.
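A rough back-of-the-envelope calculation shows why 4-bit quantization matters for memory. The sketch below estimates weight storage only. The 7-billion parameter count is an assumption for illustration (the article does not say which LLaMA-2 size is used), and the estimate ignores activations, optimizer state, and quantization metadata.

```python
def weight_memory_gib(n_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to store model weights, in GiB."""
    total_bytes = n_params * bits_per_param / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    n_params = 7e9  # assumed model size, for illustration only
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_memory_gib(n_params, bits):.2f} GiB")
```

Going from 16-bit to 4-bit weights cuts weight storage by a factor of four, which leaves more GPU memory for activations and adapter training.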

LlamaCare: A Unique Player in Medical Language Models

In the rather crowded field of large language models fine-tuned for medical tasks, LlamaCare, Med-Alpaca, and Chat-Doctor each bring distinct features and capabilities. LlamaCare introduces an Extended Classification Integration (ECI) module for handling classification problems in LLMs, with a focus on making healthcare knowledge easier to share. It also approaches ChatGPT's performance with just a 24 GB GPU.

Med-Alpaca, by contrast, is an open-source medical conversational AI model meant to enhance medical workflows, diagnostics, patient care, and education. It was fine-tuned on a dataset of over 160,000 entries designed specifically for practical medical work. Chat-Doctor is a medical chat model fine-tuned from LLaMA with medical domain knowledge. It incorporates a self-directed information retrieval mechanism that lets the model access and use real-time information from online sources such as Wikipedia, as well as data from curated offline medical databases.

So, although Med-Alpaca and Chat-Doctor have their strengths, LlamaCare stands out in two respects for healthcare knowledge sharing. Its ECI module improves performance by resolving the problem of redundant categorical answers, and its low carbon emissions make it environmentally friendly. These features differentiate LlamaCare from Med-Alpaca and Chat-Doctor and make it a significant player among medical language models.

How to Access and Use LlamaCare?

You can find the weights and datasets for LlamaCare on Hugging Face, and its official repository is on GitHub. The software is open source, so the community can freely use it and contribute to it. For more information about the model, see the links in the 'source' section at the bottom of this article.

Conclusion

LlamaCare illustrates what AI can offer for better sharing of health knowledge. Its development is a single but significant achievement in healthcare LLMs, pointing toward a future in which AI and healthcare are seamlessly integrated.
