Introduction

Artificial intelligence has made significant progress in reconstructing articulated objects from real-world data, but challenges remain. Existing models often suffer from high computational cost, low accuracy under challenging conditions, and a dependence on large training datasets. Real2Code is a recent model in this area. It aims to reconstruct articulated objects efficiently and accurately by generating code that describes them.

Real2Code was developed by researchers from Stanford University and Columbia University. It is designed to go beyond conventional object reconstruction and handle articulated objects, whose movable parts pose additional challenges. The core idea is to simplify a complex process: Real2Code generates digital twins of these objects, which can benefit applications ranging from robotics to virtual reality.

What is Real2Code?

Real2Code is an AI model that sits at the intersection of computer vision and language modeling. It interprets and reconstructs articulated objects by generating code that represents their structure and dynamics. This approach allows Real2Code to create detailed digital representations of real-world objects, bridging the gap between the physical and the digital.

Key Features of Real2Code

- Efficient Reconstruction: Real2Code leverages a code generation mechanism that streamlines the reconstruction process. This feature allows it to efficiently create detailed digital twins of articulated objects.

- High Fidelity: Real2Code ensures a high level of detail and accuracy in the representation of objects. This high fidelity makes it an invaluable tool in applications where precision is key.

- Articulation Awareness: One of the standout features of Real2Code is its ability to handle objects with multiple moving parts. This articulation awareness sets it apart from other models in the field.

- Scalability: Real2Code scales well with the number of articulated parts, making it applicable to a wide range of objects.

- Generalization: Real2Code can generalize from synthetic training data to real-world objects in unstructured environments. This ability to adapt to different data sources enhances its applicability.

Capabilities and Use Cases of Real2Code

- Robotics: Real2Code’s ability to create detailed digital twins of articulated objects can assist in creating more responsive and aware robotic systems. For instance, it could be used to improve object manipulation tasks in robotics.

- Virtual Reality: Real2Code can enable the creation of more immersive and interactive VR experiences. By providing high-fidelity digital representations of real-world objects, it can enhance the realism of virtual environments.

- 3D Modeling: Real2Code’s efficient reconstruction and high fidelity make it a powerful tool for 3D modeling. Designers and engineers could use it to quickly generate accurate 3D models of complex objects.

- Simulation: In simulation scenarios, Real2Code’s ability to handle objects with multiple moving parts can be invaluable. It could be used to create detailed simulations for training AI models or for testing automated systems.

How does Real2Code work?

Real2Code is an innovative system designed to reconstruct multi-part articulated objects from visual observations. It operates in two main steps:

Reconstruction of Object Parts’ Geometry: Real2Code uses a pre-trained DUSt3R model to obtain dense 2D-to-3D point maps. It then uses a fine-tuned 2D segmentation model, the Segment Anything Model (SAM), to perform part-level segmentation, and projects the resulting masks onto the point maps to obtain segmented 3D point clouds. A learned shape-completion model takes these partial point clouds as input and predicts a dense occupancy field, which is used for part-level mesh extraction. This approach is category-agnostic and can handle objects with an arbitrary number of parts.
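As a rough illustration of the projection step described above, the sketch below groups a per-pixel 3D point map by a part-label mask to form one point cloud per part. It is a toy under stated assumptions, not the Real2Code implementation: the function name `split_points_by_part`, the nested-list data layout, and the use of label 0 for background are all hypothetical, and no DUSt3R or SAM API is called.

```python
from collections import defaultdict


def split_points_by_part(point_map, part_mask, background=0):
    """Group per-pixel 3D points by the part label found at the same pixel.

    point_map: H x W nested list of (x, y, z) tuples (assumed layout).
    part_mask: H x W nested list of integer part labels (assumed layout).
    Returns a dict mapping each part label to its list of 3D points.
    """
    if len(point_map) != len(part_mask):
        raise ValueError("point map and mask must have the same height")
    clouds = defaultdict(list)
    for row_points, row_labels in zip(point_map, part_mask):
        if len(row_points) != len(row_labels):
            raise ValueError("point map and mask must have the same width")
        for point, label in zip(row_points, row_labels):
            if label != background:  # skip pixels that belong to no part
                clouds[label].append(tuple(point))
    return dict(clouds)


# Toy 2 x 3 "image": every pixel has a 3D point and a part label.
point_map = [
    [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.2, 0.0, 1.1)],
    [(0.0, 0.1, 1.0), (0.1, 0.1, 1.2), (0.2, 0.1, 1.1)],
]
part_mask = [
    [1, 1, 2],
    [0, 2, 2],
]
clouds = split_points_by_part(point_map, part_mask)
```

Each resulting point cloud is partial, since it only covers the surfaces visible in the image, which is why a shape-completion stage follows in the pipeline described above.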

Joint Estimation via Code Generation: The object parts are represented as oriented bounding boxes (OBBs), which are passed to a fine-tuned large language model (LLM) that predicts joint articulation as code. The LLM takes a list of part OBBs as input and outputs a list of joint predictions. This approach scales well with the complexity of an object’s kinematic structure, and the generated code can be executed directly in simulation.
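To make the "OBBs in, joints out" interface more concrete, here is a minimal sketch of how part OBBs might be serialized into text for an LLM and how a code-like reply might be parsed back. Everything specific here is an assumption for illustration: the `OBB` fields, the `bboxes`/`joints` text format, the joint keys (`part`, `type`, `axis_index`), and the type names are hypothetical and are not taken from the Real2Code codebase. The LLM call itself is replaced by a hard-coded stand-in string.

```python
import ast
import json
from dataclasses import dataclass

JOINT_TYPES = {"hinge", "slide"}  # hypothetical joint type names


@dataclass
class OBB:
    center: tuple  # (x, y, z) of the box center
    extent: tuple  # side lengths along the box's own axes
    axes: tuple    # three unit vectors giving the box orientation


def format_obbs(obbs, ndigits=2):
    """Serialize OBBs as a compact, code-like text block (assumed format)."""
    lines = ["bboxes = ["]
    for index, box in enumerate(obbs):
        entry = {
            "id": index,
            "center": [round(v, ndigits) for v in box.center],
            "extent": [round(v, ndigits) for v in box.extent],
            "axes": [[round(v, ndigits) for v in axis] for axis in box.axes],
        }
        lines.append("    " + json.dumps(entry) + ",")
    lines.append("]")
    return "\n".join(lines)


def parse_joints(llm_output):
    """Parse a reply of the form 'joints = [{...}, ...]' into a list of dicts."""
    _, sep, rhs = llm_output.partition("=")
    if not sep:
        raise ValueError("expected an assignment such as 'joints = [...]'")
    joints = ast.literal_eval(rhs.strip())  # literals only, no code execution
    for joint in joints:
        if joint["type"] not in JOINT_TYPES:
            raise ValueError(f"unknown joint type: {joint['type']!r}")
        if joint["axis_index"] not in (0, 1, 2):
            raise ValueError("axis_index must select one of the three OBB axes")
    return joints


identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
obbs = [
    OBB(center=(0.0, 0.0, 0.5), extent=(1.0, 0.6, 1.0), axes=identity),    # body
    OBB(center=(0.5, 0.0, 0.5), extent=(0.05, 0.6, 1.0), axes=identity),   # door
]
prompt = format_obbs(obbs)

# Stand-in for an LLM reply; a real system would generate this text.
reply = 'joints = [{"part": 1, "type": "hinge", "axis_index": 2}]'
joints = parse_joints(reply)
```

Because both the input and the output are short lists with one entry per part, the text grows linearly with the number of parts, which fits the scaling behaviour described above.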

By leveraging pre-trained vision and language models, Real2Code can generalize from synthetic training data to real-world objects in unstructured environments. The OBB-based representation is compact and precise, and it effectively converts joint prediction from a regression task into an easier classification task for the LLM. This makes Real2Code a powerful tool for creating digital twins of objects in simulation, enabling various downstream applications.
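One plausible reading of the regression-to-classification remark is that, instead of predicting a continuous joint axis, the model only has to pick which of a part's three OBB axes the joint is aligned with. The sketch below shows that encoding and decoding under this assumption; the function names and the (index, sign) label format are hypothetical and are not claimed to match the paper's exact formulation.

```python
import math


def normalize(vector):
    """Return the unit vector pointing in the same direction as `vector`."""
    norm = math.sqrt(sum(c * c for c in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return tuple(c / norm for c in vector)


def axis_to_class(joint_axis, obb_axes):
    """Map a continuous joint axis to a discrete (index, sign) label.

    The label names the OBB axis that is best aligned with the joint axis,
    plus whether the joint axis points along it (+1) or against it (-1).
    """
    unit = normalize(joint_axis)
    dots = [sum(a * b for a, b in zip(unit, normalize(axis))) for axis in obb_axes]
    index = max(range(len(dots)), key=lambda i: abs(dots[i]))
    sign = 1 if dots[index] >= 0 else -1
    return index, sign


def class_to_axis(index, sign, obb_axes):
    """Recover a joint axis direction from a discrete (index, sign) label."""
    return tuple(sign * c for c in normalize(obb_axes[index]))


obb_axes = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
noisy_axis = (0.05, -0.02, -0.99)  # a nearly vertical, downward-pointing axis

label = axis_to_class(noisy_axis, obb_axes)
decoded = class_to_axis(*label, obb_axes)
```

The label space here has only six options per part (three axes, two signs), which is a far easier target for a language model than three free-floating real numbers.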

Performance Evaluation with Other Models

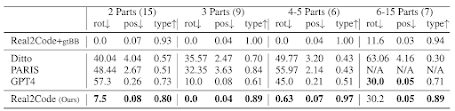

The performance evaluation of Real2Code, as compared to other models, demonstrates its superior capabilities in handling complex kinematic structures.

As the table above shows, Real2Code consistently outperforms baseline methods across objects with varying kinematic structures. Notably, for objects with four or more moving parts, Real2Code accurately predicts joints, whereas baseline methods fail. This is a significant achievement, considering the complexity and diversity of the objects involved.

The figure above further illustrates the qualitative results, comparing Real2Code to baseline methods on objects of varying kinematic complexity. While all methods can handle the simpler laptop articulation, baseline methods struggle as the number of object parts increases. In contrast, Real2Code reconstructs objects much more accurately, even a ten-part multi-drawer table. This demonstrates Real2Code’s robustness in handling objects of varying complexity.

Comparing Real2Code, PARIS, and Ditto

Real2Code, PARIS, and Ditto all reconstruct articulated objects, but they differ in their inputs and methods. Real2Code works from visual observations, using an image segmentation model and a shape completion model for part geometry reconstruction, and a fine-tuned large language model (LLM) for joint articulation prediction. PARIS works with two sets of multi-view images of an object in two static articulation states, decoupling the movable part from the static part to reconstruct shape and appearance while predicting the motion parameters. Ditto, in this context, is a method that builds digital twins of articulated objects from observations taken before and after an interaction, jointly predicting part-level geometry and articulation. It should not be confused with the unrelated entity matching system of the same name, which checks whether two data entries refer to the same real-world entity.

In terms of capabilities, Real2Code is set apart by its ability to scale with the number of articulated parts and to generalize from synthetic training data to real-world objects in unstructured environments. PARIS is reported by its authors to generalize better across object categories than its own baselines and to outperform prior work that is given 3D point clouds as input. Ditto relies on an interaction that changes the object’s articulation state between its two observations.

So, while all three models have their strengths, Real2Code sets itself apart by reconstructing articulated objects via code generation. Its ability to efficiently and accurately reconstruct complex articulated objects from visual observations makes it particularly useful for objects with many moving parts. The choice between these models ultimately depends on the specific requirements of the task, including the kind of input data available and the number of parts involved. PARIS or Ditto may suit settings where their specific inputs, such as two articulation states or before-and-after interaction observations, are available.

Access and Usage

Real2Code is accessible through its GitHub repository, where users can find detailed instructions for local deployment and online demos. The model is open-source, fostering a community of developers and researchers to contribute and enhance its capabilities.

If you would like to read more about this model, all sources are listed in the 'Source' section at the end of this article.

Limitations

While Real2Code has made significant strides in the reconstruction of articulated objects, there are still challenges to be addressed:

- Single Object Focus: Real2Code currently only considers single objects with many parts. Extending it to multiple object scenes would require additional object detection and preprocessing.

- Limited Joint Parameter Prediction: Real2Code only predicts joint parameters in terms of their type, position, and axis. Inferring other joint parameters, such as joint range and friction, would require additional multi-step interactions and observations.

- Sensitivity to Segmentation Errors: The accuracy of articulation prediction is sensitive to failures in the initial 2D image segmentation module. Incorrect segmentations can hinder the model’s reasoning about object structure. This could potentially be improved by providing human corrective feedback.
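To illustrate what the three predicted joint parameters (type, position, and axis) are enough to express, the sketch below moves a single point according to a joint. It is a generic kinematics example rather than Real2Code code: the function name `articulate` and the type names `"hinge"` and `"slide"` are hypothetical. Hinge motion uses Rodrigues' rotation formula about the axis passing through the joint position.

```python
import math


def articulate(point, joint_type, position, axis, amount):
    """Move `point` according to a joint.

    joint_type: "hinge" (amount is an angle in radians) or
                "slide" (amount is a distance along the axis).
    position:   a point the joint axis passes through (used by hinges).
    axis:       the joint axis direction (need not be unit length).
    """
    norm = math.sqrt(sum(c * c for c in axis))
    if norm == 0:
        raise ValueError("joint axis must be non-zero")
    k = tuple(c / norm for c in axis)

    if joint_type == "slide":
        return tuple(p + amount * c for p, c in zip(point, k))

    if joint_type == "hinge":
        # Rodrigues' formula, applied relative to the joint position.
        v = tuple(p - o for p, o in zip(point, position))
        cos_t, sin_t = math.cos(amount), math.sin(amount)
        cross = (
            k[1] * v[2] - k[2] * v[1],
            k[2] * v[0] - k[0] * v[2],
            k[0] * v[1] - k[1] * v[0],
        )
        dot = sum(a * b for a, b in zip(k, v))
        rotated = tuple(
            v[i] * cos_t + cross[i] * sin_t + k[i] * dot * (1 - cos_t)
            for i in range(3)
        )
        return tuple(r + o for r, o in zip(rotated, position))

    raise ValueError(f"unknown joint type: {joint_type!r}")


# A door corner swinging a quarter turn about a vertical hinge at the origin.
swung = articulate((1.0, 0.0, 0.0), "hinge", (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), math.pi / 2)

# A drawer corner sliding 0.5 units along the z direction.
slid = articulate((1.0, 1.0, 1.0), "slide", (0.0, 0.0, 0.0), (0.0, 0.0, 2.0), 0.5)
```

Note that nothing in this function limits `amount`: without a predicted joint range, a door could swing through its own frame, which is the kind of gap the joint-parameter limitation above describes.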

Conclusion

Real2Code is a significant step forward for articulated object reconstruction. Its code generation approach opens the possibility of producing more accurate digital models and leaves considerable room for further progress in this area. Like all models, however, it has limitations and scope for improvement.

Source

Research paper: https://arxiv.org/abs/2406.08474

Research paper (PDF): https://arxiv.org/pdf/2406.08474

Project details: https://real2code.github.io/

GitHub Repo: https://github.com/MandiZhao/real2code
