Introduction

Artificial intelligence, particularly in the realm of language agents, is advancing rapidly thanks to neural language models. The progress is tremendous, changing how we interact with technology as the field moves toward artificial general intelligence. Yet challenges remain, including task-specific limitations, the complexity of task planning, and personalization.

Husky is an open-source language agent built for long-horizon reasoning, focusing primarily on complex, explicit, multi-step reasoning tasks. It aims to move AI toward more expressive, compositional models. Husky was developed by researchers from the University of Washington, Meta AI, and AI2, who also introduced HuskyQA, a new evaluation set that stress-tests language agents on mixed-tool reasoning.

The motivation behind Husky is to build a holistic language agent that can handle demanding numerical, tabular, and knowledge-based reasoning tasks by reasoning over a single, unified action space. This makes Husky versatile and able to handle heavy reasoning across different domains.

What is Husky?

Husky is a comprehensive, open-source language agent that stands out in its ability to handle complex, multi-step reasoning tasks. It operates within a unified action space, effectively addressing tasks that involve numerical, tabular, and knowledge-based reasoning. This unique approach allows Husky to navigate the challenges that often accompany these diverse tasks.

Key Features of Husky

- Unified Action Space: A standout feature of Husky is its unified action space. Regardless of the task at hand, Husky chooses from the same set of tools (code, math, search, and commonsense), so its toolkit stays consistent across domains.

- Expert Models: Husky utilizes expert models such as code generators, query generators, and math reasoners. These models enhance Husky’s ability to reason and solve tasks.

- Training via Synthetic Data: All of Husky's modules are trained on synthetic data, an approach intended to make learning robust and adaptable.

- Optimized Inference for Multi-Step Reasoning: Husky is designed to perform inference by batch processing all inputs and executing all tools in parallel. This optimization enhances its efficiency in multi-step reasoning tasks.

Capabilities/Use Case of Husky

- Complex Reasoning: Husky is built to solve many kinds of complex, multi-step reasoning tasks involving numerical, tabular, and knowledge-based reasoning. For instance, it could be applied to analyzing large datasets, identifying patterns in them, and making predictions from those patterns, which makes it a potentially valuable tool for data science and analytics.

- Broad Applicability: Husky's capabilities extend to a wide range of applications in AI and machine learning, from solving complex math problems to code execution and search query generation. Its code generation makes it useful for software development, its query generation could help search engines return better results, and its numerical reasoning suits financial modeling and other complex calculations.

How Does Husky Work? Architecture and Design

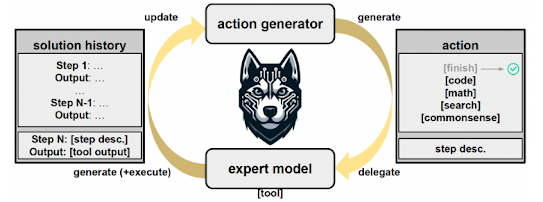

Husky is designed to solve complex, multi-step reasoning problems. It iterates between two stages, starting from an initial state and ending at a terminal state: the final answer to the given task.

In the first stage, Husky decides what to do next for the given task. The action generator predicts the next high-level step and the tool to invoke for that step. The tools that make up the action ontology are code, math, search, and commonsense. If the solution history already contains the final answer to the question, the action generator returns that answer instead.
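The first stage can be pictured as parsing the action generator's output into either a (step, tool) pair or a final answer. The sketch below assumes a made-up text format (`step: ... | tool: ...` or `answer: ...`); Husky's real output format is not described in this article, so `parse_generator_output`, `Action`, and `FinalAnswer` are hypothetical names. Only the four tool names come from the text above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Union


class Tool(Enum):
    """The four tools in Husky's action ontology, as listed in the article."""
    CODE = "code"
    MATH = "math"
    SEARCH = "search"
    COMMONSENSE = "commonsense"


@dataclass
class Action:
    step: str   # natural-language description of the next high-level step
    tool: Tool  # tool assigned to carry out that step


@dataclass
class FinalAnswer:
    answer: str  # returned when the solution history already holds the answer


def parse_generator_output(text: str) -> Union[Action, FinalAnswer]:
    """Parse a hypothetical action-generator output string.

    Assumed formats (illustrative only, not Husky's actual format):
      "answer: <final answer>"
      "step: <description> | tool: <code|math|search|commonsense>"
    """
    text = text.strip()
    if text.lower().startswith("answer:"):
        # Terminal state: stop iterating and report the answer.
        return FinalAnswer(text.split(":", 1)[1].strip())
    step_part, tool_part = text.split("|", 1)
    step = step_part.split(":", 1)[1].strip()
    tool_name = tool_part.split(":", 1)[1].strip().lower()
    # Tool(...) raises ValueError for anything outside the ontology.
    return Action(step=step, tool=Tool(tool_name))


print(parse_generator_output("step: compute 15% of 240 | tool: math"))
print(parse_generator_output("answer: 36"))
```

Restricting `tool` to an enum mirrors the idea of a single, fixed action space: any output naming a tool outside the four is rejected rather than silently dispatched.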

In the second stage, Husky executes the action and updates the current solution state using an expert model. Each tool has an associated expert model: a code generator for code, a math reasoner for math, a query generator for search, and a commonsense reasoner for commonsense. Based on the tool the action generator assigns, Husky invokes the corresponding expert model and runs the tool. Optionally, the tool outputs are rewritten in natural language.
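The tool-to-expert mapping in the second stage amounts to a dispatch table. In this minimal sketch the four expert functions are toy stand-ins (hypothetical; in Husky each is a trained model), and `execute_action` plus its optional `rewrite` hook are illustrative names, with `rewrite` standing in for the optional natural-language rewriting step.

```python
from typing import Callable, Dict, List, Optional


# Toy stand-ins for Husky's four expert models (hypothetical). Each takes the
# current step and the solution history and returns a raw tool output.
def code_generator(step: str, history: List[str]) -> str:
    return f"[code output for: {step}]"


def math_reasoner(step: str, history: List[str]) -> str:
    return f"[math reasoning for: {step}]"


def query_generator(step: str, history: List[str]) -> str:
    return f"[search results for: {step}]"


def commonsense_reasoner(step: str, history: List[str]) -> str:
    return f"[commonsense answer for: {step}]"


# One expert per tool, mirroring the mapping described in the article.
EXPERTS: Dict[str, Callable[[str, List[str]], str]] = {
    "code": code_generator,
    "math": math_reasoner,
    "search": query_generator,
    "commonsense": commonsense_reasoner,
}


def execute_action(
    step: str,
    tool: str,
    history: List[str],
    rewrite: Optional[Callable[[str], str]] = None,
) -> List[str]:
    """Run the expert assigned to `tool` and return the updated solution history."""
    if tool not in EXPERTS:
        raise ValueError(f"unknown tool: {tool!r}")
    output = EXPERTS[tool](step, history)
    # Optional post-processing, standing in for rewriting the output in natural language.
    if rewrite is not None:
        output = rewrite(output)
    # Build a new list so the caller's history is not mutated.
    return history + [f"{step} -> {output}"]


history = execute_action("Find the population of Seattle", "search", [])
history = execute_action("Divide the population by 2", "math", history)
print(history)
```

Keeping the history immutable per step makes each solution state easy to log or replay, which is convenient when debugging multi-step traces.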

All modules of Husky are trained on synthetic data. At inference time, Husky batches all inputs and runs all of its tools, that is, the expert models, in parallel. This design lets Husky handle a variety of tasks efficiently within a single, unified action space. Husky's architecture thus resembles a modern, LLM-based take on STRIPS-style planning systems, offering an unusually generalizable yet efficient way to train and deploy open language agents across a wide range of tasks.
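Batched inference can be sketched as a loop over all unfinished problems: one batched call to the action generator, then one batch per tool, with the tool batches run concurrently. This is a minimal sketch, assuming toy stand-ins (`generate_actions`, `run_expert_batch`) for the trained models; grouping steps by tool and using a thread pool is our illustration of "batch all inputs, run tools in parallel", not Husky's actual implementation, where expert models would typically be served on GPUs.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class State:
    question: str
    history: List[str] = field(default_factory=list)
    answer: Optional[str] = None  # set once the terminal state is reached


def generate_actions(states: List[State]) -> List[Tuple[str, str]]:
    """Hypothetical batched action generator returning one (tool, step) per state.

    Toy rule: search first, then math, then answer after two steps.
    """
    out = []
    for s in states:
        if len(s.history) >= 2:
            out.append(("answer", f"final answer to {s.question!r}"))
        else:
            tool = "search" if not s.history else "math"
            out.append((tool, f"step {len(s.history) + 1} for {s.question!r}"))
    return out


def run_expert_batch(tool: str, steps: List[str]) -> List[str]:
    """Hypothetical batched expert model: handles every pending step for one tool."""
    return [f"<{tool} result for {step}>" for step in steps]


def solve_batch(questions: List[str], max_iters: int = 10) -> List[State]:
    states = [State(q) for q in questions]
    for _ in range(max_iters):
        active = [s for s in states if s.answer is None]
        if not active:
            break
        # Stage 1: a single batched action-generator call for all active problems.
        actions = generate_actions(active)
        by_tool: Dict[str, List[Tuple[State, str]]] = {}
        for state, (tool, step) in zip(active, actions):
            if tool == "answer":
                state.answer = step
            else:
                by_tool.setdefault(tool, []).append((state, step))
        # Stage 2: each tool's batch runs concurrently on the thread pool.
        with ThreadPoolExecutor() as pool:
            futures = {
                tool: pool.submit(run_expert_batch, tool, [step for _, step in items])
                for tool, items in by_tool.items()
            }
            for tool, items in by_tool.items():
                for (state, step), result in zip(items, futures[tool].result()):
                    state.history.append(f"{step} -> {result}")
    return states


for s in solve_batch(["q1", "q2", "q3"]):
    print(s.question, s.answer, len(s.history))
```

Grouping by tool means each expert model sees one large batch per iteration instead of many single requests, which is where most of the throughput gain of batching would come from.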

Performance Evaluation

Husky has been subjected to extensive evaluation, demonstrating its superior performance over other language agents across a diverse set of 14 tasks. These tasks encompass a wide range from elementary school to high school competition math problems, finance question-answering datasets, and complex queries that require multi-step solutions.

On numerical reasoning tasks, Husky showed a significant improvement, scoring 10 to 20 points higher than other language agents across all four tasks. This suggests that Husky can accurately predict and execute the tools needed to solve each step of a math problem. On tabular reasoning, Husky consistently outperformed all other approaches on four distinct tasks, reflecting its broad exposure to tabular data in its training distribution.

On the mixed-tool evaluations, Husky surpassed all other language agents, with its strongest performance on the in-domain portion of the knowledge-based reasoning tasks. Notably, Husky outperformed the modular GPT-4o agent by a large margin on IIRC*. Elsewhere, including on the HuskyQA dataset, results were more varied: with a 7B action generator, Husky outperformed GPT-3.5-turbo and performed on par with GPT-4-0125-preview.

Comparative Analysis of AI Language Agents: Husky, FireAct, and LUMOS

Language agents have made significant strides, and Husky, FireAct, and LUMOS are among the notable examples. Each has its own strengths and mode of operation.

Husky is an open-source language agent for complex, multi-step reasoning over numerical, tabular, and knowledge-based tasks. It generates the next action for a given task and executes it using expert models. FireAct is a fine-tuning approach that trains LMs on agent trajectories drawn from multiple tasks and prompting methods. Its setup is question answering with a Google search API, and it explores various base LMs, prompting methods, fine-tuning data, and QA tasks. LUMOS is one of the first frameworks in this area for training open-source LLM-based agents; it has a learnable, unified, modular architecture.

While all three models have their strong suits, Husky stands out for reasoning over a single, unified action space while handling such a varied set of complex tasks. It does this with 7B models, yet matches or even exceeds frontier LMs such as GPT-4 on these tasks, which makes a strong case for its holistic approach to reasoning problems. By contrast, FireAct centers on fine-tuning, while LUMOS's strength lies in its modular architecture.

How to Access and Use Husky?

As an open-source language agent, Husky is available through its GitHub repository and on the Hugging Face model hub. It can be run locally by following the instructions in the GitHub repository. It has been designed for a diverse range of uses, from research to commercial purposes.
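To see which Husky checkpoints are published on the Hugging Face hub, you can query the hub's public model-listing endpoint. This sketch uses only the Python standard library and assumes the endpoint `https://huggingface.co/api/models?author=...` returns a JSON array of objects with an `id` field, as it currently does; the helper names are hypothetical. The model names themselves are not listed in this article, so the code discovers them rather than hard-coding any.

```python
import json
import urllib.parse
import urllib.request
from typing import List

HF_MODELS_API = "https://huggingface.co/api/models"
# Organization name taken from the Models link in the Source section.
HUSKY_AUTHOR = "agent-husky"


def models_url(author: str = HUSKY_AUTHOR) -> str:
    """Build the hub API URL that lists models published by `author`."""
    return f"{HF_MODELS_API}?{urllib.parse.urlencode({'author': author})}"


def list_model_ids(author: str = HUSKY_AUTHOR) -> List[str]:
    """Fetch the model list (requires network access) and return repo ids."""
    with urllib.request.urlopen(models_url(author), timeout=30) as resp:
        models = json.load(resp)
    return [m["id"] for m in models]
```

Calling `list_model_ids()` prints nothing by itself; iterate over its result to see the repository ids, then follow the GitHub repository's instructions to run a chosen checkpoint. If `huggingface_hub` is installed, `HfApi().list_models(author="agent-husky")` gives the same listing.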

If you are interested in learning more about this language agent, all relevant links are provided in the 'Source' section at the end of this article.

Limitations and Future Work

Despite its broad applicability, Husky has considerable limitations. Its performance may depend on the quality and diversity of its training data. And, like all AI models, Husky may not always produce accurate predictions. Future research will focus on making Husky more robust across more diverse and challenging tasks and on further improving its accuracy.

Conclusion

Husky addresses some of the key challenges in the field and offers a versatile tool for complex, multi-step reasoning tasks. While there are still areas for improvement, Husky demonstrates the potential of AI and machine learning in solving complex problems and advancing our understanding of the world.

Source

Research paper: https://arxiv.org/abs/2406.06469

Research paper (PDF): https://arxiv.org/pdf/2406.06469

Project details: https://agent-husky.github.io/

GitHub Repo: https://github.com/agent-husky/Husky-v1

Models: https://huggingface.co/agent-husky
