Introduction

The rise of AI-driven code models signifies a transformative shift in software development. These models, powered by machine learning, are instrumental in automating coding tasks, enhancing developer productivity, and tackling intricate programming challenges. However, perfecting these models presents hurdles, including ensuring accuracy, optimizing computational resources, and maintaining a balance between automation and human creativity.

In this evolving landscape, Codestral stands out. It is the first-ever code model from Mistral AI, a French startup with roots in École polytechnique, Meta Platforms, and Google DeepMind, and it is released as an open-weight model. It is engineered to address the fundamental challenges in code model evolution, including understanding and generating code across many languages, performance, and ease of use. Codestral is more than just a product; it reflects the broader progression of AI, with the aim of democratizing coding and making it more efficient.

What is Codestral?

Codestral is an open-weight generative model built specifically for code generation. It helps developers write and interact with code through a shared instruction and completion API endpoint, so the same model can follow natural-language instructions and complete existing code.

Key Features of Codestral

Codestral boasts a suite of remarkable features that distinguish it from its contemporaries:

- Multilingual Proficiency: Trained on an extensive dataset covering more than 80 programming languages, Codestral handles widely used languages like Python, Java, C++, and JavaScript as well as more specialized ones such as Swift and Fortran. This makes it adaptable to a variety of coding projects and environments.

- Code Completion: A highlight of Codestral is its code completion capability, which significantly reduces the time and effort developers invest in routine tasks, thereby allowing them to concentrate on more intricate project elements.

- Test Writing: Beyond code generation, Codestral extends its utility to test writing, thereby augmenting the software development lifecycle.

- Partial Code Completion: The model’s fill-in-the-middle (FIM) mechanism completes partial code by generating the missing section between the code before a gap and the code after it. This is especially useful in large projects, where edits often land in the middle of existing files.
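
To make fill-in-the-middle concrete, here is a minimal Python sketch of how an editor might prepare the input: the text before the cursor becomes the prefix, the text after it becomes the suffix, and the model is asked for what goes in between. The helpers `split_at_cursor` and `splice_completion` are hypothetical names used for illustration, not part of any Mistral tooling, and the completion is hard-coded rather than produced by a model.

```python
def split_at_cursor(source: str, cursor: int) -> tuple[str, str]:
    """Split a buffer into (prefix, suffix) around a cursor offset."""
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor is outside the buffer")
    return source[:cursor], source[cursor:]


def splice_completion(prefix: str, completion: str, suffix: str) -> str:
    """Rebuild the buffer once a completion for the gap is available."""
    return prefix + completion + suffix


buffer = "def add(a, b):\n    \n\nprint(add(2, 3))\n"
cursor = buffer.index("    \n") + 4  # cursor sits at the end of the indented, empty line
prefix, suffix = split_at_cursor(buffer, cursor)

# Stand-in for what a FIM-capable model might return for this gap.
completion = "return a + b"
print(splice_completion(prefix, completion, suffix))
```

The point of the split is that the model sees both sides of the gap, so its completion has to agree with the code that follows as well as the code that precedes it.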

Capabilities and Use Cases of Codestral

Codestral, with its suite of remarkable features, opens up a plethora of use cases that can significantly enhance developer productivity:

- Efficient Code Generation Across Languages: Codestral’s multilingual proficiency allows developers to efficiently generate code across more than 80 programming languages. This can be particularly useful when working on projects that involve multiple languages or transitioning between projects that require different languages.

- Streamlined Coding Process: The code completion feature of Codestral can streamline the coding process by automatically completing routine tasks. This allows developers to focus on more complex aspects of their projects, thereby enhancing productivity.

- Automated Test Writing: Codestral’s ability to write tests can automate a crucial part of the software development lifecycle. This can help in early detection of bugs and ensure the delivery of high-quality code.

- Effective Management of Large Projects: The partial code completion feature of Codestral can be a game-changer for large projects. Because it fills in missing code between existing sections, developers can edit in the middle of large files without rewriting the code around the gap, which reduces the effort of working in large codebases.

Codestral, a 22B model, sets a new standard for performance and latency, surpassing earlier models on similar coding tasks. Its larger context window is a standout feature and puts it at the forefront of the RepoBench evaluation, shown in the table below, which measures long-range code generation. Codestral’s Python ability is evident in its results across four distinct benchmarks, including repository-level code completion. Its SQL generation is measured with the Spider benchmark, where it also performs solidly.

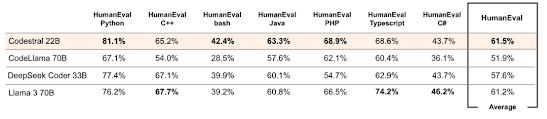

The model’s versatility is further demonstrated by its performance in six additional languages, as reported in the benchmark table: C++, Bash, Java, PHP, TypeScript, and C#, each evaluated with HumanEval pass@1. This multilingual proficiency underlines Codestral’s adaptability and wide-ranging applicability.
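
For readers unfamiliar with the metric, pass@1 is the share of problems for which a single sampled solution passes the unit tests. The sketch below uses the unbiased pass@k estimator introduced with HumanEval, 1 - C(n-c, k) / C(n, k), where n samples are drawn per problem and c of them pass; for k = 1 it reduces to c / n. The sample counts are made up for illustration and are not Codestral results.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem from n samples, c of which pass the tests."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    # Probability that a random size-k subset of the n samples contains no passing one.
    all_fail = comb(n - c, k) / comb(n, k)
    return 1.0 - all_fail


# Made-up counts for three problems: (samples drawn, samples that passed).
results = [(10, 10), (10, 5), (10, 0)]
scores = [pass_at_k(n, c, k=1) for n, c in results]

# The benchmark score is the mean of the per-problem estimates.
print(sum(scores) / len(scores))
```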

A notable aspect of Codestral is its Fill-in-the-Middle (FIM) performance, which has been tested in Python, JavaScript, and Java against the DeepSeek Coder 33B model. The results show that Codestral’s FIM capability works out of the box and makes the model more useful for a range of coding tasks. This feature, among others, supports Codestral’s position as a leading tool for AI-powered code generation.

Multilingual Code Mastery: Codestral’s Competitive Edge

In the competitive landscape of AI-driven code models, Codestral, alongside CodeLlama 70B, DeepSeek Coder 33B, and Llama 3 70B, represents the pinnacle of innovation. Each model has its own set of strengths, but Codestral distinguishes itself in several critical aspects.

Codestral has 22 billion parameters and a 32k-token context length, which gives it a significant advantage on tasks that need a lot of surrounding code in view. Its proficiency in over 80 programming languages means it is well equipped for a wide range of coding projects and environments.

In contrast, CodeLlama 70B shines in the realm of code synthesis and comprehension. However, its specialization in Python constrains its versatility. DeepSeek Coder 33B is notable for its extensive training data and advanced code completion capabilities, optimized for deciphering complex coding instructions.

Llama 3 70B is recognized for its ability to interpret complex coding instructions and is particularly effective when quantized. Yet it operates within an 8k-token context window, a quarter of Codestral’s 32k-token capacity, which gives Codestral an advantage in processing larger contexts. Moreover, Llama 3 70B needs over 140 GB of VRAM to run at 16-bit precision, far more than most standard computers can provide, which limits its accessibility and practicality in comparison to Codestral.
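
The 140 GB figure follows from simple arithmetic: 70 billion parameters at 2 bytes each. The sketch below applies the same back-of-the-envelope estimate to both models. It is an approximation under stated assumptions: it counts the weights only, ignores activations and the attention cache, and treats 4-bit quantization as exactly half a byte per parameter. The function name is hypothetical.

```python
def weight_memory_gb(params_billions: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for the weights alone, in GB (10**9 bytes)."""
    # (params_billions * 1e9 parameters) * bytes_per_param / 1e9 bytes per GB
    return params_billions * bytes_per_param


for name, size in [("Llama 3 70B", 70), ("Codestral 22B", 22)]:
    print(
        f"{name}: ~{weight_memory_gb(size):.0f} GB at 16-bit, "
        f"~{weight_memory_gb(size, 0.5):.0f} GB at 4-bit"
    )
```

By this estimate the weights of a 22B model take roughly 44 GB at 16-bit precision, about a third of what a 70B model needs.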

Therefore, Codestral’s synthesis of remarkable parameters, an extensive context window, multilingual capabilities, and superior performance establishes it as a frontrunner in the code model arena. Its proficiency in understanding complex instructions and generating precise, efficient code positions it as an invaluable resource for developers and researchers. Codestral’s attributes not only elevate it above its competitors but also underscore its role as a transformative tool in the development landscape.

How to Access and Use This Model?

Codestral is readily accessible for research and testing purposes. It can be downloaded under the Mistral AI Non-Production License, ensuring that developers and researchers can explore its capabilities.

The model is available on Hugging Face, a popular platform for AI models, making it easily accessible for a wide range of users. In addition, Codestral has a dedicated endpoint, codestral.mistral.ai, which is optimized for IDE integrations. This endpoint is accessed with a personal API key issued to each user.

During an 8-week beta period, access to this endpoint is free, managed via a waitlist to ensure quality service. This allows users to test the model and provide valuable feedback for further improvements.

For developers seeking to utilize Codestral in their applications, the model can be accessed through Mistral’s main API endpoint at api.mistral.ai. This endpoint is suitable for research, batch queries, and third-party applications, offering a versatile solution for various use cases.
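
As a rough illustration of the two endpoints described above, the following Python sketch prepares one fill-in-the-middle request and one instruction-style request using only the standard library. The host names come from this article; the paths (`/v1/fim/completions`, `/v1/chat/completions`), the JSON field names, and the `codestral-latest` model alias are assumptions based on Mistral’s public API documentation and should be checked against the current docs. The requests are built but not sent.

```python
import json
import urllib.request

# Hosts come from the article; paths, field names, and the model alias are
# assumptions taken from Mistral's public API documentation.
IDE_HOST = "https://codestral.mistral.ai"
MAIN_HOST = "https://api.mistral.ai"
MODEL = "codestral-latest"


def build_request(url: str, payload: dict, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) an authenticated JSON POST request."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fim_request(prefix: str, suffix: str, api_key: str) -> urllib.request.Request:
    """Fill-in-the-middle: the model writes the code between prefix and suffix."""
    payload = {"model": MODEL, "prompt": prefix, "suffix": suffix, "max_tokens": 64}
    return build_request(f"{IDE_HOST}/v1/fim/completions", payload, api_key)


def chat_request(instruction: str, api_key: str) -> urllib.request.Request:
    """Instruction-style request, for example asking the model for unit tests."""
    payload = {"model": MODEL, "messages": [{"role": "user", "content": instruction}]}
    return build_request(f"{MAIN_HOST}/v1/chat/completions", payload, api_key)


# "YOUR_API_KEY" is a placeholder; nothing is sent until urlopen is called.
req = chat_request("Write pytest tests for a function add(a, b).", "YOUR_API_KEY")
print(req.get_method(), req.full_url)
# To send it: urllib.request.urlopen(req), then parse the JSON response body.
```

Keeping request construction separate from sending makes the payloads easy to inspect and test before any API key is involved.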

Codestral is also included in Mistral’s self-deployment offering, so users can deploy the model in whatever way best suits their specific requirements.

Limitations and Future Directions

While Codestral is a powerful tool with numerous capabilities, it does come with certain limitations. One of the primary constraints is that Codestral-22B-v0.1 currently lacks moderation mechanisms. This means that it may not always respect guardrails, which could pose challenges for deployment in environments that require moderated outputs.

Another significant limitation is licensing. Codestral is released under the Mistral AI Non-Production License, which covers research and testing but not commercial use, so it cannot be used as-is in commercial projects.

Despite these limitations, Mistral AI is actively engaging with the community to refine and enhance Codestral. The team is looking for ways to make the model respect guardrails more precisely and is open to feedback and suggestions. This commitment to continuous improvement underscores their dedication to making Codestral a valuable tool for the AI community.

Conclusion

Codestral represents a significant step forward in the field of code models. Despite its limitations, it offers a range of capabilities that can greatly assist developers in their coding tasks. As AI continues to advance, models like Codestral will undoubtedly play a pivotal role in shaping the future of coding.

Source

Blog post: https://mistral.ai/news/codestral/

Documentation: https://docs.mistral.ai/capabilities/code_generation/

HF Model: https://huggingface.co/mistralai/Codestral-22B-v0.1

Disclaimer - This article is intended purely for informational purposes. It is not sponsored or endorsed by any company or organization, nor does it serve as an advertisement or promotion for any product or service. All information presented is based on publicly available resources and is subject to change. Readers are encouraged to conduct their own research and due diligence.
