Introduction

Multimodal Language Models (MLMs) are a major research focus in AI. They link diverse data forms such as text, images, videos, and audio, and as they evolve they face harder tasks that demand understanding and reasoning across modalities. Reka is a recent series of models built to handle text, images, videos, and audio together.

Reka is the brainchild of Reka AI, a San Francisco-based AI startup founded by a team of seasoned researchers. The development of Reka was driven by the ambition to craft a frontier-class MLM that could rival the leading models in the industry today. As a potent series of MLMs, Reka is engineered to tackle the key challenges in the field and propel the boundaries of AI advancements.

Reka Variants & Their Differences

The differences between Reka Core, Flash, and Edge primarily lie in their size, training data, and performance capabilities:

Reka Core:

- It is the most capable and largest model.

- It approaches the performance of the best frontier models.

- It excels in both automated evaluations and blind human evaluations.

Reka Flash:

- It is a dense model with 21B parameters.

- It outperforms many larger models, delivering outsized value for its compute class.

- It performs competitively on multimodal chat.

Reka Edge:

- It is a dense model with 7B parameters.

- It surpasses the current state-of-the-art models of its compute class.

Each model is designed to process and reason with text, images, video, and audio inputs, with varying degrees of effectiveness based on their respective sizes and training data.

What is Reka Core?

Reka Core is the most potent model in the Reka series, embodying a frontier-class multimodal language model that has been meticulously trained from scratch. It transcends the capabilities of traditional large language models by demonstrating a comprehensive understanding of not just text, but also images, videos, and audio.

Key Features of Reka Core

- Multimodal Comprehension: Reka Core exhibits a profound understanding of various modalities, including text, images, videos, and audio.

- Extended Context Window: With a context window of 8K for regular models and 128K for long context models, Reka Core is adept at ingesting and accurately recalling a vast amount of information.

- Advanced Reasoning: Reka Core shines in its reasoning abilities, encompassing language and math.

- Coding and Workflow Empowerment: As a top-tier code generator, Reka Core is instrumental in enhancing workflows.

- Multilingual Proficiency: Approximately 15% of the pretraining data is explicitly multilingual, comprising 32 diverse languages.

- Flexible Deployment: Reka Core offers deployment flexibility, being available via API.

Applications of Reka Core

Reka Core enables a wide range of use cases across industries such as e-commerce, social media, digital content, healthcare, and robotics. Its deployment flexibility ensures accessibility for various applications.

- Product Recommendations: Reka Core analyzes user preferences from text and images, providing personalized product recommendations. Whether it’s fashion, electronics, or home decor, Reka Core ensures a delightful shopping experience.

- Visual Search: Users can snap a photo of an item they like, and Reka Core identifies similar products in online catalogs. Say goodbye to endless scrolling!

- Content Moderation: Reka Core detects harmful or inappropriate content in images and text, helping platforms maintain a safe environment for users.

- Sentiment Analysis: It gauges emotions from posts, comments, and images, allowing brands to tailor their responses and improve customer satisfaction.

- Automated Captioning: Reka Core generates captivating captions for images, making social media posts more engaging.

- Storytelling: Authors and content creators can leverage Reka Core to craft compelling narratives by combining text and visuals seamlessly.

- Medical Imaging: Reka Core assists radiologists by analyzing medical images, detecting anomalies, and providing preliminary diagnoses.

- Patient Records: It extracts relevant information from handwritten notes, scanned documents, and images, streamlining administrative tasks.

- Human-Robot Communication: Reka Core enables robots to understand natural language commands and interpret visual cues. Imagine a robot assistant that responds to both text and gestures!

- Visual Navigation: Robots equipped with Reka Core can navigate complex environments using visual input, improving safety and efficiency.

- Poetry and Art: Reka Core collaborates with poets and artists, generating beautiful poems inspired by images or creating digital art pieces.

- Meme Creation: It combines witty text with relevant images, ensuring your memes hit the mark.

- Interactive Learning: Reka Core enhances educational apps by providing explanations through text, images, and audio. Students can explore topics holistically.

- Language Learning: It assists language learners by associating vocabulary with visual context, reinforcing memory retention.

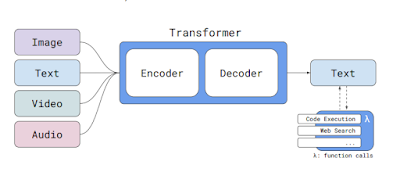

The Inner Workings and Architecture

Reka Core uses a modular encoder-decoder transformer architecture. It accepts text, images, video, and audio as inputs and produces text as output.

The backbone of Reka Core’s architecture is the ‘Noam’ architecture, which incorporates elements such as SwiGLU, Grouped Query Attention, Rotary positional embeddings, and RMSNorm. This design is akin to the PaLM architecture but without parallel layers, making it unique in its structure.
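To make two of these components concrete, here is a minimal pure-Python sketch of RMSNorm and a SwiGLU feed-forward layer. It follows the standard published definitions of both techniques and is not Reka's actual implementation. The function names, the tiny list-based vectors, and the `eps` default are assumptions chosen for illustration.

```python
import math

def rms_norm(x, gain, eps=1e-6):
    """Divide x by its root mean square, then scale by a learned gain vector."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for v, g in zip(x, gain)]

def silu(z):
    """SiLU (Swish) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))

def matvec(w, x):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward block: w_down @ (silu(w_gate @ x) * (w_up @ x))."""
    gate = [silu(v) for v in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]
    return matvec(w_down, hidden)
```

Unlike LayerNorm, RMSNorm skips the mean-subtraction step, and SwiGLU replaces the usual single activation with a gated product of two projections.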

Reka Core, along with other models in the series like Reka Flash and Edge, uses a sentencepiece vocabulary of 100K based on tiktoken, similar to the GPT-4 tokenizer. Sentinel tokens are added for masking spans and other special use cases, enhancing the model’s ability to handle complex tasks.
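The article does not say how the sentinel tokens are used beyond "masking spans". One common scheme is T5-style span corruption, sketched below as an assumption rather than as Reka's confirmed objective. The `<sentinel_N>` token format and the `mask_spans` helper are hypothetical names invented for this example.

```python
def mask_spans(tokens, spans, sentinel_fmt="<sentinel_{}>"):
    """Replace each span with a sentinel token and return (inputs, targets).

    spans must be sorted, non-overlapping, half-open (start, end) index pairs.
    The sentinel format is a placeholder, not Reka's real vocabulary.
    """
    inputs, targets = [], []
    cursor = 0
    for i, (start, end) in enumerate(spans):
        sentinel = sentinel_fmt.format(i)
        inputs.extend(tokens[cursor:start])  # keep text before the span
        inputs.append(sentinel)              # hide the span in the input
        targets.append(sentinel)             # mark which span follows
        targets.extend(tokens[start:end])    # the model must predict these
        cursor = end
    inputs.extend(tokens[cursor:])
    return inputs, targets
```

Each sentinel stands in for one hidden span in the input, and the target sequence pairs every sentinel with the tokens it replaced.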

The pretraining of Reka Core follows a curriculum that progresses through multiple stages with varying mixture distributions, context lengths, and objectives. The model is dense and is trained in bfloat16 precision.

In terms of context length, standard models have a context length of 8K, while Reka Flash and Reka Core boast a context length of 128K, making them ideal for retrieval and long document tasks. These models pass the needle-in-the-haystack test for the context they support, and the 128K models seem to extrapolate to a 256K context length.
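A needle-in-the-haystack test hides one fact at different depths inside long filler text and checks whether the model can recall it. The sketch below shows the general shape of such a harness. It is a hedged illustration, not the evaluation Reka ran: `ask_model` is a hypothetical callable you would replace with a real model call, and `stub_model` simply searches the prompt so the example runs offline.

```python
def build_haystack(filler, needle, n_sentences, depth):
    """Insert needle at a relative depth (0.0 = start, 1.0 = end) of filler text."""
    sentences = [filler] * n_sentences
    position = round(depth * n_sentences)
    sentences.insert(position, needle)
    return " ".join(sentences)

def run_needle_test(ask_model, needle, answer, question, depths, n_sentences=1000):
    """Return {depth: bool} recording whether the reply contained the answer."""
    results = {}
    for depth in depths:
        context = build_haystack("The sky was clear that day.", needle,
                                 n_sentences, depth)
        reply = ask_model(context + "\n\n" + question)
        results[depth] = answer.lower() in reply.lower()
    return results

def stub_model(prompt):
    """Stand-in for a real model: finds the needle by plain string search."""
    marker = "The secret code is "
    idx = prompt.find(marker)
    if idx < 0:
        return "unknown"
    return prompt[idx + len(marker):].split(".")[0]
```

With a real model, you would also scale `n_sentences` until the prompt approaches the context length under test and plot recall against both depth and length.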

For long context training, supervised fine-tuning data is synthetically created by conditioning on long documents found in the pretraining corpus, a technique referred to as reverse instruction tuning from long documents. This approach ensures that Reka Core is well-equipped to handle a wide range of tasks and challenges in the realm of AI.

Performance Evaluation

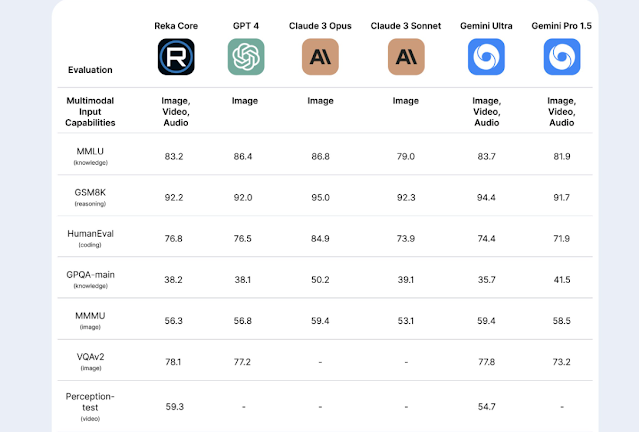

The Reka models, specifically Reka Core and Reka Flash, have demonstrated competitive performance across a wide range of evaluations. These evaluations span language-only and multimodal tasks, chat model evaluations, cross-lingual evaluations, long context question answering, and medical reasoning tasks.

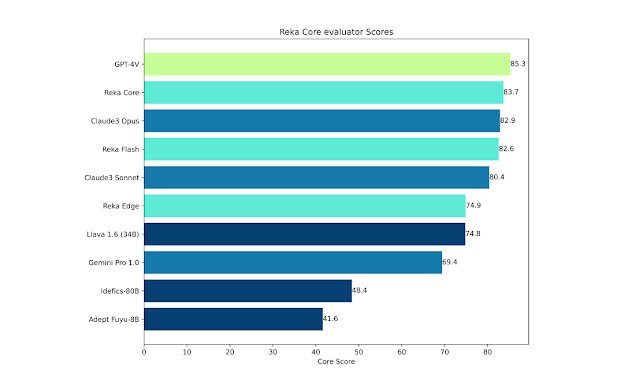

In multimodal evaluations, Reka Core outperforms Claude-3 Opus on multimodal human evaluation and surpasses Gemini Ultra on video tasks. It is also comparable to GPT-4V on MMMU. On language tasks, Reka Core is competitive with other frontier models on well-established benchmarks.

Using Reka Core as an evaluator for model development and automatic evaluation has proven effective, with its rankings correlating well with human judgement. This evaluation compares Reka Core evaluator scores against the final ELO scores obtained from human raters.
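One way to quantify how well an automatic evaluator's rankings agree with human ELO scores is Spearman rank correlation. The article does not state which statistic Reka used, so treat this as an illustrative sketch. The helper names are assumptions, and any scores you pass in are your own data, not figures from the technical report.

```python
import math

def ranks(values):
    """Rank values from 1 (smallest) upward, averaging the ranks of ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average 1-based rank of the tied group
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def spearman(evaluator_scores, human_elo):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(ranks(evaluator_scores), ranks(human_elo))
```

A value near 1 means the evaluator orders models the same way human raters do, while a value near 0 means the two rankings are unrelated.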

Overall, the Reka models have shown substantial improvements and are competitive with other leading models in the industry, and ongoing development promises better results in the future. The technical report released by Reka AI provides a detailed analysis of the models' performance across various benchmarks and scenarios.

How to Access and Use This Model?

Reka is available today via API, on-premise, or on-device deployment options. You can access all Reka series models on the Reka Playground. All you need to do is log in or set up a Reka Account.

If you are interested in learning more about Reka Models, please refer to the resource table above. All relevant links are provided under the ‘Source’ section at the end of this article.

Limitations

While Reka Core is a powerful and advanced multimodal language model, it is not without its limitations:

- Despite its multimodal input processing capabilities, Reka Core currently only supports text outputs.

- The exact number of parameters for Reka Core remains undisclosed, potentially limiting full understanding of the model’s complexity and computational requirements.

- While Reka Core has been trained on diverse sources, the representativeness of the training data could still be a potential limitation.

Future Work

- Reka AI plans to continue improving Reka Core and other models in the Reka series.

- The team is working on further training to enhance the performance of Reka Core.

- Development of the next version of the model is underway.

These future efforts aim to overcome current limitations and push the boundaries of what’s possible with multimodal language models.

Conclusion

Reka Models represent a significant advancement in multimodal language models. Their capabilities and deployment flexibility make them valuable tools for various applications. As they continue to evolve, we anticipate even greater performance from Reka.

Source

Press release: https://publications.reka.ai/reka-core-press-release.pdf

Blog: https://www.reka.ai/news/reka-core-our-frontier-class-multimodal-language-model

Technical report: https://publications.reka.ai/reka-core-tech-report.pdf

Try it out: https://chat.reka.ai/chat

Demo: https://showcase.reka.ai/#doc
