Introduction

Code models have become a cornerstone for developers and researchers in the dynamic world of artificial intelligence. These models have evolved from rudimentary rule-based systems to advanced language models, reflecting the rapid progress in natural language processing and deep learning techniques.

As the complexity of programming languages and the demand for efficient code completion and generation escalate, researchers are constantly innovating to overcome these challenges. A significant breakthrough in this journey is the advent of ‘CodeGemma’, an open-source project by Google. The development of CodeGemma involved collaboration between Google DeepMind and other teams across Google. Their collective expertise in machine learning, NLP, and code understanding culminated in the creation of this powerful tool.

CodeGemma, a specialized subset of lightweight language models, is fine-tuned for code handling. It builds on the foundation laid by the original Gemma models. The primary aim of CodeGemma is to democratize AI-driven code assistance, thereby empowering developers, enhancing code quality, and accelerating software development.

What is CodeGemma?

CodeGemma is a family of lightweight, code-specialist language models fine-tuned for coding tasks. The models are built on the pre-trained 2B and 7B Gemma checkpoints.

Model Variants

The CodeGemma family comprises three key variants:

- CodeGemma 2B Base Model: This model excels in infilling and open-ended code generation. It provides fast and efficient code completion, making it ideal for scenarios where latency and privacy are paramount.

- CodeGemma 7B Base Model: Trained on a mix of code infilling data and natural language, this variant shines in code completion, understanding, and generation. It supports popular programming languages like Python, Java, and JavaScript.

- CodeGemma 7B Instruct Model: Fine-tuned for instruction following, this model enables conversational interactions around code, programming, and mathematical reasoning topics.

Each variant of CodeGemma is designed with a specific focus, offering a comprehensive solution for various coding tasks and challenges. This makes CodeGemma a versatile tool in the realm of AI-driven code assistance.
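As a rough illustration of how the three variants map to tasks, the sketch below picks a Hugging Face Hub model id from a task label and wraps a question in the Gemma-style turn markers used by the instruct model. The task labels and function names are hypothetical, introduced only for this example; the model ids are assumed to match the current names in the Hugging Face collection listed under Source, so check them there before relying on them.

```python
# Hub ids assumed from the CodeGemma collection on Hugging Face; verify before use.
MODEL_IDS = {
    "2b-base": "google/codegemma-2b",
    "7b-base": "google/codegemma-7b",
    "7b-instruct": "google/codegemma-7b-it",
}


def pick_variant(task: str) -> str:
    """Map a hypothetical task label to a CodeGemma model id.

    'completion' -> 2B base (low-latency infilling)
    'generation' -> 7B base (code completion and generation)
    'chat'       -> 7B instruct (conversation about code)
    """
    mapping = {
        "completion": "2b-base",
        "generation": "7b-base",
        "chat": "7b-instruct",
    }
    if task not in mapping:
        raise ValueError(f"unknown task label: {task!r}")
    return MODEL_IDS[mapping[task]]


def format_chat_prompt(user_message: str) -> str:
    """Wrap a single user message in Gemma-style turn markers.

    The trailing 'model' turn is left open so the model writes the reply.
    """
    return (
        "<start_of_turn>user\n"
        f"{user_message}<end_of_turn>\n"
        "<start_of_turn>model\n"
    )
```

In practice the tokenizer's chat template would normally build this string for you; the manual version is shown only to make the turn structure visible.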

Key Features of CodeGemma

- Intelligent Code Completion and Generation: CodeGemma is renowned for its ability to generate code that is both syntactically correct and semantically meaningful. It assists developers in completing lines, functions, and entire code blocks, whether they’re working locally or in the cloud.

- Multi-Language Proficiency: CodeGemma is a versatile coding assistant, supporting popular languages like Python, JavaScript, and Java.

- Streamlined Workflows: Integrating CodeGemma into your development environment can help reduce boilerplate code, allowing you to focus on writing meaningful and differentiated code.

Capabilities/Use Case of CodeGemma

- Code Completion: CodeGemma enhances code writing by providing intelligent completion suggestions.

- Code Generation: It can generate code snippets or entire blocks based on context and requirements.

- Natural Language Understanding: CodeGemma has the ability to understand and process natural language queries related to code.

- Logical Reasoning: CodeGemma excels in logical and mathematical reasoning, aiding developers in complex problem-solving.

How Does CodeGemma Work? Architecture and Design

The CodeGemma models are built upon the Gemma pretrained models and are further trained on more than 500 billion tokens of primarily code. They use the same architectures as the Gemma model family. This additional training enables the CodeGemma models to achieve state-of-the-art code performance.

The CodeGemma models are highly capable language models designed for effective real-world deployment. They are optimized to run in latency-constrained settings while delivering high-quality code completion on a variety of tasks and languages. The technologies that built Gemini and Gemma are transferable to downstream applications, making these models a valuable resource for the broader community.

The most straightforward method of deployment is to truncate the output as soon as the model generates a Fill-In-the-Middle (FIM) sentinel token, since text produced after a sentinel is not part of the requested completion. This keeps completions short and avoids returning spurious trailing text. The models are designed to be adaptable across a wide range of coding tasks and languages.
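The FIM workflow can be sketched in a few lines of plain Python. The example below assumes the sentinel strings listed in the CodeGemma model card (`<|fim_prefix|>`, `<|fim_suffix|>`, `<|fim_middle|>`, `<|file_separator|>`); the helper names are hypothetical and only illustrate the idea of building an infilling prompt and cutting the output at the first sentinel.

```python
# FIM sentinel strings as documented in the CodeGemma model card (assumed here).
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"
FILE_SEPARATOR = "<|file_separator|>"
SENTINELS = (FIM_PREFIX, FIM_SUFFIX, FIM_MIDDLE, FILE_SEPARATOR)


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Ask the model to fill in the code between `prefix` and `suffix`."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def truncate_at_sentinel(generated: str) -> str:
    """Keep only the text before the first FIM sentinel, if any appears."""
    cut = len(generated)
    for sentinel in SENTINELS:
        index = generated.find(sentinel)
        if index != -1:
            cut = min(cut, index)
    return generated[:cut]


# Example: the model is asked to fill in the body of `add`.
prompt = build_fim_prompt("def add(a, b):\n    return ", "\n\nprint(add(1, 2))")

# A made-up raw model output, used only to show the truncation step.
raw_output = "a + b<|file_separator|>def unrelated():"
completion = truncate_at_sentinel(raw_output)
```

Here `truncate_at_sentinel` operates on decoded text; a real integration might instead stop generation on the sentinel token ids, which avoids generating the extra tokens in the first place.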

Performance Evaluation with Other Models

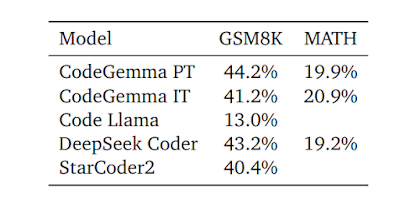

CodeGemma models have set new benchmarks in code generation and completion, demonstrating strong performance across programming languages such as Python, Java, JavaScript, and C++. A comparative analysis of CodeGemma and the instruction-tuned version of Gemma, as depicted in the accompanying figure, reveals the distinct strengths of CodeGemma in terms of natural language understanding and reasoning skills.

Furthermore, as shown in the table below, CodeGemma’s single-line and multi-line code completion capabilities have been evaluated against other Fill-In-the-Middle (FIM) aware code models. This comparison covers efficiency, speed, and quality in code completion tasks, giving a fuller view of how well the model completes code in diverse contexts and use cases.

In terms of multilingual coding capability, CodeGemma has been tested on benchmark datasets translated into other programming languages by BabelCode. The results also indicate that CodeGemma performs strongly in mathematical reasoning compared to similarly sized models, further emphasizing its versatility.

When compared with other code models in the same 7B size class, CodeGemma shows exceptional performance in mathematical reasoning tasks. Taken together, these results underscore CodeGemma’s position as a leading model in the realm of AI-driven code assistance.

Choosing the Right Model: Considerations and Trade-offs

In the realm of code generation and completion, CodeGemma, DeepSeekCoder, and StarCoder2 are all noteworthy models. Each has its unique strengths, but CodeGemma distinguishes itself in several ways.

CodeGemma is a family of code-specialist Large Language Models (LLMs) based on the pre-trained 2B and 7B Gemma checkpoints. It has been further trained on an additional 500 billion tokens of primarily English language data, mathematics, and code. This extensive training enhances CodeGemma’s capabilities in logical and mathematical reasoning, making it particularly adept at code completion and generation tasks.

DeepSeekCoder is known for its massive training data and its flexible, scalable range of model sizes. In contrast, CodeGemma offers three different flavors designed for specific tasks. These include a 2B base model for infilling and open-ended generation, a 7B base model trained with both code infilling and natural language, and a 7B instruct model that users can chat with about code. This versatility allows CodeGemma to adapt to various tasks, providing a more tailored solution to coding challenges.

StarCoder2, on the other hand, stands out with its support for a vast number of programming languages and its ability to match the performance of larger models. It has been trained on a larger dataset compared to CodeGemma. However, CodeGemma’s excellence in logical and mathematical reasoning and its suitability for code completion and generation tasks give it an edge.

So, while all three models (CodeGemma, DeepSeekCoder, and StarCoder2) have their strengths, CodeGemma’s performance in logical and mathematical reasoning, along with its adaptability to various tasks, underscores its potential to change the way we approach coding. Ultimately, the choice between these models depends on the specific requirements and constraints of the task at hand.

How to Access and Use CodeGemma?

Accessing and using CodeGemma involves a few steps. First, you need to review and agree to Google’s usage license on the Hugging Face Hub. Once you’ve done that, you’ll find the three models of CodeGemma ready for use.

CodeGemma models are open-source and can be used both locally and online. They are available on several platforms, including Kaggle, Hugging Face, and Google AI for Developers. Each platform provides detailed instructions for using the models.

If you’re planning to use the model in a notebook environment like Google Colab, you’ll need to install the required libraries, such as Transformers and Accelerate. Additionally, because the models are gated behind the license agreement, you’ll need a Hugging Face access token for an account that has accepted it.
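A minimal sketch of that setup is shown below, assuming the libraries were installed with `pip install transformers accelerate`, that the access token is stored in an environment variable named `HF_TOKEN`, and that `google/codegemma-2b` is the Hub id of the 2B base model. The function names are hypothetical, and the heavy imports are deferred so that nothing is downloaded until `generate_completion` is actually called.

```python
import os

# Hub id assumed from the CodeGemma collection; the license must already be
# accepted for the account that owns the token.
MODEL_ID = "google/codegemma-2b"


def get_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Read the Hugging Face access token from an environment variable."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set {env_var} to a Hugging Face access token first.")
    return token


def generate_completion(prompt: str, max_new_tokens: int = 64) -> str:
    """Load the model and return only the newly generated text.

    Downloads the weights on first use, so call it only where network
    access and enough memory are available.
    """
    # Imported here so this file can be loaded without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    token = get_hf_token()
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, token=token)
    # device_map="auto" relies on the Accelerate library being installed.
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, token=token, device_map="auto"
    )

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the completion is decoded.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

A call such as `generate_completion("def fibonacci(n):")` would then return the model's continuation of the function. The platform-specific instructions linked under Source remain the authoritative reference for setup details.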

For more accurate and up-to-date information, go through the links provided under the 'Source' section at the end of this article.

Limitations

Like any Large Language Model (LLM), CodeGemma has certain limitations:

- Training Data Dependency: The model’s performance heavily relies on the quality and diversity of its training data. Scenarios not well-represented in the training data may pose challenges.

- World Knowledge Gap: Tasks requiring world knowledge beyond what the model has seen during training might be problematic.

- Plausible but Incorrect Outputs: CodeGemma could generate outputs that sound plausible but are factually incorrect or nonsensical, especially for complex tasks.

Future Work

Ongoing efforts aim to address these limitations:

- Refining Training Process: Continuously improving the training process to enhance model understanding and adaptability.

- Diverse Training Data: Expanding the diversity of training data to cover a broader range of coding scenarios.

- Output Control Techniques: Developing methods to better control the model’s generated output.

Conclusion

CodeGemma is a significant advancement in the field of code models. Its features and capabilities make it a powerful tool for developers, and as they adopt it, it promises to improve code completion, generation, and understanding. As AI continues to evolve, models like CodeGemma will play a crucial role in shaping the future of coding.

Source

Technical Report: https://storage.googleapis.com/deepmind-media/gemma/codegemma_report.pdf

Model Overview: https://ai.google.dev/gemma/docs/codegemma

Model Card: https://ai.google.dev/gemma/docs/codegemma/model_card

Kaggle: https://www.kaggle.com/models/google/codegemma

Weights: https://huggingface.co/collections/google/codegemma-release-66152ac7b683e2667abdee11
