Introduction

Lumiere is a text-to-video generation model that creates realistic, diverse, and coherent videos from text descriptions. It was developed by a team of researchers from Google Research, Weizmann Institute, Tel-Aviv University, and Technion. The motivation was to overcome the limitations of existing video synthesis methods, which often produce low-quality, inconsistent, or unrealistic videos. The primary goal is to synthesize videos that portray realistic, diverse, and coherent motion, giving creators a flexible tool for video content creation and editing, with applications in entertainment, education, art, and more. Lumiere stands out for its Space-Time U-Net architecture, which generates the entire temporal duration of a video at once instead of assembling it in stages.

What is Lumiere?

Lumiere is a video diffusion model that enables users to generate realistic and stylized videos. Users can provide text inputs in natural language, and Lumiere generates a video portraying the described content. Lumiere uses a novel Space-Time U-Net architecture, which allows it to generate the entire temporal duration of the video at once, through a single pass in the model. This is in contrast to existing video models, which synthesize distant keyframes followed by temporal super-resolution, an approach that inherently makes global temporal consistency difficult to achieve.

Key Features of Lumiere

Some of the key features of Lumiere are:

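- Video Generation: Lumiere generates 5-second clips of 80 frames at 16 fps. The base model works at a resolution of 128×128, and a spatial super-resolution model upsamples the frames to 1024×1024, showcasing realistic, diverse, and coherent motion.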

- Text Input Flexibility: Lumiere can handle a wide range of text inputs, from simple to complex, and from abstract to concrete.

- Leveraging Pre-trained Models: Lumiere builds on a pre-trained text-to-image diffusion model to generate high-quality videos from text.

- Video Editing Capabilities: Lumiere can perform various video editing tasks, such as image-to-video, video inpainting, and stylized generation, using text inputs and optional masks or reference images.

- Style Diversity: Lumiere can generate videos in different styles, such as sticker, flat cartoon, 3D rendering, line drawing, glowing, and watercolor painting, by using fine-tuned text-to-image model weights.

- Space-Time Processing: By deploying both spatial and temporal down- and up-sampling and leveraging a pre-trained text-to-image diffusion model, Lumiere learns to directly generate a full-frame-rate, low-resolution video by processing it in multiple space-time scales.

Capabilities and Use Cases of Lumiere

Lumiere has many potential capabilities and use cases, such as:

- Engaging Content Creation: Lumiere can create engaging and educational videos from text descriptions, such as stories, poems, facts, or instructions.

- Video Enhancement: Lumiere can enhance and transform existing videos with text inputs, such as adding or changing objects, characters, backgrounds, or styles.

- Cinemagraph Generation: Lumiere can generate cinemagraphs, which are videos with subtle and repeated motion in a specific region, from images and masks.

- Scenario Visualization: Lumiere can explore and visualize different scenarios and outcomes from text inputs, such as what-if questions, alternative histories, or future predictions.

- Artistic Video Creation: Lumiere can create artistic and expressive videos from text inputs, such as moods, emotions, or themes.

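- State-of-the-art Results: Lumiere demonstrates state-of-the-art text-to-video generation results, and participants in the authors' user studies preferred its videos over those of the baseline models.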

- Content Creation and Video Editing: Lumiere facilitates a wide range of content creation tasks and video editing applications, including image-to-video, video inpainting, and stylized generation.

How does Lumiere work? Architecture and Design

Lumiere operates on a unique architecture known as the Space-Time U-Net, which generates the entire temporal duration of a video at once, through a single pass in the model. This architecture deploys both spatial and temporal down- and up-sampling and leverages a pre-trained text-to-image diffusion model.

The generative approach of Lumiere utilizes Diffusion Probabilistic Models. These models are trained to approximate a data distribution through a series of denoising steps. Starting from a Gaussian i.i.d. noise sample, the diffusion model gradually denoises it until reaching a clean sample drawn from the approximated target distribution. Diffusion models can learn a conditional distribution by incorporating additional guiding signals, such as text embedding, or spatial conditioning.
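The denoising process described above can be illustrated with a toy sampling loop. This is a minimal sketch, not Lumiere's sampler: it assumes standard DDPM-style ancestral sampling with a linear noise schedule, and `make_toy_denoiser` is a hypothetical stand-in for the trained network that treats a fixed `target` array as the only clean sample, where a real model would instead be conditioned on a text embedding.

```python
import numpy as np

def sample(denoise_fn, shape, betas, rng):
    """Run a DDPM-style reverse process from pure noise to a clean sample."""
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)  # start from i.i.d. Gaussian noise
    for t in reversed(range(len(betas))):
        eps_hat = denoise_fn(x, t, alpha_bars[t])  # predicted noise at step t
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        # Fresh noise is added at every step except the last one.
        noise = rng.standard_normal(shape) if t > 0 else np.zeros(shape)
        x = mean + np.sqrt(betas[t]) * noise
    return x

def make_toy_denoiser(target):
    """Hypothetical denoiser: exact noise prediction when the data is a single point."""
    def denoise_fn(x_t, t, alpha_bar_t):
        return (x_t - np.sqrt(alpha_bar_t) * target) / np.sqrt(1.0 - alpha_bar_t)
    return denoise_fn

betas = np.linspace(1e-4, 0.02, 50)   # assumed linear schedule, 50 steps
target = np.ones((4, 8, 8, 3))        # tiny "video": frames x height x width x channels
video = sample(make_toy_denoiser(target), target.shape, betas, np.random.default_rng(0))
```

Because the toy denoiser knows the clean sample exactly, the loop recovers `target`; a learned denoiser only approximates this, which is why the output is a sample from the approximated distribution.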

The Lumiere framework consists of a base model and a spatial super-resolution (SSR) model. The base model generates full clips at a coarse spatial resolution. The output of the base model is spatially upsampled using a temporally-aware SSR model, resulting in a high-resolution video.

The Space-Time U-Net downsamples the input signal both spatially and temporally and performs most of its computation on this compact space-time representation. The architecture interleaves temporal blocks into the text-to-image (T2I) architecture and inserts temporal down- and up-sampling modules after each pre-trained spatial resizing module. The temporal blocks consist of temporal convolutions and temporal attention. Factorized space-time convolutions are inserted at all levels except the coarsest. Compared to full 3D convolutions, they add non-linearities to the network while reducing computational cost; compared to 1D convolutions, they are more expressive. Temporal attention is used only at the coarsest resolution, which holds a space-time compressed representation of the video.
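To make the idea of a compact space-time representation concrete, here is a small sketch of joint spatial and temporal resizing. It is an illustration under assumptions: average pooling and nearest-neighbor repetition stand in for the learned resizing modules, and the factor of 2, the two levels, and the tensor sizes are chosen only for the example.

```python
import numpy as np

def downsample_space_time(video, factor=2):
    """Average-pool a (frames, height, width, channels) array along T, H and W."""
    t, h, w, c = video.shape
    blocks = video.reshape(t // factor, factor, h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(1, 3, 5))

def upsample_space_time(video, factor=2):
    """Nearest-neighbor upsampling along T, H and W."""
    return video.repeat(factor, axis=0).repeat(factor, axis=1).repeat(factor, axis=2)

video = np.random.default_rng(0).standard_normal((16, 32, 32, 3))
coarse = downsample_space_time(downsample_space_time(video))   # two levels down
restored = upsample_space_time(upsample_space_time(coarse))    # two levels back up

print(video.shape, "->", coarse.shape, "->", restored.shape)
print("elements at the coarsest level:", coarse.size, "of", video.size)
```

Each level shrinks the tensor by a factor of 8 (2 in time, 2 in height, 2 in width), which is why expensive operations such as attention are cheapest at the coarsest level.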

Due to memory constraints, the inflated SSR network can operate only on short segments of the video. To avoid temporal boundary artifacts, Lumiere achieves smooth transitions between the temporal segments by employing MultiDiffusion along the temporal axis. At each generation step, the noisy input video is split into overlapping temporal segments; the SSR model processes each segment, and the per-segment predictions are combined where the segments overlap.
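The segment-wise processing can be sketched as a sliding window over the frame axis. This is a simplified illustration, not the authors' implementation: the window length, the stride, and the uniform averaging of overlapping predictions are assumptions, and `process_fn` is a hypothetical placeholder for one SSR denoising step on a segment.

```python
import numpy as np

def segment_starts(num_frames, window, stride):
    """Start indices of overlapping windows covering all frames (needs num_frames >= window)."""
    starts = list(range(0, num_frames - window + 1, stride))
    if starts[-1] + window < num_frames:
        starts.append(num_frames - window)  # extra window so the tail is covered
    return starts

def blend_segments(video, process_fn, window=16, stride=8):
    """Apply process_fn to overlapping temporal segments and average the overlaps."""
    total = np.zeros_like(video, dtype=float)
    counts = np.zeros((video.shape[0],) + (1,) * (video.ndim - 1))
    for s in segment_starts(video.shape[0], window, stride):
        total[s:s + window] += process_fn(video[s:s + window])
        counts[s:s + window] += 1  # how many segments touched each frame
    return total / counts

frames = np.random.default_rng(0).standard_normal((80, 4, 4, 3))
blended = blend_segments(frames, process_fn=lambda seg: seg)  # identity placeholder
```

Because every frame in an overlap receives a blend of several segment predictions, neighboring segments cannot disagree abruptly at their boundaries.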

Performance Evaluation

Lumiere, a text-to-video (T2V) model, is trained on a dataset of 30 million videos with corresponding text captions. Each video is 5 seconds long, with 80 frames at 16 fps. The base model is trained at a resolution of 128×128, while the SSR model outputs frames at 1024×1024. The model is evaluated on 113 diverse text prompts, 18 assembled by the authors and 95 used in previous works. A zero-shot evaluation protocol is employed on the UCF101 dataset.
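The figures above fit together with simple arithmetic, sketched below. The three-channel RGB layout is an assumption made for illustration; the frame rate, duration, and resolutions are the ones quoted in the text.

```python
DURATION_S = 5      # clip length in seconds
FPS = 16            # frames per second
BASE_RES = 128      # base model resolution
SSR_RES = 1024      # spatial super-resolution output
CHANNELS = 3        # assumed RGB

num_frames = DURATION_S * FPS                        # 5 * 16 = 80 frames
base_shape = (num_frames, BASE_RES, BASE_RES, CHANNELS)
ssr_shape = (num_frames, SSR_RES, SSR_RES, CHANNELS)
upscale = SSR_RES // BASE_RES                        # 8x per spatial side
pixel_ratio = upscale ** 2                           # 64x more pixels per frame

print(f"{num_frames} frames, base {base_shape}, SSR output {ssr_shape}")
print(f"SSR upsamples {upscale}x per side, {pixel_ratio}x pixels per frame")
```

The 64-fold growth in pixels per frame helps explain why the SSR stage is the memory-heavy part of the pipeline.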

Lumiere is compared to several T2V diffusion models and to commercial T2V models with available APIs. These include ImagenVideo, AnimateDiff, StableVideoDiffusion (SVD), ZeroScope, Pika, and Gen-2. The comparison also extends to additional T2V models that are closed-source.

In the qualitative evaluation (see the figure below), Lumiere outperforms the baselines by generating 5-second videos with higher motion magnitude while maintaining temporal consistency and overall quality. In contrast, Gen-2 and Pika produce near-static videos despite their high per-frame visual quality. ImagenVideo, AnimateDiff, and ZeroScope generate videos with noticeable motion but of shorter duration and lower visual quality.

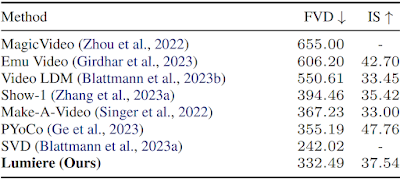

The quantitative evaluation is a zero-shot evaluation on UCF101, following the protocols of Blattmann et al. (2023a) and Ge et al. (2023). The paper reports the Fréchet Video Distance (FVD) and Inception Score (IS) of Lumiere and previous work. Lumiere achieves competitive FVD and IS scores, although these metrics may not faithfully reflect human perception.
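For intuition about what FVD measures, the sketch below computes the Fréchet distance between two sets of feature vectors, each modeled as a Gaussian. It is only an illustration: a real FVD computation extracts the features from videos with a pretrained video network, whereas `real_feats` and `fake_feats` here are random placeholder arrays, not measurements of any model.

```python
import numpy as np

def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two (samples, dims) feature sets."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # tr(sqrt(cov_a @ cov_b)) equals the sum of square roots of the product's eigenvalues.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_a - mu_b) ** 2)
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * trace_sqrt)

rng = np.random.default_rng(0)
real_feats = rng.standard_normal((200, 4))   # placeholder "real" features
fake_feats = real_feats + 1.0                # same spread, mean shifted by 1 per dimension

same = frechet_distance(real_feats, real_feats)
shifted = frechet_distance(real_feats, fake_feats)
```

Identical feature sets give a distance near zero, and the distance grows as the means or covariances drift apart, so lower FVD indicates generated videos whose feature statistics are closer to those of real videos.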

A user study is conducted using the Two-alternative Forced Choice (2AFC) protocol. Participants are presented with a pair of videos, one generated by Lumiere and the other by a baseline method, and asked to choose the better video in terms of visual quality and motion. They are also asked to select the video that more accurately matches the target text prompt. According to the reported results, users prefer Lumiere over all baselines, and its videos align better with the text prompts.

Finally, a user study compares Lumiere’s image-to-video model against Pika, SVD, and Gen-2. As SVD’s image-to-video model is not conditioned on text, the survey focuses on video quality.

How to access and use this model?

Lumiere is not yet publicly available, but the researchers have shared a website where they showcase some of the results and applications of Lumiere. The website also provides a link to the paper, where they describe the technical details and the evaluation of Lumiere. The researchers plan to release the code and the model in the future, according to their website.

If you are interested in learning more about this model, all relevant links are provided under the 'Source' section at the end of this article.

Conclusion

Lumiere is a significant advancement in video synthesis: a model that creates realistic, diverse, and coherent videos from text inputs. Its Space-Time U-Net architecture, together with its support for both realistic and stylized generation, makes it a powerful tool for content creation and video editing.

Source

Research paper - https://arxiv.org/abs/2401.12945

GitHub repo - https://github.com/lumiere-video/lumiere-video.github.io

Project details - https://lumiere-video.github.io/
