Language models (LMs) have become powerful tools for natural language processing (NLP) tasks, and large-scale pre-trained models such as BERT, GPT-3, and T5 have extended their capabilities further. However, these models still struggle with long-term, structured knowledge (facts, entities, and relationships), which is vital for maintaining natural and coherent conversations.

To bridge this gap, a team of researchers from Tsinghua University, the Beijing Academy of Artificial Intelligence, and Zhejiang University has introduced a new framework called 'ChatDB'. The framework combines symbolic memory with large language models (LLMs) so that an LLM can access and manipulate structured knowledge as a natural part of a conversation. The goal of ChatDB is to improve the performance of LLMs on conversational tasks, including dialogue generation and question answering.

What is ChatDB?

ChatDB is a framework that augments LLMs with databases as their symbolic memory. It lets LLMs interact with structured knowledge in a natural and efficient way, which in turn improves their performance on conversational tasks.

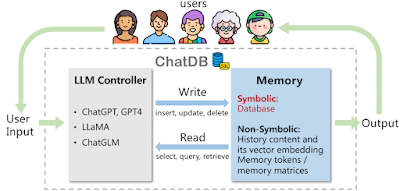

The overall workflow of ChatDB is shown below:

source - https://chatdatabase.github.io/

The LLM controller manages the read and write operations performed on the memory. The memory stores past data and supplies the relevant historical information needed to answer user queries. ChatDB's primary emphasis is on enhancing LLMs by using databases as their symbolic memory.

Key Features of ChatDB

ChatDB offers a range of distinctive and efficient features:

It supports both explicit and implicit memory operations. Explicit memory operations are those that users spell out in their queries, such as "add John's birthday to the memory" or "retrieve John's birthday from the memory." Implicit memory operations are inferred by the model from the context and the task. For instance, answering "How old is John?" requires looking up John's birthday even though the user never mentions the memory.

It supports both single-turn and multi-turn conversations. A single-turn conversation consists of one query and one response, such as "What is John's birthday?" A multi-turn conversation consists of several interconnected queries and responses, for example "Who is John? What is his birthday? What is his favorite color?"

It caters to both open-domain and closed-domain knowledge. Open-domain knowledge is general information drawn from external references such as Wikipedia or web searches. Closed-domain knowledge is specific to a particular domain or scenario, such as personal details or movie reviews.

It accommodates both textual and tabular data. Textual data is unstructured information expressed in natural language sentences or paragraphs. Tabular data is structured information organized into rows and columns of values.

Capabilities/Use Case of ChatDB

ChatDB has various capabilities and use cases for conversational tasks, such as:

- Dialogue generation: ChatDB can generate natural and coherent dialogues with users based on their queries and the memory state. For example, ChatDB can chat with users about movies, books, sports, or any other topics that they are interested in.

- Question answering: ChatDB can answer factual questions from users based on the knowledge stored in the memory. For example, ChatDB can answer questions like “when was Harry Potter published?” or “who directed The Godfather?”.

- Knowledge base construction: ChatDB can construct a knowledge base from textual or tabular data by extracting key-value pairs from the data and storing them in the memory. For example, ChatDB can construct a knowledge base from a Wikipedia article or a CSV file.

- Knowledge base completion: ChatDB can complete missing or incomplete information in a knowledge base by inferring or generating new key-value pairs based on the existing knowledge. For example, ChatDB can complete missing attributes or values for entities or relations in a knowledge base.
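The knowledge base construction and question answering use cases above can be sketched in a few lines of Python. This is a minimal illustration, not ChatDB's actual code: it assumes Python's built-in `sqlite3` as a stand-in database, a hypothetical `facts` table holding (entity, attribute, value) rows, and made-up helper names `build_knowledge_base` and `lookup`. The CSV content is sample data for the example.

```python
import csv
import io
import sqlite3

# Sample tabular input (illustrative data, not from ChatDB).
CSV_TEXT = """title,director,year
The Godfather,Francis Ford Coppola,1972
"""

def build_knowledge_base(csv_text, key_column):
    """Store every non-key cell as an (entity, attribute, value) row."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE facts (entity TEXT, attribute TEXT, value TEXT)")
    for row in csv.DictReader(io.StringIO(csv_text)):
        entity = row[key_column]
        for attribute, value in row.items():
            if attribute != key_column:
                conn.execute(
                    "INSERT INTO facts VALUES (?, ?, ?)",
                    (entity, attribute, value),
                )
    conn.commit()
    return conn

def lookup(conn, entity, attribute):
    """Return the stored value, or None if the fact is not in memory."""
    row = conn.execute(
        "SELECT value FROM facts WHERE entity = ? AND attribute = ?",
        (entity, attribute),
    ).fetchone()
    return row[0] if row else None

conn = build_knowledge_base(CSV_TEXT, key_column="title")
director = lookup(conn, "The Godfather", "director")
print(director)
```

In a real system the LLM, rather than hand-written functions, would decide which rows to insert and which query answers a question such as "who directed The Godfather?".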

How does ChatDB work?

The ChatDB framework has three main stages: input processing, chain-of-memory, and response summary.

Input processing: If the user input requires memory access, ChatDB uses the LLM to generate a series of steps for interacting with the symbolic memory. Otherwise, ChatDB uses the LLM to generate a response directly.

Chain-of-memory: ChatDB executes the steps in order to manipulate the external memory. It sends SQL statements such as insert, update, select, and delete to the external database. The database executes each statement, updates itself, and returns the results. ChatDB then uses those results to decide whether the next step needs to be updated, and continues until all the memory operations are done.

Response summary: ChatDB uses LLMs to generate a final response to the user based on the outcomes of the chain-of-memory steps.
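The three stages above can be sketched as a small control loop. This is a simplified illustration under stated assumptions, not ChatDB's implementation: the two LLM calls are replaced by hypothetical stub functions (`plan_memory_steps` and `summarize`), Python's built-in `sqlite3` stands in for the external database, and the `people` table and its contents are invented for the example.

```python
import sqlite3

def plan_memory_steps(user_input):
    """Stage 1 (stub): a real system would ask the LLM for SQL steps.

    Returns an empty list when the input needs no memory access.
    """
    if "birthday" in user_input.lower():
        return ["SELECT name, birthday FROM people WHERE name = 'John'"]
    return []

def run_chain_of_memory(conn, steps):
    """Stage 2: execute the SQL steps in order and collect each result."""
    results = []
    for sql in steps:
        results.append(conn.execute(sql).fetchall())
    conn.commit()
    return results

def summarize(results):
    """Stage 3 (stub): a real system would have the LLM phrase the answer."""
    rows = results[-1]
    if not rows:
        return "I could not find that in memory."
    name, birthday = rows[0]
    return f"{name}'s birthday is {birthday}."

def respond(conn, user_input):
    steps = plan_memory_steps(user_input)
    if not steps:
        # No memory access needed: answer directly.
        return "(direct LLM response, no memory access)"
    return summarize(run_chain_of_memory(conn, steps))

# Hypothetical memory contents for the demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (name TEXT, birthday TEXT)")
conn.execute("INSERT INTO people VALUES ('John', '1990-05-17')")

reply = respond(conn, "What is John's birthday?")
small_talk = respond(conn, "Hello there!")
print(reply)
print(small_talk)
```

The stubs mark the two points where the LLM would be called. Everything between them is ordinary database access, which is what makes the memory symbolic and its operations exact.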

In the workflow figure, the red arrow lines show the process flow of chain-of-memory, which connects multiple memory operations. The red arrow lines between database tables show the reference relationships between keys, running from each primary key to the foreign keys that reference it. Only the first four columns of each table are shown for simplicity. The example shows how ChatDB handles a user request to return goods purchased on 2023-01-02 by a customer with the phone number 823451.
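The return-goods request can be sketched as a chain of dependent SQL steps, where each step uses values returned by the previous one. This is an assumption-laden illustration: the schema (`customers`, `products`, `sales`, `sale_items`), the sample rows, and the `return_goods` function are hypothetical, and Python's built-in `sqlite3` stands in for the external database. In ChatDB the LLM would generate these statements rather than a hand-written function.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, phone TEXT);
CREATE TABLE products (product_id INTEGER PRIMARY KEY, name TEXT, stock INTEGER);
CREATE TABLE sales (
    sale_id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customers(customer_id),
    sale_date TEXT
);
CREATE TABLE sale_items (
    sale_id INTEGER REFERENCES sales(sale_id),
    product_id INTEGER REFERENCES products(product_id),
    quantity INTEGER
);
INSERT INTO customers VALUES (1, 'Alice', '823451');
INSERT INTO products VALUES (10, 'apple', 5), (11, 'pear', 8);
INSERT INTO sales VALUES (100, 1, '2023-01-02');
INSERT INTO sale_items VALUES (100, 10, 2), (100, 11, 3);
""")

def return_goods(conn, phone, sale_date):
    """Undo the customer's purchases on the given date; return units restocked."""
    # Step 1: find the customer by phone number.
    row = conn.execute(
        "SELECT customer_id FROM customers WHERE phone = ?", (phone,)
    ).fetchone()
    if row is None:
        return 0
    customer_id = row[0]

    # Step 2: find that customer's sales on the date (uses step 1's result).
    sale_ids = [r[0] for r in conn.execute(
        "SELECT sale_id FROM sales WHERE customer_id = ? AND sale_date = ?",
        (customer_id, sale_date),
    )]

    returned = 0
    for sale_id in sale_ids:
        # Step 3: read the purchased items (uses step 2's result).
        items = conn.execute(
            "SELECT product_id, quantity FROM sale_items WHERE sale_id = ?",
            (sale_id,),
        ).fetchall()
        # Step 4: put each item back into stock (uses step 3's result).
        for product_id, quantity in items:
            conn.execute(
                "UPDATE products SET stock = stock + ? WHERE product_id = ?",
                (quantity, product_id),
            )
            returned += quantity
        # Step 5: delete the sale records, child rows first.
        conn.execute("DELETE FROM sale_items WHERE sale_id = ?", (sale_id,))
        conn.execute("DELETE FROM sales WHERE sale_id = ?", (sale_id,))
    conn.commit()
    return returned

units_returned = return_goods(conn, "823451", "2023-01-02")
stock = dict(conn.execute("SELECT name, stock FROM products"))
print(units_returned, stock)
```

Each later statement is filled in from an earlier result, which is the sense in which the next step is updated based on what the database returns.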

How to access and use this model?

ChatDB is an open-source project available on GitHub. The repository contains the code, data, and instructions for running ChatDB. In the paper's experiments, the authors use ChatGPT (GPT-3.5 Turbo) as the LLM controller and a MySQL database as the symbolic memory. ChatDB can be used locally or on cloud platforms, such as Google Colab or Azure. ChatDB is licensed under the MIT License, which means it is free and open for commercial and non-commercial use.

If you are interested in learning more about the ChatDB framework, you can find all the relevant links under the "source" section at the end of the article.

Limitations

ChatDB presents a groundbreaking and promising framework that effectively combines symbolic memory with LLMs. However, like any innovative system, it has a few limitations that require attention in future endeavors. These limitations include:

- Reliance on LLMs: ChatDB depends on LLMs for generating SQL instructions to manipulate databases. While this approach is advantageous in many respects, it can potentially introduce errors or inefficiencies during memory operations. Future work should address this issue to enhance the system's overall performance.

- Limited Support for Complex Queries: Presently, ChatDB lacks support for handling intricate queries involving multiple tables or joins in databases. Consequently, its applicability to certain domains or scenarios may be restricted. To broaden its scope, it is important to develop mechanisms that enable ChatDB to handle complex queries effectively.

- Lack of Feedback-Driven Update and Deletion: Although ChatDB can issue update and delete statements as part of a chain-of-memory, it currently lacks a mechanism to revise or remove stored records based on user feedback or new information. This limitation can affect the accuracy and consistency of the system over time. Future enhancements should focus on features that allow ChatDB to adapt and evolve with user input and changing data.

Conclusion

ChatDB represents a notable advancement in conversational AI by bridging the divide between neural networks and symbolic systems. It demonstrates the value of pairing a large language model (LLM) with a symbolic memory, enabling the storage and retrieval of structured knowledge in a precise and predictable way. ChatDB also shows the advantages of having the LLM generate natural language responses based on the content stored in that memory. This approach paves the way for more intelligent and coherent conversational agents that can handle long-term and structured information.

source

project - https://chatdatabase.github.io/

GitHub Repo - https://github.com/huchenxucs/ChatDB

research paper (abstract) - https://arxiv.org/abs/2306.03901

research paper (PDF) - https://arxiv.org/pdf/2306.03901.pdf
