Introduction

Meta has recently unveiled a cutting-edge AI model that can predict connections between different kinds of data, much as humans perceive or imagine an environment through several senses at once. The model could reshape the field of multimodal AI and let computer scientists explore new applications for the technology. With it, Meta aims to build multimodal AI systems that learn from all the types of data around them: holistic systems that can bind data from more than one modality at once, without the need for explicit supervision. The new model is called ImageBind.

What is ImageBind and What are its Key Features?

ImageBind is a multimodal AI model that draws on six different types of data, or "senses", to build a more complete understanding of a scene than any single input could provide.

It combines text, image/video, audio, depth, thermal (infrared), and motion data from inertial measurement units (IMUs) in a single model.

ImageBind is built on self-supervised learning, so it requires far less labeled data than traditional supervised machine learning approaches. This allows it to process many types of data, including images, text, audio, infrared sensor data, depth maps, and motion data.

According to Meta, ImageBind is the first AI model capable of binding information from six modalities without explicit supervision. By combining these senses, it can build a richer, multisensory understanding of an environment.
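The ImageBind paper describes how this binding works: each modality has its own encoder, and that encoder is trained contrastively against image embeddings using naturally paired data (for example, video frames and their audio) with an InfoNCE loss. Below is a minimal pure-Python sketch of a symmetric InfoNCE loss on toy embeddings, assuming L2-normalized vectors and a fixed temperature of 0.07. The function names, vectors, and temperature are illustrative assumptions, not Meta's implementation.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit L2 length."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def info_nce(queries, keys, temperature=0.07):
    """One-directional InfoNCE: queries[i] should match keys[i].

    Every other key in the batch acts as a negative for queries[i].
    The temperature of 0.07 is an illustrative assumption.
    """
    q = [normalize(v) for v in queries]
    k = [normalize(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        logits = [dot(qi, kj) / temperature for kj in k]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_sum_exp - logits[i]  # equals -log softmax(logits)[i]
    return total / len(q)


def symmetric_info_nce(image_embs, other_embs, temperature=0.07):
    """Image->other plus other->image loss for naturally paired examples."""
    return (info_nce(image_embs, other_embs, temperature)
            + info_nce(other_embs, image_embs, temperature))


# Hypothetical toy batch: row i of each list comes from the same example.
images = [[1.0, 0.0], [0.0, 1.0]]
audio_aligned = [[0.9, 0.1], [0.1, 0.9]]  # each clip points toward its own image
audio_swapped = [[0.1, 0.9], [0.9, 0.1]]  # pairs deliberately mismatched

print(symmetric_info_nce(images, audio_aligned))  # small loss
print(symmetric_info_nce(images, audio_swapped))  # much larger loss
```

Training lowers this loss by pulling matched pairs together and pushing mismatched pairs apart; applying such an objective between images and each of the other modalities is what places them all in one shared embedding space.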

This opens up new possibilities for holistic systems that combine multiple modalities, such as 3D and IMU sensors, to design immersive virtual worlds. Meta believes the approach could unlock new applications for AI and outperform traditional models that process only one type of data.

The ultimate goal of ImageBind is to mimic human perception and predict connections between data in a way that is similar to how humans perceive or imagine an environment. This could eventually take much of the legwork out of the design process and enable content creators to make immersive videos with realistic soundscapes and movement based on only text, image, or audio input.

ImageBind's Capabilities

ImageBind can also ingest data from IMUs (inertial measurement units), sensors that measure an object's acceleration and rotation, from which motion information such as velocity and orientation can be estimated.

ImageBind could also open new doors in accessibility, generating real-time multimedia descriptions to help people with vision or hearing impairments.

Its ability to learn a shared representation across multiple modalities is significant. Because every modality is mapped into the same embedding space, the model can improve performance on a range of tasks, including few-shot and zero-shot tasks. This ability to learn from multiple sources at once makes it an attractive option for many kinds of applications.
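Zero-shot use of a shared embedding space can be sketched as follows: embed a query from one modality (here, audio), embed a text prompt for each candidate label, and pick the label with the highest cosine similarity. The 3-D vectors below are hypothetical stand-ins for real ImageBind embeddings, which are much higher-dimensional, and `zero_shot_classify` is an illustrative helper, not part of the ImageBind code.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def zero_shot_classify(query_emb, label_embs):
    """Return the label whose text embedding is most similar to the query."""
    return max(label_embs, key=lambda label: cosine(query_emb, label_embs[label]))


# Hypothetical toy 3-D embeddings standing in for real model outputs.
text_labels = {
    "a dog barking": [0.9, 0.1, 0.0],
    "rain falling": [0.1, 0.9, 0.1],
    "a car engine": [0.0, 0.2, 0.9],
}
audio_clip = [0.8, 0.2, 0.1]  # e.g. an audio embedding of a barking dog

print(zero_shot_classify(audio_clip, text_labels))  # prints: a dog barking
```

No audio classifier was trained here: the labels are just text, and the shared space does the matching, which is why new labels can be added without retraining.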

ImageBind's Use Cases

ImageBind has a myriad of potential applications, ranging from the realm of research to the domain of accessibility.

One of the most significant use cases of ImageBind is research, where it can help create multimodal AI systems that assimilate and learn from the diverse types of data around them. As the number of supported modalities grows, it also opens the way for holistic systems that combine 3D and IMU sensors to design immersive virtual worlds.

By providing a comprehensive understanding of the environment, ImageBind can aid researchers in developing new and innovative systems that can transform the way we perceive and interact with the world.

Moreover, ImageBind can prove to be a boon in the accessibility space, by generating real-time multimedia descriptions to aid people with visual or auditory impairments.

With ImageBind's assistance, content creators can produce immersive videos that feature realistic soundscapes and movements, based on mere text, image, or audio input.

ImageBind can link diverse types of data, such as text, images/videos, audio, 3D measurements (depth), temperature data (thermal), and motion data (from inertial measurement units), without needing training data for every possible combination. Because each modality is aligned with images, modalities that were never paired directly during training, such as audio and text, still end up linked. This can substantially reduce the time and effort spent on the design process and could eventually lead to more precise and responsive recommendations.

How to Access ImageBind?

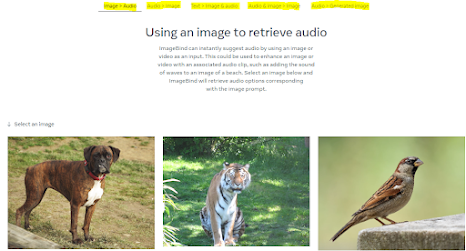

There are several ways to access ImageBind. The first is the ImageBind website, which hosts a demo of the tool. In the demo, users select a modality and then observe how ImageBind processes the data.

The ImageBind model is also available through its GitHub repository, where users can download the code and use it in their research. The code and model weights are free of charge (released under a non-commercial license), which makes them an attractive option for anyone interested in developing multimodal AI systems.

The ImageBind paper is also available on arXiv. It is the authoritative source for researchers who want technical insight into the model, explaining how ImageBind works and how it can be applied in research.

Finally, the ImageBind model is also described on the Facebook AI website, where researchers can learn about the tool's potential applications.

The demo on the ImageBind website lets users play around with different examples and modalities.

Users can select an image and retrieve audio that matches the scene it depicts.

Users can select audio and retrieve an image that corresponds to the sound.

Users can combine two images, or an image and an audio file, to generate another image.
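The demo features listed here reduce to nearest-neighbor search in the shared embedding space. The sketch below assumes each item has already been embedded; the toy 3-D vectors and the helper names `retrieve` and `combine` are hypothetical. It shows retrieval rather than generation, and combining inputs is modeled as summing L2-normalized embeddings, an assumption about how such composition can be done rather than a description of the demo's internals.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(u, v):
    """Cosine similarity between two vectors."""
    return sum(a * b for a, b in zip(normalize(u), normalize(v)))


def retrieve(query, gallery):
    """Return gallery names sorted from most to least similar to the query."""
    return sorted(gallery, key=lambda name: cosine(query, gallery[name]), reverse=True)


def combine(*embeddings):
    """Compose a query by summing L2-normalized embeddings (an assumption)."""
    normed = [normalize(e) for e in embeddings]
    return normalize([sum(column) for column in zip(*normed)])


# Hypothetical toy embeddings; the axes loosely mean (dog, beach, rain).
image_gallery = {
    "dog on a beach": [0.7, 0.7, 0.0],
    "dog indoors": [1.0, 0.0, 0.0],
    "rainy street": [0.0, 0.1, 1.0],
    "empty beach": [0.0, 1.0, 0.1],
}
audio_gallery = {
    "barking": [1.0, 0.0, 0.1],
    "waves": [0.0, 1.0, 0.0],
    "rain": [0.0, 0.0, 1.0],
}
beach_photo = [0.05, 1.0, 0.0]
bark_clip = [1.0, 0.0, 0.1]

print(retrieve(beach_photo, audio_gallery)[0])                      # image -> audio: waves
print(retrieve(bark_clip, image_gallery)[0])                        # audio -> image: dog indoors
print(retrieve(combine(beach_photo, bark_clip), image_gallery)[0])  # image + audio: dog on a beach
```

Neither input alone ranks "dog on a beach" first (the photo alone prefers "empty beach", the bark alone prefers "dog indoors"), but their combined query does, which is the intuition behind composing an image and an audio clip into a single query.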

All relevant links are provided under 'source' at the end of this article.

Conclusion
