Stability AI and its multimodal AI research lab DeepFloyd have announced the research release of a new model. The research on this model is expected to lead to the development of new applications in various fields such as art, design, storytelling, virtual reality, and accessibility. This new fully open-source model contributes to these fields by generating photorealistic images based on text prompts and by intelligently integrating text into images. The new model is called “DeepFloyd IF”.

What is DeepFloyd IF?

DeepFloyd IF is a text-to-image model that can generate high-quality images based on text prompts.

Specification

The model was trained on a high-quality custom dataset called LAION-A, which contains 1 billion (image, text) pairs. LAION-A is an aesthetic subset of the English part of the LAION-5B dataset. The model uses the T5-XXL-1.1 language model as a text encoder to aid in understanding text prompts and employs cross-attention layers to better align the text prompt and the generated image.
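
To make the cross-attention idea mentioned above more concrete, here is a minimal single-head sketch in NumPy. It is not DeepFloyd IF's actual layer: the dimensions, the random projection matrices, and the function name `cross_attention` are all assumptions chosen for illustration. It only shows the general mechanism, in which queries come from image features while keys and values come from the text embedding.

```python
import numpy as np

def cross_attention(image_tokens, text_tokens, w_q, w_k, w_v):
    """Single-head cross-attention: image positions attend to text tokens."""
    q = image_tokens @ w_q                      # queries from the image, (n_img, d)
    k = text_tokens @ w_k                       # keys from the text, (n_txt, d)
    v = text_tokens @ w_v                       # values from the text, (n_txt, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])     # scaled dot-product scores
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over text tokens
    return weights @ v, weights

# Hypothetical sizes: 16 image positions, 5 text tokens, head width 8.
rng = np.random.default_rng(0)
image_tokens = rng.standard_normal((16, 32))
text_tokens = rng.standard_normal((5, 64))
w_q = rng.standard_normal((32, 8))
w_k = rng.standard_normal((64, 8))
w_v = rng.standard_normal((64, 8))

attended, weights = cross_attention(image_tokens, text_tokens, w_q, w_k, w_v)
print(attended.shape, weights.shape)  # (16, 8) (16, 5)
```

Each row of `weights` sums to 1, so every image position receives a weighted mix of the text tokens' values.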

Features

The DeepFloyd IF model has several notable features. Firstly, it is capable of generating high-quality images. Secondly, it offers zero-shot image-to-image translation, meaning it can modify an existing image according to a new prompt without being fine-tuned for that task. Thirdly, it can integrate legible text into the images it generates. Finally, it can accurately apply text descriptions to generate images that depict various objects appearing in different spatial relations.

Performance

DeepFloyd IF's performance can be evaluated using the Fréchet Inception Distance (FID), a metric for assessing the quality of images created by a generative model, such as a generative adversarial network (GAN) or a diffusion model. Lower scores are better. DeepFloyd IF achieves a zero-shot FID score of 6.66 on the COCO dataset.

The score measures the distance between the feature vectors of real and generated images. The features are extracted with the Inception v3 image classification model, and the score summarizes how similar the two groups are in terms of the statistics of those features. Unlike the earlier Inception Score (IS), which evaluates only the distribution of generated images, FID compares the distribution of generated images with the distribution of real images, which is why it is generally considered the better metric for evaluating image generation.

The FID score is widely used in the evaluation of generative models of images, music, and video.
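
The FID described above has a closed form once each set of features is summarized by a mean vector and a covariance matrix: the squared distance between the means plus Tr(C_r + C_g - 2 (C_r C_g)^(1/2)). The sketch below computes that quantity with NumPy. It is illustrative only: the helper name `frechet_distance` is made up for this article, and random 4-dimensional vectors stand in for real Inception v3 features, so the numbers are not comparable to published FID scores.

```python
import numpy as np

def frechet_distance(feats_real, feats_gen):
    """Fréchet distance between Gaussians fitted to two (n, d) feature sets."""
    mu_r = feats_real.mean(axis=0)
    mu_g = feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)

    diff = mu_r - mu_g
    # Tr((cov_r cov_g)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_r @ cov_g; small negative values from rounding
    # are clipped to zero before taking the root.
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_g) - 2.0 * trace_sqrt)

# Stand-in features (assumption): random vectors instead of Inception v3 outputs.
rng = np.random.default_rng(0)
real = rng.standard_normal((500, 4))
shifted = real + 2.0  # same spread, mean moved by 2 in each of 4 dimensions

print(frechet_distance(real, real))
print(frechet_distance(real, shifted))
```

Two identical feature sets give a distance of essentially zero. Shifting every feature by 2 leaves the covariance unchanged, so only the mean term remains, which here is 4 x 2^2 = 16 up to floating-point error.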

Structure and usage of DeepFloyd IF model

To use the DeepFloyd IF model for text-to-image generation, follow the instructions provided in the documentation. The model pairs a frozen text encoder with three cascaded pixel diffusion stages to generate high-quality images from text prompts.

First, the text prompt is encoded using the T5-XXL-1.1 language model.

Next comes cascaded pixel diffusion: a base model generates a 64x64 pixel image from the text embedding, and two super-resolution models then upscale it, first to 256x256 and then to 1024x1024 pixels.

The model can accurately apply text descriptions to generate images with various objects appearing in different spatial relations, something that has been challenging for other text-to-image models. The model also offers zero-shot image-to-image translation, which is achieved by resizing the original image to 64 pixels, adding noise through forward diffusion, and using backward diffusion with a new prompt to denoise the image.
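
The first two steps of the image-to-image procedure described above (resizing to 64 pixels and adding noise through forward diffusion) can be sketched in a few lines of NumPy. This is a rough illustration under stated assumptions, not DeepFloyd IF's code: the helper names, the average-pool resize, the linear noise schedule, and the choice of timestep 400 are all hypothetical. The final step, denoising with a new prompt, requires the trained model and is not shown.

```python
import numpy as np

def downsample_to_64(image):
    """Average-pool a square (H, W, C) image whose side is a multiple of 64."""
    side, _, channels = image.shape
    factor = side // 64
    return image.reshape(64, factor, 64, factor, channels).mean(axis=(1, 3))

def forward_diffuse(x0, t, betas, rng):
    """Sample x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    alpha_bar_t = np.cumprod(1.0 - betas)[t]   # cumulative signal retention at step t
    noise = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * noise

rng = np.random.default_rng(0)
betas = np.linspace(1e-4, 0.02, 1000)                 # assumed linear schedule
image = rng.uniform(-1.0, 1.0, size=(256, 256, 3))    # stand-in for a real photo

small = downsample_to_64(image)                       # 64x64 starting point
noisy = forward_diffuse(small, t=400, betas=betas, rng=rng)
print(small.shape, noisy.shape)  # (64, 64, 3) (64, 64, 3)
```

The timestep controls how much of the original survives: a small `t` keeps most of the source image, while a large `t` leaves mostly noise and gives the new prompt more freedom.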

Who can use this model?

The DeepFloyd IF model is available to anyone for research purposes under a non-commercial, research-permissive license. Researchers can use the model to explore and test advanced text-to-image generation techniques.

How to access this model?

To access the DeepFloyd IF model, visit the official DeepFloyd website. There you can find information about DeepFloyd IF, including its features, architecture, and usage conditions. The model card and code are available on GitHub. All the links are listed in the 'Sources' section at the end of this article.

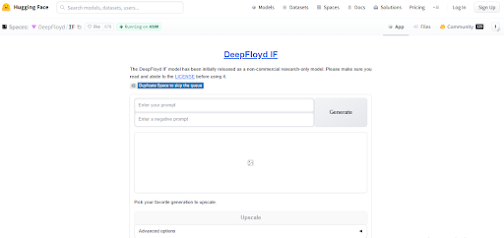

You can also try the Gradio demo available on the Hugging Face website. The demo allows users to input text prompts and generate corresponding images using the DeepFloyd IF model.

Conclusion

DeepFloyd IF is a remarkable text-to-image model that can create realistic and diverse images from text inputs and smartly incorporate text into images. It has several features that make it stand out from other text-to-image models, such as its high degree of photorealism, its ability to handle different aspect ratios and spatial relations, and its zero-shot image-to-image translation capability. The model has a modular and cascaded structure that uses the T5-XXL-1.1 language model as a text encoder and a pixel diffusion model to generate high-resolution images. The model can be used by researchers, developers, artists, and anyone who wants to explore the possibilities of text-to-image generation. The model is available as an open-source technology on GitHub and can be accessed through the Stability AI website or the DeepFloyd IF app.

Sources

Forums: https://linktr.ee/deepfloyd

Website: https://deepfloyd.ai/deepfloyd-if

Hugging Face: https://huggingface.co/DeepFloyd

GitHub: https://github.com/deep-floyd/IF
