The field of artificial intelligence keeps pushing machines toward new capabilities, and one of the most sought-after is the ability to reason well. Today's LLMs excel at recognizing patterns and making statistical predictions, but they can fail on problems that depend mainly on logical deduction, commonsense understanding, or complex multi-step problem solving. This gap between pattern recognition and genuine reasoning limits the range of applications LLMs can serve.

DeepSeek-R1 is an innovative approach that tackles this challenge head-on: it uses reinforcement learning (RL) to train LLMs to become more capable reasoners. It is a significant step toward AI systems that do not merely process information but understand and reason about it.

Model Variants

DeepSeek-R1 comes in several variants, each with different characteristics and uses. DeepSeek-R1-Zero is trained with large-scale reinforcement learning applied directly to the base model, without a preliminary supervised fine-tuning (SFT) stage. It has 671B total parameters, of which 37B are activated per token, and a 128K context length; DeepSeek-R1 shares the same configuration. DeepSeek-R1 builds on R1-Zero and addresses its limitations through a multi-stage training pipeline that improves reasoning performance. Finally, there are smaller dense models distilled from DeepSeek-R1, which perform better than models of the same size trained directly with RL. Together, the variants range from pure-RL experimentation on a foundation model (R1-Zero), to the refined flagship model (DeepSeek-R1), to efficient distilled models.
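As a quick worked example of what "671B total, 37B activated" means: DeepSeek-R1 inherits a mixture-of-experts (MoE) architecture from DeepSeek-V3, in which each token is routed through only a subset of the network. The snippet below uses only the two figures quoted above to compute the share of parameters active per token; the constant and function names are illustrative.

```python
# Parameter counts quoted for DeepSeek-R1-Zero and DeepSeek-R1, in billions.
TOTAL_PARAMS_B = 671
ACTIVE_PARAMS_B = 37


def active_fraction(active: float, total: float) -> float:
    """Share of all parameters that participate in processing a single token."""
    if total <= 0:
        raise ValueError("total must be positive")
    return active / total


fraction = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
print(f"{fraction:.1%} of parameters are active per token")  # 5.5% of parameters are active per token
```

Roughly speaking, per-token compute is therefore closer to that of a 37B-parameter dense model, while all 671B parameters still have to be stored in memory.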

Key Features of DeepSeek-R1

- Explicit Focus on Reasoning: DeepSeek-R1's core strength is its use of reinforcement learning specifically to train reasoning ability. Whereas many LLMs rely primarily on supervised learning, RL trains the model to produce answers that are not only correct but also supported by coherent, well-reasoned explanations, which helps it build robust reasoning skills.

- Emergent Chain-of-Thought Reasoning: Almost any model can be prompted to produce chain-of-thought (CoT) reasoning, but in DeepSeek-R1 this behavior emerges from the training procedure itself. The model learns to produce explanations as part of its reasoning process, not merely in response to specific prompting techniques, which tends to make its chain of thought more robust and coherent.

- Emphasis on Transparency and Explainability: Because the model is explicitly trained to give explanations, it can show users how it arrived at an answer. This transparency fosters trust and supports debugging and analysis.

- Generalization Benefits from RL: Although training focuses on reasoning, large-scale RL has also been observed to improve performance on general language tasks. This suggests that training for reasoning can benefit other language abilities as well.
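To make the transparency point concrete: DeepSeek-R1 typically writes its reasoning before the final answer, delimited by `<think>` and `</think>` tags. Treat that exact format as an assumption and check the model card. The hypothetical helper below separates the reasoning from the final answer so each can be logged or inspected on its own.

```python
import re
from typing import NamedTuple, Optional


class ParsedResponse(NamedTuple):
    reasoning: Optional[str]  # chain-of-thought text, or None if no block was found
    answer: str               # the final answer shown to the user


# Non-greedy match of a <think>...</think> block; DOTALL lets "." span newlines.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> ParsedResponse:
    """Split a raw completion into its reasoning and its final answer."""
    match = _THINK_RE.search(text)
    if match is not None:
        return ParsedResponse(match.group(1).strip(), text[match.end():].strip())
    if "</think>" in text:
        # Assumption: some chat templates pre-fill the opening <think> tag,
        # so only the closing tag appears in the generated text.
        reasoning, _, answer = text.partition("</think>")
        return ParsedResponse(reasoning.strip(), answer.strip())
    # No reasoning markers at all: treat the whole output as the answer.
    return ParsedResponse(None, text.strip())


raw = "<think>\n2 + 2 means adding two and two.\n</think>\n\nThe answer is 4."
parsed = split_reasoning(raw)
print(parsed.reasoning)  # 2 + 2 means adding two and two.
print(parsed.answer)     # The answer is 4.
```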

Capabilities and Use Cases of DeepSeek-R1

DeepSeek-R1's approach to reasoning opens up new applications. Its key capabilities include:

- Pioneering Reasoning Research via Pure RL: DeepSeek-R1-Zero shows that effective reasoning can develop through RL alone, without initial supervised fine-tuning, offering new insight into how reasoning emerges in LLMs. Because both the pure-RL model and the refined model are available, researchers can compare the two training approaches directly.

- Transforming Education: Strong results on education-oriented knowledge benchmarks such as MMLU and GPQA Diamond suggest that DeepSeek-R1 could improve educational applications, including AI-driven search, data-analysis tools for education, and better question-answering systems.

- Enabling Custom Model Development: Because DeepSeek-R1 and its distilled models are open source, developers can fine-tune them for specific reasoning tasks, enabling custom AI solutions in areas such as scientific research and complex data analysis.

Technological Advancements

DeepSeek-R1 introduces a training paradigm in which reinforcement learning (RL) is applied directly to the base model, without an initial supervised fine-tuning (SFT) stage. DeepSeek-R1-Zero demonstrates this: it develops reasoning skills autonomously using Group Relative Policy Optimization (GRPO), an RL algorithm that scores each sampled output relative to a group of outputs for the same prompt instead of relying on a separate critic model, to explore chain-of-thought (CoT) reasoning and complex problem solving. This process fosters self-verification, reflection, and the generation of long CoTs, showing that LLM reasoning can be improved without preliminary SFT. The rewards reinforce both the correctness of the answer and the format of the structured reasoning. Through this self-evolution process, the model learns to allocate more computation to harder problems, and behaviors such as reflection and diverse problem-solving strategies emerge spontaneously.
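The core of GRPO can be sketched in a few lines. The idea is to sample a group of outputs for the same prompt, score each with a rule-based reward, and normalize each score against the group's mean and standard deviation to get its advantage, so no learned critic is needed. This sketch assumes a reward of 1.0 for a correct final answer plus 0.5 for a well-formed `<think>...</think>` block. Those weights, the tag format, and the helper names are illustrative assumptions, not the paper's exact reward. The sketch also omits the clipped policy-ratio objective and KL penalty used in the full GRPO update.

```python
import re
import statistics

# Illustrative reward weights (assumptions, not values from the paper).
ACCURACY_WEIGHT = 1.0
FORMAT_WEIGHT = 0.5

# Well-formed output: a non-empty <think> block followed by a non-empty answer.
_FORMAT_RE = re.compile(r"^<think>.+?</think>\s*\S", re.DOTALL)


def rule_based_reward(output: str, reference_answer: str) -> float:
    """Score one sampled output as format reward + accuracy reward."""
    reward = 0.0
    if _FORMAT_RE.match(output):
        reward += FORMAT_WEIGHT
    # Accuracy: compare the text after the last </think> with the reference.
    final = output.split("</think>")[-1].strip()
    if final == reference_answer:
        reward += ACCURACY_WEIGHT
    return reward


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """A_i = (r_i - mean(r)) / std(r), computed within one group of samples."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std: an implementation choice
    if std == 0:
        # Every sample scored the same, so none is better than the group average.
        return [0.0 for _ in rewards]
    return [(r - mean) / std for r in rewards]


# A made-up group of four sampled outputs for the prompt "What is 3 * 4?".
group = [
    "<think>3 * 4 = 12</think> 12",
    "<think>3 * 4 is 7</think> 7",
    "12",
    "<think>guess</think> 13",
]
rewards = [rule_based_reward(o, "12") for o in group]
print(rewards)  # [1.5, 0.5, 1.0, 0.5]
print(group_relative_advantages(rewards))
```

Outputs that beat their group's average get a positive advantage and are reinforced, while below-average ones are discouraged. The baseline comes from the group itself rather than from a separate value model.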

DeepSeek-R1 builds on R1-Zero with a multi-stage training pipeline. It begins with a "cold-start" stage that fine-tunes the base model on a curated, high-quality dataset of long CoT examples. These examples were gathered by few-shot prompting with long CoTs, by directly prompting for detailed answers with reflection, and by having human annotators refine R1-Zero outputs. A reasoning-focused RL stage comes next, then rejection sampling combined with SFT on general-purpose tasks, and finally an RL stage that aligns the model with human preferences. Together these stages address R1-Zero's limitations, such as poor readability and language mixing. DeepSeek also uses distillation to transfer the reasoning patterns learned by the large model to smaller, more efficient ones. Remarkably, the distilled models outperform models of the same size trained directly with RL and improve on existing open-source models. This combination of RL, cold-start data, and distillation is among the most effective strategies reported so far for building strong reasoning ability into LLMs.
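The rejection-sampling step can be sketched as a filter. Several candidate responses are generated per prompt from the RL checkpoint. Only those with a correct final answer and readable reasoning are kept, and the survivors become SFT data. In the paper, data curated in this way is also used to fine-tune the smaller distilled models. This is a simplified illustration with several assumptions: the candidates are made up, correctness is an exact string match, and "readable" is approximated by rejecting English reasoning that contains CJK characters. The paper's actual filters are more involved, and all names here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    prompt: str
    reasoning: str
    answer: str


def is_cjk(ch: str) -> bool:
    """True for characters in the main CJK Unified Ideographs block."""
    return "\u4e00" <= ch <= "\u9fff"


def mixes_languages(text: str, max_cjk_ratio: float = 0.0) -> bool:
    """Heuristic (assumption): flag reasoning whose letters are more than
    max_cjk_ratio CJK; a stand-in for a real language-consistency check."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return False
    cjk = sum(1 for ch in letters if is_cjk(ch))
    return cjk / len(letters) > max_cjk_ratio


def rejection_sample(
    candidates: list[Candidate], references: dict[str, str]
) -> list[dict[str, str]]:
    """Keep candidates with a correct answer and single-language reasoning."""
    kept = []
    for c in candidates:
        if c.answer.strip() != references.get(c.prompt):
            continue  # wrong (or unverifiable) answer: reject
        if mixes_languages(c.reasoning):
            continue  # mixed-language reasoning: reject
        kept.append({
            "prompt": c.prompt,
            # Hypothetical SFT target format: reasoning in tags, then the answer.
            "completion": f"<think>{c.reasoning}</think>{c.answer}",
        })
    return kept


references = {"What is 6 * 7?": "42"}
candidates = [
    Candidate("What is 6 * 7?", "Six times seven is 42.", "42"),
    Candidate("What is 6 * 7?", "Six 乘以 seven is 42.", "42"),
    Candidate("What is 6 * 7?", "Six times seven is 48.", "48"),
]
sft_data = rejection_sample(candidates, references)
print(len(sft_data))  # 1
```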

Performance Comparison with Other Models

DeepSeek-R1 was evaluated on a range of reasoning benchmarks and tasks. The table below, as reported in the paper, compares DeepSeek-R1 with OpenAI-o1-1217 and OpenAI-o1-mini on mathematics, coding, and logical reasoning benchmarks. DeepSeek-R1 matches or exceeds its counterparts on many of them, which shows the complexity of the problems the model can handle.

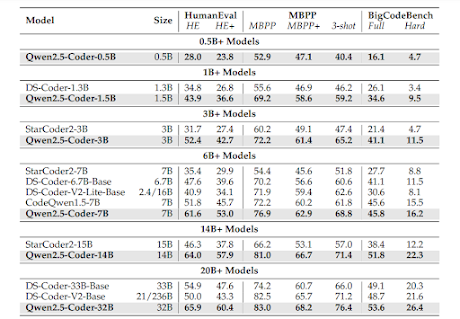

Beyond exam-style benchmarks, DeepSeek-R1 achieved a length-controlled win rate of 87.6% on AlpacaEval 2.0 and a win rate of 92.3% on ArenaHard. These results show strong performance on open-ended generation and non-exam-oriented questions. It also outperformed DeepSeek-V3 by a wide margin on long-context benchmarks, indicating improved long-context understanding. The distilled versions, especially the 32B and 70B models, set new records among dense models on reasoning benchmarks. For example, DeepSeek-R1-Distill-Qwen-7B scored 55.5% on AIME 2024, surpassing QwQ-32B-Preview.

The distilled models (the DeepSeek-R1-Distill-Qwen variants from 1.5B to 32B, along with Llama-based variants) were evaluated further on reasoning benchmarks and showed notable improvements over other open-source models and even some closed-source ones. For instance, the 14B distilled model outperformed QwQ-32B-Preview on all evaluation metrics, while the 32B and 70B models significantly exceeded o1-mini on most benchmarks. These findings indicate that distilling reasoning patterns from a larger model gives better results than training smaller base models directly with reinforcement learning.
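Benchmark percentages such as the 55.5% on AIME 2024 are averages over problems. The R1 paper reports pass@1 estimated from several sampled responses per problem. The sketch below shows that kind of estimate: for each problem, take the fraction of k samples that are correct, then average over problems. The results data is made up, and the function name is hypothetical.

```python
from statistics import fmean


def pass_at_1(correct_flags_per_problem: list[list[bool]]) -> float:
    """Estimate pass@1: per problem, the fraction of the k sampled responses
    that are correct, then the mean of those fractions over all problems."""
    if not correct_flags_per_problem:
        raise ValueError("need at least one problem")
    per_problem = []
    for flags in correct_flags_per_problem:
        if not flags:
            raise ValueError("each problem needs at least one sample")
        per_problem.append(sum(flags) / len(flags))
    return fmean(per_problem)


# Made-up results: 3 problems, k = 4 samples each (True = correct answer).
results = [
    [True, True, False, True],     # 0.75
    [False, False, False, False],  # 0.0
    [True, True, True, True],      # 1.0
]
print(f"pass@1 = {pass_at_1(results):.1%}")  # pass@1 = 58.3%
```

Averaging over several samples gives a less noisy estimate than grading a single sampled response per problem.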

How to Access and Use DeepSeek-R1

DeepSeek-R1 can be accessed in several ways. Users can chat with it on the DeepSeek website or call it through the DeepSeek Platform API, which is compatible with the OpenAI API. Instructions for running the model locally are in the DeepSeek-V3 repository. The smaller distilled variants can be served with tools such as vLLM and SGLang, just like other popular open models. The official GitHub repository links to the research paper, the downloadable models, and the evaluation results.
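Because the DeepSeek Platform API follows the OpenAI chat-completions format, a request is just a JSON POST. The sketch below builds, but does not send, such a request using Python's standard library. The base URL `https://api.deepseek.com`, the `/chat/completions` path, the model name `deepseek-reasoner`, and the `reasoning_content` response field follow DeepSeek's API documentation at the time of writing. Treat them as assumptions and confirm them against the current docs. The `DEEPSEEK_API_KEY` environment variable name is our own choice.

```python
import json
import os
import urllib.request
from typing import Optional, Tuple

BASE_URL = "https://api.deepseek.com"  # assumption: verify against current API docs
MODEL = "deepseek-reasoner"            # assumption: API model name for DeepSeek-R1


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build, but do not send, an OpenAI-style chat-completions request."""
    body = {
        "model": MODEL,
        # A single user turn: zero-shot, no example conversations.
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_message(response: dict) -> Tuple[Optional[str], str]:
    """Return (reasoning, answer) from a parsed chat-completions response.

    Assumption: the reasoning model returns its chain of thought in a
    `reasoning_content` field next to the usual `content` field.
    """
    message = response["choices"][0]["message"]
    return message.get("reasoning_content"), message["content"]


# Usage: the key is read from a hypothetical environment variable name.
request = build_chat_request(
    "How many prime numbers are below 20?",
    os.environ.get("DEEPSEEK_API_KEY", "sk-placeholder"),
)
print(request.get_method(), request.full_url)
# To send it: with urllib.request.urlopen(request) as resp: data = json.load(resp)
```

Using only the standard library keeps the sketch dependency-free. In practice the official `openai` Python client can be pointed at the same base URL instead.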

Limitations

DeepSeek-R1 still needs improvement in several areas. In general capabilities such as function calling, multi-turn conversation, complex role-playing, and consistent JSON output, it currently falls short of DeepSeek-V3. It is optimized for Chinese and English, so it may mix languages when handling queries in other languages, typically falling back to English for its reasoning and responses. DeepSeek-R1 is also sensitive to prompts: few-shot prompting consistently degrades its performance. The recommended method is therefore zero-shot prompting, in which the problem and the desired output format are described directly. Finally, evaluating software engineering tasks is time-consuming, which makes RL on them inefficient, so large-scale RL has not been applied extensively to these tasks. As a result, DeepSeek-R1 has not yet shown a large improvement over DeepSeek-V3 on software engineering benchmarks.
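Since few-shot examples tend to hurt DeepSeek-R1, a zero-shot prompt should state the problem and the desired output format directly. The hypothetical helper below builds a single user message that does this. The math directive mirrors the wording suggested in the DeepSeek-R1 repository's usage notes, which also advise against adding a system prompt. Both points are worth re-checking against the current README.

```python
import re
from typing import Dict, List, Optional

# Directive wording suggested for math problems in the DeepSeek-R1 README.
MATH_DIRECTIVE = (
    "Please reason step by step, and put your final answer within \\boxed{}."
)


def build_zero_shot_messages(
    problem: str, output_format: Optional[str] = None, is_math: bool = False
) -> List[Dict[str, str]]:
    """Build a single user message: no system prompt and no few-shot examples."""
    parts = [problem.strip()]
    if output_format:
        # State the expected output format directly instead of showing examples.
        parts.append(f"Give your final answer in this format: {output_format}")
    if is_math:
        parts.append(MATH_DIRECTIVE)
    return [{"role": "user", "content": "\n\n".join(parts)}]


def extract_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...}; nested braces are not handled."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", text)
    return matches[-1] if matches else None


messages = build_zero_shot_messages(
    "What is the sum of the first 10 positive integers?", is_math=True
)
print(messages[0]["content"])
print(extract_boxed("Adding 1 through 10 gives \\boxed{55}."))  # 55
```

Asking for a fixed answer marker such as `\boxed{}` makes the final answer easy to extract automatically without resorting to few-shot demonstrations of the format.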

Future work will explore how long chain-of-thought (CoT) reasoning can improve function calling, multi-turn conversations, and role-playing. Handling queries in languages other than Chinese and English is another priority. For software engineering, future versions aim to improve performance by applying rejection sampling to relevant data or by running evaluations asynchronously during the RL process. The goal of this work is to make DeepSeek-R1 more robust and versatile across a wider range of tasks.

Conclusion

DeepSeek-R1 uses a novel reinforcement learning paradigm that produces emergent chain-of-thought reasoning and improved explainability, making it better at solving hard problems and explaining its solutions. A significant contribution is the release of distilled models, which make sophisticated AI reasoning feasible on far more modest hardware and thus broaden its use cases. The open-source release of the DeepSeek-R1 models empowers the community to explore and build more powerful reasoning AI across science, education, software development, and everyday problem solving.

Source

Website: https://api-docs.deepseek.com/news/news250120

Research Paper: https://github.com/deepseek-ai/DeepSeek-R1/blob/main/DeepSeek_R1.pdf

GitHub Repo: https://github.com/deepseek-ai/DeepSeek-R1

Model weights (all variants): https://huggingface.co/collections/deepseek-ai/deepseek-r1-678e1e131c0169c0bc89728d

Try the chat model: https://chat.deepseek.com/