Introduction

Code models have improved rapidly and now handle a much wider range of tasks with higher accuracy. Early models struggled to understand context across long code sequences and could not reliably produce correct code. Progress has come from specialized tokens and better training techniques. Today's models can generate and complete code efficiently across multiple programming languages and help break down complex coding problems.

Qwen2.5-Coder is a prominent example of these developments. It learns the context and relationships of code across files and repositories, addressing problems that earlier models ran into. Beyond solving existing problems, it also provides a foundation that future AI-based code-writing systems can build on.

What is Qwen2.5-Coder?

Qwen2.5-Coder is a family of large language models built specifically for coding tasks. The models use the Qwen2.5 architecture and are pre-trained on a large corpus of code and text, which enables them to generate code and handle a wide range of code-related tasks efficiently.

Model Variants

Qwen2.5-Coder comes in several base model sizes to suit different requirements:

- Qwen2.5-Coder-32B: The largest model, with 32 billion parameters, capable of highly detailed and complex outputs.

- Qwen2.5-Coder-14B: 14 billion parameters, balancing capability against the resources it needs.

- Qwen2.5-Coder-7B: 7 billion parameters; efficient and works well on less powerful hardware.

- Qwen2.5-Coder-3B: A smaller model with 3 billion parameters, making it cheaper to run.

- Qwen2.5-Coder-1.5B: 1.5 billion parameters, built for efficiency.

- Qwen2.5-Coder-0.5B: The lightest version, with 0.5 billion parameters, and the most efficient to run.

The base models are the foundation for instruction-tuned models and their quantized variants within the Qwen2.5-Coder series.

Key Features of Qwen2.5-Coder

Key features of Qwen2.5-Coder include:

- Multilingual Programming: Supports 92 programming languages, making it versatile across different programming needs.

- Repository-Level Code Completion: It understands the relationships between calls across multiple files in the same repository, which enables effective code completion.

- Code More: Qwen2.5-Coder is trained on far more code data than CodeQwen1.5: 5.5 trillion tokens of source code, text-code grounding data, and synthetic data. Training at this scale considerably improves performance on code-related tasks.

- Learn More: It inherits the math and general capabilities of the Qwen2.5 base model, giving applications such as Code Agents a more complete foundation.

- Text-to-SQL: It transforms natural language questions into structured SQL queries, allowing non-technical users to query databases directly.

- Long Context Support: It supports a context length of up to 128K tokens for both text understanding and generation.
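Repository-level completion works by packing several files into a single prompt so the model can use cross-file context. The sketch below assumes the `<|repo_name|>` and `<|file_sep|>` special tokens and the layout shown in the Qwen2.5-Coder GitHub README; the repository name, file paths, and file contents are hypothetical placeholders. It only builds the prompt string; sending it to a base model is not shown.

```python
# Minimal sketch: assemble a repository-level completion prompt.
# Assumption: token names and layout follow the Qwen2.5-Coder README;
# check the repository for the exact format before relying on it.
REPO_NAME_TOKEN = "<|repo_name|>"
FILE_SEP_TOKEN = "<|file_sep|>"


def build_repo_prompt(repo_name: str, files: list[tuple[str, str]]) -> str:
    """Concatenate (path, content) pairs into one prompt.

    The last file is left unfinished so the model continues it,
    using the earlier files as cross-file context.
    """
    parts = [f"{REPO_NAME_TOKEN}{repo_name}"]
    for path, content in files:
        parts.append(f"{FILE_SEP_TOKEN}{path}\n{content}")
    return "\n".join(parts)


# Hypothetical two-file repository: utils.py defines a helper,
# and main.py starts to use it but is incomplete.
files = [
    ("utils.py", "def add(a, b):\n    return a + b\n"),
    ("main.py", "from utils import add\n\nresult = "),
]
prompt = build_repo_prompt("example-repo", files)
print(prompt)
```

Putting the file being completed last means the model has already seen `add` defined in `utils.py` by the time it continues `main.py`.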

Capabilities/Use Cases of Qwen2.5-Coder

Qwen2.5-Coder's strengths make it applicable to a wide range of use cases:

- Multilingual programming support: The model understands many programming languages, so it suits projects that combine several of them, with consistent performance across languages.

- Simplified Database Interaction: With its Text-to-SQL capability, it lets non-programmers query databases using natural language.

- Learning Applications: It is useful for learning programming concepts, offering code-generation assistance, debugging support, and explanations of code logic.

- Code-Centric Reasoning Models: It can serve as the basis for powerful code-centric reasoning models, pushing the state of the art in code intelligence.
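Text-to-SQL output is easy to sanity-check before trusting it. The sketch below is an illustrative assumption, not part of Qwen2.5-Coder itself: it builds a prompt from a schema and a question, then runs a candidate query (hard-coded here, standing in for the model's answer) against a throwaway in-memory database using Python's standard `sqlite3` module. The table, rows, and prompt wording are hypothetical.

```python
import sqlite3

# Hypothetical schema and data, for illustration only.
SCHEMA = """
CREATE TABLE employees (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    department TEXT NOT NULL,
    salary INTEGER NOT NULL
);
"""
ROWS = [
    (1, "Ana", "Engineering", 120000),
    (2, "Ben", "Engineering", 100000),
    (3, "Cleo", "Sales", 80000),
]


def build_sql_prompt(schema: str, question: str) -> str:
    """Assumed prompt layout: the schema first, then the question."""
    return (
        "Given the following SQLite schema:\n"
        f"{schema.strip()}\n\n"
        f"Write a SQL query to answer: {question}\n"
    )


def run_candidate_query(sql: str) -> list[tuple]:
    """Execute a model-proposed query on a fresh in-memory database."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        conn.executemany("INSERT INTO employees VALUES (?, ?, ?, ?)", ROWS)
        return conn.execute(sql).fetchall()
    finally:
        conn.close()


question = "What is the average salary in each department?"
prompt = build_sql_prompt(SCHEMA, question)

# Stand-in for the model's answer; in practice this would come from
# sending `prompt` to Qwen2.5-Coder.
candidate_sql = (
    "SELECT department, AVG(salary) FROM employees "
    "GROUP BY department ORDER BY department"
)
print(run_candidate_query(candidate_sql))
```

Executing against a disposable copy of the schema catches syntax errors and wrong column names without touching a real database.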

How does Qwen2.5-Coder work?

Qwen2.5-Coder combines the Qwen2.5 architecture with specialized training methods and improvements aimed at code intelligence. In particular, it adds special tokens that help the model understand code and better distinguish and manipulate complex code structures.
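Fill-in-the-middle (FIM) is one of the tasks these special tokens support: the model is given the code before and after a gap and generates the missing part. The sketch below assumes the `<|fim_prefix|>`, `<|fim_suffix|>`, and `<|fim_middle|>` token names from the Qwen2.5-Coder README and technical report, in prefix-suffix-middle order; the quicksort snippet is a hypothetical example, and the training-example helper is illustrative rather than the actual pre-training code.

```python
# Minimal sketch of a fill-in-the-middle (FIM) prompt builder.
# Assumption: token names follow the Qwen2.5-Coder README and use
# prefix-suffix-middle order; confirm against the official docs.
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Prompt asking the model to generate the code between prefix and suffix.

    Whatever the model emits after FIM_MIDDLE is the infilled middle.
    """
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def make_fim_training_example(code: str, start: int, end: int) -> tuple[str, str]:
    """Cut code[start:end] out as the target middle (illustrative only).

    How spans are actually sampled during pre-training is not covered here.
    """
    prefix, middle, suffix = code[:start], code[start:end], code[end:]
    return build_fim_prompt(prefix, suffix), middle


# Hypothetical inference example: the partition lines of quicksort are missing.
prefix = (
    "def quicksort(arr):\n"
    "    if len(arr) <= 1:\n"
    "        return arr\n"
    "    pivot = arr[len(arr) // 2]\n"
)
suffix = "    return quicksort(left) + middle + quicksort(right)\n"
prompt = build_fim_prompt(prefix, suffix)
print(prompt)
```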

The model is trained in a three-stage pipeline. First, file-level pre-training trains the model on individual code files of up to 8,192 tokens, using both next-token prediction and the fill-in-the-middle (FIM) objective. Next, repo-level pre-training extends the context length to 32,768 tokens and uses the YaRN mechanism to support sequences of up to 128K tokens. This stage is key to learning the relationships between files in a repository, which end-to-end repository-level code completion depends on. Finally, the model is instruction-tuned on a curated dataset of coding problems and their solutions, including both real-world examples and synthetic data generated by code-focused LLMs. This improves its ability to follow instructions and solve coding tasks.
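In practice, YaRN-based long-context support is usually switched on through a `rope_scaling` entry in the model's Hugging Face `config.json`. The sketch below assumes the values suggested in the Qwen2.5 model cards (YaRN with a factor of 4.0 over 32,768 original positions); verify them against the card for the checkpoint you use. The stand-in config dict and the file path passed to `patch_config_file` are hypothetical.

```python
import json
from pathlib import Path

# Assumed YaRN settings from the Qwen2.5 model cards:
# 32,768 original positions x factor 4.0 = 131,072 (about 128K) tokens.
YARN_ROPE_SCALING = {
    "type": "yarn",
    "factor": 4.0,
    "original_max_position_embeddings": 32768,
}


def enable_yarn(config: dict) -> dict:
    """Return a copy of a model config with YaRN rope scaling added."""
    patched = dict(config)
    patched["rope_scaling"] = dict(YARN_ROPE_SCALING)
    return patched


def effective_context(config: dict) -> int:
    """Context length implied by the rope_scaling entry, or the base length."""
    scaling = config.get("rope_scaling")
    if not scaling:
        return config["max_position_embeddings"]
    return int(scaling["factor"] * scaling["original_max_position_embeddings"])


def patch_config_file(path: Path) -> None:
    """Rewrite a local config.json in place (path is hypothetical)."""
    config = json.loads(path.read_text())
    path.write_text(json.dumps(enable_yarn(config), indent=2))


# Hypothetical stand-in for a downloaded config.json.
base = {"max_position_embeddings": 32768}
print(effective_context(base))
print(effective_context(enable_yarn(base)))
```

`enable_yarn` returns a copy rather than mutating its input, so the original config can still be used for short-context workloads.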

Data curation covers five categories: source code data, text-code grounding data, synthetic data, math data, and text data. Quality is controlled through rule-based filtering, hierarchical filtering for text-code data, and validation of synthetic data. The pipeline also decontaminates the datasets, applies chain-of-thought (CoT) techniques to strengthen reasoning, and uses a multilingual sandbox to verify code, including its syntactic correctness, across many programming languages.
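Decontamination removes training samples that overlap with benchmark test data, so that scores are not inflated by memorization. The sketch below illustrates one common approach, word-level n-gram overlap; the default of n = 10, the whitespace tokenization, and the sample strings are assumptions for illustration, not a description of the exact Qwen2.5-Coder pipeline.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Word-level n-grams after simple whitespace tokenization."""
    words = text.split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def decontaminate(train_docs: list[str], test_docs: list[str], n: int = 10) -> list[str]:
    """Drop every training document that shares at least one n-gram with the test set."""
    test_grams: set[tuple[str, ...]] = set()
    for doc in test_docs:
        test_grams |= ngrams(doc, n)
    return [doc for doc in train_docs if not (ngrams(doc, n) & test_grams)]


# Hypothetical data; n=5 keeps the toy example short.
test_set = ["def add(a, b): return a + b"]
train_set = [
    "def add(a, b): return a + b  # copied benchmark solution",
    "def mul(a, b): return a * b",
]
clean = decontaminate(train_set, test_set, n=5)
print(clean)
```

Collecting all test n-grams into one set first makes each training-document check a single set intersection.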

Performance Evaluation with Other Models

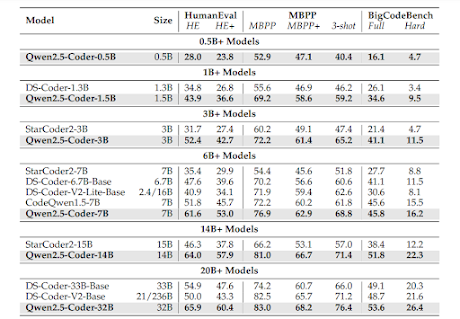

Qwen2.5-Coder achieves state-of-the-art performance compared with other models on key benchmarks such as HumanEval (shown in the table) and MultiPL-E, which measure code generation and multilingual capability, respectively. On Python code generation, Qwen2.5-Coder-7B-Base outperforms the much larger DS-Coder-33B-Base on every metric: HumanEval, HumanEval+, MBPP, MBPP+, and BigCodeBench-Complete.
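Code-generation benchmarks such as HumanEval and MBPP are typically scored as pass@k: the probability that at least one of k sampled solutions passes the problem's unit tests (with greedy decoding, pass@1 is simply the fraction of problems solved). The sketch below implements the widely used unbiased estimator 1 - C(n-c, k) / C(n, k), for n samples of which c are correct. It shows how such scores are generally computed; the per-problem counts are hypothetical and are not Qwen2.5-Coder results.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: generated samples, c: samples that passed all tests, k: budget (k <= n).
    """
    if n - c < k:
        # Every size-k subset must contain at least one correct sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for three problems, 10 samples each.
results = [(10, 10), (10, 3), (10, 0)]
print(mean_pass_at_k(results, k=1))  # about 0.433
```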

Qwen2.5-Coder also achieved leading results on the MultiPL-E benchmark (see the table), which measures proficiency across multiple languages. Of the eight languages tested (Python, C++, Java, PHP, TypeScript, C#, Bash, and JavaScript), it scored above 60% accuracy in five.

The Qwen2.5-Coder instruct models lead code-generation benchmarks such as HumanEval and BigCodeBench-Instruct. For example, Qwen2.5-Coder-7B-Instruct achieves higher accuracy than its counterparts, including models with more parameters. It scores above 80% on HumanEval+ and performs well on BigCodeBench-Instruct. The same model also achieves the highest mean accuracy on McEval, which measures generation performance across 40 programming languages, outperforming even larger models.

Additional evaluations covered code completion (HumanEval Infilling), code reasoning (CRUXEval), mathematical reasoning (MATH, GSM8K, MMLU-STEM, and TheoremQA), general natural language understanding (MMLU, MMLU-Redux, ARC-Challenge, TruthfulQA, WinoGrande, and HellaSwag), long-context modeling ('Needle in the Code'), code editing (the Aider benchmark), and Text-to-SQL (Spider and BIRD). Together, these assessments span Qwen2.5-Coder's capabilities across a wide range of code-related tasks and show its strong performance relative to existing models.

How to access and work with this model

Qwen2.5-Coder is available through several channels. Its GitHub repository provides detailed documentation, setup instructions, and usage examples. The repository also sets out the licensing terms: the model is open source and permits commercial use, so developers and organizations can incorporate it into their workflows as long as they meet the license requirements. For direct integration into projects, the models and their variants are available in the Hugging Face model collection, where you can explore and use the different versions. To try the model without any setup, an online demo on Hugging Face lets you test its performance and see its output in real time.
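For local use, the Hugging Face `transformers` library is the usual entry point. The sketch below follows the chat-template pattern shown in the Qwen model cards. It assumes `transformers`, `torch`, and `accelerate` (needed for `device_map="auto"`) are installed and that the `Qwen/Qwen2.5-Coder-7B-Instruct` checkpoint fits on your hardware; the system prompt and the `max_new_tokens` value are illustrative choices. The heavy imports are deferred so the message-building helper works on its own.

```python
def build_messages(user_prompt: str) -> list[dict[str, str]]:
    """Chat messages in the role/content format that chat templates expect."""
    return [
        # Illustrative system prompt (an assumption); adjust as needed.
        {"role": "system", "content": "You are a helpful coding assistant."},
        {"role": "user", "content": user_prompt},
    ]


def generate(user_prompt: str,
             model_name: str = "Qwen/Qwen2.5-Coder-7B-Instruct",
             max_new_tokens: int = 512) -> str:
    """Load the model (downloading it on first use) and return its reply."""
    # Deferred imports: requires transformers, torch, and accelerate.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(
        model_name, torch_dtype="auto", device_map="auto"
    )
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated reply is decoded.
    new_ids = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_ids, skip_special_tokens=True)


# Example (downloads several GB on the first run):
# print(generate("Write a Python function that checks whether a string is a palindrome."))
```

Smaller checkpoints from the same collection can be swapped in through `model_name` when hardware is limited.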

Limitations and Future Work

Although Qwen2.5-Coder performs well at code generation, reasoning, and multilingual support, its reliance on synthetic data may introduce biases or cause problems in complex, real-world coding scenarios. Reducing the bias that synthetic data can introduce is necessary to ensure the model works well in practical applications. In addition, although the YaRN mechanism significantly improves the model's ability to understand long contexts, there is still considerable room for improvement on larger and more complex codebases.

Future directions for Qwen2.5-Coder include fine-tuning the 32B version to compete with proprietary models. A larger model could push the boundaries of code intelligence and enable more sophisticated applications. Finally, building strong code-centric reasoning models on top of Qwen2.5-Coder is a promising direction.

Conclusion

Qwen2.5-Coder offers strong support across programming languages, detects more errors, and produces better code than its predecessor. Its flexibility in integrating with various systems makes it valuable to developers in many fields. Some aspects still need improvement, and continued research and development should make it even more efficient and effective.

Source

Blog: https://qwenlm.github.io/blog/qwen2.5-coder-family/

Technical report: https://arxiv.org/pdf/2409.12186

GitHub repo: https://github.com/QwenLM/Qwen2.5-Coder

Model Collection: https://huggingface.co/collections/Qwen/qwen25-coder-66eaa22e6f99801bf65b0c2f

Try on demo: https://huggingface.co/spaces/Qwen/Qwen2.5-Coder-demo

Disclaimer - This article is intended purely for informational purposes. It is not sponsored or endorsed by any company or organization, nor does it serve as an advertisement or promotion for any product or service. All information presented is based on publicly available resources and is subject to change. Readers are encouraged to conduct their own research and due diligence.
