Introduction

Automated unit test generation is the practice of using software tools to automatically create test cases, complete with assertions, for individual units of code (for example, a single method encapsulated in a class). These tests can then run as part of continuous integration, helping keep buggy builds out of the code repository. With their sophisticated reasoning and generation abilities, Large Language Models (LLMs) are making automated unit test generation more accurate and better able to exercise the full functionality of the code under test.

Still, LLM-based unit test generation faces several challenges. With insufficient context, the model may make unfounded assumptions and produce invalid tests that fail to compile. Without test and coverage feedback, generated tests may raise runtime exceptions that are only discovered once the tests are actually executed. Finally, the model can suffer from repetition suppression, getting stuck in a loop of repeated self-repair or regeneration attempts. TestART tackles these problems by letting automated generation and repair iterations co-evolve, combining prompt injection with template-based repair to smooth out the process and prevent repetition.

A team of researchers at the State Key Laboratory for Novel Software Technology, Nanjing University, China and Huawei Cloud Computing Technologies Co., Ltd. developed TestART. The State Key Laboratory for Novel Software Technology is one of China's top software engineering research centers, while Huawei Cloud Computing Technologies specializes in cloud computing innovations. TestART was created to address these shortcomings of automated unit test generation while harnessing the strong capabilities of LLMs, particularly their ability to generate high-quality, practical, readable tests.

What is TestART?

TestART is a new approach to unit test generation that improves LLM-based testing through the co-evolution of automated generation and repair iterations. It exploits the strengths of LLMs while compensating for their weaknesses, producing accurate, human-readable test cases.

Key Features of TestART

- Template-based Repair Mechanism: Fixes bugs in LLM-generated test cases with repair templates and uses prompt injection to guide the next step of automated generation, avoiding repetition suppression.

- Coverage Feedback: Extracts coverage information from passed test cases and uses it as testing feedback to make the final test case more thorough.

TestART Capabilities / Use Case

- Integrated Generation-Repair Co-evolution: Combines generation-repair co-evolution, testing feedback, and prompt injection into a single iterative process.

- High-Quality Test Cases: Fixes bugs in generated test cases and feeds coverage information back to produce high-quality test cases.

- Example Use Case: Can be applied to unit testing throughout software development.

- Better Quality and Readability: Improves the quality, effectiveness, and readability of unit test cases significantly beyond prior methods.

How does TestART work? Workflow and Architecture

How do automated generation and repair iteration work together? The LLM first generates initial test cases, which are then passed through a set of repair templates that correct typical bugs and errors. The repaired test cases are injected back into the prompt when tests are generated in later rounds, which mitigates repetition suppression.
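To make template-based repair more concrete, here is a minimal Python sketch, assuming each template pairs a regular expression matched against a Java compiler error message with a rewrite of the test source. The `RepairTemplate` class, the two templates, and `apply_templates` are hypothetical illustrations, not TestART's actual template set.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class RepairTemplate:
    """Hypothetical repair template: an error-message pattern plus a source rewrite."""
    name: str
    error_pattern: re.Pattern
    fix: Callable[[str], str]


def add_throws_exception(test_src: str) -> str:
    # Append "throws Exception" to no-argument void methods that have no throws
    # clause, so unreported checked exceptions stop blocking compilation.
    return re.sub(r"(void\s+\w+\s*\(\s*\))\s*\{", r"\1 throws Exception {", test_src)


def add_assertions_import(test_src: str) -> str:
    # Prepend the JUnit 5 static Assertions import if it is missing.
    imp = "import static org.junit.jupiter.api.Assertions.*;\n"
    return test_src if imp in test_src else imp + test_src


# Hypothetical template list; a real system would have many more entries.
TEMPLATES: List[RepairTemplate] = [
    RepairTemplate("declare-checked-exception",
                   re.compile(r"unreported exception"), add_throws_exception),
    RepairTemplate("missing-assert-import",
                   re.compile(r"cannot find symbol[\s\S]*assert"), add_assertions_import),
]


def apply_templates(test_src: str, error_msg: str) -> Optional[str]:
    """Return the repaired test, or None when no template matches the error."""
    for template in TEMPLATES:
        if template.error_pattern.search(error_msg):
            return template.fix(test_src)
    return None
```

Returning `None` when no template applies lets a caller decide whether to fall back to regeneration by the LLM; that fallback policy is an assumption of this sketch.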

The TestART workflow involves several main steps. Once the source code has been preprocessed, TestART calls the ChatGPT-3.5 model to generate initial test cases. These cases then enter a co-evolution loop of compilation, execution, and repair with predefined templates. Successfully repaired tests are analyzed for code coverage. If the coverage is high enough, the test case is emitted as the final result. Otherwise, TestART uses the coverage information as feedback, combining it with the repaired test case to drive the next iteration of test generation.
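The loop described above can be sketched in Python as follows. This is a simplified illustration under stated assumptions: the LLM call, the compile-and-run step, and template repair are passed in as plain callables, and the names `generate_repair_loop`, `RunResult`, `threshold`, `max_rounds`, and `max_repairs` are hypothetical, not part of TestART.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional, Set


@dataclass
class RunResult:
    passed: bool
    error: str = ""
    covered_lines: Set[int] = field(default_factory=set)


def generate_repair_loop(
    focal_lines: Set[int],
    generate: Callable[[str], str],                # stand-in for the LLM call
    run: Callable[[str], RunResult],               # compile and execute one test
    repair: Callable[[str, str], Optional[str]],   # template repair, None if no match
    threshold: float = 0.9,
    max_rounds: int = 5,
    max_repairs: int = 3,
) -> Optional[str]:
    if not focal_lines:
        raise ValueError("focal_lines must be non-empty")
    prompt = "Write a JUnit test for the focal method."
    best: Optional[str] = None
    best_cov = -1.0
    for _ in range(max_rounds):
        test = generate(prompt)
        result = run(test)
        # Repair stage: apply templates until the test passes or nothing applies.
        for _ in range(max_repairs):
            if result.passed:
                break
            fixed = repair(test, result.error)
            if fixed is None or fixed == test:
                break
            test = fixed
            result = run(test)
        if not result.passed:
            # Prompt injection: hand the repaired-but-failing test back to the model
            # so the next round starts from it instead of repeating the same output.
            prompt = f"This test still fails with:\n{result.error}\n{test}\nFix it."
            continue
        cov = len(result.covered_lines & focal_lines) / len(focal_lines)
        if cov > best_cov:
            best, best_cov = test, cov
        if cov >= threshold:
            return test  # coverage is high enough: emit as the final result
        # Coverage feedback: merge the passing test with its uncovered lines.
        uncovered = sorted(focal_lines - result.covered_lines)
        prompt = (f"This test passes:\n{test}\n"
                  f"Lines still uncovered: {uncovered}. Extend it to cover them.")
    return best  # best passing test seen, or None if nothing ever passed
```

Injecting the callables keeps the control flow testable without a real model or JVM; returning the best passing test when the round budget runs out is a design choice of this sketch.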

TestART's architecture is designed to exploit LLMs where they excel while mitigating their shortcomings. It consists of modules such as a test generator, an automated repair module, and a coverage analyzer. The repair module applies a set of general repair templates to remove compilation errors, runtime errors, and assertion failures, which substantially reduces the number of generated tests that fail. The coverage analyzer steers each new iteration, so that every round of generation brings an incremental improvement in quality and coverage. Working in concert, these modules enable TestART to generate coherent, useful unit tests that are well ahead of earlier approaches in both pass rate and code coverage.
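As an illustration of what a coverage analyzer might do, the Python sketch below reads a line-level coverage report, computes coverage for a focal method's line range, and turns the missed lines into a feedback prompt. It assumes a JaCoCo-style XML report in which each `<line>` element carries `nr` (line number) and `ci` (covered instructions) attributes; the source does not say which coverage tool TestART uses, and `focal_coverage` and `feedback_prompt` are hypothetical names.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def focal_coverage(report_xml: str, source_file: str,
                   first: int, last: int) -> Tuple[float, List[int]]:
    """Line coverage of lines first..last in source_file, plus the missed lines."""
    root = ET.fromstring(report_xml)
    covered: List[int] = []
    missed: List[int] = []
    for sf in root.iter("sourcefile"):
        if sf.get("name") != source_file:
            continue
        for line in sf.iter("line"):
            nr = int(line.get("nr"))
            if first <= nr <= last:
                # Treat a line as covered if at least one instruction on it ran.
                if int(line.get("ci", "0")) > 0:
                    covered.append(nr)
                else:
                    missed.append(nr)
    total = len(covered) + len(missed)
    rate = len(covered) / total if total else 0.0
    return rate, sorted(missed)


def feedback_prompt(test_src: str, missed: List[int]) -> str:
    # Merge the passing test with its uncovered lines for the next generation round.
    lines = ", ".join(str(n) for n in missed)
    return (f"The following test passes:\n{test_src}\n"
            f"These focal-method lines are still uncovered: {lines}. "
            f"Add test cases that execute them.")
```

Restricting the count to the focal method's line range keeps lines exercised incidentally elsewhere in the class from inflating the coverage figure.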

Comparison of TestART with Other Models

One area where TestART really excels is pass rate. The test cases it generates have a pass rate of 78.55%, approximately 18% higher than both the ChatGPT-4.0 model and ChatUniTest, a method built on the same ChatGPT-3.5 model (see table below). This is a clear indication of the effectiveness of TestART's repair step, which uses fixed templates to correct bugs in the generated test cases. As a result, TestART's test cases are not just syntactically correct but also semantically meaningful, which leads to a higher pass rate.

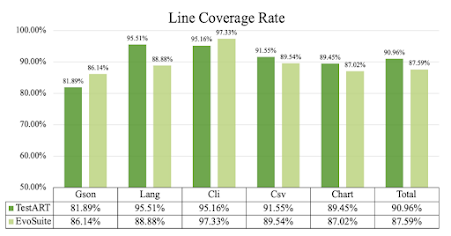

Besides pass rate, TestART also excels in line coverage. It achieves a line coverage rate of 90.96% on the focal methods that passed the test, 3.4% higher than EvoSuite (see figure below). This is a significant improvement, because line coverage is a key metric for evaluating how effective a testing method is. TestART's high line coverage means its test cases exercise the code thoroughly, leading to more reliable and effective testing.
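To clarify how the two headline metrics relate, here is a small Python sketch that computes pass rate over all focal methods and line coverage only over the methods whose tests passed. It assumes coverage is pooled over all lines of the passed methods; the paper may aggregate differently, and `FocalResult` and `pass_rate_and_coverage` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FocalResult:
    passed: bool   # did the generated test for this focal method pass?
    covered: int   # focal-method lines executed by the test
    total: int     # executable lines in the focal method


def pass_rate_and_coverage(results: List[FocalResult]) -> Tuple[float, float]:
    """Pass rate over all methods; line coverage over passed methods only."""
    if not results:
        return 0.0, 0.0
    passed = [r for r in results if r.passed]
    pass_rate = len(passed) / len(results)
    # Assumption: pool lines across passed methods rather than averaging per method.
    total_lines = sum(r.total for r in passed)
    coverage = sum(r.covered for r in passed) / total_lines if total_lines else 0.0
    return pass_rate, coverage
```

Because failing methods are excluded from the coverage denominator, a method whose test fails lowers the pass rate but not the reported line coverage, which is why the two numbers should be read together.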

Apart from pass rate and line coverage, TestART was also evaluated on error metrics such as syntax error rate, compilation error rate, and runtime error rate. The results show that TestART performs well on these metrics, producing fewer errors than previous methods. TestART was also evaluated on multiple projects, including Gson, Lang, Cli, Csv, and Chart, with consistently higher performance. In summary, the results indicate that TestART is a highly accurate approach to unit test case generation.

TestART’s Edge Over A3Test and ChatUniTest

TestART's emphasis on assertion knowledge and test verification makes a comparison with A3Test natural. Although both approaches use domain adaptation techniques to transfer knowledge from assertion generation to test case generation, TestART outperforms Transformer+Classifier approaches in generating accurate and more complete test cases. Moreover, TestART corrects bugs in the generated test cases using fixed templates, making them semantically meaningful and improving the pass rate.

ChatUniTest, on the other hand, is an LLM-based approach that uses an adaptive focal context mechanism and a generation-validation-repair process to correct errors in generated unit tests. Although this provides richer context in prompts and helps detect errors, TestART generates higher-quality test cases more efficiently thanks to its attention to assertion knowledge and verification of trace values. Once a test case passes, TestART extracts coverage information and feeds it back into generation. This feedback improves the quality and efficiency of its output compared with many other test generation tools, and underlies its advantage in pass rate and line coverage.

This sets TestART apart from A3Test and ChatUniTest: domain adaptation principles are used to carry existing knowledge from assertion generation over to test case generation, which improves accuracy and coverage. With specific assertion knowledge of the system under test (SUT) and fixed templates for correcting bugs, TestART aims to ensure that its test cases are both syntactically and semantically correct. This makes TestART useful mainly for developers who want to maximize pass rates and code coverage. Although A3Test and ChatUniTest also provide useful features, TestART stands out as the first choice for generating high-quality, reliable unit test cases efficiently.

How to Access and Use TestART?

TestART is open source and available on GitHub, where you can find setup instructions for installing it locally as well as demos; follow the links provided at the end of this article to learn more. According to its license, it is free for commercial use.

Limitations and Future Work

TestART still has room for improvement and is limited in some areas. Its handcrafted repair templates are unlikely to cover every error condition, which suggests that generating templates automatically or learning them from data is needed. The quality of the initial test cases produced by the LLM could be improved through fine-tuning or more advanced prompt engineering. TestART's performance on complex projects and large-scale test scenarios also requires validation. Finally, future work could investigate porting TestART to other programming languages and testing frameworks.

Conclusion

TestART presents a promising approach to generating high-quality test cases by taking advantage of LLMs while minimizing the drawbacks of existing methods. Its high pass rate and line coverage make it valuable to developers, and it has the potential to significantly improve both software quality and development productivity.
