Introduction

Document-based conversational AI has improved greatly since the advent of Large Language Models (LLMs), which make conversations more accurate and contextually relevant. By incorporating a wide variety of information, these models process data and produce natural-sounding text better than traditional methods, leading to higher-quality responses in document-based conversations. The output, however, remains plagued by challenges such as ambiguous queries, difficulty maintaining context over long conversations, and a lack of data privacy. To address these, AI models like Cerebras DocChat use advanced training techniques and synthetic data generation. They are part of a broader advance in AI that is stretching the limits of what conversational agents can do.

Who Developed Cerebras DocChat?

Cerebras Systems, founded in 2015, is a well-established company that builds AI hardware and software to accelerate deep learning. The motivation for developing DocChat was to build a fast, scalable document-based conversational AI model that could be trained quickly while performing at or above the level of GPT-4. This was made possible by the team's deep experience in LLM training and dataset curation, together with newer approaches they were able to implement, including automated synthetic data generation.

What is Cerebras DocChat?

Cerebras DocChat is a large-scale document-based conversational question-answering model designed to perform at the level of GPT-4. It builds on state-of-the-art training techniques and synthetic data generation methods to improve both performance and robustness. It excels at understanding documents and generating human-like text, which makes it useful across a wide range of document-based conversational applications.

Model Variants

- Cerebras Llama3-DocChat: Built on the Llama 3 base model, this model draws on recent advances in document-based Q&A research, specifically from the Nvidia ChatQA series of models.

- Cerebras Dragon-DocChat: This is a multi-turn retriever model built on top of Dragon+ and fine-tuned on conversational Q&A data provided by ChatQA.

Key Features of Cerebras DocChat

The main features of Cerebras DocChat are:

- Blazing fast training: Llama3-DocChat can be trained end-to-end in just a few hours, and Dragon-DocChat needs only a quick fine-tuning run.

- Top Performance: Achieves state-of-the-art results across multiple benchmarks (Doc2Dial, QuAC, and CoQA).

- Synthetic Data Generation: Overcomes constraints of real data by introducing synthetic data to strengthen the model.

- Open Source: Model weights, training recipes and datasets are made open source to encourage transparency and a collaborative community.

- High Recall: The models exhibit substantial improvements in retrieval; Dragon-DocChat improves by 8.9% over Dragon+ in terms of recall rates.

Capabilities/Use Cases of Cerebras DocChat

- Customer Support: Answers customer queries using information drawn from across all of your documentation.

- Legal Document Analysis: Helps analyze and summarize routine legal documents for easy review by lawyers.

- Academic Research: Helps researchers extract information from academic papers and research documents.

- Information Retrieval: Makes it easy to locate relevant information in large datasets used across many fields.

- Eliminates Manual Documentation: Streamlines documentation generation and management for teams working across multiple verticals.

Advanced Architectures and Development of Cerebras DocChat

Cerebras DocChat models use advanced architectures and the latest research insights. Llama3-DocChat is based on Llama 3 by Meta, which comes in sizes such as 8B and 70B parameters and is designed for efficient deployment on consumer-size GPUs. The model incorporates elements from Nvidia's ChatQA series and excels at conversational question answering and retrieval-augmented generation. Synthetic data generation was used to address the limited availability of real data.

Dragon-DocChat is built on the Dragon+ model, a dense retriever that was initialized from RetroMAE and trained further on augmented data from the MS MARCO corpus. Fine-tuning used a contrastive loss with hard negatives, which trains the model to distinguish relevant passages from irrelevant ones that look similar. This led to significant improvements in recall rates.
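To make the idea of a contrastive loss with hard negatives more concrete, here is a minimal sketch in plain Python. It assumes an InfoNCE-style formulation with dot-product scores, which is a common choice for dense retrievers; the exact loss, temperature and negative-mining strategy used for Dragon-DocChat are not stated in this article, and the function names and toy vectors below are purely illustrative.

```python
import math


def dot(u, v):
    """Dot-product similarity between two embedding vectors."""
    return sum(a * b for a, b in zip(u, v))


def contrastive_loss(query, positive, negatives, temperature=1.0):
    """InfoNCE-style loss (an assumed formulation, not the confirmed recipe).

    Returns the negative log-probability of the positive passage under a
    softmax over the scores of the positive and all negative passages.
    """
    scores = [dot(query, positive)] + [dot(query, n) for n in negatives]
    scaled = [s / temperature for s in scores]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    top = max(scaled)
    log_denominator = top + math.log(sum(math.exp(s - top) for s in scaled))
    return log_denominator - scaled[0]


# Toy 2-dimensional embeddings (hypothetical values).
query = [1.0, 0.0]
positive = [0.9, 0.1]
easy_negative = [-1.0, 0.0]  # clearly unrelated passage
hard_negative = [0.8, 0.3]   # looks similar to the query but is not the answer

loss_easy = contrastive_loss(query, positive, [easy_negative])
loss_hard = contrastive_loss(query, positive, [hard_negative])
print(f"loss with easy negative: {loss_easy:.4f}")
print(f"loss with hard negative: {loss_hard:.4f}")
```

The hard negative produces a larger loss than the easy one, so it contributes a stronger training signal. That is the usual motivation for mining hard negatives when fine-tuning a retriever.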

The Cerebras Model Zoo supported the development of these models. It is a repository of deep learning models optimized for Cerebras hardware, and it provides best practices for coding models for that hardware. This infrastructure was crucial for building and refining the models so that they can handle complex document-based conversational Q&A tasks.

Performance Evaluation

The Cerebras DocChat models were tested extensively to check how well they handle document-based conversational questions. Cerebras has released two DocChat models, both designed for answering questions based on documents.

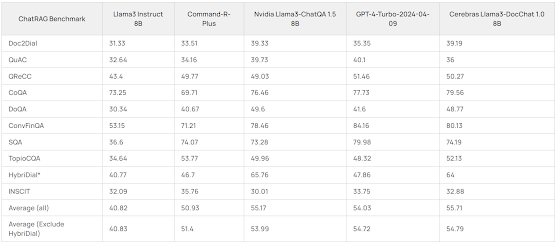

The Llama3-DocChat model was evaluated on the ChatRAG Benchmark, where it showed strong performance across various tasks. As the table above shows, Llama3-DocChat scored as well as or better than other top models such as Nvidia Llama3-ChatQA 1.5 8B and GPT-4-Turbo-2024-04-09. It scored 39.19 on the Doc2Dial task, beating GPT-4-Turbo-2024-04-09’s score of 35.35.

The Dragon-DocChat model, which focuses on multi-turn retrieval, was tested on several benchmarks, with the results shown in the table above. On the Doc2Dial benchmark, Dragon-DocChat achieved a Recall@1 score of 51.54, outperforming both Facebook Dragon+ (43.95) and Nvidia Dragon Multiturn (50.11). This shows how good the model is at retrieving relevant document passages in response to questions.

Llama3-DocChat was also tested on general language tasks using the Eleuther Eval Harness, where it maintained competitive scores compared to other models. Both DocChat models were thoroughly evaluated across various benchmarks, including QuAC, CoQA, DoQA, and ConvFinQA. Overall, the Cerebras DocChat models have shown strong performance in document-based conversational Q&A tasks, often matching or beating the capabilities of other leading models in the field.
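For readers unfamiliar with the metric, Recall@k measures how often the correct passage appears among the top k passages returned by the retriever. The sketch below shows one simple way to compute it, assuming a single gold passage per query and a score reported as a percentage; the passage IDs and rankings are made up for illustration and are not taken from any benchmark.

```python
def recall_at_k(ranked_ids_per_query, gold_id_per_query, k=1):
    """Percentage of queries whose gold passage is in the top-k results.

    Assumes exactly one gold passage per query (a simplification).
    """
    hits = 0
    for ranked_ids, gold_id in zip(ranked_ids_per_query, gold_id_per_query):
        if gold_id in ranked_ids[:k]:
            hits += 1
    return 100.0 * hits / len(gold_id_per_query)


# Hypothetical retriever output: passages ranked best-first for four queries.
rankings = [
    ["p3", "p1", "p2"],
    ["p2", "p3", "p1"],
    ["p1", "p2", "p3"],
    ["p2", "p1", "p3"],
]
gold = ["p3", "p3", "p1", "p1"]

recall_1 = recall_at_k(rankings, gold, k=1)
recall_2 = recall_at_k(rankings, gold, k=2)
print(f"Recall@1 = {recall_1:.2f}, Recall@2 = {recall_2:.2f}")
```

In this toy example the gold passage is ranked first for two of the four queries, so Recall@1 is 50.00, and it is within the top two for all four, so Recall@2 is 100.00.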

Cerebras vs. Facebook vs. Nvidia: DocChat Models

Cerebras Dragon-DocChat, Facebook Dragon+ and Nvidia Dragon Multiturn are three distinct models, all designed for document-based conversational question answering tasks. Cerebras Dragon-DocChat excels in multi-turn settings thanks to its contrastive-loss fine-tuning, and it achieves the best accuracy on the Doc2Dial benchmark. Facebook Dragon+ uses a dense retriever for multi-turn conversations. Nvidia Dragon Multiturn handles various document-based Q&A tasks effectively. Cerebras Dragon-DocChat distinguishes relevant from irrelevant passages efficiently. Facebook Dragon+ combines supervised and unsupervised learning methods. Nvidia Dragon Multiturn uses supervised and reinforcement learning techniques together.

Cerebras Dragon-DocChat stands out for its superior retrieval accuracy. Its architecture is designed for document-based Q&A tasks, and effective training on conversational Q&A data boosts its performance. These strengths make it a top choice for such tasks. Facebook Dragon+ and Nvidia Dragon Multiturn are strong contenders too, but Cerebras Dragon-DocChat’s features make it especially well-suited to multi-turn document retrieval.

How do you use this model?

The model weights are available on Hugging Face, and the data preparation, training and evaluation code can be found on GitHub. The licensing terms allow copying and derivative works, which encourages collaboration. Source links are located at the end of this article.
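As a rough illustration of what loading the weights might look like, the sketch below uses the Hugging Face transformers library with the repository name listed in the Source section. The system prompt and the way the document is wrapped in the user turn are assumptions made for this example, not the officially recommended format, so check the model card for the exact prompt template before relying on it. The helper names `build_messages` and `answer` are hypothetical.

```python
MODEL_ID = "cerebras/Llama3-DocChat-1.0-8B"  # repository listed in the Source section


def build_messages(document, question):
    """Build a chat-style prompt that grounds the question in a document.

    The system prompt and <context> wrapper are illustrative assumptions;
    the model card may recommend a different format.
    """
    system = "Answer the user's question using only the provided document."
    user = f"<context>\n{document}\n</context>\n{question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def answer(document, question, max_new_tokens=256):
    """Generate an answer with the DocChat weights (downloads the model)."""
    # Imported here so the prompt helper above works without these packages.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=torch.bfloat16)

    # Render the chat template to text, then tokenize it.
    prompt = tokenizer.apply_chat_template(
        build_messages(document, question),
        tokenize=False,
        add_generation_prompt=True,
    )
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example call (requires the weights and enough memory for an 8B model):
# print(answer("Refunds are accepted within 30 days.", "What is the refund window?"))
```

The prompt-building step is kept separate from model loading so that the input format can be inspected or adjusted without downloading the 8B-parameter weights.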

Limitations And Future Work

The Cerebras DocChat models are a large step forward, but some serious limitations remain. A major difficulty is handling unanswerable questions, where even state-of-the-art models struggle on benchmarks such as QuAC and DoQA. Early versions also showed weak performance on arithmetic tasks, which was addressed with a Chain of Thought (CoT) approach and the NuminaMath-CoT dataset. The models' entity extraction abilities were initially poor because the training data lacked good examples, but integrating the SKGInstruct dataset has since led to progress.

Several further improvements to the Cerebras DocChat models are expected. One area is better support for longer contexts, so that coherence can be sustained across longer conversations and larger documents. Mathematical reasoning and problem solving also need further work, building on the observation that the CoT approach helps on arithmetic tasks. Sharing the models, training recipes and datasets as open source will further enable innovation in AI by allowing everyone to use these resources at scale.

Conclusion

Cerebras DocChat is built on an efficient methodology that combines creative strategies with extensive experience. It has the potential to change how many industries automate and improve document-based interactions. With continued work from the AI community, we can look forward to an even brighter future for conversational AI.

Source

Cerebras AI blog post: https://cerebras.ai/blog/train-a-gpt-4-level-conversational-qa-in-a-few-hours

Model Weights: https://huggingface.co/cerebras/Llama3-DocChat-1.0-8B

GitHub repo (Data, training, evaluation): https://github.com/Cerebras/DocChat

Disclaimer - This article is intended purely for informational purposes. It is not sponsored or endorsed by any company or organization, nor does it serve as an advertisement or promotion for any product or service. All information presented is based on publicly available resources and is subject to change. Readers are encouraged to conduct their own research and due diligence.
