Introduction

Large language models (LLMs) have become widely used in natural language processing (NLP) because they can generate fluent and diverse text on many topics. However, they are prone to factual errors, hallucinations, and toxic outputs. They are also expensive to train and fine-tune, requiring substantial computational resources and data.

How can we overcome these challenges and make LLMs more reliable, accurate, and efficient? A team of researchers from Boston University has proposed an approach called 'Platypus': a framework for quick, cheap, and powerful refinement of LLMs. Rather than relying on large volumes of training data, it fine-tunes existing models on a small, carefully curated dataset and then merges the results with other fine-tuned models.

What is Platypus?

Platypus is a family of fine-tuned and merged large language models (LLMs) that achieves strong benchmark performance. It refines pre-trained Llama 2 base models using a small amount of curated data, the Open-Platypus dataset, and then merges the resulting models with other fine-tuned models to combine their strengths.

Key Features of Platypus

Platypus has several features that make it stand out as an approach to refining large language models (LLMs).

- Curated Dataset: Platypus is trained on Open-Platypus, a small dataset assembled from existing open datasets and focused on STEM and logic questions. Keeping the dataset small and high in quality reduces the cost of fine-tuning.

- Parameter-Efficient Fine-Tuning: Platypus fine-tunes with LoRA adapters rather than updating every weight of the base model. This keeps the knowledge of the pre-trained model largely intact while cutting the compute needed for training.

- Contamination Checks: The team screens the training data for questions that duplicate or closely resemble benchmark test questions. This helps keep reported benchmark scores from being inflated by data leakage.

- Model Merging: Platypus adapters can be merged with other fine-tuned models. This can combine the strengths of a broad model with those of a specialized one, although the effect depends on the models chosen.

Capabilities and Use Cases of Platypus

Platypus models have been shown to perform strongly on benchmarks that test knowledge and reasoning, such as ARC, HellaSwag, MMLU, and TruthfulQA. They can also be used for applications that require high-quality text generation from LLMs. Some examples include:

- Content Creation: Platypus can help create engaging and informative content for blogs, websites, social media, newsletters, etc.

- Education: Platypus can help create educational materials for students and teachers, such as textbooks, quizzes, or explanations.

- Entertainment: Platypus can help create entertaining content for games, books, movies, music, etc.

- Business: Platypus can help create professional content for business purposes, such as reports, presentations, or emails.

- Programming: Platypus can help create code for various programming languages and tasks.

How does Platypus work?

Platypus is a framework for quick, cheap, and powerful refinement of large language models (LLMs). Its pipeline has three main parts: a curated dataset, parameter-efficient fine-tuning, and model merging. The starting point is a pre-trained LLM that can generate fluent and diverse text on many topics but can also produce factual errors, hallucinations, and toxic outputs. To improve such a model, Platypus fine-tunes it on a small set of carefully selected examples instead of retraining it from scratch.

The fine-tuning data, Open-Platypus, is curated from existing open datasets, with near-duplicate questions and questions that overlap with benchmark test sets filtered out. Fine-tuning is done with LoRA, which freezes the base model's weights and trains only small low-rank adapter matrices. Because so few parameters are trained, the paper reports that a 13B Platypus model can be trained on a single A100 GPU with about 25k questions in roughly 5 hours. This allows Platypus to improve the performance of LLMs quickly and cheaply.

In summary, Platypus works by fine-tuning a pre-trained LLM with LoRA adapters on a small, curated dataset and then merging the result with other models. This allows Platypus to improve the performance of LLMs using only a small amount of data.
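
To get a feel for why this kind of refinement is cheap, here is a minimal sketch of the parameter arithmetic behind LoRA, the low-rank adapter method the Platypus paper uses for fine-tuning. The layer shape and rank below are hypothetical values picked for illustration; they are not the settings used by the Platypus team.

```python
def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable weights for a rank-r update B @ A (B: d_out x r, A: r x d_in)."""
    return rank * (d_in + d_out)


# Hypothetical layer shape and rank, chosen only for illustration.
d_in, d_out, rank = 4096, 4096, 16
full = full_finetune_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

Under these assumed numbers, the adapter trains well under 1% of the weights in that layer, which is where most of the compute savings come from.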

How Platypus Models Beat the Competition with Model Merging

The Platypus team wanted to see how their models compare with other state-of-the-art models across tasks and domains, and how model merging affects performance. Model merging combines two or more LLMs into one in the hope of getting the strengths of each. For example, Platypus models can be merged with broad models like Instruct and Beluga, which handle many domains and tasks, or with specialized models like Camel, which excel in specific domains.
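
As a rough illustration of the idea, the sketch below merges two toy "models" by linear interpolation of their weights. This is one simple form of merging, shown under the assumption that both models share the same parameter names and shapes; it is not necessarily the exact procedure the Platypus team used, and the weight values are made up.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def merge_weights(a: Weights, b: Weights, alpha: float = 0.5) -> Weights:
    """Linear interpolation: alpha * a + (1 - alpha) * b, tensor by tensor."""
    if a.keys() != b.keys():
        raise ValueError("models must share the same parameter names")
    merged: Weights = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = [alpha * x + (1 - alpha) * y for x, y in zip(a[name], b[name])]
    return merged


# Toy "models" with two flattened parameter tensors each (made-up values).
platypus_like = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
other_model = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
merged = merge_weights(platypus_like, other_model, alpha=0.5)
print(merged)
```

The `alpha` parameter controls how much each parent contributes, which is one reason a merge can help on some tasks and hurt on others.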

source - https://platypus-llm.github.io/Platypus.pdf

(Researcher's Note: "Please note that GPT-4 and GPT-3.5 are not part of the official leaderboard but we have added their benchmark results for a closed-source model comparison.")

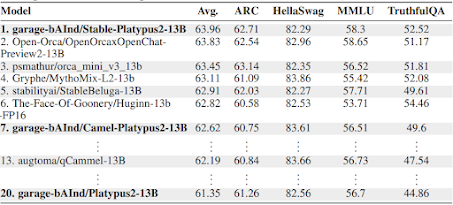

The Platypus team used Hugging Face Open LLM Leaderboard data from 8/10/23 to benchmark their models against others. The Platypus2-70B-instruct variant topped the leaderboard with an average score of 73.13, as shown in the table above, indicating strong performance across the leaderboard's benchmarks. The Stable-Platypus2-13B model stood out as the best 13-billion-parameter model with an average score of 63.96, as shown in the table below, which suggests that strong results are achievable with fewer computational resources.
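
For readers wondering where an "average score" comes from, here is a small sketch that assumes the leaderboard average is the plain mean of four benchmark scores (ARC, HellaSwag, MMLU, and TruthfulQA). The per-benchmark values are illustrative inputs chosen to be consistent with the 73.13 average reported above; they are not quoted from this article.

```python
from statistics import mean
from typing import Dict

# Illustrative per-benchmark scores (assumed, not quoted from the article),
# chosen so that their mean matches the reported 73.13 average.
scores: Dict[str, float] = {
    "ARC": 71.84,
    "HellaSwag": 87.94,
    "MMLU": 70.48,
    "TruthfulQA": 62.26,
}


def leaderboard_average(benchmark_scores: Dict[str, float]) -> float:
    """Unweighted mean of the benchmark scores, rounded to two decimals."""
    return round(mean(benchmark_scores.values()), 2)


print(leaderboard_average(scores))
```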

The Platypus team also analyzed how model merging influenced performance. They found that merging can help or hurt depending on the task, domain, and choice of models. For example, the Camel-Platypus2-70B model improved by 4.12% on ARC-Challenge, a test that requires scientific knowledge and reasoning. This suggests that merging Platypus with Camel, a model that specializes in science domains, can boost performance on science-related tasks. On the other hand, the Camel-Platypus2-13B model declined by 15.62% on the abstract_algebra test, which requires mathematical knowledge and reasoning. This suggests that merging Platypus with Camel, which does not specialize in math, can hurt performance on math-related tasks.
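
If you want to run the same kind of comparison on your own merges, a small helper like the one below may be enough. It assumes the reported figures are relative changes against the base model's score, which is an interpretation rather than something stated in this article, and the scores used here are hypothetical.

```python
def percent_change(base: float, merged: float) -> float:
    """Relative change of the merged model's score versus the base model, in percent."""
    if base == 0:
        raise ValueError("base score must be non-zero")
    return (merged - base) / base * 100.0


# Hypothetical scores for two tasks, not taken from the Platypus results.
print(percent_change(40.0, 42.0))  # merge helped on this task
print(percent_change(40.0, 34.0))  # merge hurt on this task
```

Computing this per task, rather than only on the overall average, is what makes regressions like the abstract_algebra one visible.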

These results show that model merging is a powerful technique that can enhance or diminish the performance of LLMs. The key is to choose the right models to merge for the right domains and tasks. The Platypus team has shown that they can do this well by creating models like Camel-Platypus2-70B that can perform consistently well across different benchmarks. However, model merging is not a one-size-fits-all solution, and it requires careful evaluation and experimentation before applying it to different scenarios and contexts.

How to access and use this model?

Platypus is open source and can be run locally by following the instructions in the project's GitHub repository. The project is released under the MIT license, which permits both research and commercial use; individual checkpoints on Hugging Face may carry their own license terms, so check the model card before commercial use. Pre-trained Platypus models are also available on the Hugging Face Model Hub, where you can download them for use in your projects. The Open-Platypus dataset is available on the Hugging Face Datasets Hub and can be used to fine-tune models for specific tasks or domains.
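
As a starting point, the sketch below shows one way to prompt a Platypus checkpoint with the Hugging Face transformers library. The checkpoint name and the Alpaca-style prompt template are assumptions on my part, so confirm both against the model card before relying on them. Running `generate` downloads the model weights and needs a machine with enough memory for a 13B model.

```python
MODEL_ID = "garage-bAInd/Platypus2-13B"  # assumed checkpoint name; verify on the hub


def build_prompt(instruction: str) -> str:
    """Assumed Alpaca-style template; confirm the exact format on the model card."""
    return f"### Instruction:\n\n{instruction.strip()}\n\n### Response:\n"


def generate(instruction: str, max_new_tokens: int = 128) -> str:
    """Load the model and generate a response (downloads weights on first use)."""
    # Imported lazily so build_prompt works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)
    inputs = tokenizer(build_prompt(instruction), return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


if __name__ == "__main__":
    # Only prints the prompt; call generate(...) yourself to run the model.
    print(build_prompt("Explain what a LoRA adapter is."))
```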

If you are interested in learning more about the Platypus models, all relevant links are provided in the 'Source' section at the end of this article.

Limitations and Future Work

Platypus has several limitations, including a lack of continuous knowledge updates, the potential to generate non-factual or harmful content, and limited proficiency in languages other than English. Users should exercise caution when using Platypus and adhere to guidelines for responsible use.

Future work on Platypus may involve the use of Mixture of Experts (MoEs), the integration of Alpaca- and Orca-style datasets, and an examination of QLoRA within the pipeline. Another approach is to build an array of small, meticulously curated datasets for niche domains. Future work might also look more closely at the nuances of model merging and explore the potential of models like Lazarus, a successful LoRA merge of six models.

Conclusion

Platypus is a powerful tool for refining large language models quickly and cheaply. Its ability to improve the performance of LLMs on specific tasks using only a small amount of data makes it a valuable tool for researchers and practitioners alike.

Source

Project details - https://platypus-llm.github.io/

Research paper - https://platypus-llm.github.io/Platypus.pdf

GitHub repo - https://github.com/arielnlee/Platypus

Models - https://huggingface.co/garage-bAInd

Dataset - https://huggingface.co/datasets/garage-bAInd/Open-Platypus
