Introduction

Imagine having a chatbot that can understand and respond to natural language queries with ease. A chatbot that can learn from massive amounts of conversational data and generate coherent and engaging responses. A chatbot that can be customized for different domains and tasks with minimal effort.

Meet Llama 2, a family of openly released large language models for building and deploying chat applications. Llama 2 comes from Meta AI, the artificial intelligence research division of Meta, and is the result of a collaborative effort by many researchers and engineers. The vision behind Llama 2 is to provide an open foundation for chat models that can be easily fine-tuned for various applications. Releasing the models also enables the community to build on this work and contribute to the responsible development of LLMs.

What is Llama 2?

Llama 2 is a collection of pretrained and fine-tuned large language models (LLMs) developed and released by Meta AI Research. The models range in scale from 7 billion to 70 billion parameters. The fine-tuned LLMs are called Llama 2-Chat and are optimized for dialogue use cases.

Key Features of Llama 2

Some key features of Llama 2 include:

- A collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters.

- Fine-tuned LLMs called Llama 2-Chat optimized for dialogue use cases.

- Based on human evaluations for helpfulness and safety, Llama 2-Chat may be a suitable substitute for closed-source models.

- The accompanying paper describes the approach to fine-tuning and safety improvements of Llama 2-Chat in detail, so that the community can build on this work and contribute to the responsible development of LLMs.

- It is flexible and extensible. Because the model weights and code are released, users can adjust hyperparameters, data sources, loss functions, evaluation metrics, and other aspects of fine-tuning according to their needs. Users can also integrate Llama 2 with other frameworks and libraries.

- It is accessible and interactive. Users can fine-tune Llama 2 on their own data and chat with the Llama 2-Chat models, either on their own machines or through hosted platforms.

The Training Process of Llama 2-Chat

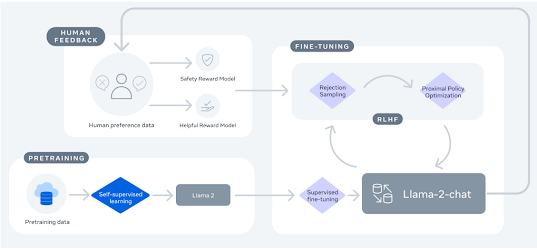

The training process of Llama 2-Chat begins with the pretraining of Llama 2 using publicly available online sources. Pretraining is a process where a model is trained on a large dataset to learn general language representations. This allows the model to learn the underlying structure of the data and develop a good understanding of the language. The pretraining process is crucial for the success of the model as it provides a strong foundation for the subsequent fine-tuning stage.

After pretraining, an initial version of Llama 2-Chat is created through the application of supervised fine-tuning. Supervised fine-tuning is a process where the model is trained on a labeled dataset to improve its performance on a specific task. This involves adjusting the weights of the model to better fit the data and improve its accuracy. The fine-tuning process allows the model to specialize in a particular task and achieve better performance than if it were trained from scratch.

Subsequently, the model is iteratively refined using Reinforcement Learning with Human Feedback (RLHF) methodologies, specifically through rejection sampling and Proximal Policy Optimization (PPO). RLHF is an advanced approach to training AI systems that combines reinforcement learning with human feedback. It is a way to create a more robust learning process by incorporating the wisdom and experience of human trainers in the model training process. PPO is a type of policy gradient method for reinforcement learning, which alternates between sampling data through interaction with the environment and optimizing a surrogate objective function using stochastic gradient ascent.
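The two RLHF ingredients named above can be illustrated with a minimal sketch. It is not Meta's training code: `toy_generate` and `toy_reward` are hypothetical stand-ins for the chat model and the reward model, and `epsilon=0.2` is simply a commonly used clipping value. Rejection sampling here means drawing several candidate responses and keeping the one the reward model scores highest; the PPO function shows the standard clipped surrogate objective for a single sample.

```python
from itertools import cycle
from typing import Callable


def rejection_sample(prompt: str,
                     generate: Callable[[str], str],
                     reward: Callable[[str, str], float],
                     k: int = 4) -> str:
    """Draw k candidate responses and keep the one the reward model scores highest."""
    candidates = [generate(prompt) for _ in range(k)]
    return max(candidates, key=lambda response: reward(prompt, response))


def ppo_clipped_objective(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """Per-sample PPO clipped surrogate: min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    `ratio` is new_policy_prob / old_policy_prob for the sampled action.
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)


# Hypothetical stand-ins (assumptions for illustration only): a "policy" that
# cycles through canned replies and a "reward model" that prefers longer replies.
_canned_replies = cycle(["Yes.", "Yes, and here is why it works.", "No idea."])


def toy_generate(prompt: str) -> str:
    return next(_canned_replies)


def toy_reward(prompt: str, response: str) -> float:
    return float(len(response))


best = rejection_sample("Does this work?", toy_generate, toy_reward, k=3)
print(best)
```

The clipping keeps a single update from moving the policy too far: once the probability ratio leaves the interval [1 - epsilon, 1 + epsilon] in the direction that would increase the objective, the sample stops contributing additional gain.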

Throughout the RLHF stage, new reward modeling data is collected in parallel with model improvements. This is crucial to keep the reward models within distribution: as the chat model changes, its responses drift away from the samples the reward model was trained on, so the reward model is updated iteratively on fresh data collected from the latest model.
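This article does not spell out how a reward model is trained on human feedback. A common choice in RLHF, assumed here purely for illustration, is a pairwise ranking loss: given the scores the reward model assigns to a human-preferred response and to a rejected one, the loss is small when the preferred response scores higher. The function name, the optional `margin` parameter, and the example scores are hypothetical.

```python
import math


def ranking_loss(score_chosen: float, score_rejected: float, margin: float = 0.0) -> float:
    """Pairwise ranking loss: -log(sigmoid(score_chosen - score_rejected - margin)).

    Computed as log(1 + exp(-diff)), branching on the sign of diff so the
    exponential never overflows.
    """
    diff = score_chosen - score_rejected - margin
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


# The reward model agrees with the human label: low loss.
print(ranking_loss(score_chosen=2.0, score_rejected=0.0))
# The reward model disagrees with the human label: high loss.
print(ranking_loss(score_chosen=0.0, score_rejected=2.0))
```

Under this assumption, each new batch of human comparisons collected from the latest chat model becomes additional (chosen, rejected) pairs for this loss, which is what keeps the reward model matched to the responses it is asked to score.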

Performance Evaluation Against Other Models

Llama 2 is not only a powerful chat model, but also a versatile language model that can handle various tasks and challenges. To test its abilities, Llama 2 was compared with other open-source language models on different benchmarks and tasks, such as reasoning, coding, proficiency, and knowledge tests. Llama 2 showed impressive results and surpassed other models on many external benchmarks.

But how good is Llama 2 at generating helpful and safe responses for chatbots? This is an important question, because chatbots need to provide useful and appropriate answers to human users. To answer it, human evaluators rated the responses of Llama 2-Chat and other models for helpfulness and safety. They looked at both single-turn prompts (one user message and one reply) and multi-turn prompts (longer conversations between a user and a chatbot).

The researchers' results indicate that Llama 2-Chat models outperformed other open-source models by a large margin. In particular, the Llama 2-Chat 7B model was preferred over the MPT-7B-chat model on 60% of the prompts. The Llama 2-Chat 34B model was even more impressive, with an overall win rate of more than 75% against the comparably sized Vicuna-33B and Falcon 40B models.

The largest Llama 2-Chat model was also competitive with ChatGPT, one of the most advanced chat models in the world. The Llama 2-Chat 70B model had a win rate of 36% and a tie rate of 31.5% relative to ChatGPT. The Llama 2-Chat 70B model also outperformed the PaLM-bison chat model by a large margin on the researchers' prompt set.
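To make the win-rate and tie-rate figures concrete, here is a small sketch of how such rates can be derived from per-prompt judgments. The function names and the sample judgments are illustrative assumptions, not the paper's evaluation code; only the 36% and 31.5% figures come from the text above.

```python
from collections import Counter
from typing import Dict, List


def outcome_rates(judgments: List[str]) -> Dict[str, float]:
    """Turn per-prompt judgments ("win", "tie", "loss") into percentages."""
    counts = Counter(judgments)
    total = len(judgments)
    return {outcome: 100.0 * counts[outcome] / total for outcome in ("win", "tie", "loss")}


def implied_loss_rate(win_pct: float, tie_pct: float) -> float:
    """Wins, ties, and losses sum to 100%, so the loss rate is what remains."""
    return 100.0 - win_pct - tie_pct


# Hypothetical judgments for four prompts.
print(outcome_rates(["win", "win", "tie", "loss"]))

# Reported figures for Llama 2-Chat 70B versus ChatGPT: 36% wins, 31.5% ties.
print(implied_loss_rate(36.0, 31.5))  # 32.5
```

Read this way, the 70B model won or tied on 67.5% of the prompts and lost on the remaining 32.5%.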

The team conducted many more evaluations of this kind. For the full results and analysis, see the research paper.

How to access and use this model?

Llama 2 is available free of charge for research and commercial use under the Llama 2 Community License. You can access Llama 2 through Meta AI Research’s website, or through platforms such as AWS or Azure, where it can be fine-tuned according to your preferences for training time and inference performance. Instructions on how to use Llama 2 can be found on the Meta AI Research website.

If you prefer to use Llama 2 locally, you can get the code, the model download instructions, and the documentation from the Llama GitHub repository. The repository includes detailed instructions on how to install and use Llama 2 on your own machine. You can also access Llama 2 through the Hugging Face platform, where you can find pretrained models and fine-tune them according to your needs.
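As a rough sketch of the Hugging Face route, the snippet below builds a prompt in the `[INST]` / `<<SYS>>` chat format used by the Llama 2-Chat reference code and generates a reply with the `transformers` library. Several things here are assumptions: the model ID `meta-llama/Llama-2-7b-chat-hf`, that you have accepted the license and been granted access to the gated weights, and that `transformers` and PyTorch are installed with enough memory to load the model. The helper names `build_prompt` and `generate_reply` are hypothetical.

```python
from typing import Optional


def build_prompt(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Wrap a single user turn in the Llama 2-Chat instruction format.

    The tokenizer normally adds the beginning-of-sequence token itself,
    so it is not included here.
    """
    if system_prompt:
        return f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message} [/INST]"
    return f"[INST] {user_message} [/INST]"


def generate_reply(user_message: str,
                   model_id: str = "meta-llama/Llama-2-7b-chat-hf",
                   max_new_tokens: int = 128) -> str:
    """Load the model and generate one reply (downloads the weights on first use)."""
    # Imported lazily so build_prompt can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    inputs = tokenizer(build_prompt(user_message), return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


print(build_prompt("What is Llama 2?", system_prompt="You are a concise assistant."))
```

Calling `generate_reply("What is Llama 2?")` would then return the model's answer as a string; it is left uncalled here because it needs the downloaded weights.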

If you are interested in learning more about the Llama 2 model, all relevant links are provided in the 'Source' section at the end of this article.

Limitations of Llama 2-Chat

- It may not always give accurate or helpful answers, because it does not update its knowledge after training, it may make up things that are not true, and it may give bad advice.

- It may not work well for languages other than English, because it does not have enough data to learn from in other languages.

- It may say things that are harmful, offensive, or biased, because it learns from online data that may contain such content.

- It may be used for bad purposes, such as spreading false information or getting information about dangerous topics like bioterrorism or cybercrime.

- It may be too careful and avoid answering some requests or give too many details about safety.

Conclusion

Llama 2 is a breakthrough in the field of chat models and natural language generation. It offers an open foundation and fine-tuning capabilities for various chat applications. It demonstrates remarkable performance and versatility on various benchmarks and tasks. It is a milestone in the journey of AI towards human-like communication.

Source

Research paper - https://arxiv.org/abs/2307.09288

Research document - https://arxiv.org/pdf/2307.09288.pdf

Website links -

https://ai.meta.com/llama/

https://ai.meta.com/blog/llama-2/

Repository and other links -

https://github.com/facebookresearch/llama/tree/main

https://huggingface.co/meta-llama

https://github.com/facebookresearch/llama/blob/main/LICENSE
