Introduction

To help large language models (LLMs) follow complex instructions, Microsoft (as referenced on the project's GitHub page) has developed a project called WizardLM, built on a technique called Evol-Instruct. The project has successfully trained LLMs on open-domain instruction-following data. Its contributors are students and researchers who continue to improve the project by training at larger scale, adding more training data, and developing more advanced large-model training methods. All links can be found in the sources section at the end of the article.

Key Points of The Project

The project aims to improve the ability of large language models to follow complex instructions. LLMs such as OpenAI's GPT models have demonstrated impressive language generation capabilities but can struggle to follow complex instructions. Creating instruction data with varying levels of complexity is time-consuming and labor-intensive for humans. The proposed solution is to have LLMs themselves generate the instruction data using Evol-Instruct, a method devised by the project's researchers.

Using Evol-Instruct

Evol-Instruct consists of two main components: an instruction evolver and an instruction filter.

- Instruction evolver - It uses LLMs to generate open-domain instructions of varying difficulty. In-depth evolving makes an instruction harder through five types of operations: adding constraints, deepening, concretizing, increasing reasoning steps, and complicating the input. In-breadth evolving creates new instructions to widen topic coverage.

- Instruction filter - It screens out evolved instructions that fail. The remaining instructions are added to an instruction pool, which is then used to fine-tune the foundation LLM.
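The evolve-then-filter loop described above can be sketched roughly as follows. This is a minimal illustration, not the project's implementation: the prompt wording in `build_prompt`, the checks in `keep`, and the `fake_llm` stand-in are all assumptions made so the example runs offline. Only the operation names come from the description above.

```python
import random
from typing import Callable, List, Optional

# Operation names follow the list above. Everything else here is hypothetical.
IN_DEPTH_OPS = [
    "add constraints",
    "deepening",
    "concretizing",
    "increase reasoning steps",
    "complicate input",
]
IN_BREADTH_OP = "in-breadth evolving"


def build_prompt(instruction: str, operation: str) -> str:
    """Build a rewrite request for one operation (placeholder wording)."""
    return f"Rewrite the instruction using '{operation}':\n{instruction}"


def keep(original: str, evolved: str) -> bool:
    """Toy filter: reject empty or unchanged rewrites (assumed heuristics)."""
    cleaned = evolved.strip()
    return bool(cleaned) and cleaned.lower() != original.strip().lower()


def evolve_pool(
    seeds: List[str],
    llm: Callable[[str], str],
    rounds: int = 2,
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Evolve every instruction once per round and collect the survivors."""
    rng = rng or random.Random(0)
    pool = list(seeds)      # all instructions kept so far
    frontier = list(seeds)  # instructions to evolve in the next round
    for _ in range(rounds):
        next_frontier = []
        for instruction in frontier:
            operation = rng.choice(IN_DEPTH_OPS + [IN_BREADTH_OP])
            evolved = llm(build_prompt(instruction, operation))
            if keep(instruction, evolved):
                pool.append(evolved)
                next_frontier.append(evolved)
            else:
                # Failed evolution: retry from the parent in the next round.
                next_frontier.append(instruction)
        frontier = next_frontier
    return pool


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs without a network."""
    instruction = prompt.split("\n", 1)[1]
    return instruction + " Explain each step."


pool = evolve_pool(["Sort a list of numbers."], fake_llm, rounds=2)
print(len(pool))
```

In a real setup, `fake_llm` would be replaced by a call to an actual model, and the resulting pool would be paired with model-generated responses before fine-tuning.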

This approach produces WizardLM, which shows impressive generation capabilities compared with other models such as OpenAI's ChatGPT, particularly on complex instructions.

Benefits of Using WizardLM

The approach can generate a large amount of open-domain instruction data at varying levels of complexity. It relies on the Evol-Instruct method, which evolves existing instructions into new ones with different levels of complexity and diversity. It has shown promising results in improving the performance of LLMs on instruction-following tasks.

WizardLM vs ChatGPT vs other LLMs

WizardLM can generate complex and accurate responses to detailed instruction-based prompts, and ChatGPT does not always provide the same level of detail. Both models generate good responses to simpler prompts, but WizardLM outperforms ChatGPT on complex instruction-based prompts. This is attributed to the Evol-Instruct framework behind WizardLM, which trains it on progressively more complex instructions.

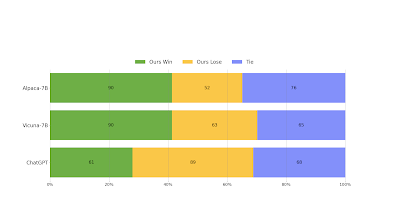

To assess the performance of WizardLM, the team conducted a human evaluation using a diverse set of user-oriented instructions from their own evaluation set. This set, collected by the authors, includes challenging tasks such as code generation, debugging, and many more. Compared with Alpaca and Vicuna-7b, WizardLM achieved significantly better results.

Source: https://github.com/nlpxucan/WizardLM

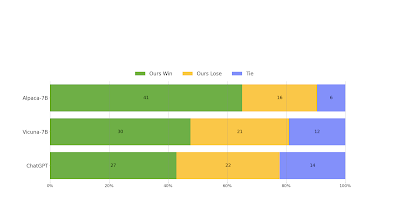

In the high-difficulty section of the test set, where the difficulty level is 8 or higher, WizardLM outperforms ChatGPT with a win rate that is 7.9 percentage points higher (42.9% compared to 35.0%).

Source: https://github.com/nlpxucan/WizardLM
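The gap between the two win rates quoted above is an absolute difference, so it is best read as percentage points rather than as a relative improvement. The short sketch below shows both readings. The helper name `win_rate_gap` is made up for illustration, and the only inputs are the two figures already quoted.

```python
from typing import Tuple


def win_rate_gap(rate_a: float, rate_b: float) -> Tuple[float, float]:
    """Return (absolute gap in percentage points, relative gap in percent)."""
    absolute = rate_a - rate_b
    relative = absolute / rate_b * 100.0
    return absolute, relative


# 42.9% (WizardLM) vs 35.0% (ChatGPT) on the high-difficulty subset.
absolute, relative = win_rate_gap(42.9, 35.0)
print(f"{absolute:.1f} percentage points, {relative:.1f}% relative")
```

The absolute gap matches the quoted 7.9, while the relative gap over ChatGPT's 35.0% win rate is noticeably larger.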

Conclusion

WizardLM is a groundbreaking project that uses a technique called Evol-Instruct to empower large language models to follow complex instructions. However, it is important to note that the resources associated with this project are for academic research purposes only and cannot be used for commercial purposes.

Sources

https://6f8173a3550ed441ab.gradio.live/

https://github.com/nlpxucan/WizardLM

https://arxiv.org/abs/2304.12244
