Introduction

Retrieval-Augmented Generation (RAG) combines retrieval and generation models to produce responses that are grounded in external sources of information. The approach keeps improving as retrieval becomes more accurate and relevant and as models make better use of the retrieved data to produce more precise outputs. These improvements are evident in C4AI Command R+, which is designed to generate responses grounded in supplied document excerpts, with citations that point to the specific source of each piece of information. Multi-step tool use, on the other hand, lets a model perform a series of operations in which the result of one step feeds into the next. This allows models to handle more complex real-world tasks and makes them more versatile. C4AI Command R+ is strong here as well: it has been developed to plan and carry out sequences of actions with various tools, including as a simple agent.

Who Developed C4AI Command R+?

The C4AI Command R+ model was developed by Cohere, a start-up that focuses on large language models for business use, with input from the company's team of specialists. Cohere's aim is to provide language AI technology to developers and large enterprises so that they can build new products and gain commercial benefits. Cohere For AI, the research-oriented division of the company, also played a significant role in the model's creation.

What is C4AI Command R+?

C4AI Command R+ is a recent large language model from Cohere, designed for conversational interaction and long-context tasks. It is most effective in scenarios that combine RAG with multi-step tool use, which makes it a good fit for demanding enterprise applications. It is also referred to simply as 'Command R+'.

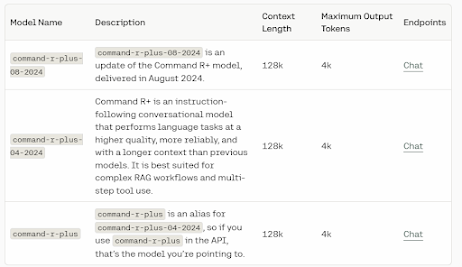

Model Variants

C4AI Command R+ comes in a few key variants. 'command-r-plus-08-2024', released in August 2024, is an updated version of the original 'command-r-plus'. It improves tool-use decision-making, instruction following, structured data analysis, robustness to non-semantic prompt changes, and the ability to decline unanswerable questions. It can also execute RAG workflows without citations and offers significant improvements in throughput and latency. The name 'command-r-plus' serves as an alias for 'command-r-plus-04-2024', the original April 2024 release. The variants differ in performance optimisations, feature updates, and release dates, with 'command-r-plus-08-2024' being the most recent at the time of writing.

Key Features of C4AI Command R+

- Longer Context Length: Supports a context window of up to 128k tokens, which allows long, detailed interactions in which later turns depend on earlier ones.

- Multilingual Capabilities: It is trained on 23 languages and evaluated in 10. Overall usability is optimized for English, French, Spanish, Italian, German, Brazilian Portuguese, Japanese, Korean, Arabic and Simplified Chinese.

- Cross-Lingual Tasks: It can handle tasks such as translation and question answering across languages.

- Retrieval Augmented Generation (RAG): Generates replies that are grounded in the supplied context and include citations.

- Multi-Step Tool Use: Interfaces with external tools to complete a range of tasks over several steps.

- Massive Parameter Size: The model has 104 billion parameters, which helps it perform complex tasks with high accuracy.
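To give a feel for what 104 billion parameters means in practice, the following back-of-the-envelope sketch estimates the memory needed just to hold the weights. The precision options and byte sizes are illustrative assumptions, not figures from Cohere, and the estimate ignores activations, the attention cache, and framework overhead.

```python
# Rough estimate of weight storage for a 104B-parameter model.
# The numeric formats below are assumptions for illustration only.
PARAMS = 104e9  # parameter count stated in the feature list

BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


for name, size in BYTES_PER_PARAM.items():
    print(f"{name:>9}: {weight_memory_gb(PARAMS, size):.0f} GB")
```

Even at 16-bit precision the weights alone exceed the memory of a single typical accelerator, which is why running the model locally usually involves several devices or quantization.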

Capabilities and Unique Use Cases

C4AI Command R+ performs very well at in-depth understanding, paraphrasing, summarization, and accurate question answering. It is aimed at business and industry uses such as customer relations, content generation, and data analysis, where automation can lead to higher productivity. Here are a few use cases:

- Multilingual Legal Contract Analysis: Summarizes and highlights legal contracts written in different languages for global businesses, and identifies potentially risky clauses in those contracts.

- Cross-Lingual Literary Translation: Translates texts from one language into another while preserving the original style and intent of the work, and identifies themes and stylistic devices in literature.

- Real-Time Meeting Summarization: Provides real-time interpretation into preferred languages, takes notes, and produces summaries and actionable follow-up points for international teams.

- Generative Code Documentation: Reads code written in various programming languages and generates documentation in the team's preferred language, including explanations, summaries, and tutorials, to help development teams that work across multiple languages.

- Cross-Cultural Marketing Campaigns: Uses cultural data and language differences to develop marketing content and related material that supports the growth of global organizations.

Architecture and Advancements of C4AI Command R+

C4AI Command R+ is built on an optimized transformer architecture, a type of deep learning model designed for processing sequential data such as text. The architecture is tuned for efficiency and quality on computationally demanding tasks such as language modelling and text generation. For text generation, the model is autoregressive: it predicts the next token based on the tokens that precede it. Because each token depends on the context supplied by the preceding ones, the generated text stays coherent and in context.
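The autoregressive loop can be illustrated with a toy sketch. The probability table below is entirely hypothetical and conditions only on the last token, whereas a real transformer conditions on the whole preceding sequence; the point is only to show the predict, append, repeat pattern with greedy selection.

```python
from typing import Dict, List

# Hypothetical toy "model": maps the last token to next-token probabilities.
NEXT_TOKEN_PROBS: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"model": 0.7, "text": 0.3},
    "model": {"generates": 0.8, "</s>": 0.2},
    "generates": {"text": 0.9, "</s>": 0.1},
    "text": {"</s>": 1.0},
}


def generate(max_tokens: int = 10) -> List[str]:
    """Greedy autoregressive decoding: pick the most likely next token each step."""
    tokens = ["<s>"]  # start-of-sequence marker
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)
        if next_token == "</s>":  # end-of-sequence marker stops generation
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


print(" ".join(generate()))
```

Production systems usually sample from the distribution rather than always taking the most likely token, but the step-by-step dependence on earlier output is the same.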

Key advancements include supervised fine-tuning and preference training, which are applied to align the model's behavior with human preferences for helpfulness and safety. The model is first trained on large volumes of text and code data, and its responses are then adjusted in line with feedback from people. This process helps ensure that C4AI Command R+ gives responses that are accurate, safe, and consistent with human values.

Multi-step tool use is also improved in C4AI Command R+. Unlike single-step tool use, where a model can call external tools in only one round, multi-step tool use lets the model plan a sequence of actions and call as many tools as it needs to complete its task. This greatly extends the model's applicability to complex real-world tasks that involve decision-making, multi-step computation and, where necessary, interaction with external systems. In addition, C4AI Command R+ uses grouped query attention (GQA), which speeds up the attention mechanism during inference while preserving the model's ability to process information and generate responses.
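The control flow of multi-step tool use can be sketched as a simple loop. Everything here is hypothetical: the two tools, the `stub_planner` function standing in for the model, and the data are invented for illustration and do not reflect Cohere's actual tool-use API. What the sketch shows is that each step can depend on the result of the previous one.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tools, invented for this sketch.
def lookup_price(item: str) -> float:
    return {"widget": 2.5, "gadget": 10.0}[item]


def multiply(a: float, b: float) -> float:
    return a * b


TOOLS: Dict[str, Callable[..., float]] = {
    "lookup_price": lookup_price,
    "multiply": multiply,
}

Step = Tuple[str, dict]              # (tool name, keyword arguments)
History = List[Tuple[Step, float]]   # each step paired with its result


def stub_planner(history: History) -> Optional[Step]:
    """Stand-in for the model: choose the next tool call, or None when done."""
    if not history:
        return ("lookup_price", {"item": "widget"})
    if len(history) == 1:
        unit_price = history[0][1]   # result of step 1 feeds step 2
        return ("multiply", {"a": unit_price, "b": 4})
    return None


def run_agent(planner: Callable[[History], Optional[Step]], max_steps: int = 5) -> History:
    """Call tools one step at a time until the planner signals completion."""
    history: History = []
    for _ in range(max_steps):
        step = planner(history)
        if step is None:
            break
        name, args = step
        history.append((step, TOOLS[name](**args)))
    return history


print(run_agent(stub_planner)[-1][1])  # total price of 4 widgets
```

The `max_steps` cap is a design choice in this sketch to keep a misbehaving planner from looping forever.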

Performance Evaluation

Command R+ performs well on RAG tasks compared with other models in the scalable market category. In head-to-head human preference evaluations on writing tasks against baseline models including GPT-4 and Claude 3 Sonnet, Command R+ scored higher on aspects such as text coherence and non-redundancy of citations. The evaluation used a custom test set of 250 diverse documents with complex summarization requests. Command R+ used the RAG API, while the baselines were extensively prompt-engineered, which demonstrates the usefulness of Command R+ in real-world commercial scenarios.

Another major evaluation covered tool use, which is needed to automate business processes. Command R+ was assessed on Microsoft's ToolTalk (Hard) benchmark and on Berkeley's Function Calling Leaderboard (BFCL). The model did well in both single-turn function calling and conversational tool use. On ToolTalk, Command R+ achieved high success rates in recalling tool calls and in avoiding unwanted actions. On BFCL, it achieved good function success rates across the various executable subcategories.

Other assessments covered multilingual support and tokenization efficiency. On both the FLoRES and WMT23 tests, Command R+ performed very well on translation tasks across 10 key business languages. The model's tokenizer was also highly efficient at compressing non-English text, with cost reductions of up to 57% compared with other tokenizers on the market. The efficiency gain was most pronounced for non-Latin-script languages, where the Cohere tokenizer produced fewer tokens for the same text.
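The link between token count and cost is simple arithmetic: when usage is billed per token, producing fewer tokens for the same text lowers the cost by the same proportion. The sketch below uses made-up token counts and a made-up price purely to illustrate how a figure such as 57% would arise; none of the numbers are measurements.

```python
def token_cost(num_tokens: int, price_per_million: float) -> float:
    """Cost of processing num_tokens at a given price per million tokens."""
    return num_tokens / 1_000_000 * price_per_million


def relative_saving(baseline_tokens: int, efficient_tokens: int) -> float:
    """Fraction of cost saved when the same text needs fewer tokens."""
    return 1 - efficient_tokens / baseline_tokens


# Hypothetical counts for the same non-English passage under two tokenizers.
baseline, efficient = 1000, 430
price = 3.0  # hypothetical price per million tokens

print(f"baseline cost:  {token_cost(baseline, price):.6f}")
print(f"efficient cost: {token_cost(efficient, price):.6f}")
print(f"saving: {relative_saving(baseline, efficient):.0%}")
```

Note that the price cancels out of the saving, so the percentage depends only on the ratio of token counts.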

How to Access and Use C4AI Command R+

C4AI Command R+ can be accessed in several ways. It is available on Hugging Face, where you can try it in a web-based environment or download the model weights to run locally. The model can also be used through the Cohere API; setup and usage instructions are given on the Hugging Face model card. The weights of C4AI Command R+ are openly released under a CC-BY-NC license, which permits non-commercial use with proper attribution.
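As a rough illustration of API access, the sketch below wraps a grounded chat request in a small helper. It assumes a client object whose `chat` method accepts `model`, `message`, and `documents` and returns an object with `text` and `citations` attributes, which matches my understanding of the Cohere Python SDK's chat endpoint but should be checked against the current documentation. The `FakeClient` class and its canned reply are invented so that the example runs offline.

```python
from types import SimpleNamespace
from typing import Any, Dict, List

MODEL_NAME = "command-r-plus-08-2024"


def ask_with_documents(client: Any, question: str,
                       documents: List[Dict[str, str]]) -> Dict[str, Any]:
    """Send a grounded chat request and return the reply with its citations."""
    response = client.chat(model=MODEL_NAME, message=question, documents=documents)
    citations = [
        {"text": c.text, "document_ids": list(c.document_ids)}
        for c in (response.citations or [])
    ]
    return {"text": response.text, "citations": citations}


class FakeClient:
    """Offline stand-in (hypothetical) so the sketch runs without an API key."""

    def chat(self, model: str, message: str, documents: List[Dict[str, str]]):
        citation = SimpleNamespace(start=0, end=4, text="128k", document_ids=["doc_0"])
        return SimpleNamespace(text="128k tokens.", citations=[citation])


docs = [{"title": "Model card", "text": "Command R+ supports a 128k-token context."}]
result = ask_with_documents(FakeClient(), "How long is the context window?", docs)
print(result["text"], result["citations"])

# With the real SDK (assumed usage, not run here):
#   import cohere
#   co = cohere.Client("<YOUR_API_KEY>")
#   result = ask_with_documents(co, "How long is the context window?", docs)
```

Passing the client in as an argument keeps the helper easy to test and makes it simple to swap in a different backend.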

Limitations And Future Work

- Language Support Limitation: Some of the more complex functionalities such as Retrieval-Augmented Generation (RAG) and multi-step tool use are currently only supported in English.

- Context Window Issues: Prompts between 112k and 128k tokens can lead to poor-quality output because performance degrades on longer inputs.

Future focus areas include extending language coverage for the advanced features and resolving the context window issues, which would make the model more applicable for non-English-speaking audiences.
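Until the context window issue is addressed, an application can guard against it by checking prompt length before sending a request. The sketch below is one possible approach: the thresholds come from the limitation described above, while the function name and the returned labels are hypothetical. It assumes the token count has already been computed with the model's tokenizer.

```python
SOFT_LIMIT = 112_000  # quality reportedly degrades from here upward
HARD_LIMIT = 128_000  # maximum context length


def check_prompt_length(num_tokens: int) -> str:
    """Classify a prompt by token count: 'ok', 'degraded', or 'too_long'."""
    if num_tokens > HARD_LIMIT:
        return "too_long"
    if num_tokens >= SOFT_LIMIT:
        return "degraded"
    return "ok"


for n in (50_000, 120_000, 130_000):
    print(n, check_prompt_length(n))
```

A caller might respond to 'degraded' by trimming or summarizing older context rather than rejecting the request outright.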

Conclusion

Among the Command family of models, C4AI Command R+ stands out as a solution for businesses that want to apply AI to complex processes. Its ability to handle RAG, multi-step tool use, and multilingual tasks makes it valuable for managing long-context tasks and workflow interactions. This not only promotes productivity but also opens up new opportunities for enterprise applications.

Source

Website: https://docs.cohere.com/docs/command-r-plus

Hugging Face C4AI Command R+ weights: https://huggingface.co/CohereForAI/c4ai-command-r-plus-08-2024

Hugging Face Predecessors: https://huggingface.co/CohereForAI/c4ai-command-r-08-2024

Performance Evaluations: https://cohere.com/blog/command-r-plus-microsoft-azure

Disclaimer - This article is intended purely for informational purposes. It is not sponsored or endorsed by any company or organization, nor does it serve as an advertisement or promotion for any product or service. All information presented is based on publicly available resources and is subject to change. Readers are encouraged to conduct their own research and due diligence.