Introduction

Hugging Face is an American company known for its open-source tools for building machine learning applications. Its flagship offering, the Transformers library, is widely used in natural language processing. Among its features, Transformers Agents stands out: an experimental API that can carry out a wide range of tasks across modalities, including text, images, video, audio, and documents. Because the API is experimental, its interface may change, but it points toward a more flexible way of solving problems with pre-trained models.

What are Transformers Agents?

Transformers Agents is a multimodal agent that provides a natural language API on top of the Transformers library. It has access to models for text, images, video, audio, documents, and more. Users give instructions in natural language, and the agent carries them out by calling different models through tools. Hugging Face released the system under the name "Transformers Agents" as part of the Hugging Face Transformers library.

Features of Transformers Agents

Transformers Agents is multimodal by design: it can work with text, images, video, audio, and documents.

It can generate an image from a prompt and then transform that image when given another prompt.

It can read out the content of an image and return the result as an audio file.

It is versatile and can handle many kinds of NLP tasks across different industries.

How do Transformers Agents work?

Users communicate with the agent in natural language and can ask it to carry out a range of tasks across modalities such as text, images, video, audio, and documents.

Processing natural language requests requires a large language model. The user provides an instruction in natural language, and the agent builds a detailed prompt from that instruction.

As part of prompt creation, the agent defines the tools that the large language model has access to. These tools come from the Hugging Face library and wrap pre-trained models, and the agent uses them to execute the instruction.

From this prompt, the large language model produces a set of steps that carry out the instruction with the available tools. These steps are executed in Python and the results are returned to the user. The generated content relies on generative models such as GPT-3.
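The flow described above can be sketched in a few lines of plain Python. This is only an illustration of the idea, not the library's implementation: the tool names, the `build_prompt` and `fake_llm` helpers, and the canned code returned by `fake_llm` are all hypothetical stand-ins, and no real model is called.

```python
from typing import Callable, Dict, Tuple

# Hypothetical tool registry: name -> (description, callable).
# Real agents wrap pre-trained models; these stand-ins only return strings.
TOOLS: Dict[str, Tuple[str, Callable]] = {
    "image_captioner": ("Returns a text caption for an image.",
                        lambda image: f"caption of {image}"),
    "text_reader": ("Turns text into an audio clip.",
                    lambda text: f"audio<{text}>"),
}


def build_prompt(task: str) -> str:
    """Combine the tool descriptions and the user's task into one prompt."""
    lines = ["You can use the following tools:"]
    for name, (description, _) in TOOLS.items():
        lines.append(f"- {name}: {description}")
    lines.append(f"Task: {task}")
    lines.append("Answer with Python code that uses the tools.")
    return "\n".join(lines)


def fake_llm(prompt: str) -> str:
    """Stand-in for the large language model: always returns the same code."""
    return "caption = image_captioner(image)\nresult = text_reader(caption)"


def run(task: str, **variables):
    """Build the prompt, ask the (fake) model for code, and execute it."""
    code = fake_llm(build_prompt(task))
    # The generated code sees the tools and any variables the user passed in.
    namespace = {name: tool for name, (_, tool) in TOOLS.items()}
    namespace.update(variables)
    exec(code, namespace)
    return namespace["result"]


print(run("Read the content of the image out loud", image="boat.png"))
```

The point of the sketch is the division of labour: the prompt tells the model which tools exist, the model answers with code, and ordinary Python runs that code.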

Main Features of Hugging Face Transformers

Hugging Face Transformers is an open-source library of state-of-the-art machine learning models that works with the PyTorch, TensorFlow, and JAX frameworks.

It provides APIs and tools to download, use, and fine-tune pre-trained models, which saves time and resources compared with training from scratch.

The library supports a wide range of common tasks in natural language processing, computer vision, audio, and multimodal applications.

For natural language processing, it provides tools for text classification, entity recognition, question answering, summarization, translation, multiple choice, and text generation.

For computer vision, it offers image classification, object detection, and segmentation.

For audio, it offers automatic speech recognition and audio classification.

For multimodal applications, it covers table question answering, optical character recognition, information extraction from scanned documents, video classification, and visual question answering.
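Most of the tasks listed above are reachable through the library's `pipeline` function by passing a task identifier. The sketch below maps the article's task names to those identifiers. The `PIPELINE_TASKS` table and the `task_id` and `load_pipeline` helpers are illustrative names, not part of the library, and calling `load_pipeline` assumes `transformers` is installed and will download a default model.

```python
# Article task name -> task identifier accepted by transformers.pipeline().
PIPELINE_TASKS = {
    "text classification": "text-classification",
    "entity recognition": "token-classification",
    "question answering": "question-answering",
    "summarization": "summarization",
    "translation": "translation_en_to_fr",  # translation needs a language pair
    "text generation": "text-generation",
    "image classification": "image-classification",
    "object detection": "object-detection",
    "segmentation": "image-segmentation",
    "automatic speech recognition": "automatic-speech-recognition",
    "audio classification": "audio-classification",
    "table question answering": "table-question-answering",
    "document question answering": "document-question-answering",
    "video classification": "video-classification",
    "visual question answering": "visual-question-answering",
}


def task_id(label: str) -> str:
    """Look up the pipeline identifier for one of the task names above."""
    return PIPELINE_TASKS[label.lower()]


def load_pipeline(label: str):
    """Create a pipeline for the task (imports transformers only when called)."""
    from transformers import pipeline  # heavy import, deferred on purpose
    return pipeline(task_id(label))


# Example (downloads a default model, so it is left commented out):
# classifier = load_pipeline("text classification")
# print(classifier("Transformers Agents are fun to experiment with."))
```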

Pre-Trained Models and Custom Models

A wide range of pre-trained models is available on Hugging Face, and each can be fine-tuned on a specific dataset to achieve high performance on a specific task.

The Transformers library supports more than 100 model architectures, any of which can serve as the starting point for fine-tuning on a specific dataset.

Custom models can also be built with the Projects API and other tools provided by Hugging Face, and tailored to specific needs.

Multi-Modal Models and Model Exporting

Multimodal models can process text, images, video, audio, and other types of data together. These models can be exported to formats such as TorchScript for deployment in production environments.

Running Code with Hugging Face Google Colab Notebook

Hugging Face provides a Google Colab notebook for experimenting with Transformers Agents. The code needs a GPU runtime and Transformers version 4.29 or higher. Google Colab offers a free GPU, which can also be used to fine-tune pre-trained models on your own data.
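Before running the notebook, it can help to confirm that the installed version meets the 4.29 requirement. The following is a minimal sketch using only the standard library. The helper names are hypothetical, and the version parsing is deliberately simple: it reads only the leading numeric components and ignores pre-release suffixes.

```python
from importlib import metadata

MINIMUM = (4, 29)  # version requirement stated in the article


def parse_version(text: str) -> tuple:
    """Keep the leading numeric components: '4.29.0.dev0' -> (4, 29, 0)."""
    parts = []
    for piece in text.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def meets_minimum(version_text: str, minimum: tuple = MINIMUM) -> bool:
    """Compare only as many components as the minimum specifies."""
    return parse_version(version_text)[:len(minimum)] >= minimum


def installed_transformers_ok() -> bool:
    """True if transformers is installed and at least version 4.29."""
    try:
        return meets_minimum(metadata.version("transformers"))
    except metadata.PackageNotFoundError:
        return False


print(installed_transformers_ok())
```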

Some additional libraries are installed automatically when the code runs. Pre-trained models such as BERT can be downloaded and loaded from the Hugging Face Hub, while hosted models such as GPT-3 are reached through an API rather than downloaded. The agent downloads the specific tools it needs from Hugging Face on demand.

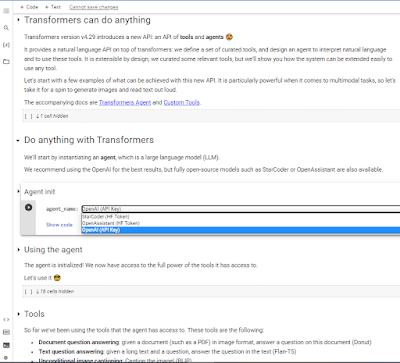

There are three options for the large language model behind Transformers Agents: OpenAI's LLMs, OpenAssistant, or StarCoder.
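A minimal sketch of choosing among the three backends follows. It assumes the `HfAgent` and `OpenAiAgent` classes and the inference endpoints documented for the Transformers 4.29 release; the agents API was experimental and has changed in later releases, so treat these names as version-specific. The `make_agent` helper and the `ENDPOINTS` table are hypothetical conveniences, not part of the library.

```python
# Inference endpoints as given in the Transformers 4.29 documentation
# (assumed here; they may no longer be served).
ENDPOINTS = {
    "starcoder": "https://api-inference.huggingface.co/models/bigcode/starcoder",
    "openassistant": "https://api-inference.huggingface.co/models/"
                     "OpenAssistant/oasst-sft-4-pythia-12b-epoch-3.5",
}


def make_agent(backend: str, api_key: str = ""):
    """Return an agent for 'openai', 'openassistant', or 'starcoder'."""
    backend = backend.lower()
    if backend == "openai":
        # Imported lazily so the helper can be defined without transformers.
        from transformers import OpenAiAgent
        return OpenAiAgent(model="text-davinci-003", api_key=api_key)
    if backend in ENDPOINTS:
        from transformers import HfAgent
        return HfAgent(ENDPOINTS[backend])
    raise ValueError(f"Unknown backend: {backend!r}")


# Example (needs transformers 4.29-era agents and network access):
# agent = make_agent("starcoder")
# agent.run("Draw me a picture of rivers and lakes.")
```

Keeping the imports inside the function means an unsupported backend name fails fast with a clear error, before anything heavy is loaded.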

Currently, two commands are supported: run and chat. 'Run' executes a single instruction without keeping memory and performs better when one instruction involves multiple operations. 'Chat' keeps memory across calls but performs better with one simple instruction at a time.
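The difference in memory between the two commands can be illustrated with a toy class. This is a hypothetical stand-in, not the library's agent: the `ToyAgent` class and its tiny "remember"/"recall" instruction set exist only to show that each `run` call starts from a fresh state while `chat` calls share one.

```python
class ToyAgent:
    """Toy model of run (stateless) versus chat (stateful)."""

    def __init__(self):
        self.chat_state = {}  # survives across chat() calls

    def _execute(self, instruction: str, state: dict) -> str:
        # Stand-in for "the model writes code and the code runs".
        if instruction.startswith("remember "):
            state["note"] = instruction[len("remember "):]
            return "stored"
        if instruction == "recall":
            return state.get("note", "nothing stored")
        return "unknown instruction"

    def run(self, instruction: str) -> str:
        # Every run starts from an empty state.
        return self._execute(instruction, {})

    def chat(self, instruction: str) -> str:
        # Chat reuses the same state, so earlier results stay available.
        return self._execute(instruction, self.chat_state)


agent = ToyAgent()
agent.run("remember boats")
print(agent.run("recall"))   # nothing stored
agent.chat("remember boats")
print(agent.chat("recall"))  # boats
```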

Users can customize both the descriptions of existing tools and the prompts themselves. This gives more control over how the agent behaves in different scenarios.
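To see why editing a description or a prompt changes the agent's behaviour, consider the toy sketch below. The `ToyTool` class, the template placeholders, and `render_prompt` are hypothetical and only mimic the idea that tool descriptions are pasted into the prompt sent to the language model.

```python
from dataclasses import dataclass


@dataclass
class ToyTool:
    name: str
    description: str


# Hypothetical template; <<tools>> and <<task>> are placeholders chosen here.
DEFAULT_TEMPLATE = "Tools:\n<<tools>>\nTask: <<task>>"


def render_prompt(toolbox: dict, task: str, template: str = DEFAULT_TEMPLATE) -> str:
    """Fill the template with the current tool descriptions and the task."""
    tool_lines = "\n".join(
        f"- {tool.name}: {tool.description}" for tool in toolbox.values()
    )
    return template.replace("<<tools>>", tool_lines).replace("<<task>>", task)


toolbox = {
    "image_generator": ToyTool("image_generator", "Creates an image from text."),
}

# Customizing an existing tool's description changes what the model is told.
toolbox["image_generator"].description = "Creates a watercolor image from text."
print(render_prompt(toolbox, "Draw boats in the water"))

# Supplying a different template changes the framing of every request.
custom = "Be concise.\n<<tools>>\nRequest: <<task>>"
print(render_prompt(toolbox, "Draw boats in the water", template=custom))
```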

For example, users can generate an image of boats in the water or write a short story about a detective solving a mystery. Audio and video tasks can be performed by passing variables directly to the agent.

All relevant links are provided in the 'source' section at the end of this article.

Conclusion

Transformers Agents by Hugging Face is a notable addition to the tools for building machine learning applications. The experimental API handles diverse modalities and uses tools from the Hugging Face library, backed by pre-trained models, to execute instructions given in natural language.

The Hugging Face Transformers library has democratized natural language processing by providing immediate access to a large number of state-of-the-art pre-trained models. The Hugging Face Hub makes it easy for programmers to work with these models using the three most popular deep learning libraries: PyTorch, TensorFlow, and JAX.

source

Twitter link: https://twitter.com/huggingface/status/1656334778407297027

Notebook: https://colab.research.google.com/drive/1c7MHD-T1forUPGcC_jlwsIptOzpG3hSj

Transformer: https://huggingface.co/docs/transformers/index

Blog post: https://huggingface.co/docs/transformers/transformers_agents
